Python Multithreading – Threads, Locks, Functions of Multithreading


In this lesson, we’ll learn to implement Python Multithreading with Example. We will use the module ‘threading’ for this.

We will also have a look at the Functions of Python Multithreading, Thread – Local Data, Thread Objects in Python Multithreading and Using locks, conditions, and semaphores in the with-statement in Python Multithreading.

So, let’s start the Python Multithreading Tutorial.

What is Python Multithreading?

The ‘threading’ module provides thread-based parallelism in Python.

It builds higher-level threading interfaces on top of the lower-level _thread module. Where _thread is missing, we can’t use threading.

For such situations, older Python versions offered dummy_threading; that module was deprecated in Python 3.7 and removed in Python 3.9.

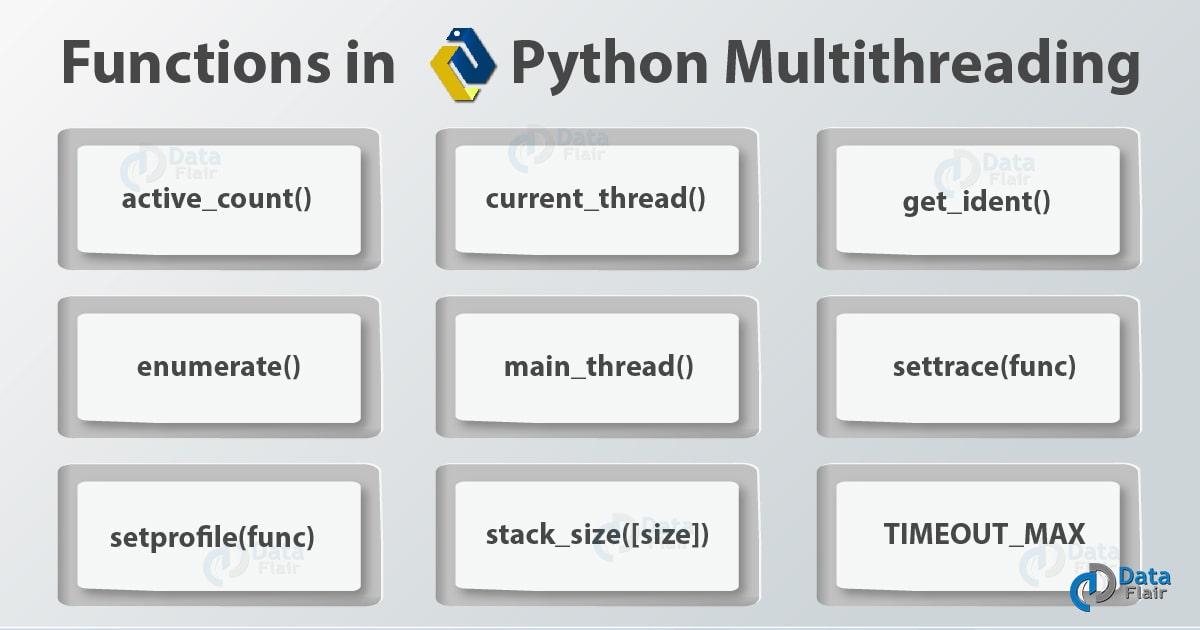

Functions in Python Multithreading

We have the following functions in the Python Multithreading module:

1. active_count()

This returns the number of Thread objects currently alive. It equals the length of the list that enumerate() returns.

>>> threading.active_count()

Output

2. current_thread()

Based on the caller’s thread of control, this returns the current Thread object.

If this thread of control isn’t through ‘threading’, it returns a dummy thread object with limited functionality.

>>> threading.current_thread()

Output

3. get_ident()

get_ident() returns the current thread’s identifier, which is a non-zero integer. Its value has no direct meaning; we can use it, for example, to index a dictionary of thread-specific data.

Python may recycle an identifier when one thread exits and another is created.

>>> threading.get_ident()

Output

4. enumerate()

This returns a list of all Thread objects currently alive. This includes the main thread, daemonic threads, and dummy thread objects created by current_thread().

It excludes terminated threads and threads that haven’t been started yet.

>>> threading.enumerate()

Output

[<_MainThread(MainThread, started 14352)>, <Thread(SockThread, started daemon 9864)>]

5. main_thread()

This function returns the main Thread object. Normally, this is the thread from which the interpreter was started.

>>> threading.main_thread()

Output

<_MainThread(MainThread, started 14352)>
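The functions covered so far can be tried together in a small script. This is only a sketch: the worker function, the two Event objects, and the thread name "demo-worker" are made up for illustration.

```python
import threading

def worker(ready, done):
    # Tell the main code we are running, then wait until told to finish.
    ready.set()
    done.wait()

ready = threading.Event()
done = threading.Event()
t = threading.Thread(target=worker, args=(ready, done), name="demo-worker")
t.start()
ready.wait()  # make sure the worker is alive before inspecting

alive = threading.enumerate()
worker_listed = t in alive                      # started, not yet finished
count_matches = threading.active_count() == len(alive)
ident_matches = threading.get_ident() == threading.current_thread().ident

done.set()
t.join()
worker_listed_after = t in threading.enumerate()  # terminated threads drop out

print(worker_listed, count_matches, ident_matches, worker_listed_after)
```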

6. settrace(func)

settrace() sets a trace function for all threads we start from ‘threading’. func is passed to sys.settrace() for each thread before its run() method is called. Like any trace function, it must accept three arguments: frame, event, and arg.

>>> def sayhi(frame, event, arg):
        print("Hi")

>>> threading.settrace(sayhi)

7. setprofile(func)

This function sets a profile function for all threads we start from ‘threading’. func is passed to sys.setprofile() for each thread before its run() method is called.

>>> threading.setprofile(sayhi)

8. stack_size([size])

stack_size() returns the thread stack size used when creating new threads. The optional size argument sets the stack size for subsequently created threads.

It must be 0 (use the platform or configured default) or a positive integer of at least 32,768 (32 KiB). When not specified, it uses 0.

If the platform doesn’t support changing the thread stack size, it raises a RuntimeError.

When we pass an invalid stack size, it raises a ValueError, and does not modify it.

The minimum stack size it currently supports to guarantee enough stack space for the interpreter itself is 32KiB.

Some platforms may need a minimum stack size of greater than 32KiB. Others may need to allocate in multiples of system memory page size.

>>> threading.stack_size()

Apart from functions, ‘threading’ also defines a constant.

9. TIMEOUT_MAX

This constant holds the maximum allowed value for the timeout parameter of blocking functions like Lock.acquire(), RLock.acquire(), Condition.wait(), and others.

If we specify a timeout greater than this, it raises an OverflowError.

>>> threading.TIMEOUT_MAX

Output

4294967.0

In Java, locks and condition variables are the basic behavior of every object. Whereas in Python, they are individual objects.

Here, the class Thread supports some of the functionality of class Thread in Java.

However, currently, we have no thread groups or priorities, and we cannot destroy, stop, suspend, resume, or interrupt threads.

Where implemented, the static methods of Java’s Thread class map to module-level functions. This way, ‘threading’ is much like Java’s threading model in design.

Next in the Python Multithreading tutorial is Thread – Local Data and Thread Objects.

Python Thread-Local Data

Thread-local data is data whose values are specific to each thread. To manage such data, we create an instance of local (or of a subclass) and store attributes on it.

>>> mydata=threading.local()
>>> mydata.x=7

These instance values differ for each thread. We have the following class denoting thread-local data:

class threading.local
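To see that attribute values really are per-thread, here is a minimal sketch. The worker function and the seen dictionary are hypothetical names used only for this demonstration.

```python
import threading

mydata = threading.local()
mydata.x = 7  # set only in the thread running this line

seen = {}

def worker(value):
    # A new thread starts with no attributes on mydata.
    seen[(value, "had_x_before")] = hasattr(mydata, "x")
    mydata.x = value
    seen[(value, "x_after")] = mydata.x

threads = [threading.Thread(target=worker, args=(n,)) for n in (1, 2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(mydata.x)  # unchanged by the workers
print(seen)
```

Each worker sets its own x, and none of those assignments is visible to the thread that created mydata.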

Next in Python Multithreading Tutorial is Thread Objects.

Python Thread Objects

The Thread class that we mentioned earlier in this blog denotes an activity running in a separate thread of control.

We can represent this activity either by passing a callable object to the constructor, or by overriding the method run() in a subclass.

You must make sure to not override other methods in a subclass, except for the constructor. In short, only override a class’ __init__() and run() methods.

Once the interpreter creates a thread object, we must start its activity by calling its start() method. This will invoke its run() method in a separate thread of control.

Once this happens, we consider the thread to be ‘alive’. When run() terminates, either normally or by raising an exception we did not handle, it is no longer alive.

To test whether a thread is alive, we may use the method is_alive().

A thread may call another’s join() method. This will block the calling thread until the other terminates.

Threads have names, and we can pass these names to the constructor, and even read or modify them.

We can flag a thread as a ‘daemon thread’. This means that the whole program exits when only the daemon threads remain.

The initial value is inherited from the creating thread. We can set this flag via the property ‘daemon’, or via the constructor argument ‘daemon’.

Daemon threads are stopped abruptly at shutdown, so they may not properly release the resources they hold. These resources may include open files, database transactions, and others.

To stop our threads gracefully, we must make them non-daemonic and use a suitable signaling mechanism, like an Event.
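One way to stop a thread gracefully is sketched below, assuming a worker that polls an Event. The names stop_requested, ticks, and worker are illustrative, and the "cleaned up" entry merely stands in for real resource cleanup.

```python
import threading

stop_requested = threading.Event()
ticks = []

def worker():
    # Loop until asked to stop; wait() doubles as an interruptible sleep.
    while not stop_requested.is_set():
        ticks.append("tick")
        stop_requested.wait(0.01)
    ticks.append("cleaned up")  # stands in for closing files, connections, ...

t = threading.Thread(target=worker, daemon=False)
t.start()

stop_requested.set()  # ask the worker to finish
t.join()              # wait for it to run its cleanup
print(ticks[-1])
```

Because the thread is non-daemonic and exits its loop on its own, the cleanup step always runs before the program ends.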

The ‘main thread’ object pertains to the initial thread of control in our program; it isn’t a daemon.

Finally, it is possible that the interpreter creates ‘dummy thread objects’. These correspond to ‘alien threads’: threads of control started outside ‘threading’, for example, directly from C code.

Such objects have limited functionality, and are always considered alive and daemonic. We cannot join() them. They are also never deleted, since it is impossible to detect when alien threads terminate.

This is the class:

class threading.Thread(group=None, target=None, name=None, args=(), kwargs={}, *, daemon=None)

Take note:

- Always call the constructor with keyword arguments. It has the following arguments:

- group must be None. Python reserves this for future extension when we implement a ThreadGroup class.

- target is a callable object that run() will invoke. The default for this is None, which means it calls nothing.

- name is the name of the thread. By default, Python constructs a unique name of the form “Thread-N”, where N is a small decimal number.

- args is the argument tuple for the target invocation. The default for this is ().

- kwargs is a dictionary of keyword arguments for the target invocation. The default for this is {}.

- daemon decides whether the thread is daemonic. When None, it inherits the daemonic property from the current thread. The default for this is None.

- If the subclass overrides the constructor, make sure it invokes the base class constructor (Thread.__init__()) before doing anything else to the thread.
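The two ways of defining a thread's activity can be sketched side by side. The greet function and the Greeter class below are hypothetical examples, not part of the module.

```python
import threading

results = []

def greet(name, punctuation="!"):
    results.append(f"Hello, {name}{punctuation}")

# 1. Pass a callable as target, with args and kwargs given by keyword.
t1 = threading.Thread(target=greet, args=("target",), kwargs={"punctuation": "?"})

# 2. Subclass Thread, overriding only __init__() and run().
class Greeter(threading.Thread):
    def __init__(self, who):
        super().__init__(name="greeter")  # base class constructor first
        self.who = who

    def run(self):
        greet(self.who)

t2 = Greeter("subclass")
for t in (t1, t2):
    t.start()
for t in (t1, t2):
    t.join()

print(sorted(results))
```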

Thread has the following methods:

1. start()

This starts thread activity. We can call it at most once per thread object; if we call it again, it raises a RuntimeError.

It arranges for the object’s run() method to be invoked in a separate thread of control.

>>> threading.Thread.start(threading.current_thread())

Output

Traceback (most recent call last):
  File "<pyshell#135>", line 1, in <module>
    threading.Thread.start(threading.current_thread())
RuntimeError: threads can only be started once

2. run()

This method represents the thread’s activity. The standard run() invokes the callable object we passed to the object’s constructor as the target argument, if any, with the sequential and keyword arguments taken from args and kwargs, respectively.

We can override run() in a subclass.

3. join(timeout=None)

join() waits until the thread terminates.

It blocks the calling thread until the thread on which we call join() terminates, either normally or via an exception we did not handle, or until the optional timeout occurs.

When you do provide a timeout (other than None), make sure it is a floating-point number giving the timeout in seconds or fractions of a second.

So, what is the return value? Well, join() always returns None. Hence, you’ll need to call is_alive() after calling join() to determine if a timeout happened.

If we find out that it is indeed still alive, then we infer that the join() call timed out.

However, if timeout is None, or if we did not pass it, the operation blocks until the thread terminates. We can join() a thread many times.

Finally, join() raises a RuntimeError if we try to join the current thread, because that would cause a deadlock.

Trying to join() a thread before we start it also raises a RuntimeError.

>>> threading.Thread.join(threading.current_thread())

Output

Traceback (most recent call last):
  File "<pyshell#138>", line 1, in <module>
    threading.Thread.join(threading.current_thread())
RuntimeError: cannot join current thread
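Since join() always returns None, detecting a timeout takes a follow-up call to is_alive(). A minimal sketch, assuming a thread that simply blocks on a hypothetical Event named release:

```python
import threading

release = threading.Event()
t = threading.Thread(target=release.wait)  # blocks until release is set
t.start()

t.join(timeout=0.05)        # returns None whether or not it timed out
timed_out = t.is_alive()    # still alive, so the join() timed out

release.set()
t.join()                    # no timeout: blocks until the thread finishes
finished = not t.is_alive()

print(timed_out, finished)
```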

4. name

This is a string we use for identification only; it has no semantics. We can give the same name to multiple threads. The constructor sets the initial name.

>>> t=threading.Thread()
>>> t.name='First'

5. getName() and setName()

These are old getter and setter APIs for name, deprecated since Python 3.10. Use the name property directly instead.

6. ident

If we started the thread, this returns its identifier. Otherwise, it returns None. Note that it is a non-zero integer, like the value get_ident() returns.

Python may recycle identifiers when one thread exits and another is created. The identifier remains available even after the thread has exited.

7. is_alive()

This returns whether the thread is alive. is_alive() returns True from just before run() starts until just after it terminates.

>>> threading.Thread.is_alive(threading.current_thread())

Output

True

8. daemon

daemon is a Boolean value that tells us whether the thread is a daemon thread (True) or not (False). We must set it before we call start().

Otherwise, it raises a RuntimeError. Its initial value is inherited from the creating thread. The main thread is not a daemon; hence, all threads created in the main thread default to daemon = False.

When only daemon threads remain, the whole program exits.

9. isDaemon() and setDaemon()

These are old getter and setter APIs for daemon, deprecated since Python 3.10. Use the daemon property directly instead. With this, let’s move to our next topic in the Python Multithreading tutorial.

Python Lock Objects

A primitive lock is a synchronization primitive that is not owned by a particular thread when locked.

This is the lowest-level synchronization primitive we currently have in Python, and we implement it using the extension module _thread.

Such a lock can be in one of two states: ‘locked’ and ‘unlocked’. When we create a lock, it is in the ‘unlocked’ state. It also has two methods- acquire() and release().

When the state is ‘unlocked’, acquire() changes it to ‘locked’ and returns immediately. When the state is ‘locked’, acquire() blocks until a call to release() in another thread changes it to ‘unlocked’; then acquire() resets it to ‘locked’ and returns.

If you try to release a lock that is already unlocked, it raises a RuntimeError.

These locks also support the context management protocol.

When acquire() blocks more than one thread, only one thread continues when release() resets the state to ‘unlocked’. Which one, you ask? Well, we can’t say.

Also, all methods execute atomically.

This is the class:

class threading.Lock

This class implements primitive lock objects. Once a thread acquires a lock, further attempts to acquire it block until it is released.

Only after it releases, does any other thread have a chance in acquiring it. Any thread may release a lock.

1. acquire(blocking=True, timeout=-1)

This method acquires a lock, blocking or non-blocking. When blocking=True (the default), it blocks until the lock is unlocked. Then, it changes its state to ‘locked’, and returns True.

When blocking is False, it does not block. If a call with blocking=True would block, it returns False immediately. Otherwise, it sets the lock to ‘locked’ and returns True.

timeout is a floating-point argument. When it has a positive value, the call blocks for at most timeout seconds while the lock cannot be acquired.

When it is -1 (the default), it denotes an unbounded wait.

When blocking is False, we cannot specify timeout.

The method returns True if it acquires the lock, and False if not, for example when timeout expires.

2. release()

This method releases a lock. You can call it from any thread. This means that any thread can release a lock, no matter which thread has acquired it.

When ‘locked’, release() resets it to ‘unlocked’, and returns. If other threads wait for it to unlock, only one gets to continue once it unlocks.

When we call release() on an ‘unlocked’ lock, it raises a RuntimeError.

release() doesn’t return any value.
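The sketch below shows a Lock protecting a shared counter, followed by non-blocking and timed acquire() calls on a lock that is already held. The totals dictionary and the add_many function are illustrative names, not part of the module.

```python
import threading

totals = {"count": 0}
counter_lock = threading.Lock()

def add_many(n):
    for _ in range(n):
        with counter_lock:          # acquire() on entry, release() on exit
            totals["count"] += 1

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Non-blocking and timed acquisition while the lock is already held.
counter_lock.acquire()
got_nonblocking = counter_lock.acquire(blocking=False)  # returns False at once
got_timed = counter_lock.acquire(timeout=0.05)          # returns False after the timeout
counter_lock.release()

# Releasing an unlocked lock raises RuntimeError.
try:
    counter_lock.release()
    double_release_failed = False
except RuntimeError:
    double_release_failed = True

print(totals["count"], got_nonblocking, got_timed, double_release_failed)
```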


Python RLock Objects

RLock is an important topic when you learn Python Multithreading. An RLock is a reentrant lock: a synchronization primitive that the same thread can acquire multiple times.

Internally, it uses the concepts of ‘owning thread’ and ‘recursion level’, in addition to the locked/unlocked state of primitive locks. When locked, an RLock belongs to a certain thread; when unlocked, no thread owns it.

Now, how does this work? To lock, a thread calls acquire(), which returns once the thread owns the lock. To unlock it, the thread calls release().

It is also possible to nest acquire()/release() pairs. Only the outermost release() resets the lock to the ‘unlocked’ state and lets another thread blocked in acquire() continue.

Reentrant locks also support the context management protocol.

This is the class:

class threading.RLock

RLock implements reentrant lock objects. Such a lock must be released by the thread that acquired it. Once a thread has acquired it, the same thread may acquire it again without blocking.

However, it must release it once each time it acquires it.

It has two methods:

1. acquire(blocking=True, timeout=-1)

acquire() lets us acquire the lock, blocking or non-blocking. When called without arguments: if the thread already owns the lock, this method increments the recursion level by one, and returns immediately.

If another thread owns the lock, it blocks until the lock is unlocked.

Once the lock is unlocked (not owned by any thread), acquire() takes ownership, sets the recursion level to 1, and returns.

If more than one thread is blocked waiting for the lock, only one at a time will get ownership.

When we set blocking to True, it does the same as a call without arguments, and returns True.

When blocking is False, however, it does not block. If a call without arguments would block, it returns False immediately.

Otherwise, it does what it does for a call without arguments, and then returns True.

When we call acquire() with a positive floating-point timeout, it blocks for at most timeout seconds while the lock cannot be acquired.

It returns True if the lock has been acquired, and False if the timeout has elapsed.

2. release()

This method releases a lock, decrementing the recursion level. If, after the decrement, the level is 0, it resets the lock to the ‘unlocked’ state.

This means no thread owns it. If other threads are blocked waiting for the lock, exactly one of them is allowed to continue. If the level is still non-zero after the decrement, the lock stays in the ‘locked’ state, and still belongs to the calling thread.

You should only call release() when the calling thread actually owns the lock. If the lock is ‘unlocked’, this raises a RuntimeError.

release() returns no value.
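Reentrancy is easiest to see when one function that holds the lock calls another that needs it too. In this sketch, outer, inner, log, and other_result are hypothetical names.

```python
import threading

rlock = threading.RLock()
log = []

def inner():
    with rlock:          # recursion level 2: same thread, no blocking
        log.append("inner")

def outer():
    with rlock:          # recursion level 1
        log.append("outer")
        inner()

outer()

# Releasing an RLock the calling thread does not own raises RuntimeError.
try:
    rlock.release()
except RuntimeError:
    log.append("RuntimeError")

# While this thread holds the lock, another thread cannot take it.
rlock.acquire()
other_result = []
t = threading.Thread(
    target=lambda: other_result.append(rlock.acquire(blocking=False))
)
t.start()
t.join()
rlock.release()

print(log, other_result)
```

With a plain Lock in place of the RLock, the call to inner() would block forever, because the lock would already be held.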

Now let’s come to Condition Objects in Python Multithreading.

Python Condition Objects

A condition variable is always associated with a lock; we can pass one in, or one is created by default. Passing a lock in is useful when several condition variables must share the same lock.

The lock is part of the condition object, so we don’t have to track it separately.

A condition variable follows the context management protocol: using the with-statement acquires the associated lock for the duration of the enclosed block. acquire() and release() also call the corresponding methods of the associated lock.

Other methods must be called with the associated lock held. wait() releases the lock, and then blocks until another thread wakes it up with a call to notify() or notify_all().

After this, wait() acquires the lock again, and then returns. We can also specify a timeout.

While notify() awakens one waiting thread, if any, notify_all() awakens all threads waiting for the condition variable.

Note that these two methods do not release the lock. So, the threads awakened do not return from wait() immediately.

They return only when the thread that called notify() or notify_all() gives up ownership of the lock.

This is the class:

class threading.Condition(lock=None)

Condition implements condition variable objects. A condition variable lets any number of threads wait until another thread notifies them.

If we pass lock and it is not None, it must be a Lock or RLock object, and it serves as the underlying lock. Otherwise, a new RLock object is created and used as the underlying lock.

It has the following methods:

1. acquire(*args)

This acquires the underlying lock. It calls the corresponding method on it, and returns what that method returns.

2. release()

This releases the underlying lock. It calls the corresponding method on it, and returns nothing.

3. wait(timeout=None)

This method waits until a timeout happens or until someone notifies it. If at the time of calling wait(), the calling thread doesn’t own the lock, this raises a RuntimeError.

wait() releases the underlying lock, then blocks until a notify()/notify_all() call for the same condition variable in another thread wakes it up, or until timeout happens.

And once this happens, it acquires the lock again, and then returns.

When we do pass timeout, and it isn’t None, make sure it’s a floating-point number denoting a timeout for the operation in seconds or fractions.

If the underlying lock is an RLock, wait() doesn’t release it through its release() method, because that doesn’t necessarily unlock it if it was acquired multiple times recursively.

Instead, wait() uses an internal interface of the RLock class, which really unlocks it even when it was recursively acquired many times.

Then, it uses another internal interface to restore the recursion level when the lock is acquired again.

wait() returns False if timeout expires. Otherwise, it returns True.

4. wait_for(predicate, timeout=None)

This method waits until a condition becomes True. The predicate is a callable with a Boolean result. We may provide a timeout to specify a maximum time to wait.

wait_for() is a utility method that may call wait() repeatedly until the predicate is satisfied, or until a timeout happens.

It returns the predicate’s last return value, which evaluates to False if the method timed out.

With this method, the same rules apply as for wait(). The lock must be held when we call it, and it is re-acquired on return. The predicate is evaluated with the lock held.

5. notify(n=1)

By default, notify() wakes up one thread waiting on this condition, if there is any. If the calling thread doesn’t own the lock when we call it, this raises a RuntimeError.

It wakes up at most n of the threads that wait for the condition variable. If no threads wait, it is a no-op.

If at least n threads wait, the current implementation wakes up exactly n threads. But we can’t rely on this behavior.

A future, optimized implementation may occasionally wake up more than n threads.

6. notify_all()

This wakes up all threads that wait on this condition. It acts like notify(), except that it wakes all waiting threads instead of one.

If the calling thread doesn’t own the lock when we call it, this raises a RuntimeError.
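A small producer/consumer pair shows how wait_for() and notify() fit together. This is a sketch under assumptions: the items queue, the consumed list, and both functions are made up for the example.

```python
import threading
from collections import deque

items = deque()
cond = threading.Condition()
consumed = []

def consumer():
    with cond:
        # wait_for() re-checks the predicate each time the thread is woken.
        cond.wait_for(lambda: len(items) > 0)
        consumed.append(items.popleft())

def producer(value):
    with cond:
        items.append(value)
        cond.notify()  # the waiter resumes once this block releases the lock

c = threading.Thread(target=consumer)
p = threading.Thread(target=producer, args=("job-1",))
c.start()
p.start()
c.join()
p.join()

# notify() without holding the lock raises RuntimeError.
try:
    cond.notify()
    unlocked_notify_failed = False
except RuntimeError:
    unlocked_notify_failed = True

# A predicate that never holds makes wait_for() time out and return False.
with cond:
    timed_out_result = cond.wait_for(lambda: False, timeout=0.05)

print(consumed, unlocked_notify_failed, timed_out_result)
```

The consumer works whether it starts before or after the producer, because the predicate is checked before any waiting happens.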

Python Semaphore Objects

Invented by the early Dutch computer scientist Edsger W. Dijkstra, the semaphore is one of the oldest synchronization primitives. Instead of acquire() and release(), he used the names P() and V().

What is a semaphore? It is a primitive that manages an internal counter. Each call to acquire() decrements it, and each call to release() increments it.

The counter never goes below zero. When it is 0, acquire() blocks, and waits until a thread makes a call to release().

Semaphores in Python Multithreading support the context management protocol.

This is the class we have:

class threading.Semaphore(value=1)

This class implements semaphore objects.

A semaphore manages an atomic counter representing the number of release() calls minus the number of acquire() calls, plus an initial value.

The acquire() method blocks if necessary until it can return without making the counter negative.

The optional argument value gives the initial value for the internal counter; it defaults to 1. If we pass a value less than 0, this raises a ValueError.

It has the following methods:

1. acquire(blocking=True, timeout=None)

This acquires a semaphore.

When we call it without arguments, the following cases may occur:

- If, on entry, the internal counter is greater than zero, it decrements it by one, and returns True immediately.

- If, on entry, the internal counter is zero, it blocks until a call to release() wakes it up. Once awoken (and the counter is greater than 0), it decrements the counter by 1, and returns True. Each call to release() wakes exactly one thread. The order in which threads are awoken should not be relied on.

When we call it with blocking set to False, it doesn’t block. If a call without arguments would block, it returns False immediately. Otherwise, it does the same as when called without arguments, and returns True.

When we pass a timeout other than None, it blocks for at most timeout seconds. If acquire() doesn’t complete successfully in that interval, it returns False. Otherwise, it returns True.

2. release()

This method releases a semaphore, incrementing the internal counter by 1. If the counter was 0 on entry and another thread is waiting for it to become larger than zero again, it wakes that thread up.

We also have bounded semaphores:

class threading.BoundedSemaphore(value=1)

This class implements bounded semaphore objects. A bounded semaphore checks that its current value does not exceed its initial value.

If it does, this raises a ValueError. Mostly, semaphores guard resources with limited capacity, for example, a database server. Where the resource size is fixed, use a bounded semaphore.

If the semaphore is released too many times, it is a sign of a bug in your code. If not given, value defaults to 1.

Let’s take an example. The main thread initializes the semaphore before spawning any worker threads:

>>> maxconnections=5
>>> pool_sema=threading.BoundedSemaphore(value=maxconnections)

Once spawned, the worker threads acquire and release the semaphore whenever they must connect to the server:

>>> with pool_sema:
        conn=connectdb()
        try:
            pass #use connection
        finally:
            conn.close()

Using a bounded semaphore lessens the chance that a programming error, which causes the semaphore to be released more often than it is acquired, goes undetected.
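Here is a runnable sketch of the same idea without a real database. The stats dictionary, the use_connection function, and the short sleep that stands in for work on a connection are all assumptions made for the demonstration.

```python
import threading
import time

max_connections = 2
pool_sema = threading.BoundedSemaphore(value=max_connections)

stats = {"active": 0, "peak": 0}
stats_lock = threading.Lock()

def use_connection():
    with pool_sema:                      # at most max_connections threads inside
        with stats_lock:
            stats["active"] += 1
            stats["peak"] = max(stats["peak"], stats["active"])
        time.sleep(0.01)                 # stands in for work on the connection
        with stats_lock:
            stats["active"] -= 1

workers = [threading.Thread(target=use_connection) for _ in range(6)]
for w in workers:
    w.start()
for w in workers:
    w.join()

# One release() too many is reported instead of silently raising the limit.
try:
    pool_sema.release()
    over_release_detected = False
except ValueError:
    over_release_detected = True

print(stats["peak"] <= max_connections, over_release_detected)
```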

Python Event Objects

An event is one of the simplest tools for communication in Python Multithreading: one thread signals an event, and other threads wait for it.

An event object manages an internal flag. The methods set() and clear() set it to True and reset it to False, respectively. wait() blocks until the flag is True.

This is the class:

class threading.Event

This class implements event objects. An event manages a flag that set() sets to True and clear() resets to False.

Initially, the flag is False. wait() blocks until it becomes True.

It has the following methods:

1. is_set()

Returns True if and only if the internal flag is True.

2. set()

This method sets the internal flag to True, and wakes all threads waiting for it to become True. Once the flag is True, threads that call wait() do not block at all.

3. clear()

This resets the internal flag to False. Subsequently, threads that call wait() block until somebody calls set() to set the internal flag to True again.

4. wait(timeout=None)

This method blocks until the internal flag is True. If the flag is True on entry, it returns immediately. Otherwise, it blocks until another thread calls set() to set the flag to True, or until the optional timeout happens.

When timeout is given and isn’t None, make sure it’s a floating-point number denoting a timeout for the operation in seconds or fractions.

It returns True only if the internal flag is True, either before the call to wait() or after the wait starts. So wait() always returns True, except when a timeout is given and the operation times out; then it returns False.
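The four methods can be exercised in a few lines. In this sketch, the ready event, the order list, and the waiter function are illustrative names.

```python
import threading

ready = threading.Event()
order = []

def waiter():
    ready.wait()                      # blocks until the flag becomes True
    order.append("waiter resumed")

t = threading.Thread(target=waiter)
t.start()

timed_out = ready.wait(timeout=0.05)  # flag still False: returns False
order.append("main sets the flag")
ready.set()                           # wakes the waiter
t.join()

after_set = ready.wait(timeout=0)     # flag is True: returns True at once
ready.clear()
after_clear = ready.is_set()          # back to False

print(order, timed_out, after_set, after_clear)
```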

Python Timer Objects

Timer denotes an action that should run only after a given amount of time has passed.

It is a subclass of Thread, and as such also serves as an example of creating custom threads.

Like threads, timers are started by calling their start() method. We can stop a timer before its action has begun by calling cancel() on it. The interval a timer waits before executing its action may not be exactly the interval we specify.

Take an example:

>>> def hello():
        print("Hello")

>>> t=threading.Timer(30.0,hello)

>>> t.start() #after 30 seconds, "Hello" is printed

This is the class:

class threading.Timer(interval, function, args=None, kwargs=None)

It creates a timer that runs a function (with arguments args and kwargs) after interval seconds. When args is None (the default), it uses an empty list. When kwargs is None (the default), it uses an empty dict.

It has one method:

1. cancel()

This stops the timer and cancels the execution of its action. This only works if the timer is still in its waiting stage.
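A short sketch of one timer that fires and one that is cancelled while waiting. The hello function, its name argument, and the fired list are hypothetical, and the short interval is chosen only to keep the demo quick.

```python
import threading

fired = []

def hello(name):
    fired.append(f"Hello, {name}")

# Runs hello("timer") roughly 0.05 seconds after start().
t1 = threading.Timer(0.05, hello, args=("timer",))
t1.start()

# Cancelled while still waiting, so its function never runs.
t2 = threading.Timer(30.0, hello, args=("never",))
t2.start()
t2.cancel()

t1.join()
t2.join()  # returns promptly because the timer was cancelled
print(fired)
```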

Now, the last of the objects in Python Multithreading: Barrier Objects.

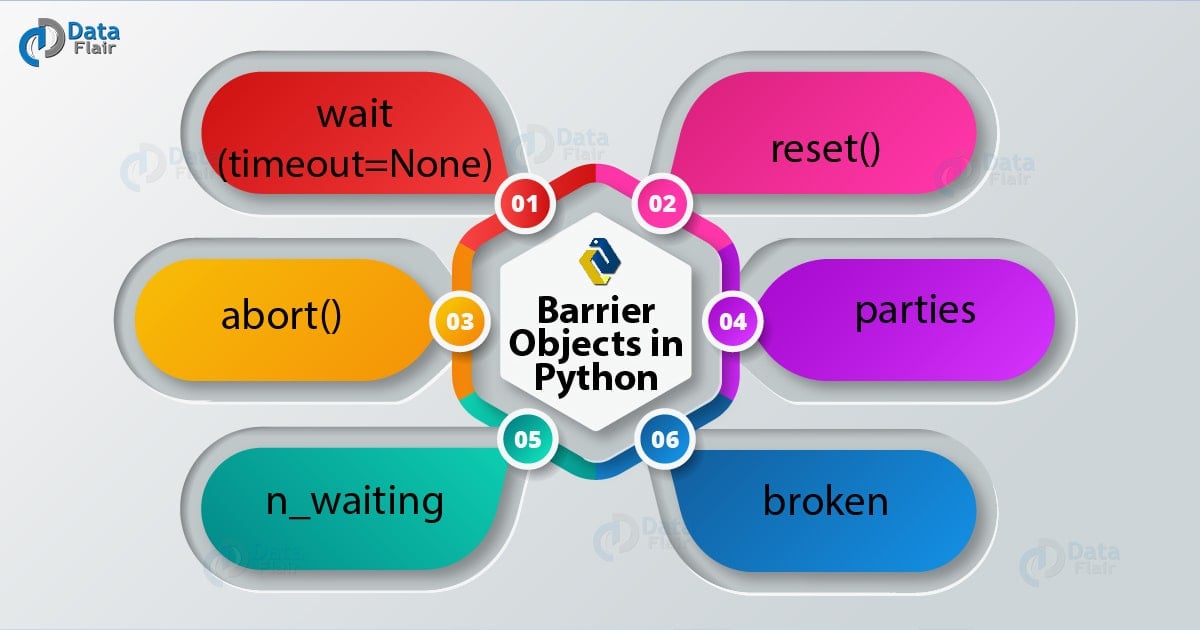

Barrier Objects

Barrier is a simple synchronization primitive for use by a fixed number of threads that must wait for each other.

Each thread tries to pass the barrier by calling wait(), which blocks until all of the threads have made their wait() calls. Then, the threads are released simultaneously.

You can reuse a barrier any number of times for the same number of threads. Let’s take an example.

A way to synchronize a client and server thread is:

>>> b=threading.Barrier(2,timeout=5)

>>> def server():
        start_server()
        b.wait()
        while True:
            connection = accept_connection()
            process_server_connection(connection)

>>> def client():
        b.wait()
        while True:
            connection = make_connection()
            process_client_connection(connection)

This is the class:

class threading.Barrier(parties, action=None, timeout=None)

Barrier creates a barrier object for parties number of threads. When we pass action, it is a callable that one of the threads calls when they are released.

Finally, timeout is the default timeout value used when none is specified for wait().

It has the following methods:

1. wait(timeout=None)

wait() passes the barrier. Once all the threads party to the barrier have called wait(), they are all released together.

If we pass a value for timeout, it takes precedence over any value provided to the class constructor.

It returns an integer in the range 0 to parties-1, different for each thread. You can use this to choose a thread to do special housekeeping.

Take an example:

>>> i=barrier.wait()

>>> if i==0:
        #Only one thread must print this
        print("passed the barrier")

If we provided an action to the constructor, one of the threads calls it before they are released. If this call raises an error, the barrier is put into a ‘broken’ state.

The same happens if the call to wait() times out.

Finally, wait() may raise a BrokenBarrierError if the barrier breaks or resets as a thread waits.

2. reset()

This function resets the barrier to its default, empty state. Any threads waiting on it receive a BrokenBarrierError.

reset() may need external synchronization if other threads with unknown states exist. If a barrier is broken, it may be better to just leave it and create a new one.

3. abort()

abort() puts a barrier into a ‘broken’ state. Consequently, active/future calls to wait() fail with a BrokenBarrierError.

To avoid deadlocking an application, we may need to abort it. This is one use-case.

Try to create the barrier with a sensible value for timeout so it automatically guards against a thread going haywire.

4. parties

This returns the number of threads required to pass the barrier.

5. n_waiting

This returns the number of threads that currently wait in the barrier.

6. broken

This is a Boolean value that is True if the barrier is in a ‘broken’ state.

Check out this exception that Barrier may raise:

exception threading.BrokenBarrierError

This is a subclass of RuntimeError, and it is raised when a Barrier object is reset or broken.
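The following sketch puts the pieces together: an action, the per-thread return value of wait(), and a timeout that breaks the barrier. The announce and worker functions and the lists they fill are illustrative names.

```python
import threading

parties = 3
released = []
indices = []

def announce():
    # Called by exactly one thread once all parties have arrived.
    released.append("all arrived")

barrier = threading.Barrier(parties, action=announce, timeout=5)

def worker():
    i = barrier.wait()   # an integer from 0 to parties - 1, unique per thread
    indices.append(i)

threads = [threading.Thread(target=worker) for _ in range(parties)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# A lone wait() on the 3-party barrier times out, which breaks the barrier.
broke = False
try:
    barrier.wait(timeout=0.05)
except threading.BrokenBarrierError:
    broke = barrier.broken

print(sorted(indices), released, broke)
```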

Now let’s learn using locks, conditions and semaphores in the with-statement, the last topic of our Python Multithreading Tutorial.

Using locks, conditions, and semaphores in the with-statement

If an object in this module has acquire() and release(), we can use it as a context-manager for a with-statement.

acquire() is called when the block is entered, and release() is called when the block is exited.

This is the syntax for the same:

with some_lock:
    pass #do something

This is the same as:

>>> some_lock.acquire()

>>> try:
        pass #do something
    finally:
        some_lock.release()

We can currently use Lock, RLock, Condition, Semaphore, and BoundedSemaphore objects as context-managers for with-statements.
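A quick way to confirm the release-on-exit behavior, including when the block raises, is to check locked() before and after. This is a minimal sketch; the held list is just a place to record the observations.

```python
import threading

some_lock = threading.Lock()
held = []

with some_lock:
    held.append(some_lock.locked())   # the lock is held inside the block

# The lock is released even when the block raises.
try:
    with some_lock:
        raise ValueError("something went wrong")
except ValueError:
    pass

held.append(some_lock.locked())       # released on the way out
print(held)
```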

So, this was all about Python Multithreading Tutorial. Hope you like our explanation.

Python Interview Questions on Multi-threading

- What is Python Multithreading? Explain with example.

- How is Multithreading achieved in Python?

- What is the use of Multithreading in Python?

- Which is better multithreading or multiprocessing in Python?

- How many threads can you have in Python?

Conclusion

This is all about the Python Multithreading with Example, Functions of Python Multithreading, Thread – Local Data, Thread Objects in Python Multithreading and Using locks, conditions, and semaphores in the with-statement in Python Multithreading.
