Python Mini Project – Speech Emotion Recognition with librosa



Speech emotion recognition makes for a great Python mini project. A familiar example comes from call centers: employees do not talk to every customer in the same manner; they adjust their tone and pitch to the person on the line. Everyone does this to some extent, so why single out call centers? Because the employees there recognize customers’ emotions from their speech so that they can improve their service and convert more people. In effect, they are performing speech emotion recognition. So, let’s discuss this project in detail.

Speech emotion recognition is a simple Python mini project that you are going to practice with DataFlair. Before I explain the terms related to it, make sure you have bookmarked the complete list of Python projects:

- Fake News Detection Python Project

- Parkinson’s Disease Detection Python Project

- Color Detection Python Project

- Speech Emotion Recognition Python Project

- Breast Cancer Classification Python Project

- Age and Gender Detection Python Project

- Handwritten Digit Recognition Python Project

- Chatbot Python Project

- Driver Drowsiness Detection Python Project

- Traffic Signs Recognition Python Project

- Image Caption Generator Python Project

What is Speech Emotion Recognition?

Speech Emotion Recognition, abbreviated as SER, is the act of attempting to recognize human emotion and affective states from speech. This is capitalizing on the fact that voice often reflects underlying emotion through tone and pitch. This is also the phenomenon that animals like dogs and horses employ to be able to understand human emotion.

SER is tough because emotions are subjective and annotating audio is challenging.

What is librosa?

librosa is a Python library for analyzing audio and music. It offers a flatter package layout, standardized interfaces and names, backwards compatibility, modular functions, and readable code. Later in this Python mini project, we show how to install it (and a few other packages) with pip.

What is JupyterLab?

JupyterLab is an open-source, web-based UI for Project Jupyter and it has all basic functionalities of the Jupyter Notebook, like notebooks, terminals, text editors, file browsers, rich outputs, and more. However, it also provides improved support for third party extensions.

To run code in JupyterLab, first start it from the command prompt:

C:\Users\DataFlair>jupyter lab

This opens a new session in your browser. Create a new Console and start typing your code. JupyterLab can execute multiple lines of code at once; pressing Enter does not execute your code, so press Shift+Enter to run it.

Speech Emotion Recognition – Objective

To build a model to recognize emotion from speech using the librosa and sklearn libraries and the RAVDESS dataset.

Speech Emotion Recognition – About the Python Mini Project

In this Python mini project, we will use the libraries librosa, soundfile, and sklearn (among others) to build a model using an MLPClassifier. This will be able to recognize emotion from sound files. We will load the data, extract features from it, then split the dataset into training and testing sets. Then, we’ll initialize an MLPClassifier and train the model. Finally, we’ll calculate the accuracy of our model.

The Dataset

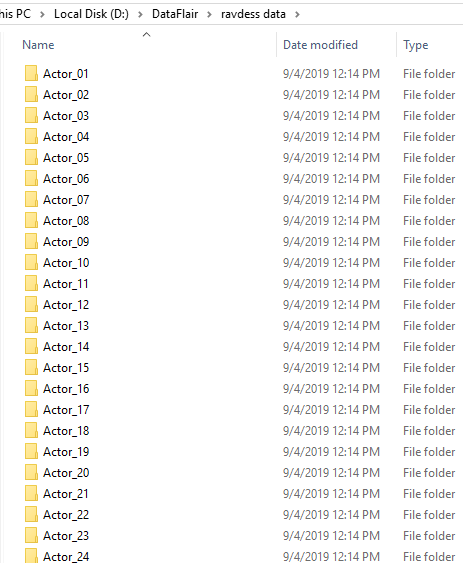

For this Python mini project, we’ll use the RAVDESS dataset; this is the Ryerson Audio-Visual Database of Emotional Speech and Song dataset, and is free to download. This dataset has 7356 files rated by 247 individuals 10 times on emotional validity, intensity, and genuineness. The entire dataset is 24.8GB from 24 actors, but we’ve lowered the sample rate on all the files, and you can download it here.

Prerequisites

You’ll need to install the following libraries with pip:

pip install librosa soundfile numpy scikit-learn pyaudio

If you run into issues installing librosa with pip, you can try it with conda.

Steps for speech emotion recognition python projects

1. Make the necessary imports:

import librosa
import soundfile
import os, glob, pickle
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.metrics import accuracy_score


2. Define a function extract_feature to extract the mfcc, chroma, and mel features from a sound file. This function takes four parameters: the file name and three Boolean parameters, one for each feature:

- mfcc: Mel Frequency Cepstral Coefficient, represents the short-term power spectrum of a sound

- chroma: Pertains to the 12 different pitch classes

- mel: Mel Spectrogram Frequency


Open the sound file with soundfile.SoundFile using with-as so it’s automatically closed once we’re done. Read from it and call it X. Also, get the sample rate. If chroma is True, get the Short-Time Fourier Transform of X.

Let result be an empty numpy array. Then, for each of the three features that is requested, call the corresponding function from librosa.feature (e.g. librosa.feature.mfcc for mfcc) and take the mean over time. Call numpy’s hstack() with result and the feature values, and store the output back in result. hstack() stacks arrays in sequence horizontally (column-wise). Finally, return result.
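To make the averaging and stacking concrete, here is a minimal sketch that uses random arrays in place of real librosa output. The shapes assume the 40 MFCCs requested in this project plus what I understand to be librosa's defaults of 12 chroma bins and 128 mel bands; the frame count of 100 and the helper name summarize are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_frames = 100  # hypothetical number of analysis frames in one clip

# Stand-ins for librosa output: one row per coefficient, one column per frame.
fake_mfcc = rng.normal(size=(40, n_frames))
fake_chroma = rng.random(size=(12, n_frames))
fake_mel = rng.random(size=(128, n_frames))


def summarize(feature_matrix):
    """Average each coefficient over time, giving one value per row."""
    return np.mean(feature_matrix.T, axis=0)


# Start from an empty array and append each summary, as the tutorial does.
result = np.array([])
for matrix in (fake_mfcc, fake_chroma, fake_mel):
    result = np.hstack((result, summarize(matrix)))

print(result.shape)  # (180,) = 40 + 12 + 128
```

Every clip therefore ends up as one fixed-length vector regardless of its duration, which is what lets the clips be stacked into a single training matrix.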

#DataFlair - Extract features (mfcc, chroma, mel) from a sound file
def extract_feature(file_name, mfcc, chroma, mel):
    with soundfile.SoundFile(file_name) as sound_file:
        X = sound_file.read(dtype="float32")
        sample_rate = sound_file.samplerate
        # The STFT magnitude is only needed for the chroma feature
        if chroma:
            stft = np.abs(librosa.stft(X))
        result = np.array([])
        if mfcc:
            # Average each of the 40 MFCCs over time
            mfccs = np.mean(librosa.feature.mfcc(y=X, sr=sample_rate, n_mfcc=40).T, axis=0)
            result = np.hstack((result, mfccs))
        if chroma:
            chroma_mean = np.mean(librosa.feature.chroma_stft(S=stft, sr=sample_rate).T, axis=0)
            result = np.hstack((result, chroma_mean))
        if mel:
            # Pass the signal as y=...; newer librosa releases reject it as a positional argument
            mel_mean = np.mean(librosa.feature.melspectrogram(y=X, sr=sample_rate).T, axis=0)
            result = np.hstack((result, mel_mean))
    return result

Screenshot:

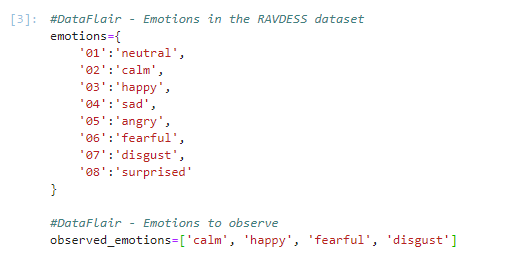

3. Now, let’s define a dictionary to hold the numbers and the emotions available in the RAVDESS dataset, and a list of the emotions we want to observe: calm, happy, fearful, and disgust.

#DataFlair - Emotions in the RAVDESS dataset
emotions={
    '01':'neutral',
    '02':'calm',
    '03':'happy',
    '04':'sad',
    '05':'angry',
    '06':'fearful',
    '07':'disgust',
    '08':'surprised'
}

#DataFlair - Emotions to observe
observed_emotions=['calm', 'happy', 'fearful', 'disgust']

Screenshot:


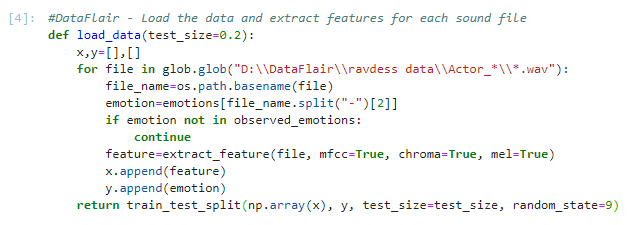

4. Now, let’s load the data with a function load_data(), which takes the relative size of the test set as a parameter. x and y start as empty lists; we use the glob() function from the glob module to get the pathnames of all the sound files in our dataset. The pattern we use for this is "D:\\DataFlair\\ravdess data\\Actor_*\\*.wav". This is because our dataset looks like this:

Screenshot:

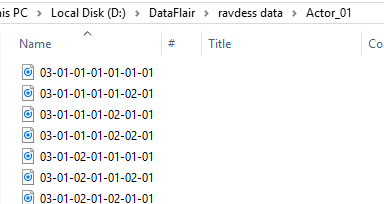

So, for each such path, we get the basename of the file and then the emotion code by splitting the name around '-' and taking the third value.
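The splitting step can be tried on its own before touching any audio. The sketch below assumes RAVDESS-style names made of seven two-digit fields separated by hyphens; the example path and the helper name emotion_from_path are hypothetical.

```python
import os

# Emotion codes as given in this project's dictionary.
EMOTIONS = {
    "01": "neutral", "02": "calm", "03": "happy", "04": "sad",
    "05": "angry", "06": "fearful", "07": "disgust", "08": "surprised",
}


def emotion_from_path(path):
    """Return the emotion label encoded in the third field of the file name."""
    file_name = os.path.basename(path)
    fields = file_name.split("-")
    if len(fields) < 3:
        # A folder name such as "Actor_01" has no hyphens to split on.
        raise ValueError(f"Unexpected file name: {file_name!r}")
    return EMOTIONS[fields[2]]


# Hypothetical path; forward slashes also work on Windows.
example = "ravdess data/Actor_12/03-01-06-01-02-01-12.wav"
print(emotion_from_path(example))  # fearful
```

The explicit length check turns the bare "list index out of range" error into a message that names the offending file, which helps when the glob pattern accidentally matches folders instead of .wav files.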


Using our emotions dictionary, this number is turned into an emotion, and our function checks whether this emotion is in our list of observed_emotions; if not, it continues to the next file. It makes a call to extract_feature and stores what is returned in ‘feature’. Then, it appends the feature to x and the emotion to y. So, the list x holds the features and y holds the emotions. We call the function train_test_split with these, the test size, and a random state value, and return that.

#DataFlair - Load the data and extract features for each sound file
def load_data(test_size=0.2):
    x,y=[],[]
    for file in glob.glob("D:\\DataFlair\\ravdess data\\Actor_*\\*.wav"):
        file_name=os.path.basename(file)
        emotion=emotions[file_name.split("-")[2]]
        if emotion not in observed_emotions:
            continue
        feature=extract_feature(file, mfcc=True, chroma=True, mel=True)
        x.append(feature)
        y.append(emotion)
    return train_test_split(np.array(x), y, test_size=test_size, random_state=9)

Screenshot:

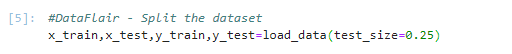

5. Time to split the dataset into training and testing sets! Let’s keep the test set 25% of everything and use the load_data function for this.

#DataFlair - Split the dataset
x_train,x_test,y_train,y_test=load_data(test_size=0.25)


6. Observe the shape of the training and testing datasets:

#DataFlair - Get the shape of the training and testing datasets
print((x_train.shape[0], x_test.shape[0]))

Screenshot:

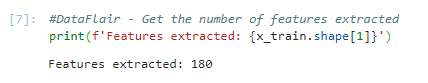
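The two numbers printed in step 6 follow from the sample count and test_size. The sketch below reproduces the arithmetic under two assumptions that are mine rather than the article's: that the test portion is rounded up, which is my understanding of how scikit-learn handles a fractional test_size, and that each of the four observed emotions contributes 192 clips. The helper name split_sizes is hypothetical.

```python
import math


def split_sizes(n_samples, test_size):
    """Turn a fractional test_size into (train, test) sample counts."""
    n_test = math.ceil(test_size * n_samples)  # test portion rounded up
    n_train = n_samples - n_test
    return n_train, n_test


# Assumption: 192 clips for each of the four observed emotions.
n_samples = 4 * 192
print(split_sizes(n_samples, 0.25))  # (576, 192)

# A size that does not divide evenly shows the rounding.
print(split_sizes(10, 0.25))  # (7, 3)
```

If your own counts are far smaller than expected, the usual cause is that fewer files were matched by the glob pattern than you intended.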

7. And get the number of features extracted.

#DataFlair - Get the number of features extracted
print(f'Features extracted: {x_train.shape[1]}')

Output Screenshot:

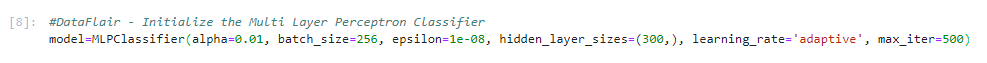

8. Now, let’s initialize an MLPClassifier. This is a Multi-layer Perceptron Classifier; it optimizes the log-loss function using LBFGS or stochastic gradient descent. Unlike SVM or Naive Bayes, the MLPClassifier has an internal neural network for the purpose of classification. This is a feedforward ANN model.

#DataFlair - Initialize the Multi Layer Perceptron Classifier
model=MLPClassifier(alpha=0.01, batch_size=256, epsilon=1e-08, hidden_layer_sizes=(300,), learning_rate='adaptive', max_iter=500)

Screenshot:

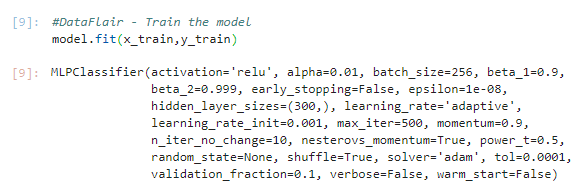
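For intuition about what a feedforward network with one hidden layer of 300 units computes, here is a rough NumPy sketch of a single forward pass. It is not scikit-learn's implementation: the weights are random rather than trained, and the ReLU hidden activation and softmax output are what I understand to be MLPClassifier's defaults for multi-class problems. The 180 input features are an assumption based on 40 MFCCs, 12 chroma bins, and 128 mel bands.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, n_hidden, n_classes = 180, 300, 4

# Randomly initialised weights; a trained model would have learned these.
W1 = rng.normal(scale=0.1, size=(n_features, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.1, size=(n_hidden, n_classes))
b2 = np.zeros(n_classes)


def forward(x):
    """One forward pass: ReLU hidden layer followed by a softmax output."""
    hidden = np.maximum(0.0, x @ W1 + b1)
    logits = hidden @ W2 + b2
    exp = np.exp(logits - logits.max())  # subtract max for numerical stability
    return exp / exp.sum()


# One made-up feature vector in, one probability per emotion out.
probabilities = forward(rng.normal(size=n_features))
print(probabilities.shape, float(probabilities.sum()))
```

Training adjusts W1, b1, W2, and b2 so that the largest probability lands on the correct emotion for as many training clips as possible.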

9. Fit/train the model.

#DataFlair - Train the model
model.fit(x_train,y_train)


10. Let’s predict the values for the test set. This gives us y_pred (the predicted emotions for the features in the test set).

#DataFlair - Predict for the test set
y_pred=model.predict(x_test)

Screenshot:

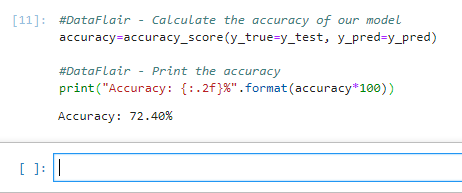
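The same predict() call also works for a single recording, as long as its feature vector is reshaped into a one-row 2-D array. The sketch below trains on synthetic clusters instead of real audio so that it runs on its own; the helper name predict_emotion, the cluster data, and the classifier settings used here are illustrative assumptions, not the article's configuration.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

rng = np.random.default_rng(0)
labels = ["calm", "happy", "fearful", "disgust"]

# Synthetic stand-in for the training data: 40 samples per emotion, each
# emotion clustered around its own centre in a 180-dimensional space.
centres = rng.normal(scale=5.0, size=(len(labels), 180))
x_train = np.vstack([c + rng.normal(size=(40, 180)) for c in centres])
y_train = [label for label in labels for _ in range(40)]

model = MLPClassifier(hidden_layer_sizes=(300,), max_iter=500, random_state=0)
model.fit(x_train, y_train)


def predict_emotion(model, feature_vector):
    """Predict the label for one feature vector of shape (n_features,)."""
    # predict() expects a 2-D array with one row per sample.
    return model.predict(np.asarray(feature_vector).reshape(1, -1))[0]


# In the real project, the vector would come from
# extract_feature(path, mfcc=True, chroma=True, mel=True).
new_sample = centres[1] + rng.normal(size=180)
print(predict_emotion(model, new_sample))
```

With the model trained in step 9, calling predict_emotion(model, extract_feature(path, mfcc=True, chroma=True, mel=True)) on a new .wav file would return one of the observed emotions; the features must be extracted with the same flags that were used for training.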

11. To calculate the accuracy of our model, we’ll call up the accuracy_score() function we imported from sklearn. Finally, we’ll round the accuracy to 2 decimal places and print it out.

#DataFlair - Calculate the accuracy of our model
accuracy=accuracy_score(y_true=y_test, y_pred=y_pred)

#DataFlair - Print the accuracy
print("Accuracy: {:.2f}%".format(accuracy*100))
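Accuracy here is the fraction of test clips whose predicted emotion matches the true one, so it measures how often the model can be trusted on recordings it has not seen. A minimal sketch of the same calculation in plain Python, using made-up labels rather than real model output:

```python
from collections import Counter

# Hypothetical true and predicted labels for eight test clips.
y_test = ["calm", "happy", "fearful", "disgust", "calm", "happy", "fearful", "disgust"]
y_pred = ["calm", "happy", "calm", "disgust", "calm", "fearful", "fearful", "disgust"]

# Count the positions where prediction and truth agree.
correct = sum(t == p for t, p in zip(y_test, y_pred))
accuracy = correct / len(y_test)
print("Accuracy: {:.2f}%".format(accuracy * 100))  # Accuracy: 75.00%

# Tally the mistakes to see which emotions get confused with which.
confusions = Counter((t, p) for t, p in zip(y_test, y_pred) if t != p)
for (true_label, predicted_label), count in confusions.items():
    print(f"{true_label} predicted as {predicted_label}: {count}")
```

Looking at the individual confusions is often more informative than the single accuracy figure, because it shows which pairs of emotions the features fail to separate.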

Summary

In this Python mini project, we learned to recognize emotions from speech. We used an MLPClassifier for this, with the soundfile library to read the sound files and the librosa library to extract features from them. In our run, the model delivered an accuracy of 72.4%, which is good enough for a first attempt.

Hope you enjoyed the mini python project.


Reference – Zenodo


IndexError Traceback (most recent call last)
in
----> 1 x_train,x_test,y_train,y_test=load_data(test_size=0.25)

in load_data(test_size)
      3 for file in glob.glob("C:\\Users\\N. Tejashwini\\Downloads\\speech-emotion-recognition-ravdess-data\\Actor_01"):
      4 file_name=os.path.basename(file)
----> 5 emotion=emotions[file_name.split("-")[2]]
      6 if emotion not in observed_emotions:
      7 continue

IndexError: list index out of range

I am getting the above error. How to solve could you please help me?

ParameterError: Invalid shape for monophonic audio: ndim=2 can be resolved by loading the file using librosa.

X = sound_file.read()

sample_rate=sound_file.samplerate

The above two lines in the method extract_feature can be replaced with the below line.

X, sample_rate = librosa.load(file_name)
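An alternative that keeps soundfile is to average the channels yourself. The sketch below assumes that soundfile returns multi-channel audio as an array of shape (frames, channels), which is my understanding of its default; the helper name to_mono and the synthetic clip are made up for illustration. Note also that librosa.load, as far as I know, resamples to 22050 Hz by default, so switching to it can change the sample rate as well as the channel count.

```python
import numpy as np


def to_mono(samples):
    """Average the channels of a (frames, channels) array; pass 1-D input through."""
    samples = np.asarray(samples, dtype="float32")
    if samples.ndim == 2:
        return samples.mean(axis=1)
    return samples


# Synthetic two-channel clip: 1000 frames, one channel all ones, one all zeros.
stereo = np.column_stack((np.ones(1000), np.zeros(1000)))
mono = to_mono(stereo)
print(stereo.shape, "->", mono.shape)  # (1000, 2) -> (1000,)
```

In extract_feature, this would mean calling to_mono on X right after sound_file.read(dtype="float32").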

The path seems to be incorrect in your code.

Replace line 3 in above code snippet with

for file in glob.glob("C:\\Users\\N. Tejashwini\\Downloads\\speech-emotion-recognition-ravdess-data\\Actor_01\\*.wav"):

And, if you want to extract data for all the actors, try

for file in glob.glob("C:\\Users\\N. Tejashwini\\Downloads\\speech-emotion-recognition-ravdess-data\\Actor_*\\*.wav"):

How to plot accuracy for this dataset model?

how to do visualization for this dataset


#DataFlair – Get the shape of the training and testing datasets

print((x_train.shape[0], x_test.shape[0]))

>(0, 0)

Getting this after completing all the above steps.

How to solve this

#DataFlair – Get the shape of the training and testing datasets

print((x_train.shape[0], x_test.shape[0]))

(0, 0)

I cannot load the data

can anybody help

@Aditya, I got that when I had the wrong path to the data set. It has a shape of (0,0) because no data is being loaded.

Check that you’re passing the correct path to the data.
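One quick way to check the path is to count what the glob pattern matches before extracting any features. The sketch below builds a throwaway folder so it can run anywhere; the folder layout and file name are hypothetical stand-ins for the real dataset.

```python
import glob
import os
import tempfile

# Build a tiny stand-in dataset so the check can run anywhere.
root = tempfile.mkdtemp()
actor_dir = os.path.join(root, "Actor_01")
os.makedirs(actor_dir)
open(os.path.join(actor_dir, "03-01-02-01-01-01-01.wav"), "wb").close()

good_pattern = os.path.join(root, "Actor_*", "*.wav")
bad_pattern = os.path.join(root, "Actors_*", "*.wav")  # deliberate typo

# glob returns an empty list, not an error, when nothing matches.
for pattern in (good_pattern, bad_pattern):
    matches = glob.glob(pattern)
    print(f"{len(matches)} file(s) matched")
```

If the count for your own pattern is 0, load_data has nothing to split, which is what produces the (0, 0) shape or the "n_samples=0" error.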


Hello, can anyone help me increase the accuracy of the model? The accuracy I am getting is around 59.9%.

I got an accuracy of 59.9% with the MLP classifier. Can anyone please help me increase my accuracy?

could you please tell me how you load the data

Where have you tested? Please test on unseen dataset before uploading

Got this error when splitting the dataset:

ValueError Traceback (most recent call last)
in
      1 #Split the dataset
----> 2 x_train,x_test,y_train,y_test=load_data(test_size=0.2)

in load_data(test_size)
     11 x.append(feature)
     12 y.append(emotion)
---> 13 return train_test_split(np.array(x), y, test_size=test_size, random_state=9)

ValueError: With n_samples=0, test_size=0.2 and train_size=None, the resulting train set will be empty. Adjust any of the aforementioned parameters.

is there any other solution ?

I am not getting this one please

I got the same error too, please let me know how to go about it.

I have resolved it by making some changes…

import zipfile

def load_data(test_size=0.2):
    x,y=[],[]
    with zipfile.ZipFile('speech-emotion-recognition-ravdess-data.zip', 'r') as z:
        z.extractall('temp')
    for file in glob.glob("temp\\Actor_*\\*.wav"):

——

The dataset is actually a .zip file that needs to be extracted first (here I opened it in read mode and extracted it into a separate folder called 'temp'); the .wav files are then retrieved from the temp folder with glob.glob() in the for loop.
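A self-contained sketch of that extract-then-glob approach follows. It creates a tiny archive of its own so that it runs without the real download; the archive name, its contents, and the use of os.path.join for portable paths are illustrative assumptions.

```python
import glob
import os
import tempfile
import zipfile

work_dir = tempfile.mkdtemp()

# Create a tiny archive that mimics the dataset layout (hypothetical contents).
archive_path = os.path.join(work_dir, "ravdess-sample.zip")
with zipfile.ZipFile(archive_path, "w") as z:
    z.writestr("Actor_01/03-01-03-01-01-01-01.wav", b"")

# Extract once, then glob the extracted folder rather than the archive.
extract_dir = os.path.join(work_dir, "temp")
with zipfile.ZipFile(archive_path, "r") as z:
    z.extractall(extract_dir)

wav_files = glob.glob(os.path.join(extract_dir, "Actor_*", "*.wav"))
print(len(wav_files))
```

Extracting inside load_data repeats the work on every call, so for the full dataset it is usually simpler to extract the archive once and point the glob pattern at the resulting folder.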

try this

Hey, you passed the path for the zip file in the “with zipfile.Zipfile()” command right?

File “”, line 6

with zipfile.ZipFile(‘speech-emotion-recognition-ravdess-data.zip’, ‘r’) as z:

^

SyntaxError: invalid character in identifier

I have used your code but its showing errors..how to solve this

and how to accesss the load data

If you copy-pasted the line(s) from here, change the curly (typographic) quotation marks to plain single quotes, or else type the statement(s) yourself…

Also make sure to pass the correct path.

Here the project is about recognising the emotion of the speech, so the output should be the emotion of our speech, like happy, angry, etc.

but here the output is accuracy…

what is the need of accuracy and how it is related to the project?

could anyone please clarify this.

Can you please show us how to test the model and the output of detecting emotion of input audio?

—————————————————————————

ModuleNotFoundError Traceback (most recent call last)
in
----> 1 import librosa
      2 import soundfile
      3 import os, glob, pickle
      4 import numpy as np
      5 from sklearn.model_selection import train_test_split

~/conda/envs/python/lib/python3.6/site-packages/librosa/__init__.py in
     10 # And all the librosa sub-modules
     11 from ._cache import cache
---> 12 from . import core
     13 from . import beat
     14 from . import decompose

~/conda/envs/python/lib/python3.6/site-packages/librosa/core/__init__.py in
    123 """
    124
--> 125 from .time_frequency import * # pylint: disable=wildcard-import
    126 from .audio import * # pylint: disable=wildcard-import
    127 from .spectrum import * # pylint: disable=wildcard-import

~/conda/envs/python/lib/python3.6/site-packages/librosa/core/time_frequency.py in
      9 import six
     10
---> 11 from ..util.exceptions import ParameterError
     12 from ..util.deprecation import Deprecated
     13

~/conda/envs/python/lib/python3.6/site-packages/librosa/util/__init__.py in
     75 """
     76
---> 77 from .utils import * # pylint: disable=wildcard-import
     78 from .files import * # pylint: disable=wildcard-import
     79 from .matching import * # pylint: disable=wildcard-import

~/conda/envs/python/lib/python3.6/site-packages/librosa/util/utils.py in
     13 from .._cache import cache
     14 from .exceptions import ParameterError
---> 15 from .decorators import deprecated
     16
     17 # Constrain STFT block sizes to 256 KB

~/conda/envs/python/lib/python3.6/site-packages/librosa/util/decorators.py in
      7 from decorator import decorator
      8 import six
----> 9 from numba.decorators import jit as optional_jit
     10
     11 __all__ = ['moved', 'deprecated', 'optional_jit']

ModuleNotFoundError: No module named 'numba.decorators'

I’m getting this error. How can i resolve it?

pip install numba==0.48

use this in your cmd

Could this library be used for Voice Identification and authentication?

What other ML techniques can I use on this project to improve accuracy?

How do I convert the video files of the dataset to speech?

What kind of neural network is used in this project?

NameError Traceback (most recent call last)
in
     13 chroma=np.mean(librosa.feature.chroma_stft(S=stft, sr=sample_rate).T,axis=0)
     14 result=np.hstack((result, chroma))
---> 15 if mel:
     16 mel=np.mean(librosa.feature.melspectrogram(X, sr=sample_rate).T,axis=0)
     17 result=np.hstack((result, mel))

NameError: name 'mel' is not defined

This is the error I am getting

what should I do now?

how do you solve this??? I am also getting this error

ValueError Traceback (most recent call last)
in
      1 #DataFlair - Split the dataset
----> 2 x_train,x_test,y_train,y_test=load_data(test_size=0.25)

in load_data(test_size)
     10 x.append(feature)
     11 y.append(emotion)
---> 12 return train_test_split(np.array(x), y, test_size=test_size, random_state=9)

C:\ProgramData\Anaconda3\lib\site-packages\sklearn\model_selection\_split.py in train_test_split(*arrays, **options)
   2128
   2129 n_samples = _num_samples(arrays[0])
-> 2130 n_train, n_test = _validate_shuffle_split(n_samples, test_size, train_size,
   2131 default_test_size=0.25)
   2132

C:\ProgramData\Anaconda3\lib\site-packages\sklearn\model_selection\_split.py in _validate_shuffle_split(n_samples, test_size, train_size, default_test_size)
   1808
   1809 if n_train == 0:
-> 1810 raise ValueError(
   1811 'With n_samples={}, test_size={} and train_size={}, the '
   1812 'resulting train set will be empty. Adjust any of the '

ValueError: With n_samples=0, test_size=0.25 and train_size=None, the resulting train set will be empty. Adjust any of the aforementioned parameters.

how do i solve this problem please help as soon as possible

How do I predict the emotion for a single sound file?

ValueError Traceback (most recent call last)
in
      1 #DataFlair - Split the dataset
----> 2 x_train,x_test,y_train,y_test=load_data(test_size=0.25)

in load_data(test_size)
     10 x.append(feature)
     11 y.append(emotion)
---> 12 return train_test_split(np.array(x), y, test_size=test_size, random_state=9)

~\anaconda3\lib\site-packages\sklearn\model_selection\_split.py in train_test_split(*arrays, **options)
   2128
   2129 n_samples = _num_samples(arrays[0])
-> 2130 n_train, n_test = _validate_shuffle_split(n_samples, test_size, train_size,
   2131 default_test_size=0.25)
   2132

~\anaconda3\lib\site-packages\sklearn\model_selection\_split.py in _validate_shuffle_split(n_samples, test_size, train_size, default_test_size)
   1808
   1809 if n_train == 0:
-> 1810 raise ValueError(
   1811 'With n_samples={}, test_size={} and train_size={}, the '
   1812 'resulting train set will be empty. Adjust any of the '

ValueError: With n_samples=0, test_size=0.25 and train_size=None, the resulting train set will be empty. Adjust any of the aforementioned parameters.

can someone help me to resolve this??

Q1. Why did you reduce the sample rate?

Q2. How do I predict the emotion from an arbitrary voice recording? The project doesn’t mention this at all.

How to test a new audio file? Thanks!