Python Mini Project – Speech Emotion Recognition with librosa

Speech emotion recognition makes a great Python mini project, and call centers are a familiar example of it in practice. If you have ever noticed, call center employees never talk to every customer in the same manner; their pitch and tone change from one customer to the next. Most people do this in everyday conversation too, so how is it relevant to call centers? Here is your answer: the employees recognize customers’ emotions from their speech so that they can improve their service and convert more people. In this way, they are using speech emotion recognition. So, let’s discuss this project in detail.

Speech emotion recognition is a simple Python mini project that you are going to practice with DataFlair. Before I explain the terms related to this project, make sure you have bookmarked the complete list of Python projects:

- Fake News Detection Python Project

- Parkinson’s Disease Detection Python Project

- Color Detection Python Project

- Speech Emotion Recognition Python Project

- Breast Cancer Classification Python Project

- Age and Gender Detection Python Project

- Handwritten Digit Recognition Python Project

- Chatbot Python Project

- Driver Drowsiness Detection Python Project

- Traffic Signs Recognition Python Project

- Image Caption Generator Python Project

What is Speech Emotion Recognition?

Speech Emotion Recognition, abbreviated as SER, is the act of attempting to recognize human emotion and affective states from speech. It capitalizes on the fact that voice often reflects underlying emotion through tone and pitch. This is also the phenomenon that animals like dogs and horses rely on to understand human emotion.

SER is tough because emotions are subjective and annotating audio is challenging.

What is librosa?

librosa is a Python library for analyzing audio and music. It has a flat package layout, standardized interfaces and names, backwards compatibility, modular functions, and readable code. Later in this Python mini project, we show how to install it (and a few other packages) with pip.

What is JupyterLab?

JupyterLab is an open-source, web-based UI for Project Jupyter and it has all basic functionalities of the Jupyter Notebook, like notebooks, terminals, text editors, file browsers, rich outputs, and more. However, it also provides improved support for third party extensions.

To run code in JupyterLab, you’ll first need to start it from the command prompt:

C:\Users\DataFlair>jupyter lab

This opens a new session in your browser. Create a new Console and start typing in your code. JupyterLab can execute multiple lines of code at once; pressing Enter does not execute your code, so you’ll need to press Shift+Enter instead.

Speech Emotion Recognition – Objective

To build a model to recognize emotion from speech using the librosa and sklearn libraries and the RAVDESS dataset.

Speech Emotion Recognition – About the Python Mini Project

In this Python mini project, we will use the libraries librosa, soundfile, and sklearn (among others) to build a model using an MLPClassifier. This will be able to recognize emotion from sound files. We will load the data, extract features from it, then split the dataset into training and testing sets. Then, we’ll initialize an MLPClassifier and train the model. Finally, we’ll calculate the accuracy of our model.

The Dataset

For this Python mini project, we’ll use the RAVDESS dataset; this is the Ryerson Audio-Visual Database of Emotional Speech and Song dataset, and is free to download. This dataset has 7356 files. Each file was rated 10 times on emotional validity, intensity, and genuineness, with ratings provided by 247 individuals. The entire dataset is 24.8GB from 24 actors, but we’ve lowered the sample rate on all the files, and you can download it here.

Prerequisites

You’ll need to install the following libraries with pip:

pip install librosa soundfile numpy scikit-learn pyaudio

Note that the scikit-learn package is imported as sklearn in the code. If you run into issues installing librosa with pip, you can try it with conda.

Steps for speech emotion recognition python projects

1. Make the necessary imports:

    import librosa
    import soundfile
    import os, glob, pickle
    import numpy as np
    from sklearn.model_selection import train_test_split
    from sklearn.neural_network import MLPClassifier
    from sklearn.metrics import accuracy_score

2. Define a function extract_feature to extract the mfcc, chroma, and mel features from a sound file. This function takes 4 parameters- the file name and three Boolean parameters for the three features:

- mfcc: Mel Frequency Cepstral Coefficients, which represent the short-term power spectrum of a sound

- chroma: Pertains to the 12 different pitch classes

- mel: Mel spectrogram, a spectrogram with frequencies on the mel scale

Open the sound file with soundfile.SoundFile using with-as so it’s automatically closed once we’re done. Read from it and call it X. Also, get the sample rate. If chroma is True, get the Short-Time Fourier Transform of X.

Let result be an empty numpy array. Now, for each of the three features, if it is requested, make a call to the corresponding function from librosa.feature (e.g. librosa.feature.mfcc for mfcc), and take the mean value over the time frames. Call the function hstack() from numpy with result and the feature value, and store this in result. hstack() stacks arrays in sequence horizontally (in a columnar fashion). Then, return the result.

    #DataFlair - Extract features (mfcc, chroma, mel) from a sound file
    def extract_feature(file_name, mfcc, chroma, mel):
        with soundfile.SoundFile(file_name) as sound_file:
            X = sound_file.read(dtype="float32")
            sample_rate = sound_file.samplerate
        # The magnitude STFT is only needed for the chroma feature
        if chroma:
            stft = np.abs(librosa.stft(X))
        result = np.array([])
        if mfcc:
            mfccs = np.mean(librosa.feature.mfcc(y=X, sr=sample_rate, n_mfcc=40).T, axis=0)
            result = np.hstack((result, mfccs))
        if chroma:
            chroma_mean = np.mean(librosa.feature.chroma_stft(S=stft, sr=sample_rate).T, axis=0)
            result = np.hstack((result, chroma_mean))
        if mel:
            # Pass the signal as y=...; recent librosa versions reject it as a positional argument
            mel_mean = np.mean(librosa.feature.melspectrogram(y=X, sr=sample_rate).T, axis=0)
            result = np.hstack((result, mel_mean))
        return result

Screenshot:

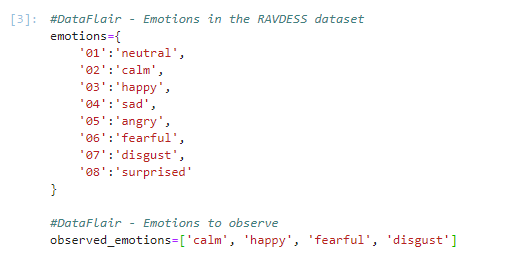
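To make the averaging and stacking in step 2 concrete, here is a minimal sketch that uses random arrays as stand-ins for the librosa outputs, so it runs without any audio file. The bin counts are assumptions: 40 comes from n_mfcc=40 in our function, while 12 and 128 are what we understand to be librosa’s default sizes for the chroma and mel features. The frame count of 100 is arbitrary.

```python
import numpy as np

# Stand-ins for librosa outputs: each feature has shape (n_bins, n_frames).
rng = np.random.default_rng(0)
mfcc_frames = rng.normal(size=(40, 100))    # 40 MFCCs per frame
chroma_frames = rng.random(size=(12, 100))  # 12 pitch classes per frame
mel_frames = rng.random(size=(128, 100))    # 128 mel bands per frame

result = np.array([])
for frames in (mfcc_frames, chroma_frames, mel_frames):
    # Transpose to (n_frames, n_bins), then average over the frames.
    per_bin_mean = np.mean(frames.T, axis=0)
    # Append this feature's means to the end of the running vector.
    result = np.hstack((result, per_bin_mean))

print(result.shape)  # (180,)
```

Whatever the length of the recording, each file ends up as one fixed-length vector, which is what the classifier needs.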

3. Now, let’s define a dictionary to hold numbers and the emotions available in the RAVDESS dataset, and a list to hold those we want- calm, happy, fearful, disgust.

    #DataFlair - Emotions in the RAVDESS dataset
    emotions={
      '01':'neutral',
      '02':'calm',
      '03':'happy',
      '04':'sad',
      '05':'angry',
      '06':'fearful',
      '07':'disgust',
      '08':'surprised'
    }

    #DataFlair - Emotions to observe
    observed_emotions=['calm', 'happy', 'fearful', 'disgust']

Screenshot:

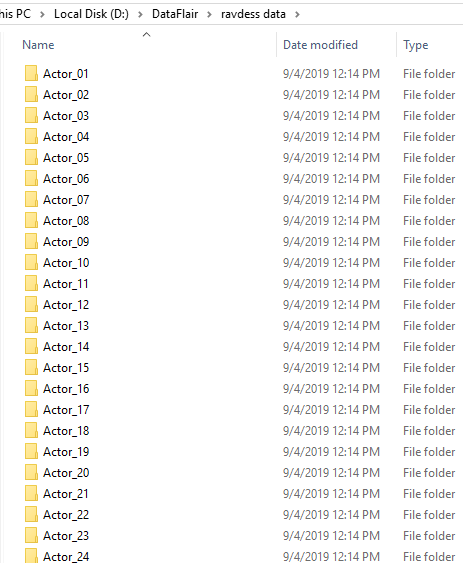

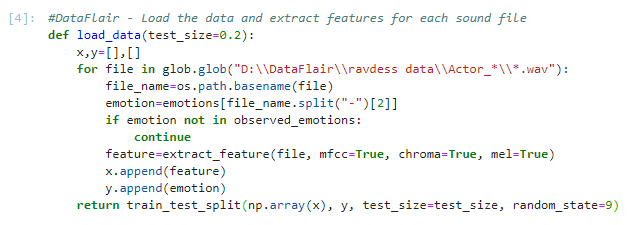

4. Now, let’s load the data with a function load_data(), which takes the relative size of the test set as a parameter. x and y start as empty lists; we’ll use the glob() function from the glob module to get all the pathnames for the sound files in our dataset. The pattern we use for this is "D:\\DataFlair\\ravdess data\\Actor_*\\*.wav" (adjust the path to wherever you saved the dataset). This is because our dataset looks like this:

Screenshot:

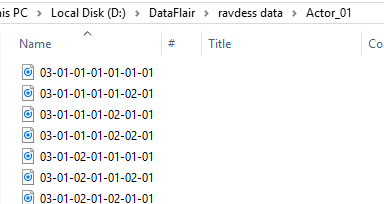

So, for each such path, we get the basename of the file and then the emotion code by splitting the name around ‘-’ and taking the third value.
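As a minimal sketch of this parsing step, the snippet below maps a RAVDESS-style filename to its emotion label. The helper name emotion_from_path and the example paths are ours for illustration, not part of the project code; unlike the direct lookup in load_data(), it returns None for names that do not follow the dash-separated scheme.

```python
import os

# Emotion codes, as in the tutorial's `emotions` dictionary.
EMOTIONS = {
    "01": "neutral", "02": "calm", "03": "happy", "04": "sad",
    "05": "angry", "06": "fearful", "07": "disgust", "08": "surprised",
}

def emotion_from_path(path):
    """Return the emotion encoded in a RAVDESS-style filename, or None.

    Hypothetical helper for illustration only.
    """
    parts = os.path.basename(path).split("-")
    if len(parts) < 3:
        # Not a dash-separated RAVDESS name (e.g. a folder or stray file).
        return None
    # The third field (index 2) holds the emotion code.
    return EMOTIONS.get(parts[2])

print(emotion_from_path("Actor_02/03-01-02-01-01-01-02.wav"))  # calm
print(emotion_from_path("speech"))                              # None
```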

Using our emotions dictionary, this number is turned into an emotion, and our function checks whether this emotion is in our list of observed_emotions; if not, it continues to the next file. It makes a call to extract_feature and stores what is returned in ‘feature’. Then, it appends the feature to x and the emotion to y. So, the list x holds the features and y holds the emotions. We call the function train_test_split with these, the test size, and a random state value, and return that.

    #DataFlair - Load the data and extract features for each sound file
    def load_data(test_size=0.2):
        x,y=[],[]
        for file in glob.glob("D:\\DataFlair\\ravdess data\\Actor_*\\*.wav"):
            file_name=os.path.basename(file)
            emotion=emotions[file_name.split("-")[2]]
            if emotion not in observed_emotions:
                continue
            feature=extract_feature(file, mfcc=True, chroma=True, mel=True)
            x.append(feature)
            y.append(emotion)
        return train_test_split(np.array(x), y, test_size=test_size, random_state=9)

Screenshot:

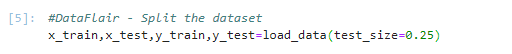

5. Time to split the dataset into training and testing sets! Let’s keep the test set 25% of everything and use the load_data function for this.

    #DataFlair - Split the dataset
    x_train,x_test,y_train,y_test=load_data(test_size=0.25)

6. Observe the shape of the training and testing datasets:

    #DataFlair - Get the shape of the training and testing datasets
    print((x_train.shape[0], x_test.shape[0]))

Screenshot:

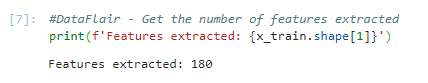
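To see how test_size translates into the two counts, here is a small sketch on placeholder data. The sample count of 1440 and the all-zero feature matrix are assumptions for illustration only; with your own data the totals depend on how many files match observed_emotions.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder data: 1440 feature vectors of length 180 with dummy labels.
x = np.zeros((1440, 180))
y = ["calm", "happy", "fearful", "disgust"] * 360

x_train, x_test, y_train, y_test = train_test_split(
    x, y, test_size=0.25, random_state=9
)

# A quarter of the rows go to the test set, the rest to the training set.
print((x_train.shape[0], x_test.shape[0]))  # (1080, 360)
```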

7. And get the number of features extracted.

    #DataFlair - Get the number of features extracted
    print(f'Features extracted: {x_train.shape[1]}')

Output Screenshot:

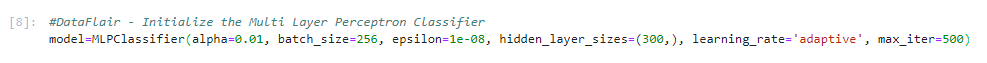

8. Now, let’s initialize an MLPClassifier. This is a Multi-layer Perceptron Classifier; it optimizes the log-loss function using LBFGS or stochastic gradient descent. Unlike SVM or Naive Bayes, the MLPClassifier has an internal neural network for the purpose of classification. This is a feedforward ANN model.

    #DataFlair - Initialize the Multi Layer Perceptron Classifier
    model=MLPClassifier(alpha=0.01, batch_size=256, epsilon=1e-08, hidden_layer_sizes=(300,), learning_rate='adaptive', max_iter=500)

Screenshot:

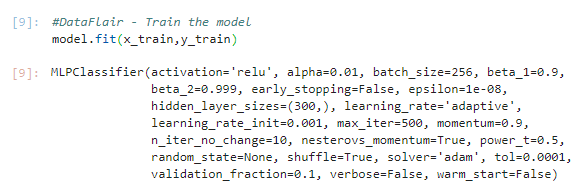

9. Fit/train the model.

    #DataFlair - Train the model
    model.fit(x_train,y_train)

10. Let’s predict the values for the test set. This gives us y_pred (the predicted emotions for the features in the test set).

    #DataFlair - Predict for the test set
    y_pred=model.predict(x_test)

Screenshot:

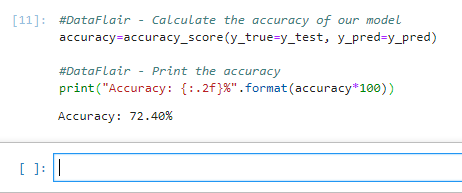

11. To calculate the accuracy of our model, we’ll call up the accuracy_score() function we imported from sklearn. Finally, we’ll round the accuracy to 2 decimal places and print it out.

    #DataFlair - Calculate the accuracy of our model
    accuracy=accuracy_score(y_true=y_test, y_pred=y_pred)

    #DataFlair - Print the accuracy
    print("Accuracy: {:.2f}%".format(accuracy*100))
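To make the metric concrete: accuracy is the fraction of test samples whose predicted label equals the true label. The sketch below recomputes it by hand on made-up labels (these are not results from this project), and simple_accuracy is a hypothetical helper; in the project itself, accuracy_score() does this for us.

```python
# Made-up labels for illustration only.
y_true = ["calm", "happy", "fearful", "disgust", "calm"]
y_pred = ["calm", "happy", "calm", "disgust", "happy"]

def simple_accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    matches = sum(t == p for t, p in zip(y_true, y_pred))
    return matches / len(y_true)

accuracy = simple_accuracy(y_true, y_pred)
print("Accuracy: {:.2f}%".format(accuracy * 100))  # Accuracy: 60.00%
```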

Summary

In this Python mini project, we learned to recognize emotions from speech. We used an MLPClassifier for this and made use of the soundfile library to read the sound file, and the librosa library to extract features from it. In our run, the model delivered an accuracy of 72.4%, which is good enough for a mini project. Your number will vary from run to run because training the MLPClassifier involves randomness.

Hope you enjoyed the mini python project.

Reference – Zenodo

Did you figure this out? If yes could you help me.

where can I find link to this source code?

The code is given in the article itself, split into parts. You can run it part by part, which should also help you understand it. Hope it helps.

If someone who successfully ran this code, please help)

Run the code step by step in Jupyter or Spyder. It’s running fine and producing a test accuracy of 74%.

kindly send me code

Can you please help me

The code is running fine.

from sklearn.model_selection import train_test_split is not working for me. Can you please help me resolve this issue?

we are getting this error

TypeError: melspectrogram() takes 0 positional arguments but 1 positional argument (and 1 keyword-only argument) were given

please help

Make this change in the extract_feature function

mel=np.mean(librosa.feature.melspectrogram(y=X, sr=sample_rate).T,axis=0)

ValueError: With n_samples=0, test_size=0.2 and train_size=None, the resulting train set will be empty. Adjust any of the aforementioned parameters.

help me out with this

Please try to check if the path from where the file is loaded exists or not. Also, then try checking if the variables reading the files hold any data. Hope it solves your problem.
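A quick way to run this check is to count what the glob pattern actually matches before calling load_data(). This is a minimal sketch; find_wav_files is a hypothetical helper and "ravdess data" is an assumed folder name, so replace it with the location of the dataset on your machine.

```python
import glob
import os

def find_wav_files(dataset_root):
    """Return the .wav paths found in Actor_* folders under dataset_root.

    Hypothetical helper for illustration only.
    """
    pattern = os.path.join(dataset_root, "Actor_*", "*.wav")
    return sorted(glob.glob(pattern))

files = find_wav_files("ravdess data")  # assumed location; adjust to yours
if not files:
    # An empty match is what leads to the n_samples=0 error in train_test_split.
    print("No .wav files matched; check the dataset path and folder layout.")
else:
    print(f"Found {len(files)} files, e.g. {files[0]}")
```

If nothing is matched, x and y stay empty and train_test_split() has nothing to split.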

Did your error get resolved? Because I am getting the same error.

how do i input the test data and test for the output

yes plz, tell us how can we input a sound file and get output from this trained model.

We use the dataset we have for testing purposes. We split the data set into two parts having 75% and 25%. We use the 25% data to test the model.

ValueError: With n_samples=0, test_size=0.2 and train_size=None, the resulting train set will be empty. Adjust any of the aforementioned parameters.

help me out with this

Hi, did you get the answer?

I am stuck on the same question.

It’s probably because something is wrong in the path to the directory of the sound files.

Most likely in this line: for file in glob.glob("//Users//Apple//Desktop//Program//coding//GitHub//speech-emotion-recognition-ravdess-data//Actor_*//*.wav"): You might have missed something here; a common mistake is in the last part: …//Actor_*//*.wav"):

I am a 16-year-old student learning machine learning.

Hope this helps; a lot of time has passed since you asked. How are things going?

meaning i didnt understand what you said

The project is about recognising emotion from speech, so the output should be something like sad or happy. Why is the output an accuracy score? Can you tell me?

same question bro

Actually, the output of the model is an emotion such as sad or happy. After getting the outputs for the test input data, we compare them with the known labels for those files. The accuracy comes from this comparison and tells us how well the model performs.

How to give test input data for actual outputs

Invalid shape for monophonic audio: ndim=2, shape=(166566, 2)

I am getting this error in 5th instruction while splitting the dataset. Can anyone help me?

Hi, did you get the answer?

I am stuck on the same question.

yes i got the same error did u solved it

You are getting this error because the WAV file is stereo. You can solve this issue by converting it to mono, for example with pydub (from pydub import AudioSegment) by setting the audio to only 1 channel. Alternatively, you can pick out a single channel from the array yourself: mono1 = audio_signal[:, 0] or mono2 = audio_signal[:, 1]. I hope this helps you.
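Another option, sketched here in plain NumPy, is to average the channels. This assumes the audio was read as a 2-D array of shape (frames, channels), which is what the shape in the error message suggests; to_mono is a hypothetical helper and the sample values are made up.

```python
import numpy as np

def to_mono(samples):
    """Average the channels of a (frames, channels) array.

    One-dimensional (already mono) input is returned unchanged.
    Hypothetical helper for illustration only.
    """
    samples = np.asarray(samples)
    if samples.ndim == 2:
        return samples.mean(axis=1)
    return samples

# Three made-up stereo frames: columns are the left and right channels.
stereo = np.array([[0.2, 0.4], [0.0, 1.0], [-0.5, 0.5]], dtype="float32")
mono = to_mono(stereo)
print(mono.shape)  # (3,)
```

In extract_feature(), a call such as X = to_mono(X) right after reading the file would apply it before any librosa function sees the signal.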

yes plz tell us how can we input a sound file and get output from this trained model.

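We can extract the features, here mfcc, chroma, and mel, from the audio file using the respective modules. The features are given as inputs to the model for training, and the features of a new file can be passed to the trained model in the same way to get a prediction.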
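A minimal sketch of predicting for a single sample follows. To keep it runnable without audio files, the training data is synthetic (two made-up clusters of 180-dimensional vectors) and the model is deliberately small; with the project code you would use the already trained model and a vector returned by extract_feature().

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in for the training data: two separated clusters.
rng = np.random.default_rng(0)
x_train = np.vstack([
    rng.normal(-2.0, 1.0, size=(40, 180)),
    rng.normal(2.0, 1.0, size=(40, 180)),
])
y_train = ["calm"] * 40 + ["happy"] * 40

model = MLPClassifier(hidden_layer_sizes=(20,), max_iter=500, random_state=0)
model.fit(x_train, y_train)

# For a real file this would be:
# feature = extract_feature(path, mfcc=True, chroma=True, mel=True)
feature = rng.normal(2.0, 1.0, size=180)

# predict() expects a 2-D array of shape (n_samples, n_features),
# so a single feature vector is reshaped to one row.
predicted = model.predict(feature.reshape(1, -1))
print(predicted[0])
```

The new file must go through the same feature extraction, with the same flags, as the training files so that the vector has the same length.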

name ‘mel’ is not defined

This is the error I am getting while executing the second part

Actually, mel is one of the outputs of the extract_feature() function. So, can you please tell us the specific line at which you are getting this error?

hi, could you please send me the complete executed source code. because I am currently working on this project. it is really so important to me. my emailid:[email protected]

Do you have the code

hi sameera, could you please send me the complete executed source code, because currently, I am working on this project it is so important to me. my emailid:[email protected]

Could you please add accuracy and loss plots for better visualisation of training model

Invalid shape for monophonic audio: ndim=2, shape=(172972, 2)

I am getting this error in 5th instruction while splitting the dataset. Can anyone help me?

I ran the same piece of code several times and got different results:

model=MLPClassifier(alpha=0.01, batch_size=256, epsilon=1e-08, hidden_layer_sizes=(300,), learning_rate='adaptive', max_iter=500)

model.fit(x_train,y_train)

y_pred=model.predict(x_test)

accuracy=accuracy_score(y_true=y_test, y_pred=y_pred)

print("Accuracy: {:.2f}%".format(accuracy*100))

I ran it 3 times and here are the results:

Accuracy: 59.38%

Accuracy: 73.96%

Accuracy: 61.98%

You got different results on running the same algorithm on the same data because the MLPClassifier is stochastic. That is, its behavior includes elements of randomness, such as the initial weights and the order in which samples are processed. Because of this, the performance differs from run to run. One way to avoid this is to fix the seed, for example by passing random_state to MLPClassifier.
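Here is a minimal sketch of fixing the seed. It uses a synthetic dataset from make_classification as a stand-in for the extracted features, and the seed value 42 is arbitrary; fit_and_predict is a hypothetical helper that trains a fresh model with the tutorial’s settings plus random_state.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in for the extracted audio features.
X, y = make_classification(n_samples=400, n_features=20, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=9
)

def fit_and_predict(seed):
    """Train a fresh model with a fixed seed and predict the test set."""
    model = MLPClassifier(alpha=0.01, batch_size=256, epsilon=1e-08,
                          hidden_layer_sizes=(300,), learning_rate='adaptive',
                          max_iter=500, random_state=seed)
    model.fit(x_train, y_train)
    return model.predict(x_test)

first = fit_and_predict(seed=42)
second = fit_and_predict(seed=42)

# With the same seed and data, both runs should give the same predictions.
print(np.array_equal(first, second))
```

Fixing the seed makes a run repeatable; it does not make the model more accurate, so it is still worth comparing a few different seeds.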

this is really helpfull.thanks!

ValueError: With n_samples=0, test_size=0.2 and train_size=None, the resulting train set will be empty. Adjust any of the aforementioned parameters.

this is path error.. set the path properly

I am getting error that x_test is not defined

I have tried the code explained in this tutorial, and there are some things that should be made clear.

1) The dataset on Google Drive has all the audio files with only 1 channel, which means the audio files are mono instead of stereo. The tutorial does not explain this, and I had downloaded the dataset from where its authors originally published it.

Doing so caused the following error:

librosa.util.exceptions.ParameterError: Invalid shape for monophonic audio: ndim=2, shape=(172972, 2)

This can be solved simply by converting the audio to mono, which is easy to do with the Python package pydub.

2) After the training process completed, I checked the confusion matrix of the trained model, and the result I get isn’t always great.

Not only does the accuracy vary from 48% to 69%, but the confusion matrix also shows how good the model actually is.

This is one of the results I got by executing the code and printing the confusion matrix:

(1080, 360)

Features extracted: 180

Accuracy: 45.83%

Confusion Matrix:

[[21 1 7 1 17 1 0 4]

[ 0 21 10 0 7 8 0 1]

[ 0 1 26 0 11 0 0 4]

[ 1 2 5 16 24 0 1 2]

[ 0 1 4 1 43 0 0 3]

[ 0 6 4 0 5 3 0 0]

[ 0 4 8 4 13 11 9 4]

[ 0 0 2 0 13 4 0 26]]

Confusion Matrix Normalized:

[

[0.40 0.01 0.13 0.01 0.32 0.01 0.00 0.07]

[0.00 0.44 0.21 0.00 0.14 0.17 0.00 0.02]

[0.00 0.02 0.61 0.00 0.26 0.00 0.00 0.09]

[0.01 0.03 0.09 0.31 0.47 0.00 0.01 0.03]

[0.00 0.01 0.07 0.01 0.82 0.00 0.00 0.05]

[0.00 0.33 0.22 0.00 0.27 0.16 0.00 0.00]

[0.00 0.07 0.15 0.07 0.24 0.20 0.16 0.07]

[0.00 0.00 0.04 0.00 0.28 0.08 0.00 0.57]

]

While some classifications have more than 50% correct, the rest is under 50% which is not great.

I think it’s the sklearn classifier that doesn’t actually do a good job on this.
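For readers who want to reproduce this kind of analysis, the sketch below shows how a confusion matrix is normalized row by row. The 3x3 matrix is made up for illustration, and we assume rows are the true classes and columns the predicted ones, as in scikit-learn’s confusion_matrix().

```python
import numpy as np

# Made-up counts: rows are true classes, columns are predicted classes.
cm = np.array([[8, 1, 1],
               [2, 6, 2],
               [0, 5, 5]])

# Divide each row by its total so every row sums to 1.
row_totals = cm.sum(axis=1, keepdims=True)
cm_normalized = cm / row_totals

# The diagonal holds the share of each true class predicted correctly.
per_class_recall = np.diag(cm_normalized)
# Overall accuracy is the sum of the diagonal counts over all samples.
overall_accuracy = np.trace(cm) / cm.sum()

print(per_class_recall)  # [0.8 0.6 0.5]
print(overall_accuracy)
```

The diagonal of the normalized matrix shows which emotions the model handles well, which a single accuracy number hides.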

Data flair can you make a deployment model using flask to make web application

I was getting this error when i train the data

C:\Users\saite\AppData\Local\Temp/ipykernel_10124/4098696342.py:16: FutureWarning: Pass y=[0. 0. 0. … 0. 0. 0.] as keyword args. From version 0.10 passing these as positional arguments will result in an error

mel=np.mean(librosa.feature.melspectrogram(X, sr=sample_rate).T,axis=0)

C:\Users\saite\AppData\Local\Temp/ipykernel_10124/4098696342.py:16: FutureWarning: Pass y=[0.0000000e+00 3.0517578e-05 3.0517578e-05 … 0.0000000e+00 0.0000000e+00

0.0000000e+00] as keyword args. From version 0.10 passing these as positional arguments will result in an error

mel=np.mean(librosa.feature.melspectrogram(X, sr=sample_rate).T,axis=0)

C:\Users\saite\AppData\Local\Temp/ipykernel_10124/4098696342.py:16: FutureWarning: Pass y=[ 0.0000000e+00 0.0000000e+00 -3.0517578e-05 … -3.0517578e-05

-3.0517578e-05 -3.0517578e-05] as keyword args. From version 0.10 passing these as positional arguments will result in an error

I am also getting the same error, did you find any fix for this?

Have u solved ur error??

If yes, then please help me out.

I can’t open the file. The error is: “RuntimeError: Error opening ’03-01-02-01-01-01-01.wav’: System error.”

can anyone help me?

i’m getting Name mel is not defined error…could u please send me the executed code & tell me how to solve that error

—————————————————————————

IndexError Traceback (most recent call last)

~\AppData\Local\Temp\ipykernel_19764\3618460512.py in

1 #DataFlair – Split the dataset

—-> 2 x_train,x_test,y_train,y_test=load_data(test_size=0.25)

~\AppData\Local\Temp\ipykernel_19764\1716210230.py in load_data(test_size)

4 for file in glob.glob(r”C:\Users\Ayesha\OneDrive\Desktop\speech”):

5 file_name=os.path.basename(file)

—-> 6 emotion=emotions[file_name.split(“-“)[3]]

7 if emotion not in observed_emotions:

8 continue

IndexError: list index out of range

PLEASE HELP