Python Chatbot Project – Learn to build your first chatbot using NLTK & Keras


Hey Siri, What’s the meaning of Life?

As per all the evidence, it’s chocolate for you.

As soon as I heard this reply from Siri, I knew I had found a perfect partner to savour my hours of solitude. From stupid questions to some pretty serious advice, Siri has always been there for me.

How amazing it is to tell someone anything and everything and not be judged at all! It is a top-class feeling, and that is the beauty of a chatbot.

This is the 9th project in DataFlair's series of 20 Python projects. Make sure to bookmark the other interesting projects:

- Fake News Detection Python Project

- Parkinson’s Disease Detection Python Project

- Color Detection Python Project

- Speech Emotion Recognition Python Project

- Breast Cancer Classification Python Project

- Age and Gender Detection Python Project

- Handwritten Digit Recognition Python Project

- Chatbot Python Project

- Driver Drowsiness Detection Python Project

- Traffic Signs Recognition Python Project

- Image Caption Generator Python Project

What is a Chatbot?

A chatbot is an intelligent piece of software that is capable of communicating and performing actions similar to a human. Chatbots are widely used in customer interaction, marketing on social network sites and instant messaging with clients. Based on how they are built, there are two basic types of chatbot models: retrieval-based and generative models.

1. Retrieval based Chatbots

A retrieval-based chatbot uses predefined input patterns and responses, and then applies some kind of heuristic to select the appropriate response. It is widely used in industry to build goal-oriented chatbots, where we can customize the tone and flow of the chatbot to give our customers the best experience.
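To make "predefined patterns plus a heuristic" concrete, here is a minimal sketch that is not part of the DataFlair project: it picks the intent whose pattern shares the most words with the user's message. The intents, responses and the word-overlap scoring rule are all hypothetical; the project below replaces this hand-written heuristic with a trained classifier.

```python
import random

# Hypothetical predefined intents: each has input patterns and canned responses.
INTENTS = [
    {"tag": "greeting", "patterns": ["hi", "hello there", "good day"],
     "responses": ["Hello!", "Hi there, how can I help?"]},
    {"tag": "goodbye", "patterns": ["bye", "see you later"],
     "responses": ["Goodbye!", "See you soon."]},
]


def overlap_score(message, pattern):
    """Heuristic: number of lowercase words shared by the message and a pattern."""
    return len(set(message.lower().split()) & set(pattern.lower().split()))


def best_intent(message, intents=INTENTS):
    """Return the tag of the best-overlapping pattern, or None if nothing overlaps."""
    best_tag, best_score = None, 0
    for intent in intents:
        for pattern in intent["patterns"]:
            score = overlap_score(message, pattern)
            if score > best_score:
                best_tag, best_score = intent["tag"], score
    return best_tag


def respond(message, intents=INTENTS):
    """Return a random predefined response for the matched intent, or a fallback."""
    tag = best_intent(message, intents)
    if tag is None:
        return "Sorry, I don't understand."
    for intent in intents:
        if intent["tag"] == tag:
            return random.choice(intent["responses"])


print(best_intent("Hello there"))  # greeting
print(respond("what is this"))     # Sorry, I don't understand.
```

Exact word overlap is deliberately crude (it ignores punctuation, synonyms and word forms), which is why the project tokenizes, lemmatizes and lets a neural network score the intents instead.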

2. Generative based Chatbots

Generative models are not based on predefined responses.

They are based on sequence-to-sequence (seq2seq) neural networks, the same idea as machine translation. In machine translation we translate source text from one language to another; here, we transform an input into an output. This approach needs a large amount of data and is based on deep neural networks.

About the Python Project – Chatbot

In this Python project with source code, we are going to build a chatbot using deep learning techniques. The chatbot will be trained on a dataset that contains categories (intents), patterns and responses. We use a feed-forward neural network built from fully connected (Dense) layers to classify which category the user's message belongs to, and then give a random response from that category's list of responses.

Let’s create a retrieval based chatbot using NLTK, Keras, Python, etc.

Download Chatbot Code & Dataset

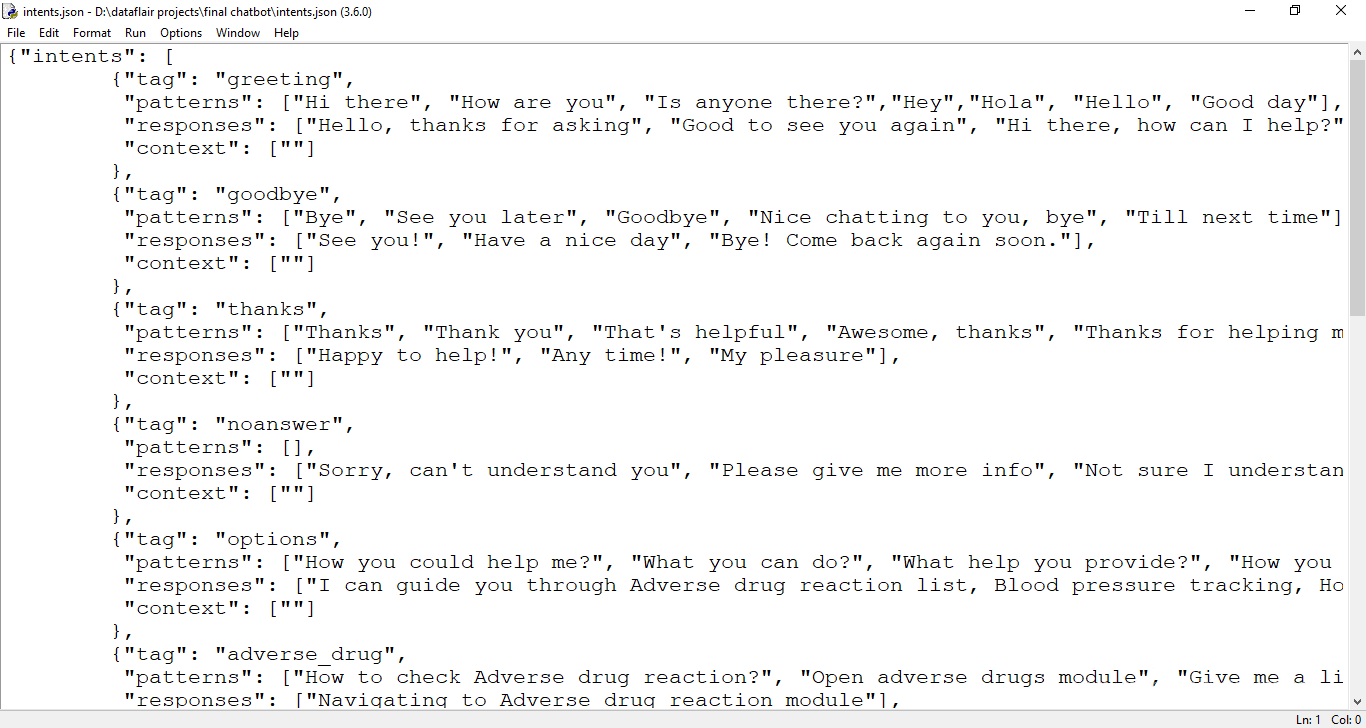

The dataset we will be using is ‘intents.json’. This is a JSON file that contains the patterns we need to find and the responses we want to return to the user.

Please download python chatbot code & dataset from the following link: Python Chatbot Code & Dataset

Prerequisites

The project requires good knowledge of Python, Keras, and natural language processing with NLTK. Along with them, we will use some helper modules, which you can install with pip:

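```
pip install tensorflow keras nltk
python -m nltk.downloader punkt wordnet
```

pickle ships with Python's standard library, so it does not need to be installed. The second command downloads the tokenizer and WordNet data used by nltk.word_tokenize() and WordNetLemmatizer; without it, the scripts stop with a "Resource punkt not found" LookupError. Recent NLTK releases may ask for the punkt_tab resource as well.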

How to Make Chatbot in Python?

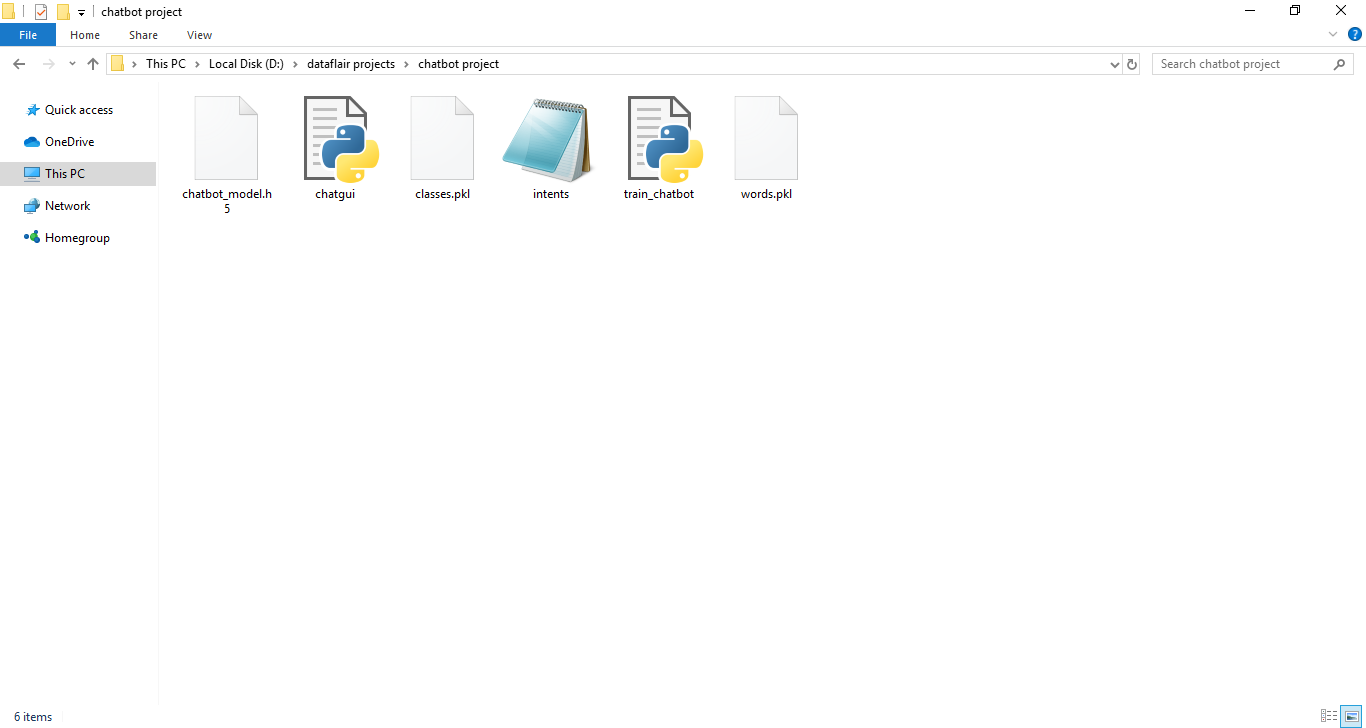

Now we are going to build the chatbot in Python, but first let us look at the file structure and the files we will be creating:

- intents.json – The data file that holds the predefined patterns and responses.

- train_chatbot.py – The Python script that builds the model and trains our chatbot.

- words.pkl – A pickle file that stores our vocabulary (the list of words) as a Python object.

- classes.pkl – A pickle file that stores the list of categories (intents).

- chatbot_model.h5 – The trained model, which contains the model architecture and the weights of the neurons.

- chatgui.py – The Python script that implements the GUI for our chatbot, so users can easily interact with the bot.

Here are the 5 steps to create a chatbot in Python from scratch:

- Import and load the data file

- Preprocess data

- Create training and testing data

- Build the model

- Predict the response

1. Import and load the data file

First, create a file named train_chatbot.py. We import the necessary packages for our chatbot and initialize the variables we will use in our Python project.

The data file is in JSON format, so we use the json package to parse it into Python.

```python
import json
import pickle
import random

import nltk
import numpy as np
from nltk.stem import WordNetLemmatizer
from keras.models import Sequential
from keras.layers import Dense, Dropout
from keras.optimizers import SGD

lemmatizer = WordNetLemmatizer()

words = []
classes = []
documents = []
ignore_words = ['?', '!']

# Load the intents file (tags, patterns and responses)
with open('intents.json') as data_file:
    intents = json.load(data_file)
```

Each entry in the file's "intents" list has a tag, a list of patterns and a list of responses.
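The article originally showed the file as an image. As a rough guide, the code in this project reads three keys from each entry of the top-level "intents" list: "tag", "patterns" and "responses". The sketch below writes and reads back a tiny hypothetical file with that shape; the tags and sentences are invented, and the real dataset contains more intents (plus a "context" field that this code does not use).

```python
import json
import os
import tempfile

# Hypothetical, minimal stand-in for intents.json; only the keys match the project.
sample = {
    "intents": [
        {"tag": "greeting",
         "patterns": ["Hi", "How are you", "Is anyone there?"],
         "responses": ["Hello!", "Hi there, how can I help?"]},
        {"tag": "goodbye",
         "patterns": ["Bye", "See you later"],
         "responses": ["See you!", "Have a nice day"]},
    ]
}

# Write the sample to a temporary file, then load it back with the json module.
path = os.path.join(tempfile.mkdtemp(), "intents.json")
with open(path, "w", encoding="utf-8") as f:
    json.dump(sample, f, indent=2)

with open(path, encoding="utf-8") as f:
    intents = json.load(f)

for intent in intents["intents"]:
    print(intent["tag"], len(intent["patterns"]), "patterns")
```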

2. Preprocess data

When working with text data, we need to perform various preprocessing steps before we build a machine learning or deep learning model. Which operations we apply depends on the requirements.

Tokenizing is the first and most basic thing you can do with text data: it breaks the whole text into small parts such as words.

Here we iterate through the patterns, tokenize each sentence with the nltk.word_tokenize() function and append each word to the words list. We also store each tokenized pattern with its tag in documents, and build a list of classes for our tags.

```python
for intent in intents['intents']:
    for pattern in intent['patterns']:
        # tokenize each word
        w = nltk.word_tokenize(pattern)
        words.extend(w)
        # add documents in the corpus
        documents.append((w, intent['tag']))
        # add to our classes list
        if intent['tag'] not in classes:
            classes.append(intent['tag'])
```

Now we will lemmatize each word and remove duplicate words from the list. Lemmatizing converts a word into its lemma (dictionary) form, for example "cars" into "car". We then create pickle files to store the Python objects we will use while predicting.

```python
# lemmatize, lower each word and remove duplicates
words = [lemmatizer.lemmatize(w.lower()) for w in words if w not in ignore_words]
words = sorted(set(words))
# sort classes
classes = sorted(set(classes))
# documents = combination between patterns and intents
print(len(documents), "documents")
# classes = intents
print(len(classes), "classes", classes)
# words = all words, vocabulary
print(len(words), "unique lemmatized words", words)

with open('words.pkl', 'wb') as f:
    pickle.dump(words, f)
with open('classes.pkl', 'wb') as f:
    pickle.dump(classes, f)
```

3. Create training and testing data

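Now we will create the training data, in which we provide the input and the output. The input is a pattern and the output is the class that pattern belongs to. A neural network works with numbers rather than text, so each pattern becomes a bag-of-words vector (one 0/1 slot per vocabulary word) and each class becomes a one-hot vector. Note that the code only builds a training set; no separate test set is held out.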
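As a toy illustration of this encoding (the vocabulary, classes and patterns below are made up, and lemmatization is skipped), each pattern becomes a 0/1 vector over the sorted vocabulary and its tag becomes a one-hot vector over the sorted classes:

```python
import numpy as np

# Hypothetical vocabulary and classes, already sorted as in train_chatbot.py.
words = ["are", "bye", "hello", "how", "you"]
classes = ["goodbye", "greeting"]


def bag_of_words(pattern_words, vocabulary):
    """1 at position i if vocabulary[i] occurs in the pattern, else 0."""
    return [1 if w in pattern_words else 0 for w in vocabulary]


def one_hot(tag, class_list):
    """All zeros except a 1 at the index of the pattern's tag."""
    row = [0] * len(class_list)
    row[class_list.index(tag)] = 1
    return row


documents = [(["how", "are", "you"], "greeting"), (["bye"], "goodbye")]
train_x = np.array([bag_of_words(p, words) for p, _ in documents])
train_y = np.array([one_hot(t, classes) for _, t in documents])

print(train_x)  # [[1 0 0 1 1]
                #  [0 1 0 0 0]]
print(train_y)  # [[0 1]
                #  [1 0]]
```

Building train_x and train_y as two separate arrays also sidesteps a NumPy pitfall: calling np.array on a list of [bag, output_row] pairs of different lengths raises a ValueError in NumPy 1.24 and later.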

```python
# create our training data
training = []
# create an empty array for our output
output_empty = [0] * len(classes)
# training set, bag of words for each sentence
for doc in documents:
    # list of tokenized words for the pattern
    pattern_words = doc[0]
    # lemmatize each word - create base word, in attempt to represent related words
    pattern_words = [lemmatizer.lemmatize(word.lower()) for word in pattern_words]
    # bag of words: 1 if the vocabulary word appears in the current pattern, else 0
    bag = [1 if w in pattern_words else 0 for w in words]
    # output is a '0' for each tag and '1' for current tag (for each pattern)
    output_row = list(output_empty)
    output_row[classes.index(doc[1])] = 1
    training.append([bag, output_row])

# shuffle our features
random.shuffle(training)
# X - patterns (bag of words), Y - intents (one-hot). Build the two arrays separately:
# np.array(training) fails in NumPy 1.24+ because bag and output_row differ in length.
train_x = np.array([row[0] for row in training])
train_y = np.array([row[1] for row in training])
print("Training data created")
```

4. Build the model

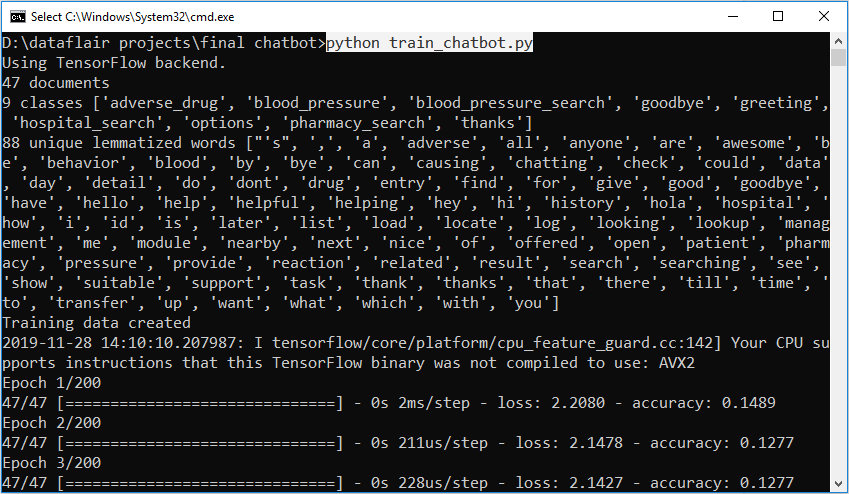

With the training data ready, we now build a deep neural network with 3 layers using the Keras Sequential API. After training for 200 epochs, the model reached 100% accuracy on the training data (there is no held-out test set, so this figure does not show how well it generalizes to new messages). We then save the model as chatbot_model.h5.
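To show what these three Dense layers compute at prediction time, here is a NumPy sketch of the same forward pass with random, untrained weights. The sizes 88 (vocabulary) and 9 (intents) are assumptions chosen for illustration, and Dropout is omitted because Keras only applies it during training.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, n_classes = 88, 9  # assumed sizes, for illustration only

# Random weights standing in for trained ones: input -> 128 -> 64 -> n_classes.
W1, b1 = rng.normal(0, 0.1, (vocab_size, 128)), np.zeros(128)
W2, b2 = rng.normal(0, 0.1, (128, 64)), np.zeros(64)
W3, b3 = rng.normal(0, 0.1, (64, n_classes)), np.zeros(n_classes)


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def forward(x):
    """Dense(128, relu) -> Dense(64, relu) -> Dense(n_classes, softmax)."""
    h1 = relu(x @ W1 + b1)
    h2 = relu(h1 @ W2 + b2)
    return softmax(h2 @ W3 + b3)


bag = np.zeros((1, vocab_size))
bag[0, [3, 17, 42]] = 1  # a made-up bag-of-words input
probs = forward(bag)
print(probs.shape)  # (1, 9); the probabilities sum to 1 up to rounding

# Trainable parameters per Dense layer: inputs * units + units (biases).
n_params = sum(W.size + b.size for W, b in [(W1, b1), (W2, b2), (W3, b3)])
print(n_params)  # 20233 = 11392 + 8256 + 585
```

The input width of the first layer is fixed by the vocabulary size at training time, which is why the prediction script must build its bag-of-words vectors from the same words.pkl.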

```python
# Create model - 3 layers. First layer 128 neurons, second layer 64 neurons and 3rd output layer contains number of neurons
# equal to number of intents to predict output intent with softmax
model = Sequential()
model.add(Dense(128, input_shape=(len(train_x[0]),), activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(64, activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(len(train_y[0]), activation='softmax'))

# Compile model. Stochastic gradient descent with Nesterov accelerated gradient gives good results for this model.
# Recent Keras versions expect learning_rate (not lr) and no longer accept the old decay argument.
sgd = SGD(learning_rate=0.01, momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# fitting and saving the model
hist = model.fit(train_x, train_y, epochs=200, batch_size=5, verbose=1)
model.save('chatbot_model.h5')
print("model created")
```

5. Predict the response (Graphical User Interface)

To classify the user's messages and generate the bot's responses, let us create a new file, chatgui.py.

We will load the trained model and build a graphical user interface that shows the bot's responses. The model only tells us which class (intent) a message belongs to, so we will implement some functions that identify the class and then retrieve a random response from that intent's list of responses.

Again, we import the necessary packages and load the words.pkl and classes.pkl pickle files that we created when training the model:

```python
import json
import pickle
import random

import nltk
import numpy as np
from nltk.stem import WordNetLemmatizer
from keras.models import load_model

lemmatizer = WordNetLemmatizer()
model = load_model('chatbot_model.h5')

with open('intents.json') as f:
    intents = json.load(f)
with open('words.pkl', 'rb') as f:
    words = pickle.load(f)
with open('classes.pkl', 'rb') as f:
    classes = pickle.load(f)
```

To predict the class, we need to provide input in the same format as we did during training. So we will create some functions that perform the text preprocessing and then predict the class.
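Several readers in the comments report `ValueError: ... expected axis -1 of input shape to have value 43 but received input with shape (None, 88)`. That message means the bag-of-words vector built from words.pkl has a different length from the input the saved model was trained on, which typically happens when words.pkl and chatbot_model.h5 come from different training runs or different versions of intents.json. A minimal guard, assuming you pass it the model's input width (for example `model.input_shape[-1]` for the Sequential model above), fails early with a clearer message; `check_vocabulary` is a hypothetical helper, not part of the original code:

```python
def check_vocabulary(words, expected_size):
    """Raise if the pickled vocabulary does not match the model's input width.

    expected_size is assumed to be model.input_shape[-1] for the
    Sequential model built in train_chatbot.py.
    """
    if len(words) != expected_size:
        raise ValueError(
            f"words.pkl has {len(words)} words but the model expects "
            f"{expected_size} inputs; rerun train_chatbot.py so both files "
            "come from the same training run."
        )


# Usage in chatgui.py, right after loading the model and the pickles:
# check_vocabulary(words, model.input_shape[-1])
```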

```python
def clean_up_sentence(sentence):
    # tokenize the pattern - split words into array
    sentence_words = nltk.word_tokenize(sentence)
    # lemmatize each word - reduce it to its base form, as during training
    sentence_words = [lemmatizer.lemmatize(word.lower()) for word in sentence_words]
    return sentence_words

# return bag of words array: 0 or 1 for each word in the bag that exists in the sentence
def bow(sentence, words, show_details=True):
    # tokenize the pattern
    sentence_words = clean_up_sentence(sentence)
    # bag of words - vector with one slot per vocabulary word
    bag = [0] * len(words)
    for s in sentence_words:
        for i, w in enumerate(words):
            if w == s:
                # assign 1 if current word is in the vocabulary position
                bag[i] = 1
                if show_details:
                    print("found in bag: %s" % w)
    return np.array(bag)

def predict_class(sentence, model):
    p = bow(sentence, words, show_details=False)
    res = model.predict(np.array([p]))[0]
    # filter out predictions below a threshold
    ERROR_THRESHOLD = 0.25
    results = [[i, r] for i, r in enumerate(res) if r > ERROR_THRESHOLD]
    # sort by strength of probability
    results.sort(key=lambda x: x[1], reverse=True)
    return_list = []
    for r in results:
        return_list.append({"intent": classes[r[0]], "probability": str(r[1])})
    return return_list
```

After predicting the class, we pick a random response from the matching intent.
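The threshold filter inside predict_class can be tried without a trained model by feeding it a made-up probability vector in place of `model.predict(...)[0]`. The class names and numbers below are hypothetical; the second call shows that when no class scores above 0.25 the list comes back empty, so the response step has to handle that case.

```python
import numpy as np

ERROR_THRESHOLD = 0.25
classes = ["goodbye", "greeting", "hospital_search", "thanks"]  # hypothetical tags


def rank_intents(res, classes, threshold=ERROR_THRESHOLD):
    """Keep classes above the threshold, sorted by probability (highest first)."""
    results = [(i, r) for i, r in enumerate(res) if r > threshold]
    results.sort(key=lambda x: x[1], reverse=True)
    return [{"intent": classes[i], "probability": str(r)} for i, r in results]


res = np.array([0.05, 0.30, 0.60, 0.05])  # stands in for model.predict(...)[0]
print(rank_intents(res, classes))
# [{'intent': 'hospital_search', 'probability': '0.6'}, {'intent': 'greeting', 'probability': '0.3'}]
print(rank_intents(np.array([0.25, 0.25, 0.25, 0.25]), classes))  # []
```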

```python
def getResponse(ints, intents_json):
    # fall back when no intent scored above ERROR_THRESHOLD (empty list)
    if not ints:
        return "Sorry, I don't understand."
    tag = ints[0]['intent']
    list_of_intents = intents_json['intents']
    for i in list_of_intents:
        if i['tag'] == tag:
            result = random.choice(i['responses'])
            break
    return result

def chatbot_response(text):
    ints = predict_class(text, model)
    res = getResponse(ints, intents)
    return res
```

Now we will develop a graphical user interface with Tkinter, which ships with Python's standard library and provides many useful GUI widgets. We take the input message from the user, use the helper functions we created to get the bot's response, and display it in the GUI. Here is the full source code for the GUI.

```python
# Creating GUI with tkinter
from tkinter import *

def send():
    msg = EntryBox.get("1.0", 'end-1c').strip()
    EntryBox.delete("1.0", END)

    if msg != '':
        ChatLog.config(state=NORMAL)
        ChatLog.insert(END, "You: " + msg + '\n\n')
        ChatLog.config(foreground="#442265", font=("Verdana", 12))

        res = chatbot_response(msg)
        ChatLog.insert(END, "Bot: " + res + '\n\n')

        ChatLog.config(state=DISABLED)
        ChatLog.yview(END)

base = Tk()
base.title("Hello")
base.geometry("400x500")
base.resizable(width=FALSE, height=FALSE)

# Create Chat window
ChatLog = Text(base, bd=0, bg="white", height="8", width="50", font="Arial")
ChatLog.config(state=DISABLED)

# Bind scrollbar to Chat window
scrollbar = Scrollbar(base, command=ChatLog.yview, cursor="heart")
ChatLog['yscrollcommand'] = scrollbar.set

# Create Button to send message
SendButton = Button(base, font=("Verdana", 12, 'bold'), text="Send", width="12", height=5,
                    bd=0, bg="#32de97", activebackground="#3c9d9b", fg='#ffffff',
                    command=send)

# Create the box to enter message
EntryBox = Text(base, bd=0, bg="white", width="29", height="5", font="Arial")
# EntryBox.bind("<Return>", send)

# Place all components on the screen
scrollbar.place(x=376, y=6, height=386)
ChatLog.place(x=6, y=6, height=386, width=370)
EntryBox.place(x=128, y=401, height=90, width=265)
SendButton.place(x=6, y=401, height=90)

base.mainloop()
```

6. Run the chatbot

To run the chatbot, we have two main files: train_chatbot.py and chatgui.py.

First, train the model by running this command in the terminal:

```
python train_chatbot.py
```

If training finishes without errors, the model has been created successfully. To run the app, we then run the second file:

```
python chatgui.py
```

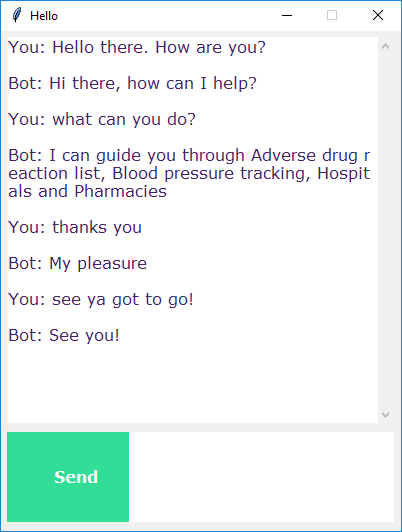

The program will open up a GUI window within a few seconds. With the GUI you can easily chat with the bot.
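The GUI leaves `EntryBox.bind("<Return>", send)` commented out, so only the Send button submits a message (a reader asks about this in the comments). Binding `send` directly fails because Tkinter passes an event argument to bound callbacks, while send() takes none. One way around it, sketched here as an assumption rather than the article's code, is a small hypothetical wrapper that ignores the event and returns "break", which tells Tkinter to skip the Text widget's default Return handling so no stray newline is left in the entry box:

```python
def make_return_handler(send_fn):
    """Wrap a no-argument send function for use with widget.bind("<Return>", ...)."""

    def on_return(event):
        send_fn()        # reuse the existing send() logic; the event is ignored
        return "break"   # stop the Text widget's default newline insertion

    return on_return


# In chatgui.py, after EntryBox and send() are defined:
# EntryBox.bind("<Return>", make_return_handler(send))
```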


Summary

In this Python data science project, we learned about chatbots and implemented a deep learning version of a retrieval-based chatbot in Python. You can customize the intents data to your business requirements and retrain the chatbot on it. Chatbots are used everywhere, and many businesses are looking to add bots to their workflows.

I hope you will practice by customizing your own chatbot in Python, and don't forget to show us your work. If you found the article useful, do share the project with your friends and colleagues.


Whatever I write, I get the answer "Please provide hospital name or location". How can I fix this error?

Same here!!

It can be fixed either by training the model on a larger dataset or by increasing the error threshold, for example to 0.75, and then adding an if/else condition for when the result list is empty. Finally, use try/except in getResponse so that for any user input that doesn't match a pattern, the bot replies "I don't understand".

Hello, thank you for the great project. I would like to code exactly this: set the threshold higher and add something like "I don't understand, please rephrase your question". Could you provide the code for it?

Thank you very much

Hi

I could run the chatbot successfully. However, my intents.json file has several intents, under different tags, that contain the word "entity". When I type just the word "entity" in the GUI, it finds something odd. How can I find all the intents that contain this word just by typing "entity"?

Yes, you can see which intents match a word provided by the user by adding print(return_list) in the predict_class function.

Hi

Thank you very much for your amazing project.

I could run the chatbot successfully, but whatever message I send from the Tkinter window, the bot answers "Bot: Please mention your complaint, we will reach you and sorry for any inconvenience caused".

How can I resolve this problem?

It can be fixed either by training the model on a larger dataset or by increasing the error threshold, for example to 0.75, and then adding an if/else condition for when the result list is empty. Finally, use try/except in getResponse so that for any user input that doesn't match a pattern, the bot replies "I don't understand".

When we ran the code and asked the chatbot "hello there, how are you?", it responded "please provide hospital name or location".

We can't understand why this problem is arising. Can you help, please?

Hi Aashi, I ran the code as instructed above and asked the chatbot the same "hello there, how are you?", and it responded "Bot: Hi there, how can I help?". A chatbot responds to anything by relating it to the associated patterns, but it cannot go beyond those patterns. Do check your dataset to see which response is allocated to which pattern.

Hey, can you please share your code with me? My code isn't working like that.

How can I contact you?

I am facing this error in chatgui.py:

```
Exception in Tkinter callback
Traceback (most recent call last):
  File "C:\Users\naman\anaconda3\lib\tkinter\__init__.py", line 1705, in __call__
    return self.func(*args)
  File "", line 14, in send
    res = chatbot_response(msg)
  File "", line 2, in chatbot_response
    ints = predict_class(msg, model)
  File "", line 3, in predict_class
    p = bow(sentence, words,show_details=False)
  File "", line 7, in bow
    for s in sentence_words():
TypeError: 'list' object is not callable
```

solved it, found the error

Hello, first of all, thanks for this amazing project, but I have encountered one problem:

```
PS C:\Users\asus> & C:/Python37/python.exe "c:/Users/asus/Desktop/Python Playing Codes/Chatbot (Chan Yeol)/chatgui.py"
2021-02-19 16:59:26.013068: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found
2021-02-19 16:59:26.013344: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.
2021-02-19 16:59:28.934765: I tensorflow/compiler/jit/xla_cpu_device.cc:41] Not creating XLA devices, tf_xla_enable_xla_devices not set
2021-02-19 16:59:28.936143: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'nvcuda.dll'; dlerror: nvcuda.dll not found
2021-02-19 16:59:28.936656: W tensorflow/stream_executor/cuda/cuda_driver.cc:326] failed call to cuInit: UNKNOWN ERROR (303)
2021-02-19 16:59:28.955530: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:169] retrieving CUDA diagnostic information for host: MrMagicTaha
2021-02-19 16:59:28.963326: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:176] hostname: MrMagicTaha
2021-02-19 16:59:28.963997: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2
To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
2021-02-19 16:59:28.966051: I tensorflow/compiler/jit/xla_gpu_device.cc:99] Not creating XLA devices, tf_xla_enable_xla_devices not set
2021-02-19 16:59:37.654913: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:116] None of the MLIR optimization passes are enabled (registered 2)
Exception in Tkinter callback
Traceback (most recent call last):
  File "C:\Python37\lib\tkinter\__init__.py", line 1705, in __call__
    return self.func(*args)
  File "c:/Users/asus/Desktop/Python Playing Codes/Chatbot (Chan Yeol)/chatgui.py", line 81, in send
    res = chatbot_response(msg)
  File "c:/Users/asus/Desktop/Python Playing Codes/Chatbot (Chan Yeol)/chatgui.py", line 62, in chatbot_response
    ints = predict_class(msg, model)
  File "c:/Users/asus/Desktop/Python Playing Codes/Chatbot (Chan Yeol)/chatgui.py", line 42, in predict_class
    res = model.predict(np.array([p]))[0]
  File "C:\Python37\lib\site-packages\tensorflow\python\keras\engine\training.py", line 1629, in predict
    tmp_batch_outputs = self.predict_function(iterator)
  File "C:\Python37\lib\site-packages\tensorflow\python\eager\def_function.py", line 828, in __call__
    result = self._call(*args, **kwds)
  File "C:\Python37\lib\site-packages\tensorflow\python\eager\def_function.py", line 871, in _call
    self._initialize(args, kwds, add_initializers_to=initializers)
  File "C:\Python37\lib\site-packages\tensorflow\python\eager\def_function.py", line 726, in _initialize
    *args, **kwds))
  File "C:\Python37\lib\site-packages\tensorflow\python\eager\function.py", line 2969, in _get_concrete_function_internal_garbage_collected
    graph_function, _ = self._maybe_define_function(args, kwargs)
  File "C:\Python37\lib\site-packages\tensorflow\python\eager\function.py", line 3361, in _maybe_define_function
    graph_function = self._create_graph_function(args, kwargs)
  File "C:\Python37\lib\site-packages\tensorflow\python\eager\function.py", line 3206, in _create_graph_function
    capture_by_value=self._capture_by_value),
  File "C:\Python37\lib\site-packages\tensorflow\python\framework\func_graph.py", line 990, in func_graph_from_py_func
    func_outputs = python_func(*func_args, **func_kwargs)
  File "C:\Python37\lib\site-packages\tensorflow\python\eager\def_function.py", line 634, in wrapped_fn
    out = weak_wrapped_fn().__wrapped__(*args, **kwds)
  File "C:\Python37\lib\site-packages\tensorflow\python\framework\func_graph.py", line 977, in wrapper
    raise e.ag_error_metadata.to_exception(e)
ValueError: in user code:

    C:\Python37\lib\site-packages\tensorflow\python\keras\engine\training.py:1478 predict_function *
        return step_function(self, iterator)
    C:\Python37\lib\site-packages\tensorflow\python\keras\engine\training.py:1468 step_function **
        outputs = model.distribute_strategy.run(run_step, args=(data,))
    C:\Python37\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:1259 run
        return self._extended.call_for_each_replica(fn, args=args, kwargs=kwargs)
    C:\Python37\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:2730 call_for_each_replica
        return self._call_for_each_replica(fn, args, kwargs)
    C:\Python37\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:3417 _call_for_each_replica
        outputs = model.predict_step(data)
    C:\Python37\lib\site-packages\tensorflow\python\keras\engine\training.py:1434 predict_step
        return self(x, training=False)
    C:\Python37\lib\site-packages\tensorflow\python\keras\engine\base_layer.py:998 __call__
        input_spec.assert_input_compatibility(self.input_spec, inputs, self.name)
    C:\Python37\lib\site-packages\tensorflow\python\keras\engine\input_spec.py:259 assert_input_compatibility
        ' but received input with shape ' + display_shape(x.shape))

    ValueError: Input 0 of layer sequential is incompatible with the layer: expected axis -1 of input shape to have value 43 but received input with shape (None, 88)
```

I've tried many other ways, but I still get the same error. How can I solve this problem?

still not working :'(

Please post the error message and complete stacktrace, we will look into the issue.

Hello!! Thank you so much for sharing this project. I have a question though… I noticed that there is a “context” field in the json file. I want to make use of this context field so that the chatbot can answer a question related to the previous messages. How should I change the code to do this?

Thanks!!!

I am new to programming in general and I have decided to program a chatbot to learn Python. I am going to be using the IntelliJ IDEA environment for Python, and I understand how to make the initial .py file in this tutorial but I am completely lost in the steps afterward. Will the free Python course offered here get me up to speed to be able to understand this tutorial and implement it? Thanks.

If you are new to Python Programming, it is recommended to finish the python course then work on a few python projects which are available with the course. Once you feel confident then start the chatbot project.

Thanks!

Hey, nice tutorial. Can you suggest how to handle the case where the keyword is not in the training data? I want the bot to return "I don't understand". Where should I update the code?

The code and the explanation are really awesome and simple to understand. Thank you so much for sharing. Once the chatbot is running, I need to click Send every time; hitting Enter doesn't seem to work.

Hey, I get this error while running chatgui.py. The GUI window pops up, but after sending a message I get this error. Please help me sort this out.

```
Exception in Tkinter callback
Traceback (most recent call last):
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\tkinter\__init__.py", line 1883, in __call__
    return self.func(*args)
  File "", line 11, in send
    res = chatbot_response(msg)
  File "", line 54, in chatbot_response
    ints = predict_class(text, model)
  File "", line 36, in predict_class
    res = model.predict(np.array([p]))[0]
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\keras\engine\training.py", line 1629, in predict
    tmp_batch_outputs = self.predict_function(iterator)
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\eager\def_function.py", line 828, in __call__
    result = self._call(*args, **kwds)
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\eager\def_function.py", line 862, in _call
    results = self._stateful_fn(*args, **kwds)
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\eager\function.py", line 2941, in __call__
    filtered_flat_args) = self._maybe_define_function(args, kwargs)
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\eager\function.py", line 3357, in _maybe_define_function
    return self._define_function_with_shape_relaxation(
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\eager\function.py", line 3279, in _define_function_with_shape_relaxation
    graph_function = self._create_graph_function(
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\eager\function.py", line 3196, in _create_graph_function
    func_graph_module.func_graph_from_py_func(
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\framework\func_graph.py", line 990, in func_graph_from_py_func
    func_outputs = python_func(*func_args, **func_kwargs)
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\eager\def_function.py", line 634, in wrapped_fn
    out = weak_wrapped_fn().__wrapped__(*args, **kwds)
  File "C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\framework\func_graph.py", line 977, in wrapper
    raise e.ag_error_metadata.to_exception(e)
ValueError: in user code:

    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\keras\engine\training.py:1478 predict_function *
        return step_function(self, iterator)
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\keras\engine\training.py:1468 step_function **
        outputs = model.distribute_strategy.run(run_step, args=(data,))
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:1259 run
        return self._extended.call_for_each_replica(fn, args=args, kwargs=kwargs)
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:2730 call_for_each_replica
        return self._call_for_each_replica(fn, args, kwargs)
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:3417 _call_for_each_replica
        return fn(*args, **kwargs)
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\keras\engine\training.py:1461 run_step **
        outputs = model.predict_step(data)
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\keras\engine\training.py:1434 predict_step
        return self(x, training=False)
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\keras\engine\base_layer.py:998 __call__
        input_spec.assert_input_compatibility(self.input_spec, inputs, self.name)
    C:\Users\deepi\.conda\anacondaInstallation\lib\site-packages\tensorflow\python\keras\engine\input_spec.py:255 assert_input_compatibility
        raise ValueError(

    ValueError: Input 0 of layer sequential is incompatible with the layer: expected axis -1 of input shape to have value 48 but received input with shape (None, 88)
```

To run this project, which libraries and which versions do I have to install, and which IDE should I use?

Thanks for this wonderful project. How can it fetch results from a database as well?

I'm getting this error when I run chatgui.py. Please help me resolve it.

```
2021-03-29 13:09:02.560793: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found
2021-03-29 13:09:02.595485: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.
2021-03-29 13:09:48.634332: I tensorflow/compiler/jit/xla_cpu_device.cc:41] Not creating XLA devices, tf_xla_enable_xla_devices not set
2021-03-29 13:09:48.798792: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'nvcuda.dll'; dlerror: nvcuda.dll not found
2021-03-29 13:09:48.809261: W tensorflow/stream_executor/cuda/cuda_driver.cc:326] failed call to cuInit: UNKNOWN ERROR (303)
2021-03-29 13:09:49.021902: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:169] retrieving CUDA diagnostic information for host: LAPTOP-9N7987FL
2021-03-29 13:09:49.022530: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:176] hostname: LAPTOP-9N7987FL
2021-03-29 13:09:49.144278: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2
To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
2021-03-29 13:09:49.184669: I tensorflow/compiler/jit/xla_gpu_device.cc:99] Not creating XLA devices, tf_xla_enable_xla_devices not set
Exception in Tkinter callback
Traceback (most recent call last):
  File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\tkinter\__init__.py", line 1702, in __call__
    return self.func(*args)
  File "chatgui.py", line 81, in send
    res = chatbot_response(msg)
  File "chatgui.py", line 62, in chatbot_response
    ints = predict_class(msg, model)
  File "chatgui.py", line 41, in predict_class
    p = bow(sentence, words,show_details=False)
  File "chatgui.py", line 27, in bow
    sentence_words = clean_up_sentence(sentence)
  File "chatgui.py", line 18, in clean_up_sentence
    sentence_words = nltk.word_tokenize(sentence)
  File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\tokenize\__init__.py", line 129, in word_tokenize
    sentences = [text] if preserve_line else sent_tokenize(text, language)
  File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\tokenize\__init__.py", line 106, in sent_tokenize
    tokenizer = load("tokenizers/punkt/{0}.pickle".format(language))
  File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\data.py", line 752, in load
    opened_resource = _open(resource_url)
  File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\data.py", line 877, in _open
    return find(path_, path + [""]).open()
  File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\data.py", line 585, in find
    raise LookupError(resource_not_found)
LookupError:
```

I got this error when I ran train_chatbot.py:

2021-03-29 13:29:01.108957: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found

2021-03-29 13:29:01.121060: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

Traceback (most recent call last):

File "train_chatbot.py", line 26, in <module>

w = nltk.word_tokenize(pattern)

File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\tokenize\__init__.py", line 129, in word_tokenize

sentences = [text] if preserve_line else sent_tokenize(text, language)

File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\tokenize\__init__.py", line 106, in sent_tokenize

tokenizer = load("tokenizers/punkt/{0}.pickle".format(language))

File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\data.py", line 752, in load

opened_resource = _open(resource_url)

File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\data.py", line 877, in _open

return find(path_, path + [""]).open()

File "C:\Users\are prasad\AppData\Local\Programs\Python\Python36\lib\site-packages\nltk\data.py", line 585, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource punkt not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('punkt')

For more information see: https://www.nltk.org/data.html

Attempted to load tokenizers/punkt/english.pickle

Searched in:

- 'C:\\Users\\are prasad/nltk_data'

- 'C:\\Users\\are prasad\\AppData\\Local\\Programs\\Python\\Python36\\nltk_data'

- 'C:\\Users\\are prasad\\AppData\\Local\\Programs\\Python\\Python36\\share\\nltk_data'

- 'C:\\Users\\are prasad\\AppData\\Local\\Programs\\Python\\Python36\\lib\\nltk_data'

- 'C:\\Users\\are prasad\\AppData\\Roaming\\nltk_data'

- 'C:\\nltk_data'

- 'D:\\nltk_data'

- 'E:\\nltk_data'

- ''
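The error message above already names the fix: NLTK's tokenizer and corpus data (here the punkt tokenizer, and in a later comment the WordNet corpus) are downloaded separately from the nltk package itself. A small helper can check each resource with nltk.data.find and download only what is missing, once, before train_chatbot.py or chatgui.py runs. This is a sketch: the helper name ensure_nltk_data and the REQUIRED mapping are illustrative, not part of the tutorial.

```python
def ensure_nltk_data(resources, find=None, download=None):
    """Download any NLTK resources that nltk.data.find cannot locate.

    resources maps an nltk.data resource path (e.g. "tokenizers/punkt")
    to the package name passed to nltk.download. find/download default to
    the real NLTK functions; they are parameters only so the logic can be
    tested without network access. Returns the packages that were fetched.
    """
    if find is None or download is None:
        import nltk  # imported lazily so the helper can be tested without NLTK
        find = find or nltk.data.find
        download = download or nltk.download
    fetched = []
    for path, package in resources.items():
        try:
            find(path)  # raises LookupError when the resource is missing
        except LookupError:
            download(package)
            fetched.append(package)
    return fetched


# Resources used by the tutorial's scripts: punkt (for nltk.word_tokenize)
# and WordNet (for WordNetLemmatizer). Newer NLTK releases may ask for
# "punkt_tab" instead of "punkt"; use whatever name the error message prints.
REQUIRED = {"tokenizers/punkt": "punkt", "corpora/wordnet": "wordnet"}

# Run once before training or chatting:
# ensure_nltk_data(REQUIRED)
```

Calling nltk.download('punkt') and nltk.download('wordnet') by hand in a Python shell has the same effect; the helper just avoids re-downloading on every run.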

When I run train_chatbot.py, it shows this error:

Traceback (most recent call last):

File "C:\Users\ABDUL HAIYAN\AppData\Local\Programs\Python\Python38\lib\site-packages\tensorflow\python\pywrap_tensorflow.py", line 64, in <module>

from tensorflow.python._pywrap_tensorflow_internal import *

ImportError: DLL load failed with error code 3221225501 while importing _pywrap_tensorflow_internal

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Users\ABDUL HAIYAN\AppData\Local\Programs\Python\Python38\lib\site-packages\keras\__init__.py", line 3, in <module>

from tensorflow.keras.layers.experimental.preprocessing import RandomRotation

File "C:\Users\ABDUL HAIYAN\AppData\Local\Programs\Python\Python38\lib\site-packages\tensorflow\__init__.py", line 41, in <module>

from tensorflow.python.tools import module_util as _module_util

File "C:\Users\ABDUL HAIYAN\AppData\Local\Programs\Python\Python38\lib\site-packages\tensorflow\python\__init__.py", line 39, in <module>

from tensorflow.python import pywrap_tensorflow as _pywrap_tensorflow

File "C:\Users\ABDUL HAIYAN\AppData\Local\Programs\Python\Python38\lib\site-packages\tensorflow\python\pywrap_tensorflow.py", line 83, in <module>

raise ImportError(msg)

ImportError: Traceback (most recent call last):

File "C:\Users\ABDUL HAIYAN\AppData\Local\Programs\Python\Python38\lib\site-packages\tensorflow\python\pywrap_tensorflow.py", line 64, in <module>

from tensorflow.python._pywrap_tensorflow_internal import *

ImportError: DLL load failed with error code 3221225501 while importing _pywrap_tensorflow_internal

Failed to load the native TensorFlow runtime.

See https://www.tensorflow.org/install/errors

for some common reasons and solutions. Include the entire stack trace

above this error message when asking for help.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Users\ABDUL HAIYAN\Desktop\Programming\Python\Chat Bot\train_chatbot.py", line 8, in <module>

from keras.models import Sequential

File "C:\Users\ABDUL HAIYAN\AppData\Local\Programs\Python\Python38\lib\site-packages\keras\__init__.py", line 5, in <module>

raise ImportError(

ImportError: Keras requires TensorFlow 2.2 or higher. Install TensorFlow via `pip install tensorflow`

Note: I have already installed TensorFlow 2.4.1, and I upgraded it again and tried, but it still shows the same error.

Great article. Just a minor point: the neural network is NOT using an LSTM as claimed in the write-up.

Excellent application – easy to use and follow!

Please help with the “context” field. When I try to link a tag to the previous tag, it does not show me the details.
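The tutorial's chatgui.py only reads each intent's tag and responses, so filling in the "context" field in intents.json has no effect on its own; the code also has to remember which tag matched last. Below is a minimal sketch under one assumed convention: an intent's "context" lists the tag(s) that must have matched just before it, and an empty context such as [""] means "always allowed". The class name ContextTracker is hypothetical, and ranked_tags stands for the intent names produced by predict_class, best first.

```python
import random


class ContextTracker:
    """Hypothetical helper: remembers the last matched tag and treats each
    intent's "context" list as the tags that must immediately precede it."""

    def __init__(self, intents):
        self.by_tag = {intent["tag"]: intent for intent in intents}
        self.last_tag = None

    def respond(self, ranked_tags):
        """Return a response for the best-ranked tag whose context is satisfied, else None."""
        for tag in ranked_tags:
            intent = self.by_tag.get(tag)
            if intent is None:
                continue
            # Empty strings (as in [""]) mean the intent has no context requirement
            required = [c for c in intent.get("context", []) if c]
            if required and self.last_tag not in required:
                continue  # only valid right after one of the required tags
            self.last_tag = tag
            return random.choice(intent["responses"])
        return None
```

With one tracker per conversation, a follow-up intent is only chosen after the intent it depends on; otherwise the next-best tag is tried.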

Hello, at the beginning of the project description you said: “We use a special recurrent neural network (LSTM) to classify which category the user’s message belongs to and then we will give a random response from the list of responses.” I can’t find this part in the code. Please explain it. Thank you.
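The training script builds its model from Dense (fully connected) layers with Dropout between them, so the LSTM mention in the description does not match the code: each message is turned into one fixed-length bag-of-words vector and passed through the layers once, with no recurrent state. The pure-Python sketch below mimics that kind of forward pass (ReLU hidden layer, softmax output) with made-up weights; the function names are illustrative, and Dropout is omitted because Keras only applies it during training.

```python
import math


def relu(z):
    return [max(0.0, v) for v in z]


def softmax(z):
    # Subtract the max for numerical stability before exponentiating
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, biases, activation):
    # weights[j][i] is the weight from input i to output unit j
    z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return activation(z)


def forward(bag, layers):
    """Feed one bag-of-words vector through a stack of Dense layers.

    There is no loop over words and no state carried between calls,
    which is what separates this from a recurrent (LSTM) model.
    """
    out = bag
    for weights, biases, activation in layers:
        out = dense(out, weights, biases, activation)
    return out


# Toy network: 3-word vocabulary -> 2 hidden units (ReLU) -> 2 intents (softmax).
# The weights are made up for illustration.
LAYERS = [
    ([[1.0, -1.0, 0.5], [0.2, 0.3, -0.4]], [0.1, -0.2], relu),
    ([[0.7, -0.3], [-0.5, 0.9]], [0.0, 0.3], softmax),
]

probabilities = forward([1, 0, 1], LAYERS)
```

Because the input is an unordered bag of words, "hello how are you" and "you are how hello" produce the same prediction; an LSTM would instead read the words as a sequence and would need different preprocessing.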

C:\Users\patel\OneDrive\Desktop\chatbot>python train_chatbot.py

2021-05-08 21:15:29.296445: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cudart64_110.dll

Traceback (most recent call last):

File "train_chatbot.py", line 8, in <module>

from keras.models import Sequential

File "C:\Python3\lib\site-packages\keras\__init__.py", line 20, in <module>

from . import initializers

File "C:\Python3\lib\site-packages\keras\initializers\__init__.py", line 124, in <module>

populate_deserializable_objects()

File "C:\Python3\lib\site-packages\keras\initializers\__init__.py", line 49, in populate_deserializable_objects

LOCAL.GENERATED_WITH_V2 = tf.__internal__.tf2.enabled()

AttributeError: module 'tensorflow.compat.v2.__internal__' has no attribute 'tf2'
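The Keras import errors in this thread point at the installation rather than at the chatbot code. The tf.__internal__.tf2 AttributeError just above is commonly reported when the standalone keras package and tensorflow come from different release lines, and the earlier "Keras requires TensorFlow 2.2 or higher" message was raised only because TensorFlow itself failed to load its native DLL. Before reinstalling anything, it helps to see which versions are installed and which import actually fails. A small diagnostic sketch; the function names are illustrative, and importlib.metadata needs Python 3.8 or newer.

```python
from importlib import metadata  # standard library, Python 3.8+


def installed_versions(dists=("tensorflow", "keras", "nltk")):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in dists:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def import_error(module_name):
    """Try importing a module; return None on success or a short error description."""
    try:
        __import__(module_name)
    except Exception as exc:  # ImportError, AttributeError, ... all mean "broken install"
        return f"{type(exc).__name__}: {exc}"
    return None


# Example:
# print(installed_versions())
# print(import_error("tensorflow"), import_error("keras"))
```

If tensorflow imports cleanly but keras does not, one common approach is to align the two versions, or to import Keras through TensorFlow (for example `from tensorflow.keras.models import Sequential`) so that only one package is involved.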

Hi, I am able to run this project, but I have to attach this chatbot to my website, which I made using Flask, and the server does not run Tkinter, so I am not able to integrate it into my website. Can you please suggest a way to integrate it, or help me drop the Tkinter part and run the project using Flask and a JavaScript API?

Please help me; I have to submit this as my major project before the 20th of this month.
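Tkinter opens a desktop window, so it cannot run behind a web server. One way around this is to keep the chatbot_response function from chatgui.py and expose it over HTTP, letting the page's JavaScript send the user's message and display the reply. The sketch below uses the standard library's WSGI support (wsgiref) so it runs without extra packages; Flask is also a WSGI framework, and a Flask route would wrap the same call. The chatbot_response stub, the /chat path, and the "message"/"reply" JSON field names are hypothetical.

```python
import json
from wsgiref.simple_server import make_server


def chatbot_response(msg):
    # Hypothetical stub: replace with the chatbot_response function from chatgui.py
    return "You said: " + msg


def chat_app(environ, start_response):
    """WSGI app: POST /chat with JSON {"message": "..."} returns {"reply": "..."}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/chat":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
    except ValueError:
        length = 0
    body = environ["wsgi.input"].read(length) if length > 0 else b""
    try:
        message = str(json.loads(body or b"{}").get("message", ""))
    except (ValueError, AttributeError):
        message = ""  # malformed or non-object JSON is treated as an empty message
    payload = json.dumps({"reply": chatbot_response(message)}).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(payload)))])
    return [payload]


def serve(host="127.0.0.1", port=8000):
    """Run a local development server (instead of starting the Tkinter mainloop)."""
    with make_server(host, port, chat_app) as httpd:
        httpd.serve_forever()
```

On the browser side, the page could call `fetch("/chat", {method: "POST", headers: {"Content-Type": "application/json"}, body: JSON.stringify({message: text})})` and show the `reply` field of the JSON response.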

When I run train_chatbot.py, I get this type of error:

C:\Users\admin\Desktop\chatbot>python tarin_chatbot.py

python: can't open file 'C:\Users\admin\Desktop\chatbot\tarin_chatbot.py': [Errno 2] No such file or directory

C:\Users\admin\Desktop\chatbot>python train_chatbot.py

2021-05-12 07:23:27.620349: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found

2021-05-12 07:23:27.620619: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

Traceback (most recent call last):

File "C:\Users\admin\AppData\Local\Programs\Python\Python39\lib\site-packages\nltk\corpus\util.py", line 83, in __load

root = nltk.data.find("{}/{}".format(self.subdir, zip_name))

File "C:\Users\admin\AppData\Local\Programs\Python\Python39\lib\site-packages\nltk\data.py", line 583, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource wordnet not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('wordnet')

For more information see: https://www.nltk.org/data.html

Attempted to load corpora/wordnet.zip/wordnet/

Searched in:

- 'C:\\Users\\admin/nltk_data'

- 'C:\\Users\\admin\\AppData\\Local\\Programs\\Python\\Python39\\nltk_data'

- 'C:\\Users\\admin\\AppData\\Local\\Programs\\Python\\Python39\\share\\nltk_data'

- 'C:\\Users\\admin\\AppData\\Local\\Programs\\Python\\Python39\\lib\\nltk_data'

- 'C:\\Users\\admin\\AppData\\Roaming\\nltk_data'

- 'C:\\nltk_data'

- 'D:\\nltk_data'

- 'E:\\nltk_data'

**********************************************************************

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Users\admin\Desktop\chatbot\train_chatbot.py", line 35, in <module>

words = [lemmatizer.lemmatize(w.lower()) for w in words if w not in ignore_words]

File "C:\Users\admin\Desktop\chatbot\train_chatbot.py", line 35, in <listcomp>

words = [lemmatizer.lemmatize(w.lower()) for w in words if w not in ignore_words]

File "C:\Users\admin\AppData\Local\Programs\Python\Python39\lib\site-packages\nltk\stem\wordnet.py", line 38, in lemmatize

lemmas = wordnet._morphy(word, pos)

File "C:\Users\admin\AppData\Local\Programs\Python\Python39\lib\site-packages\nltk\corpus\util.py", line 120, in __getattr__

self.__load()

File "C:\Users\admin\AppData\Local\Programs\Python\Python39\lib\site-packages\nltk\corpus\util.py", line 85, in __load

raise e

File "C:\Users\admin\AppData\Local\Programs\Python\Python39\lib\site-packages\nltk\corpus\util.py", line 80, in __load

root = nltk.data.find("{}/{}".format(self.subdir, self.__name))

File "C:\Users\admin\AppData\Local\Programs\Python\Python39\lib\site-packages\nltk\data.py", line 583, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource wordnet not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('wordnet')

For more information see: https://www.nltk.org/data.html

Attempted to load corpora/wordnet

Searched in:

- 'C:\\Users\\admin/nltk_data'

- 'C:\\Users\\admin\\AppData\\Local\\Programs\\Python\\Python39\\nltk_data'

- 'C:\\Users\\admin\\AppData\\Local\\Programs\\Python\\Python39\\share\\nltk_data'

- 'C:\\Users\\admin\\AppData\\Local\\Programs\\Python\\Python39\\lib\\nltk_data'

- 'C:\\Users\\admin\\AppData\\Roaming\\nltk_data'

- 'C:\\nltk_data'

- 'D:\\nltk_data'

- 'E:\\nltk_data'

**********************************************************************

Then try it in Anaconda; it’s simple to use and includes Jupyter. Upload the related files to Jupyter and run the code, and that’s it. I am done with this project. Thank you to whoever created this simple project.

Hi, I’m kind of new to Python. I wanted to create this project because it seems interesting and I really wanted to see how it works. I did a lot of research and I’m beginning to understand a lot of things. But I have a problem: I don’t really know how to put this project together. Can someone please help me so I can run it and see it work?

Hey, rather than Tkinter, can I use something else for the GUI? The current GUI does not look very good for a real-life project.

Thank you very much for this project. I have a question: I implemented everything and then tested the chatbot a bit. I’ve seen that it is able to classify unseen questions into the correct topic well. A problem that I cannot understand is that when I pass the chatbot an intentionally wrong string, like “AJAJSJSJS” or similar, the model always predicts the first class with a high probability. I would have expected all the classes to get a very low probability (lower than the threshold). Do you have any advice for this problem?

Thank you
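A likely cause: a string like “AJAJSJSJS” contains no word from the training vocabulary, so its bag-of-words vector is all zeros. With an all-zero input, each Dense layer's weighted sum is zero and only the biases pass through, so every such message produces the same fixed output; and because the softmax output always sums to 1, the model cannot answer "none of the above" on its own. One simple guard is to check for an empty bag before calling the model. In this sketch, predict is a hypothetical stand-in for something like `lambda bag: model.predict(np.array([bag]))[0]`, and the 0.25 default threshold is an assumed value; use whatever threshold your predict_class applies.

```python
def bag_of_words(tokens, vocabulary):
    """1/0 vector marking which vocabulary words appear in the (already lemmatized) tokens."""
    present = set(tokens)
    return [1 if word in present else 0 for word in vocabulary]


def classify_or_fallback(tokens, vocabulary, predict, threshold=0.25):
    """Return (class_index, probability) pairs above threshold, best first.

    If none of the tokens is in the vocabulary, the bag is all zeros and the
    network's output would depend only on its biases, so the model is skipped
    and [] is returned to signal "not understood".
    """
    bag = bag_of_words(tokens, vocabulary)
    if not any(bag):
        return []
    probabilities = predict(bag)
    results = [(i, p) for i, p in enumerate(probabilities) if p > threshold]
    results.sort(key=lambda pair: pair[1], reverse=True)
    return results
```

An empty result can then be mapped to a fallback reply such as "Sorry, I don't understand that." instead of a random response from class 0.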

import random

# predict_class and intents come from the tutorial's chatgui.py; this assumes
# predict_class returns (tag, probability) pairs sorted by probability.
def responseOfBot(sentence):
    results = predict_class(sentence)
    if not results:
        # Nothing scored above the threshold
        return "Sorry, I don't understand that."
    # Loop as long as there are matches to process
    while results:
        for i in intents['intents']:
            # Find a tag matching the first result
            if i['tag'] == results[0][0]:
                # A random response from the intent
                return random.choice(i['responses'])
        results.pop(0)
    return "Sorry, I don't understand that."

Hi, thank you very much for the project. I have a question: the classifier classifies user questions well, even unseen ones, finding the correct tag and then picking a response. But I notice that when I intentionally enter a wrong question, a random string like “Akjdasbf” or similar, the classifier always predicts the same class, class 0. I expected all classes to get a very low probability (lower than the threshold). Why does this happen?

Thank you in advance

Hi, thank you very much for the project. I am new to programming in general and have decided to program a chatbot to learn Python. I have a question: you mention using a special neural network (LSTM), but looking at your code I don’t see where it is used. Please help me understand. I hope you reply.