Python based Project – Learn to Build Image Caption Generator with CNN & LSTM

Python course with 57 real-time projects - Learn Python

Project based on Python – Image Caption Generator

You saw an image and your brain can easily tell what the image is about, but can a computer tell what the image is representing? Computer vision researchers worked on this a lot and they considered it impossible until now! With the advancement in Deep learning techniques, availability of huge datasets and computer power, we can build models that can generate captions for an image.

This is what we are going to implement in this Python based project where we will use deep learning techniques of Convolutional Neural Networks and a type of Recurrent Neural Network (LSTM) together.

Below are some of the Python Data Science projects on which you can work later on:

- Fake News Detection Python Project

- Parkinson’s Disease Detection Python Project

- Color Detection Python Project

- Speech Emotion Recognition Python Project

- Breast Cancer Classification Python Project

- Age and Gender Detection Python Project

- Handwritten Digit Recognition Python Project

- Chatbot Python Project

- Driver Drowsiness Detection Python Project

- Traffic Signs Recognition Python Project

- Image Caption Generator Python Project

Now, let’s quickly start the Python based project by defining the image caption generator.

What is Image Caption Generator?

Image caption generator is a task that involves computer vision and natural language processing concepts to recognize the context of an image and describe them in a natural language like English.

Image Caption Generator with CNN – About the Python based Project

The objective of our project is to learn the concepts of a CNN and LSTM model and build a working model of Image caption generator by implementing CNN with LSTM.

In this Python project, we will be implementing the caption generator using CNN (Convolutional Neural Networks) and LSTM (Long short term memory). The image features will be extracted from Xception which is a CNN model trained on the imagenet dataset and then we feed the features into the LSTM model which will be responsible for generating the image captions.

The Dataset of Python based Project

For the image caption generator, we will be using the Flickr_8K dataset. There are also other big datasets like Flickr_30K and MSCOCO dataset but it can take weeks just to train the network so we will be using a small Flickr8k dataset. The advantage of a huge dataset is that we can build better models.

Thanks to Jason Brownlee for providing a direct link to download the dataset (Size: 1GB).

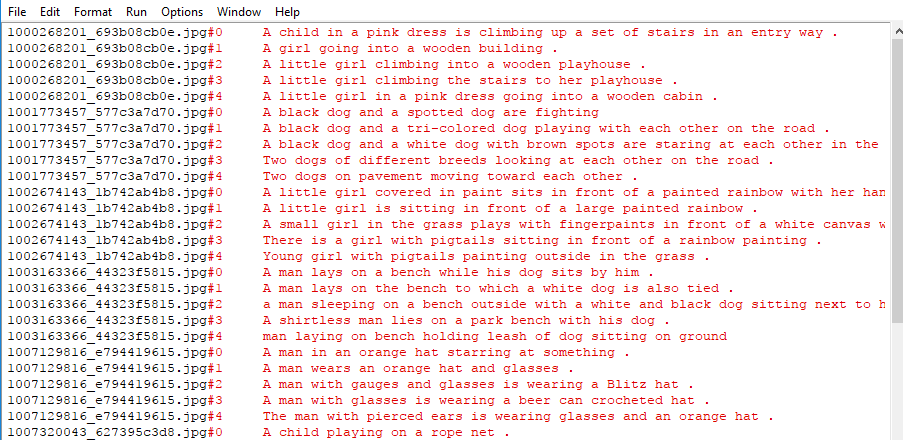

The Flickr_8k_text folder contains file Flickr8k.token which is the main file of our dataset that contains image name and their respective captions separated by newline(“\n”).

Pre-requisites

This project requires good knowledge of Deep learning, Python, working on Jupyter notebooks, Keras library, Numpy, and Natural language processing.

Make sure you have installed all the following necessary libraries:

- pip install tensorflow

- keras

- pillow

- numpy

- tqdm

- jupyterlab

Image Caption Generator – Python based Project

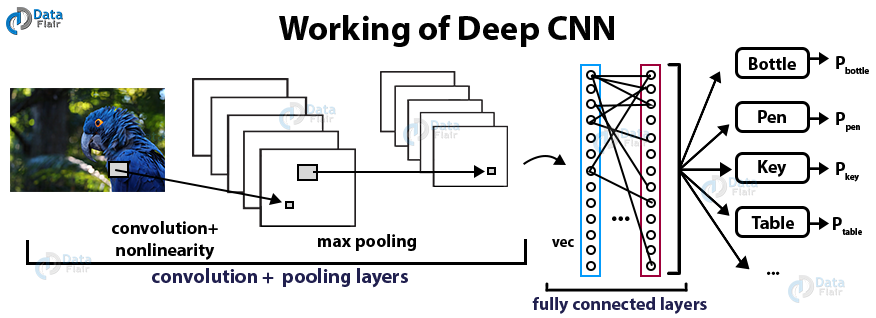

What is CNN?

Convolutional Neural networks are specialized deep neural networks which can process the data that has input shape like a 2D matrix. Images are easily represented as a 2D matrix and CNN is very useful in working with images.

CNN is basically used for image classifications and identifying if an image is a bird, a plane or Superman, etc.

It scans images from left to right and top to bottom to pull out important features from the image and combines the feature to classify images. It can handle the images that have been translated, rotated, scaled and changes in perspective.

Practise the important Python topics

Check out the 240+ Python Tutorials

What is LSTM?

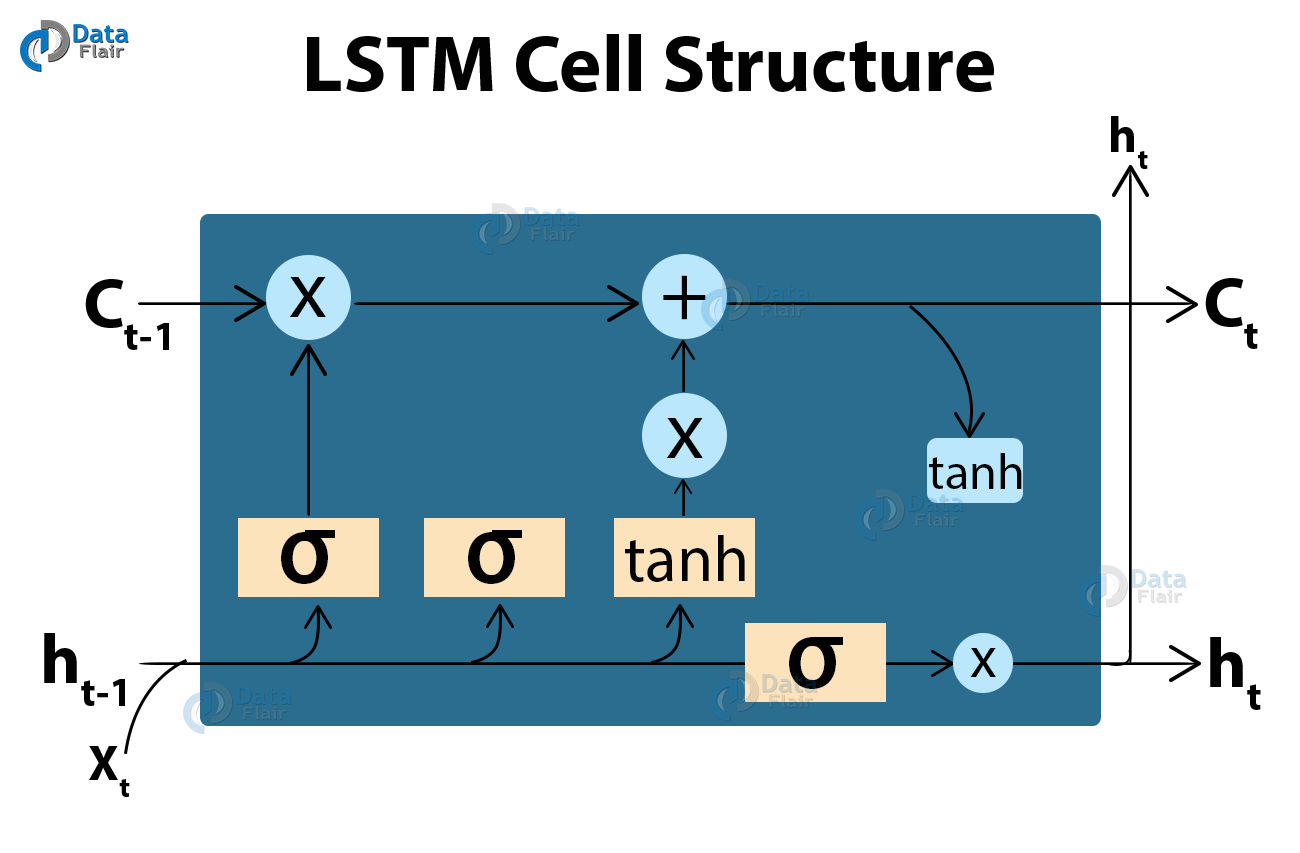

LSTM stands for Long short term memory, they are a type of RNN (recurrent neural network) which is well suited for sequence prediction problems. Based on the previous text, we can predict what the next word will be. It has proven itself effective from the traditional RNN by overcoming the limitations of RNN which had short term memory. LSTM can carry out relevant information throughout the processing of inputs and with a forget gate, it discards non-relevant information.

This is what an LSTM cell looks like –

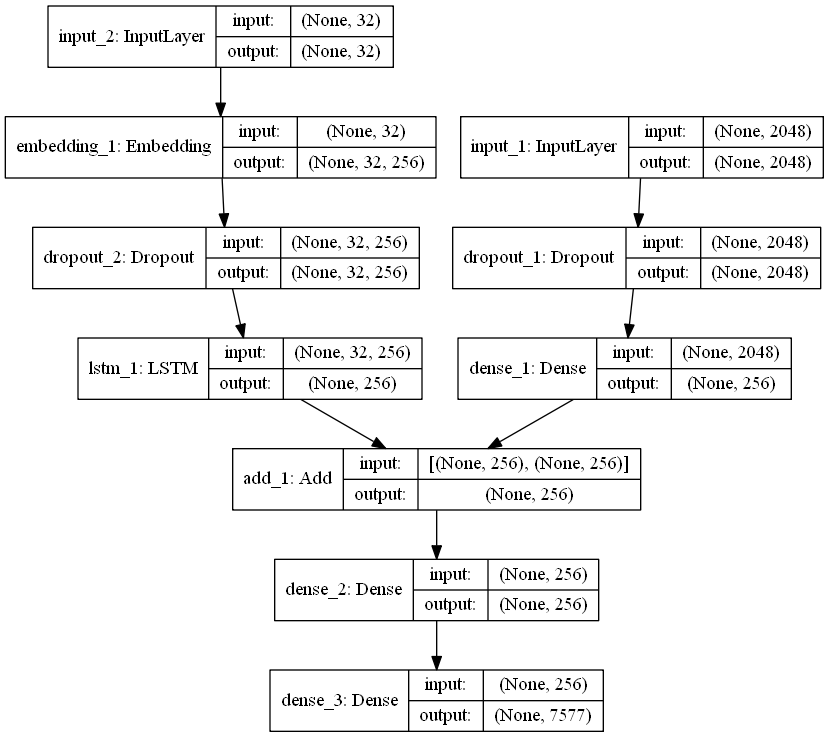

Image Caption Generator Model

So, to make our image caption generator model, we will be merging these architectures. It is also called a CNN-RNN model.

- CNN is used for extracting features from the image. We will use the pre-trained model Xception.

- LSTM will use the information from CNN to help generate a description of the image.

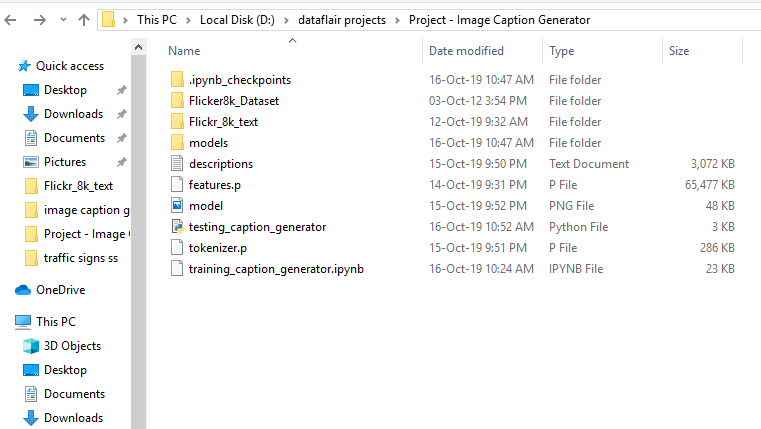

Project File Structure

Downloaded from dataset:

- Flicker8k_Dataset – Dataset folder which contains 8091 images.

- Flickr_8k_text – Dataset folder which contains text files and captions of images.

The below files will be created by us while making the project.

- Models – It will contain our trained models.

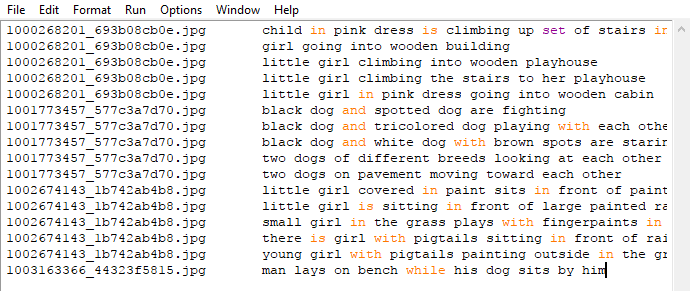

- Descriptions.txt – This text file contains all image names and their captions after preprocessing.

- Features.p – Pickle object that contains an image and their feature vector extracted from the Xception pre-trained CNN model.

- Tokenizer.p – Contains tokens mapped with an index value.

- Model.png – Visual representation of dimensions of our project.

- Testing_caption_generator.py – Python file for generating a caption of any image.

- Training_caption_generator.ipynb – Jupyter notebook in which we train and build our image caption generator.

You can download all the files from the link:

Image Caption Generator – Python Project Files

Want to become a Python expert?

Enroll for the Certified Python Training Course

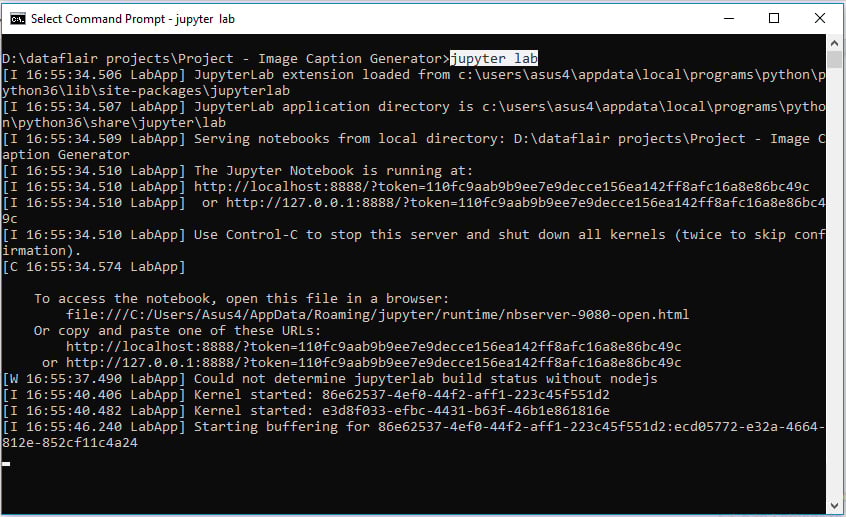

Building the Python based Project

Let’s start by initializing the jupyter notebook server by typing jupyter lab in the console of your project folder. It will open up the interactive Python notebook where you can run your code. Create a Python3 notebook and name it training_caption_generator.ipynb

1. First, we import all the necessary packages

import string import numpy as np from PIL import Image import os from pickle import dump, load import numpy as np from keras.applications.xception import Xception, preprocess_input from keras.preprocessing.image import load_img, img_to_array from keras.preprocessing.text import Tokenizer from keras.preprocessing.sequence import pad_sequences from keras.utils import to_categorical from keras.layers.merge import add from keras.models import Model, load_model from keras.layers import Input, Dense, LSTM, Embedding, Dropout # small library for seeing the progress of loops. from tqdm import tqdm_notebook as tqdm tqdm().pandas()

2. Getting and performing data cleaning

The main text file which contains all image captions is Flickr8k.token in our Flickr_8k_text folder.

Have a look at the file –

The format of our file is image and caption separated by a new line (“\n”).

Each image has 5 captions and we can see that #(0 to 5)number is assigned for each caption.

We will define 5 functions:

- load_doc( filename ) – For loading the document file and reading the contents inside the file into a string.

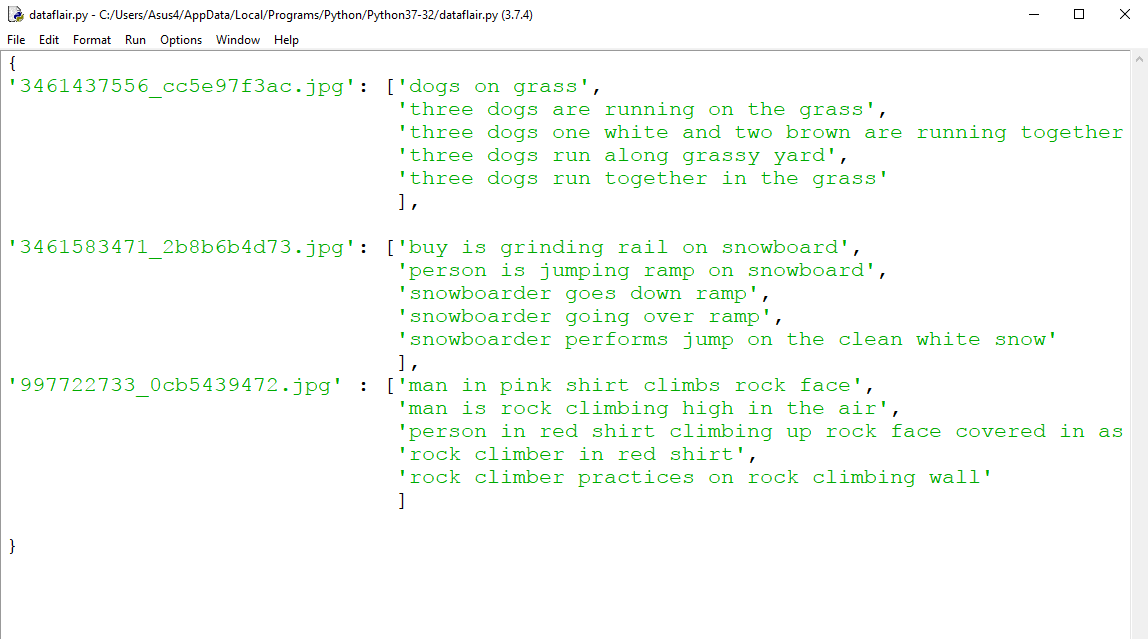

- all_img_captions( filename ) – This function will create a descriptions dictionary that maps images with a list of 5 captions. The descriptions dictionary will look something like this:

- cleaning_text( descriptions) – This function takes all descriptions and performs data cleaning. This is an important step when we work with textual data, according to our goal, we decide what type of cleaning we want to perform on the text. In our case, we will be removing punctuations, converting all text to lowercase and removing words that contain numbers.

So, a caption like “A man riding on a three-wheeled wheelchair” will be transformed into “man riding on three wheeled wheelchair” - text_vocabulary( descriptions ) – This is a simple function that will separate all the unique words and create the vocabulary from all the descriptions.

- save_descriptions( descriptions, filename ) – This function will create a list of all the descriptions that have been preprocessed and store them into a file. We will create a descriptions.txt file to store all the captions. It will look something like this:

Code :

# Loading a text file into memory

def load_doc(filename):

# Opening the file as read only

file = open(filename, 'r')

text = file.read()

file.close()

return text

# get all imgs with their captions

def all_img_captions(filename):

file = load_doc(filename)

captions = file.split('\n')

descriptions ={}

for caption in captions[:-1]:

img, caption = caption.split('\t')

if img[:-2] not in descriptions:

descriptions[img[:-2]] = [ caption ]

else:

descriptions[img[:-2]].append(caption)

return descriptions

#Data cleaning- lower casing, removing puntuations and words containing numbers

def cleaning_text(captions):

table = str.maketrans('','',string.punctuation)

for img,caps in captions.items():

for i,img_caption in enumerate(caps):

img_caption.replace("-"," ")

desc = img_caption.split()

#converts to lowercase

desc = [word.lower() for word in desc]

#remove punctuation from each token

desc = [word.translate(table) for word in desc]

#remove hanging 's and a

desc = [word for word in desc if(len(word)>1)]

#remove tokens with numbers in them

desc = [word for word in desc if(word.isalpha())]

#convert back to string

img_caption = ' '.join(desc)

captions[img][i]= img_caption

return captions

def text_vocabulary(descriptions):

# build vocabulary of all unique words

vocab = set()

for key in descriptions.keys():

[vocab.update(d.split()) for d in descriptions[key]]

return vocab

#All descriptions in one file

def save_descriptions(descriptions, filename):

lines = list()

for key, desc_list in descriptions.items():

for desc in desc_list:

lines.append(key + '\t' + desc )

data = "\n".join(lines)

file = open(filename,"w")

file.write(data)

file.close()

# Set these path according to project folder in you system

dataset_text = "D:\dataflair projects\Project - Image Caption Generator\Flickr_8k_text"

dataset_images = "D:\dataflair projects\Project - Image Caption Generator\Flicker8k_Dataset"

#we prepare our text data

filename = dataset_text + "/" + "Flickr8k.token.txt"

#loading the file that contains all data

#mapping them into descriptions dictionary img to 5 captions

descriptions = all_img_captions(filename)

print("Length of descriptions =" ,len(descriptions))

#cleaning the descriptions

clean_descriptions = cleaning_text(descriptions)

#building vocabulary

vocabulary = text_vocabulary(clean_descriptions)

print("Length of vocabulary = ", len(vocabulary))

#saving each description to file

save_descriptions(clean_descriptions, "descriptions.txt")3. Extracting the feature vector from all images

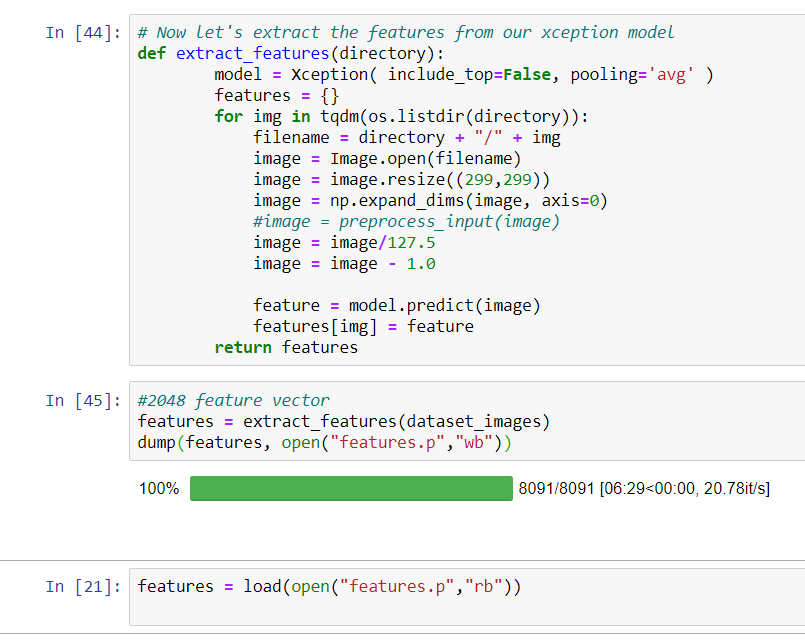

This technique is also called transfer learning, we don’t have to do everything on our own, we use the pre-trained model that have been already trained on large datasets and extract the features from these models and use them for our tasks. We are using the Xception model which has been trained on imagenet dataset that had 1000 different classes to classify. We can directly import this model from the keras.applications . Make sure you are connected to the internet as the weights get automatically downloaded. Since the Xception model was originally built for imagenet, we will do little changes for integrating with our model. One thing to notice is that the Xception model takes 299*299*3 image size as input. We will remove the last classification layer and get the 2048 feature vector.

model = Xception( include_top=False, pooling=’avg’ )

The function extract_features() will extract features for all images and we will map image names with their respective feature array. Then we will dump the features dictionary into a “features.p” pickle file.

Code:

def extract_features(directory):

model = Xception( include_top=False, pooling='avg' )

features = {}

for img in tqdm(os.listdir(directory)):

filename = directory + "/" + img

image = Image.open(filename)

image = image.resize((299,299))

image = np.expand_dims(image, axis=0)

#image = preprocess_input(image)

image = image/127.5

image = image - 1.0

feature = model.predict(image)

features[img] = feature

return features

#2048 feature vector

features = extract_features(dataset_images)

dump(features, open("features.p","wb"))This process can take a lot of time depending on your system. I am using an Nvidia 1050 GPU for training purpose so it took me around 7 minutes for performing this task. However, if you are using CPU then this process might take 1-2 hours. You can comment out the code and directly load the features from our pickle file.

features = load(open("features.p","rb"))4. Loading dataset for Training the model

In our Flickr_8k_test folder, we have Flickr_8k.trainImages.txt file that contains a list of 6000 image names that we will use for training.

For loading the training dataset, we need more functions:

- load_photos( filename ) – This will load the text file in a string and will return the list of image names.

- load_clean_descriptions( filename, photos ) – This function will create a dictionary that contains captions for each photo from the list of photos. We also append the <start> and <end> identifier for each caption. We need this so that our LSTM model can identify the starting and ending of the caption.

- load_features(photos) – This function will give us the dictionary for image names and their feature vector which we have previously extracted from the Xception model.

Code :

#load the data

def load_photos(filename):

file = load_doc(filename)

photos = file.split("\n")[:-1]

return photos

def load_clean_descriptions(filename, photos):

#loading clean_descriptions

file = load_doc(filename)

descriptions = {}

for line in file.split("\n"):

words = line.split()

if len(words)<1 :

continue

image, image_caption = words[0], words[1:]

if image in photos:

if image not in descriptions:

descriptions[image] = []

desc = '<start> ' + " ".join(image_caption) + ' <end>'

descriptions[image].append(desc)

return descriptions

def load_features(photos):

#loading all features

all_features = load(open("features.p","rb"))

#selecting only needed features

features = {k:all_features[k] for k in photos}

return features

filename = dataset_text + "/" + "Flickr_8k.trainImages.txt"

#train = loading_data(filename)

train_imgs = load_photos(filename)

train_descriptions = load_clean_descriptions("descriptions.txt", train_imgs)

train_features = load_features(train_imgs)5. Tokenizing the vocabulary

Computers don’t understand English words, for computers, we will have to represent them with numbers. So, we will map each word of the vocabulary with a unique index value. Keras library provides us with the tokenizer function that we will use to create tokens from our vocabulary and save them to a “tokenizer.p” pickle file.

Code:

#converting dictionary to clean list of descriptions

def dict_to_list(descriptions):

all_desc = []

for key in descriptions.keys():

[all_desc.append(d) for d in descriptions[key]]

return all_desc

#creating tokenizer class

#this will vectorise text corpus

#each integer will represent token in dictionary

from keras.preprocessing.text import Tokenizer

def create_tokenizer(descriptions):

desc_list = dict_to_list(descriptions)

tokenizer = Tokenizer()

tokenizer.fit_on_texts(desc_list)

return tokenizer

# give each word an index, and store that into tokenizer.p pickle file

tokenizer = create_tokenizer(train_descriptions)

dump(tokenizer, open('tokenizer.p', 'wb'))

vocab_size = len(tokenizer.word_index) + 1

vocab_sizeOur vocabulary contains 7577 words.

We calculate the maximum length of the descriptions. This is important for deciding the model structure parameters. Max_length of description is 32.

#calculate maximum length of descriptions

def max_length(descriptions):

desc_list = dict_to_list(descriptions)

return max(len(d.split()) for d in desc_list)

max_length = max_length(descriptions)

max_length6. Create Data generator

Let us first see how the input and output of our model will look like. To make this task into a supervised learning task, we have to provide input and output to the model for training. We have to train our model on 6000 images and each image will contain 2048 length feature vector and caption is also represented as numbers. This amount of data for 6000 images is not possible to hold into memory so we will be using a generator method that will yield batches.

The generator will yield the input and output sequence.

For example:

The input to our model is [x1, x2] and the output will be y, where x1 is the 2048 feature vector of that image, x2 is the input text sequence and y is the output text sequence that the model has to predict.

| x1(feature vector) | x2(Text sequence) | y(word to predict) |

| feature | start, | two |

| feature | start, two | dogs |

| feature | start, two, dogs | drink |

| feature | start, two, dogs, drink | water |

| feature | start, two, dogs, drink, water | end |

#create input-output sequence pairs from the image description.

#data generator, used by model.fit_generator()

def data_generator(descriptions, features, tokenizer, max_length):

while 1:

for key, description_list in descriptions.items():

#retrieve photo features

feature = features[key][0]

input_image, input_sequence, output_word = create_sequences(tokenizer, max_length, description_list, feature)

yield [[input_image, input_sequence], output_word]

def create_sequences(tokenizer, max_length, desc_list, feature):

X1, X2, y = list(), list(), list()

# walk through each description for the image

for desc in desc_list:

# encode the sequence

seq = tokenizer.texts_to_sequences([desc])[0]

# split one sequence into multiple X,y pairs

for i in range(1, len(seq)):

# split into input and output pair

in_seq, out_seq = seq[:i], seq[i]

# pad input sequence

in_seq = pad_sequences([in_seq], maxlen=max_length)[0]

# encode output sequence

out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]

# store

X1.append(feature)

X2.append(in_seq)

y.append(out_seq)

return np.array(X1), np.array(X2), np.array(y)

#You can check the shape of the input and output for your model

[a,b],c = next(data_generator(train_descriptions, features, tokenizer, max_length))

a.shape, b.shape, c.shape

#((47, 2048), (47, 32), (47, 7577))7. Defining the CNN-RNN model

To define the structure of the model, we will be using the Keras Model from Functional API. It will consist of three major parts:

- Feature Extractor – The feature extracted from the image has a size of 2048, with a dense layer, we will reduce the dimensions to 256 nodes.

- Sequence Processor – An embedding layer will handle the textual input, followed by the LSTM layer.

- Decoder – By merging the output from the above two layers, we will process by the dense layer to make the final prediction. The final layer will contain the number of nodes equal to our vocabulary size.

Visual representation of the final model is given below –

from keras.utils import plot_model

# define the captioning model

def define_model(vocab_size, max_length):

# features from the CNN model squeezed from 2048 to 256 nodes

inputs1 = Input(shape=(2048,))

fe1 = Dropout(0.5)(inputs1)

fe2 = Dense(256, activation='relu')(fe1)

# LSTM sequence model

inputs2 = Input(shape=(max_length,))

se1 = Embedding(vocab_size, 256, mask_zero=True)(inputs2)

se2 = Dropout(0.5)(se1)

se3 = LSTM(256)(se2)

# Merging both models

decoder1 = add([fe2, se3])

decoder2 = Dense(256, activation='relu')(decoder1)

outputs = Dense(vocab_size, activation='softmax')(decoder2)

# tie it together [image, seq] [word]

model = Model(inputs=[inputs1, inputs2], outputs=outputs)

model.compile(loss='categorical_crossentropy', optimizer='adam')

# summarize model

print(model.summary())

plot_model(model, to_file='model.png', show_shapes=True)

return model8. Training the model

To train the model, we will be using the 6000 training images by generating the input and output sequences in batches and fitting them to the model using model.fit_generator() method. We also save the model to our models folder. This will take some time depending on your system capability.

# train our model

print('Dataset: ', len(train_imgs))

print('Descriptions: train=', len(train_descriptions))

print('Photos: train=', len(train_features))

print('Vocabulary Size:', vocab_size)

print('Description Length: ', max_length)

model = define_model(vocab_size, max_length)

epochs = 10

steps = len(train_descriptions)

# making a directory models to save our models

os.mkdir("models")

for i in range(epochs):

generator = data_generator(train_descriptions, train_features, tokenizer, max_length)

model.fit_generator(generator, epochs=1, steps_per_epoch= steps, verbose=1)

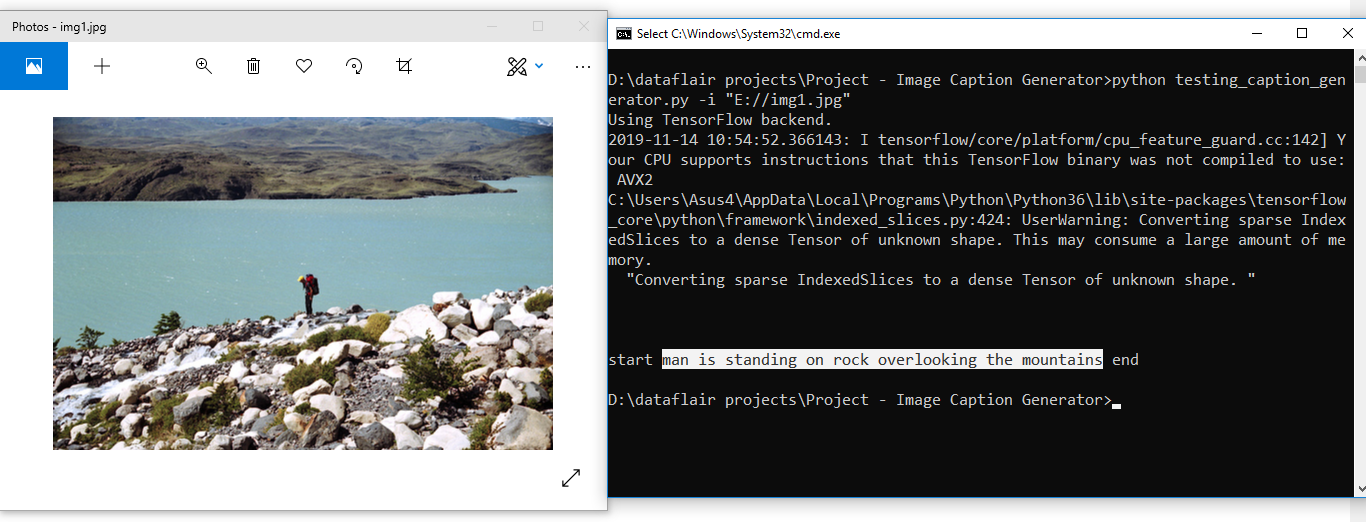

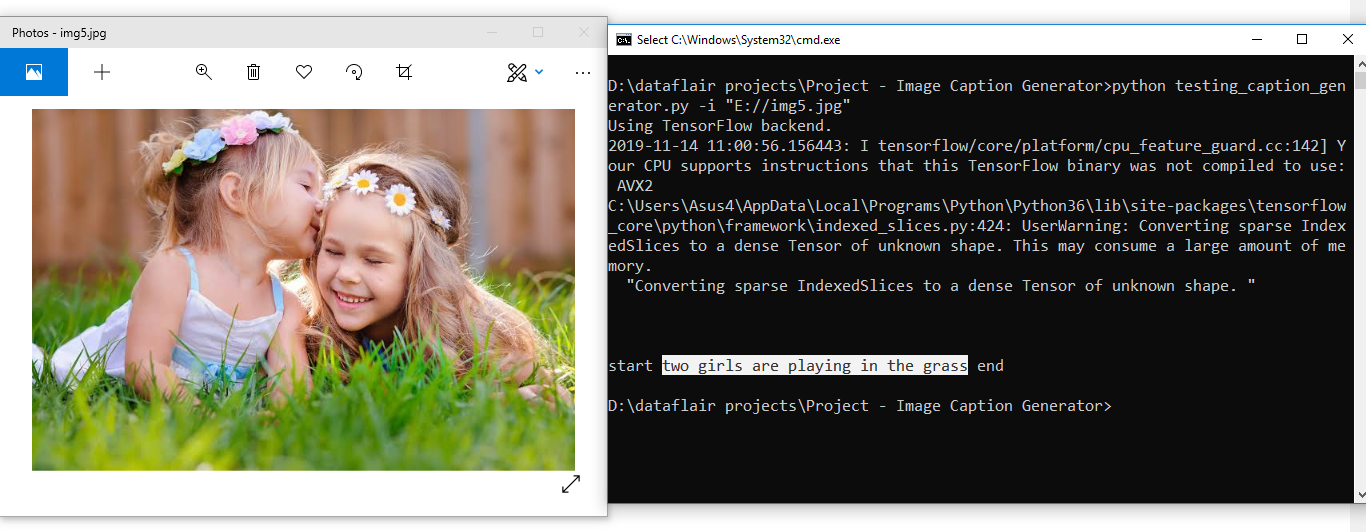

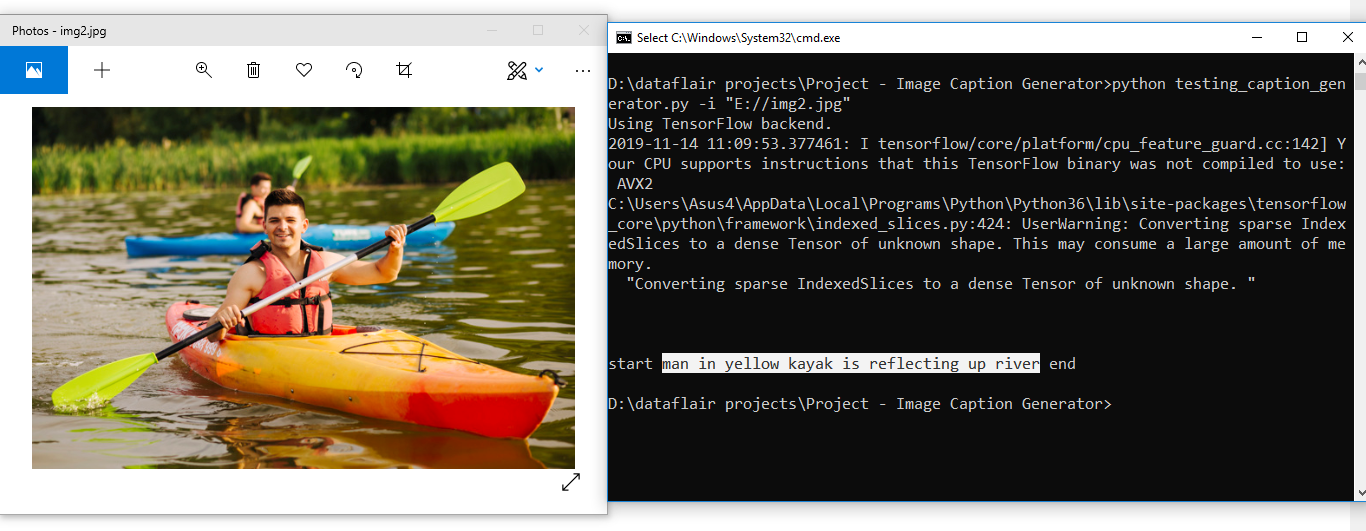

model.save("models/model_" + str(i) + ".h5")9. Testing the model

The model has been trained, now, we will make a separate file testing_caption_generator.py which will load the model and generate predictions. The predictions contain the max length of index values so we will use the same tokenizer.p pickle file to get the words from their index values.

Code:

import numpy as np

from PIL import Image

import matplotlib.pyplot as plt

import argparse

ap = argparse.ArgumentParser()

ap.add_argument('-i', '--image', required=True, help="Image Path")

args = vars(ap.parse_args())

img_path = args['image']

def extract_features(filename, model):

try:

image = Image.open(filename)

except:

print("ERROR: Couldn't open image! Make sure the image path and extension is correct")

image = image.resize((299,299))

image = np.array(image)

# for images that has 4 channels, we convert them into 3 channels

if image.shape[2] == 4:

image = image[..., :3]

image = np.expand_dims(image, axis=0)

image = image/127.5

image = image - 1.0

feature = model.predict(image)

return feature

def word_for_id(integer, tokenizer):

for word, index in tokenizer.word_index.items():

if index == integer:

return word

return None

def generate_desc(model, tokenizer, photo, max_length):

in_text = 'start'

for i in range(max_length):

sequence = tokenizer.texts_to_sequences([in_text])[0]

sequence = pad_sequences([sequence], maxlen=max_length)

pred = model.predict([photo,sequence], verbose=0)

pred = np.argmax(pred)

word = word_for_id(pred, tokenizer)

if word is None:

break

in_text += ' ' + word

if word == 'end':

break

return in_text

#path = 'Flicker8k_Dataset/111537222_07e56d5a30.jpg'

max_length = 32

tokenizer = load(open("tokenizer.p","rb"))

model = load_model('models/model_9.h5')

xception_model = Xception(include_top=False, pooling="avg")

photo = extract_features(img_path, xception_model)

img = Image.open(img_path)

description = generate_desc(model, tokenizer, photo, max_length)

print("\n\n")

print(description)

plt.imshow(img)

Results:

Summary

In this advanced Python project, we have implemented a CNN-RNN model by building an image caption generator. Some key points to note are that our model depends on the data, so, it cannot predict the words that are out of its vocabulary. We used a small dataset consisting of 8000 images. For production-level models, we need to train on datasets larger than 100,000 images which can produce better accuracy models.

Rock the Python interview round

Practise 150+ Python Interview Questions

Hope you enjoyed making this Python based project with us. You can ask your doubts in the comment section below.

Your opinion matters

Please write your valuable feedback about DataFlair on Google

hey Everything works fine but atlast it’s showing this error its a raw code but I am using tensorflow as a backend—–

ValueError Traceback (most recent call last)

in

13 for i in range(epochs):

14 generator = data_generator(train_descriptions, train_features, tokenizer, max_length)

—> 15 model.fit_generator(generator, epochs=1, steps_per_epoch= steps, verbose=1)

16 model.save(“models/model_” + str(i) + “.h5”)

~/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)

1295 shuffle=shuffle,

1296 initial_epoch=initial_epoch,

-> 1297 steps_name=’steps_per_epoch’)

1298

1299 def evaluate_generator(self,

~/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/training_generator.py in model_iteration(model, data, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch, mode, batch_size, steps_name, **kwargs)

263

264 is_deferred = not model._is_compiled

–> 265 batch_outs = batch_function(*batch_data)

266 if not isinstance(batch_outs, list):

267 batch_outs = [batch_outs]

~/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/training.py in train_on_batch(self, x, y, sample_weight, class_weight, reset_metrics)

971 outputs = training_v2_utils.train_on_batch(

972 self, x, y=y, sample_weight=sample_weight,

–> 973 class_weight=class_weight, reset_metrics=reset_metrics)

974 outputs = (outputs[‘total_loss’] + outputs[‘output_losses’] +

975 outputs[‘metrics’])

~/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/training_v2_utils.py in train_on_batch(model, x, y, sample_weight, class_weight, reset_metrics)

251 x, y, sample_weights = model._standardize_user_data(

252 x, y, sample_weight=sample_weight, class_weight=class_weight,

–> 253 extract_tensors_from_dataset=True)

254 batch_size = array_ops.shape(nest.flatten(x, expand_composites=True)[0])[0]

255 # If `model._distribution_strategy` is True, then we are in a replica context

~/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/training.py in _standardize_user_data(self, x, y, sample_weight, class_weight, batch_size, check_steps, steps_name, steps, validation_split, shuffle, extract_tensors_from_dataset)

2470 feed_input_shapes,

2471 check_batch_axis=False, # Don’t enforce the batch size.

-> 2472 exception_prefix=’input’)

2473

2474 # Get typespecs for the input data and sanitize it if necessary.

~/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/training_utils.py in standardize_input_data(data, names, shapes, check_batch_axis, exception_prefix)

504 elif isinstance(data, (list, tuple)):

505 if isinstance(data[0], (list, tuple)):

–> 506 data = [np.asarray(d) for d in data]

507 elif len(names) == 1 and isinstance(data[0], (float, int)):

508 data = [np.asarray(data)]

~/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/training_utils.py in (.0)

504 elif isinstance(data, (list, tuple)):

505 if isinstance(data[0], (list, tuple)):

–> 506 data = [np.asarray(d) for d in data]

507 elif len(names) == 1 and isinstance(data[0], (float, int)):

508 data = [np.asarray(data)]

~/anaconda3/lib/python3.7/site-packages/numpy/core/numeric.py in asarray(a, dtype, order)

536

537 “””

–> 538 return array(a, dtype, copy=False, order=order)

539

540

ValueError: could not broadcast input array from shape (47,2048) into shape (47)

PermissionError Traceback (most recent call last)

in

1 directory =”D:\Flickr8k_Dataset”

—-> 2 features = extract_features(directory)

3 print(‘Extracted Features: %d’ % len(features))

4 # save to file

5 dump(features, open(r’features.pkl’, ‘rb’))

in extract_features(directory)

13 # load an image from file

14 filename = directory + ‘/’ + name

—> 15 image = load_img(filename, target_size=(224, 224))

16 # convert the image pixels to a numpy array

17 image = img_to_array(image)

~\anaconda3\lib\site-packages\keras_preprocessing\image\utils.py in load_img(path, grayscale, color_mode, target_size, interpolation)

108 raise ImportError(‘Could not import PIL.Image. ‘

109 ‘The use of `load_img` requires PIL.’)

–> 110 img = pil_image.open(path)

111 if color_mode == ‘grayscale’:

112 if img.mode != ‘L’:

~\anaconda3\lib\site-packages\PIL\Image.py in open(fp, mode)

2807

2808 if filename:

-> 2809 fp = builtins.open(filename, “rb”)

2810 exclusive_fp = True

2811

PermissionError: [Errno 13] Permission denied: ‘D:\\Flickr8k_Dataset/Flicker8k_Dataset’

why is this error showing?can you please help me?

D:\\Flickr8k_Dataset\\Flicker8k_Dataset’

use that

How to measure the accuracy of the given model/project?

Please can you share the working code?

You can find the accuracy by testing the trained model and compare it with original outputs. Python provides functions to get the True and False Positives and Negatives, which is given as a matrix called the Confusion Matrix. Using this matrix and other functions, you can find the accuracy and other metrics to find the performance of the model.

You can find the accuracy by testing the trained model and compare it with original outputs. Python provides functions to get the True and False Positives and Negatives, which is given as a matrix called the Confusion Matrix. Using this matrix and other functions, you can find the accuracy and other metrics to find the performance of the model.

how do use this program using bleu score for testing the accuracy of image

Bleu score gives the similarity of outputs from the trained model and the original output. Higher the score, better is the model. A score of 30- 40 means the model is good, 40-50 means that the model is very good and the score more than that the model is of very high quality.

The captions that are being generated are not accurate enough as shown in the result section of this page. What can i do to improve?

Please can you share the working code??

Please can you share the working code??

can you plz share the working code?

This is the error I keep getting. I am running the model on Google Colab.

FileNotFoundError Traceback (most recent call last)

in ()

61 #loading the file that contains all data

62 #mapping them into descriptions dictionary img to 5 captions

—> 63 descriptions = all_img_captions(filename)

64 print(“Length of descriptions =” ,len(descriptions))

65 #cleaning the descriptions

1 frames

in load_doc(filename)

1 def load_doc(filename):

2 # Opening the file as read only

—-> 3 file = open(filename, ‘r’)

4 text = file.read()

5 file.close()

FileNotFoundError: [Errno 2] No such file or directory: ‘C:\\Users\\USER\\Documents\\ImageCaptionGenerator\\Flickr_8k_text/Flickr8k.token.txt’

If you’re running it in Colab you need to upload the files for each session of the runtime, or upload all the files to Google Drive and then mount the drive.

You can prevent getting this error by changind the path in the code according to the location at which the file exist in your device. Hope this helps!

What do we need to keep instead of directory and filename

Please help me as soon as possible

The directory and the filename conbinedly represet the location of the images. So, instead of the directory you can write the path.

ValueError: No gradients provided for any variable: [’embedding_4/embeddings:0′, ‘dense_12/kernel:0’, ‘dense_12/bias:0’, ‘lstm_4/lstm_cell_4/kernel:0’, ‘lstm_4/lstm_cell_4/recurrent_kernel:0’, ‘lstm_4/lstm_cell_4/bias:0’, ‘dense_13/kernel:0’, ‘dense_13/bias:0’, ‘dense_14/kernel:0’, ‘dense_14/bias:0’].

Bro, Did you found a solution to this error. I am encountering the same problem. If you could give me a heads up about it .

Hey, do you find a solution to this issue?

because I am also getting the same error.

For anyone who is getting this error on google colab, I have a temporary fix for it. Simply downgrade the version of keras and tensorflow. Use pip for this.

Run the following code:

pip uninstall keras

pip install keras == 2.3.1

pip uninstall tensorflow

pip install tensorflow == 2.2

After running the above codes in different cells, simply restart your runtime and your error will be solved.

Hello Everyone i am getting this error every time i run the code. Please help

WARNING:tensorflow:From :14: Model.fit_generator (from tensorflow.python.keras.engine.training) is deprecated and will be removed in a future version.

Instructions for updating:

Please use Model.fit, which supports generators.

—————————————————————————

ValueError Traceback (most recent call last)

in

12 for i in range(epochs):

13 generator = data_generator(train_descriptions, train_features, tokenizer, max_length)

—> 14 model.fit_generator(generator, epochs=1, steps_per_epoch= steps, verbose=1)

15 model.save(“models/model_” + str(i) + “.h5”)

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/util/deprecation.py in new_func(*args, **kwargs)

322 ‘in a future version’ if date is None else (‘after %s’ % date),

323 instructions)

–> 324 return func(*args, **kwargs)

325 return tf_decorator.make_decorator(

326 func, new_func, ‘deprecated’,

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)

1813 “””

1814 _keras_api_gauge.get_cell(‘fit_generator’).set(True)

-> 1815 return self.fit(

1816 generator,

1817 steps_per_epoch=steps_per_epoch,

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py in _method_wrapper(self, *args, **kwargs)

106 def _method_wrapper(self, *args, **kwargs):

107 if not self._in_multi_worker_mode(): # pylint: disable=protected-access

–> 108 return method(self, *args, **kwargs)

109

110 # Running inside `run_distribute_coordinator` already.

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing)

1096 batch_size=batch_size):

1097 callbacks.on_train_batch_begin(step)

-> 1098 tmp_logs = train_function(iterator)

1099 if data_handler.should_sync:

1100 context.async_wait()

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/eager/def_function.py in __call__(self, *args, **kwds)

778 else:

779 compiler = “nonXla”

–> 780 result = self._call(*args, **kwds)

781

782 new_tracing_count = self._get_tracing_count()

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/eager/def_function.py in _call(self, *args, **kwds)

821 # This is the first call of __call__, so we have to initialize.

822 initializers = []

–> 823 self._initialize(args, kwds, add_initializers_to=initializers)

824 finally:

825 # At this point we know that the initialization is complete (or less

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/eager/def_function.py in _initialize(self, args, kwds, add_initializers_to)

694 self._graph_deleter = FunctionDeleter(self._lifted_initializer_graph)

695 self._concrete_stateful_fn = (

–> 696 self._stateful_fn._get_concrete_function_internal_garbage_collected( # pylint: disable=protected-access

697 *args, **kwds))

698

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/eager/function.py in _get_concrete_function_internal_garbage_collected(self, *args, **kwargs)

2853 args, kwargs = None, None

2854 with self._lock:

-> 2855 graph_function, _, _ = self._maybe_define_function(args, kwargs)

2856 return graph_function

2857

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/eager/function.py in _maybe_define_function(self, args, kwargs)

3211

3212 self._function_cache.missed.add(call_context_key)

-> 3213 graph_function = self._create_graph_function(args, kwargs)

3214 self._function_cache.primary[cache_key] = graph_function

3215 return graph_function, args, kwargs

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/eager/function.py in _create_graph_function(self, args, kwargs, override_flat_arg_shapes)

3063 arg_names = base_arg_names + missing_arg_names

3064 graph_function = ConcreteFunction(

-> 3065 func_graph_module.func_graph_from_py_func(

3066 self._name,

3067 self._python_function,

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/framework/func_graph.py in func_graph_from_py_func(name, python_func, args, kwargs, signature, func_graph, autograph, autograph_options, add_control_dependencies, arg_names, op_return_value, collections, capture_by_value, override_flat_arg_shapes)

984 _, original_func = tf_decorator.unwrap(python_func)

985

–> 986 func_outputs = python_func(*func_args, **func_kwargs)

987

988 # invariant: `func_outputs` contains only Tensors, CompositeTensors,

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/eager/def_function.py in wrapped_fn(*args, **kwds)

598 # __wrapped__ allows AutoGraph to swap in a converted function. We give

599 # the function a weak reference to itself to avoid a reference cycle.

–> 600 return weak_wrapped_fn().__wrapped__(*args, **kwds)

601 weak_wrapped_fn = weakref.ref(wrapped_fn)

602

~/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/framework/func_graph.py in wrapper(*args, **kwargs)

971 except Exception as e: # pylint:disable=broad-except

972 if hasattr(e, “ag_error_metadata”):

–> 973 raise e.ag_error_metadata.to_exception(e)

974 else:

975 raise

ValueError: in user code:

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py:806 train_function *

return step_function(self, iterator)

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py:796 step_function **

outputs = model.distribute_strategy.run(run_step, args=(data,))

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/distribute/distribute_lib.py:1211 run

return self._extended.call_for_each_replica(fn, args=args, kwargs=kwargs)

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/distribute/distribute_lib.py:2585 call_for_each_replica

return self._call_for_each_replica(fn, args, kwargs)

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/distribute/distribute_lib.py:2945 _call_for_each_replica

return fn(*args, **kwargs)

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py:789 run_step **

outputs = model.train_step(data)

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py:756 train_step

_minimize(self.distribute_strategy, tape, self.optimizer, loss,

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/engine/training.py:2736 _minimize

gradients = optimizer._aggregate_gradients(zip(gradients, # pylint: disable=protected-access

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/optimizer_v2/optimizer_v2.py:562 _aggregate_gradients

filtered_grads_and_vars = _filter_grads(grads_and_vars)

/home/shahzad/anaconda3/envs/nust1/lib/python3.8/site-packages/tensorflow/python/keras/optimizer_v2/optimizer_v2.py:1270 _filter_grads

raise ValueError(“No gradients provided for any variable: %s.” %

ValueError: No gradients provided for any variable: [’embedding/embeddings:0′, ‘dense/kernel:0’, ‘dense/bias:0’, ‘lstm/lstm_cell/kernel:0’, ‘lstm/lstm_cell/recurrent_kernel:0’, ‘lstm/lstm_cell/bias:0’, ‘dense_1/kernel:0’, ‘dense_1/bias:0’, ‘dense_2/kernel:0’, ‘dense_2/bias:0’].

Bro, did u solve this error?

I’m also getting the same.. plz help me out with this bro

m also getting the same error do anyone have the solution?

While conducting feature extraction on the dataset,

features = extract_features(dataset_images)

The extraction commences, goes on for around 2 hours and the spyder crashes and shuts down abruptly.

Could anybody please help me with this?

Thanks in advance!

During importing of libraries

I am getting ther error

NO MODULE FOUND NAMED ‘KERAS’

Though I have installed the keras .

Please help to resolve this issue

!pip install keras

One soluction for this is to install keras and tensolr flow using the commands pip uninstall keras and pip uninstall tensorflow. Then installing them again with required versions using pip install keras==2.2.4 and pip install tensorflow==1.13.1. Hope this helps!

if you get the ValueError: No gradients provided for any variable: Try to change this yield [[input_image, input_sequence], output_word] for yield ([input_image, input_sequence], output_word) in the data generation function.

how to use cmd in the end for the results

First check if Python is installed in your device. Then open the terminal and change the directory to the location where the Python file exist. After this, type python, you get the Python version as an output. Then you can run the program or the file containing the Python code.

what to write in place of filename and directory please help

Getting this error while runniing the code. How to remove it.

ValueError: No gradients provided for any variable: [’embedding_5/embeddings:0′, ‘dense_15/kernel:0’, ‘dense_15/bias:0’, ‘lstm_5/lstm_cell_5/kernel:0’, ‘lstm_5/lstm_cell_5/recurrent_kernel:0’, ‘lstm_5/lstm_cell_5/bias:0’, ‘dense_16/kernel:0’, ‘dense_16/bias:0’, ‘dense_17/kernel:0’, ‘dense_17/bias:0’].

You gotta use tensorflow 1.13.1 to resolve this issue, because this code is written for tensorflow 1.

usage: ipykernel_launcher.py [-h] -i IMAGE

ipykernel_launcher.py: error: the following arguments are required: -i/–image

An exception has occurred, use %tb to see the full traceback.

SystemExit: 2

c:\python\python37\lib\site-packages\IPython\core\interactiveshell.py:3426: UserWarning: To exit: use ‘exit’, ‘quit’, or Ctrl-D.

warn(“To exit: use ‘exit’, ‘quit’, or Ctrl-D.”, stacklevel=1)

I am getting error when suing model_generator(),this fun is depreciated please tell how to use fit function and the respective parameters in this image captioning problem

You are getting this error becasue only input is passed to the model_generator() function. You can prevent this erro by passing both inputs and corresponding output.

Getting this error and I’m not able to figure out how to solve it. “keyerror: ‘2513260012_03d33305cf.jpg'”

Did you resolve it? I am also getting same error

This might be becasue of wrong extension of the image, jpg taken instead of jpeg. Hope this solves the issue.

—————————————————————————

NameError Traceback (most recent call last)

in

3 #loading the file that contains all data

4 #mapping them into descriptions dictionary img to 5 captions

—-> 5 descriptions = all_img_captions(filename)

6 print(“Length of descriptions =” ,len(descriptions))

7

NameError: name ‘all_img_captions’ is not defined

I’m getting this error can you help me out here?

Please check if you have the all_img_captions function defined. If yes, check if you there is any difference in the name of the function. Hope this helps!

Hi, everybody! I just find a debug, hope that can help you. I has also an error about model.fit_generator()

#data generator, used by model.fit_generator()

def data_generator(descriptions, features, tokenizer, max_length):

while 1:

for key, description_list in descriptions.items():

#retrieve photo features

feature = features[key][0]

input_image, input_sequence, output_word = create_sequences(tokenizer, max_length, description_list, feature)

# yield [[input_image, input_sequence], output_word]

# I change this sentence

yield ([input_image, input_sequence], output_word)

KeyError: ‘1000268201_693b08cb0e.jpg’

I am getting the above error when training model…please help

I am also getting photos: train=1 which is supposed to be 6000.

okay so key here is ‘1000268201_693b08cb0e not ‘1000268201_693b08cb0e.jpg. Try removing the “.jpg” using split

For the same function I am getting the following error.

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\training.py:1844: UserWarning: `Model.fit_generator` is deprecated and will be removed in a future version. Please use `Model.fit`, which supports generators.

warnings.warn(‘`Model.fit_generator` is deprecated and ‘

—————————————————————————

ValueError Traceback (most recent call last)

in

13 for i in range(epochs):

14 generator = data_generator(train_descriptions, train_features, tokenizer, max_length)

—> 15 model.fit_generator(generator, epochs=1, steps_per_epoch= steps, verbose=1)

16 model.save(“models/model_” + str(i) + “.h5”)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)

1845 ‘will be removed in a future version. ‘

1846 ‘Please use `Model.fit`, which supports generators.’)

-> 1847 return self.fit(

1848 generator,

1849 steps_per_epoch=steps_per_epoch,

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing)

1098 _r=1):

1099 callbacks.on_train_batch_begin(step)

-> 1100 tmp_logs = self.train_function(iterator)

1101 if data_handler.should_sync:

1102 context.async_wait()

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\eager\def_function.py in __call__(self, *args, **kwds)

826 tracing_count = self.experimental_get_tracing_count()

827 with trace.Trace(self._name) as tm:

–> 828 result = self._call(*args, **kwds)

829 compiler = “xla” if self._experimental_compile else “nonXla”

830 new_tracing_count = self.experimental_get_tracing_count()

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\eager\def_function.py in _call(self, *args, **kwds)

869 # This is the first call of __call__, so we have to initialize.

870 initializers = []

–> 871 self._initialize(args, kwds, add_initializers_to=initializers)

872 finally:

873 # At this point we know that the initialization is complete (or less

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\eager\def_function.py in _initialize(self, args, kwds, add_initializers_to)

723 self._graph_deleter = FunctionDeleter(self._lifted_initializer_graph)

724 self._concrete_stateful_fn = (

–> 725 self._stateful_fn._get_concrete_function_internal_garbage_collected( # pylint: disable=protected-access

726 *args, **kwds))

727

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\eager\function.py in _get_concrete_function_internal_garbage_collected(self, *args, **kwargs)

2967 args, kwargs = None, None

2968 with self._lock:

-> 2969 graph_function, _ = self._maybe_define_function(args, kwargs)

2970 return graph_function

2971

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\eager\function.py in _maybe_define_function(self, args, kwargs)

3359

3360 self._function_cache.missed.add(call_context_key)

-> 3361 graph_function = self._create_graph_function(args, kwargs)

3362 self._function_cache.primary[cache_key] = graph_function

3363

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\eager\function.py in _create_graph_function(self, args, kwargs, override_flat_arg_shapes)

3194 arg_names = base_arg_names + missing_arg_names

3195 graph_function = ConcreteFunction(

-> 3196 func_graph_module.func_graph_from_py_func(

3197 self._name,

3198 self._python_function,

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\framework\func_graph.py in func_graph_from_py_func(name, python_func, args, kwargs, signature, func_graph, autograph, autograph_options, add_control_dependencies, arg_names, op_return_value, collections, capture_by_value, override_flat_arg_shapes)

988 _, original_func = tf_decorator.unwrap(python_func)

989

–> 990 func_outputs = python_func(*func_args, **func_kwargs)

991

992 # invariant: `func_outputs` contains only Tensors, CompositeTensors,

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\eager\def_function.py in wrapped_fn(*args, **kwds)

632 xla_context.Exit()

633 else:

–> 634 out = weak_wrapped_fn().__wrapped__(*args, **kwds)

635 return out

636

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\framework\func_graph.py in wrapper(*args, **kwargs)

975 except Exception as e: # pylint:disable=broad-except

976 if hasattr(e, “ag_error_metadata”):

–> 977 raise e.ag_error_metadata.to_exception(e)

978 else:

979 raise

ValueError: in user code:

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\training.py:805 train_function *

return step_function(self, iterator)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\training.py:795 step_function **

outputs = model.distribute_strategy.run(run_step, args=(data,))

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:1259 run

return self._extended.call_for_each_replica(fn, args=args, kwargs=kwargs)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:2730 call_for_each_replica

return self._call_for_each_replica(fn, args, kwargs)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\distribute\distribute_lib.py:3417 _call_for_each_replica

return fn(*args, **kwargs)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\training.py:788 run_step **

outputs = model.train_step(data)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\training.py:754 train_step

y_pred = self(x, training=True)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\base_layer.py:998 __call__

input_spec.assert_input_compatibility(self.input_spec, inputs, self.name)

c:\users\kiit\appdata\local\programs\python\python38\lib\site-packages\tensorflow\python\keras\engine\input_spec.py:204 assert_input_compatibility

raise ValueError(‘Layer ‘ + layer_name + ‘ expects ‘ +

ValueError: Layer model_4 expects 2 input(s), but it received 3 input tensors. Inputs received: [, , ]

Even after changing model.fit_generator() to model.fit() I am still getting the error

ValueError: Layer model_4 expects 2 input(s), but it received 3 input tensors. Inputs received: [, , ]

Kindly help

do changes in yield [input_image, input_sequence], output_word

The dataset is not found. Please help me in downloading the dataset

You can solve this issue by sending the data to model.fit_generator() in round brackers (()) rather than in square brackers([]). Hope this helps!

usage: ipykernel_launcher.py [-h] -i IMAGE

ipykernel_launcher.py: error: the following arguments are required: -i/–image

An exception has occurred, use %tb to see the full traceback.

SystemExit: 2

this is the error i get in my last cell which is for testing the model.

Failed to import pydot. You must install pydot and graphviz for `pydotprint` to work.

—————————————————————————

FileExistsError Traceback (most recent call last)

in

9 steps = len(train_descriptions)

10 # making a directory models to save our models

—> 11 os.mkdir(“models”)

12 for i in range(epochs):

13 generator = data_generator(train_descriptions, train_features, tokenizer, max_length)

FileExistsError: [WinError 183] Cannot create a file when that file already exists: ‘models’

and this is the error i get in my second last cell which is for training the model.

I have got this error in testing phase. please help me….

import numpy as np

from PIL import Image

import matplotlib.pyplot as plt

import argparse

ap = argparse.ArgumentParser()

ap.add_argument(‘-i’, ‘–image’, required=True, help=”C:\\Users\\Dell\\anaconda3\\envs\\testenv\\Flickr8k_Dataset\\Flicker8k_Dataset\\667626_18933d713e.jpg”)

args = vars(ap.parse_args())

img_path = args[‘image’]

def extract_features(filename, model):

try:

image = Image.open(filename)

except:

print(“ERROR: Couldn’t open image! Make sure the image path and extension is correct”)

image = image.resize((299,299))

image = np.array(image)

# for images that has 4 channels, we convert them into 3 channels

if image.shape[2] == 4:

image = image[…, :3]

image = np.expand_dims(image, axis=0)

image = image/127.5

image = image – 1.0

feature = model.predict(image)

return feature

def word_for_id(integer, tokenizer):

for word, index in tokenizer.word_index.items():

if index == integer:

return word

return None

def generate_desc(model, tokenizer, photo, max_length):

in_text = ‘start’

for i in range(max_length):

sequence = tokenizer.texts_to_sequences([in_text])[0]

sequence = pad_sequences([sequence], maxlen=max_length)

pred = model.predict([photo,sequence], verbose=0)

pred = np.argmax(pred)

word = word_for_id(pred, tokenizer)

if word is None:

break

in_text += ‘ ‘ + word

if word == ‘end’:

break

return in_text

#path = ‘Flicker8k_Dataset/111537222_07e56d5a30.jpg’

max_length = 32

tokenizer = load(open(“tokenizer.p”,”rb”))

model = load_model(‘models/model_9.h5’)

xception_model = Xception(include_top=False, pooling=”avg”)

photo = extract_features(img_path, xception_model)

img = Image.open(img_path)

description = generate_desc(model, tokenizer, photo, max_length)

print(“\n\n”)

print(description)

plt.imshow(img)

—————————————-

usage: [-h] -i IMAGE

: error: the following arguments are required: -i/–image

An exception has occurred, use %tb to see the full traceback.

SystemExit: 2

The dataset is not found. Please help me in downloading the dataset

The dataset is not found. Please help me in downloading the dataset

6396929964 DM me on whatsapp for help.

You can download the dataset from the kaggle website https://www.kaggle.com/meowmeowmeowmeowmeow/gtsrb-german-traffic-sign .

I am getting error in line from keras.utils import to_categorical

You can change the import statement to from tensorflow.keras.utils import to_categorical instead of from keras.utils import to_categorical. Hope this solves the issue.

Thank you so much for your work

But i have a question? Why the predict text with my picture always a men in red shirt …. even it’s not ?????

Hi !

I have a problem at the import for tqdm :

The error message :

Please use `tqdm.notebook.tqdm` instead of `tqdm.tqdm_notebook`

tqdm().pandas()

I tried a lot of things…

Can I have some help please ?

‘from tqdm.notebook import tqdm’ if you’re using a notebook or

‘from tqdm import tqdm’ if you’re not