1. XGBoost Tutorial – Objective

In this XGBoost Tutorial, we will study What is XGBoosting. Also, will learn the features of XGBoosting and why we need XGBoost Algorithm. We will try to cover all basic concepts like why we use XGBoost, why XGBoosting is good and much more.

So, let’s start XGBoost Tutorial.

XGBoost Tutorial – What is XGBoost in Machine Learning?

2. What is XGBoost?

XGBoost is an algorithm. That has recently been dominating applied

machine learning.

XGBoost Algorithm is an implementation of

gradient boosted decision trees. That

was designed for speed and performance.

Basically, XGBoosting is a type of software library. That you can download and install on your machine. Then have to access it from a variety of interfaces.

Specifically, XGBoosting supports the following main interfaces:

- Command Line Interface (CLI).

- C++ (the language in which the library is written).

- Python interface as well as a model in scikit-learn.

- R interface as well as a model in the caret package.

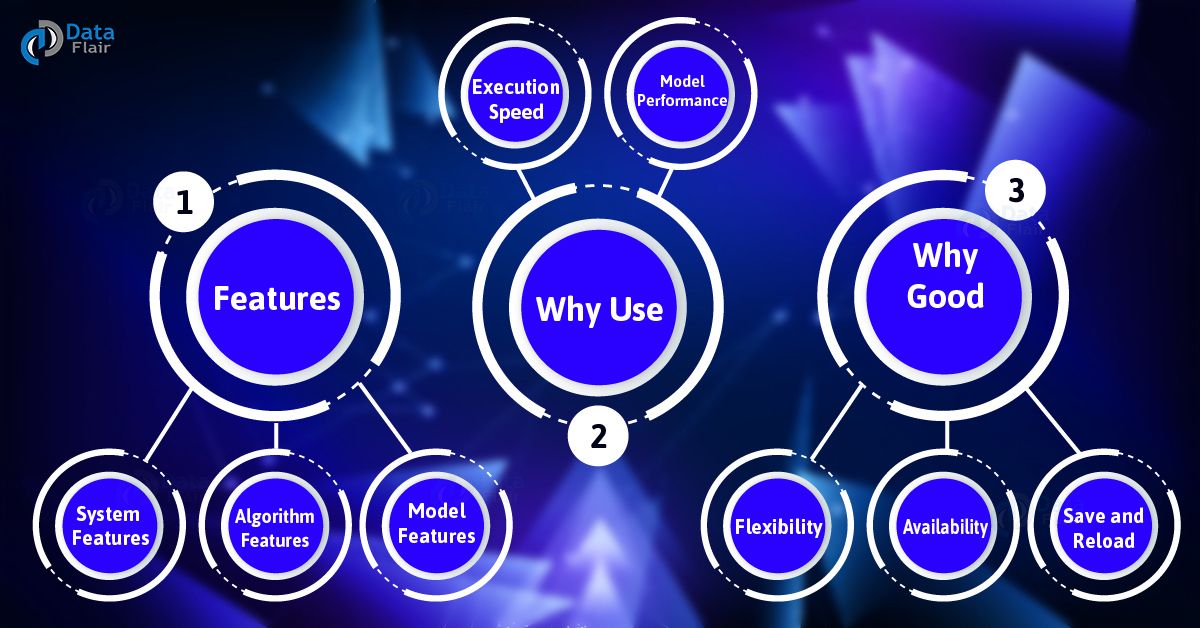

a. Model Features

XGBoost model implementation supports the features of the scikit-learn and R implementations. Three main forms of gradient boosting are supported:

This is also called as gradient boosting machine including the learning rate.

Stochastic Gradient Boosting

This is the boosting with sub-sampling at the row, column, and column per split levels.

Regularized Gradient Boosting

It includes boosting with both L1 and L2 regularization.

b. System Features

For use of a range of computing environments this library provides:

- Parallelization of tree construction using all of your CPU cores during training.

- Distributed Computing for training very large models using a cluster of machines.

- Out-of-Core Computing for very large datasets that don’t fit into memory.

- Cache Optimization of data structures and algorithm to make the best use of hardware.

c. Algorithm Features

For efficiency of computing time and memory resources, we use XGBoost algorithm. Also, this was designed to make use of available resources to train the model.

Some key algorithm implementation features include:

- Sparse aware implementation with automatic handling of missing data values.

- Block structure to support the parallelization of tree construction.

- Continued training so that you can further boost an already fitted model on new data.

- XGBoost is free open source software. That is available for use under the permissive Apache-2 license.

The two reasons to use XGBoosting Algorithms are also the two goals of the project:

a. XGBoost Execution Speed

When we compare XGBoosting to implementations of gradient boosting, it’s so fast.

It compares XGBoost to other implementations of gradient boosting and bagged decision trees. Also, he wrote up his results in May 2015 in the blog post titled. That is “Benchmarking Random Forest Implementations“.

Moreover, it provides all the code on GitHub and a more extensive report of results with hard numbers.

b. XGBoost Model Performance

It dominates structured datasets on classification and regression predictive modeling problems.

The evidence is that it is a go-to algorithm for competition winners. That is based on the Kaggle competitive data science platform.

5. XGBoost Tutorial – Why XGBoosting is good?

a. Flexibility

XGBoosting supports user-defined objective functions with classification, regression and ranking problems. We use an objective function to measure the performance of the model. That is given a certain set of parameters. Furthermore, it supports user-defined evaluation metrics as well.

b. Availability

As it is available for programming languages such as

R,

Python,

Java, Julia, and

Scala.

c. Save and Reload

We can easily save our data matrix and model and reload it later. Let suppose, if we have a large dataset, we can simply save the model. Further, we use it in future instead of wasting time redoing the computation.

So, this was all about XGBoost Tutorial. Hope you like our explanation.

Can u give the more information of ANN and SVM just only realated that XG boosting