Real-Time Face Mask Detector with Python, OpenCV, Keras

During the COVID-19 pandemic, the WHO recommended wearing masks to protect against the virus. In this tutorial, we will develop a machine learning project: a real-time face mask detector with Python.

Real-Time Face Mask Detector with Python

We will build a real-time system that detects whether the person in front of the webcam is wearing a mask. We will train the face mask detector model with Keras and use OpenCV to find faces in the webcam feed.

Download the Dataset

The dataset consists of 1,376 images: 690 of people wearing masks and 686 of people without masks.

Download the dataset: Face Mask Dataset
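Before training, it can help to check what the downloaded folders actually contain. The sketch below counts the files in each class folder and flags files whose first bytes are neither JPEG nor PNG, for example a WebP image saved with a .jpg extension. The function names and the folder layout (one subfolder per class) are assumptions made for this illustration, not part of the project code.

```python
from pathlib import Path

# Leading "magic" bytes of two common image formats.
JPEG_MAGIC = b"\xff\xd8\xff"
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"


def sniff_image_format(path):
    """Guess the real format of a file from its first 12 bytes."""
    with open(path, "rb") as f:
        head = f.read(12)
    if head.startswith(JPEG_MAGIC):
        return "jpeg"
    if head.startswith(PNG_MAGIC):
        return "png"
    # WebP files are RIFF containers with "WEBP" at byte offset 8.
    if head[:4] == b"RIFF" and head[8:12] == b"WEBP":
        return "webp"
    return "unknown"


def audit_dataset(root):
    """Count files per class folder and list files that are not JPEG/PNG."""
    counts, suspects = {}, []
    class_dirs = sorted(p for p in Path(root).iterdir() if p.is_dir())
    for class_dir in class_dirs:
        files = sorted(p for p in class_dir.rglob("*") if p.is_file())
        counts[class_dir.name] = len(files)
        suspects += [p for p in files
                     if sniff_image_format(p) not in ("jpeg", "png")]
    return counts, suspects
```

Calling audit_dataset("./train") returns the per-class counts and a list of suspect paths, which can then be inspected, converted, or removed before training.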

Download the Project Code

Before proceeding ahead, please download the project source code: Face Mask Detector Project

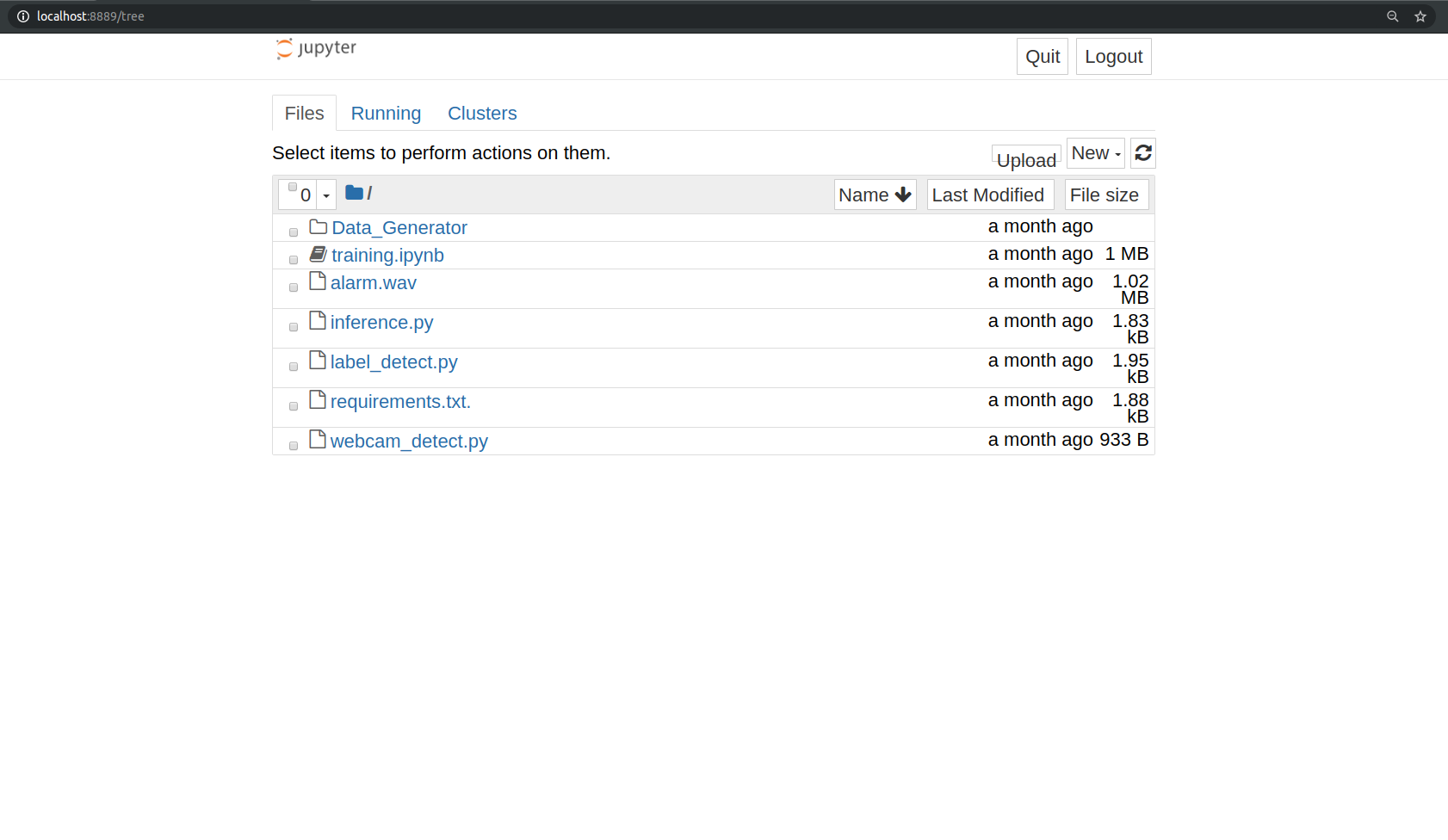

Install Jupyter Notebook

In this machine learning project for beginners, we will use Jupyter Notebook for development. Let’s go through the steps for installing and configuring Jupyter Notebook.

You can install Jupyter Notebook using the pip Python package manager:

pip3 install notebook

And that’s it, you have installed jupyter notebook

After installing Jupyter notebook you can run the notebook server. To run the notebook, open terminal and type:

jupyter notebook

It will start the notebook server at http://localhost:8888

To create a new notebook, click the “New” button in the right panel; it will generate a new .ipynb file.

Create a new file and write the code that you downloaded.

Let’s dive into the code for face mask detector project:

We are going to build this project in two parts. In the first part, we will write a Python script that uses Keras to train the face mask detector model. In the second part, we will test the model on a live webcam feed using OpenCV.

Create a Python file train.py to hold the code for training the neural network on our dataset. Follow these steps:

1. Imports:

Import all the libraries and modules required.

from keras.optimizers import RMSprop
from keras.preprocessing.image import ImageDataGenerator
import cv2
from keras.models import Sequential
from keras.layers import Conv2D, Input, ZeroPadding2D, BatchNormalization, Activation, MaxPooling2D, Flatten, Dense, Dropout
from keras.models import Model, load_model
from keras.callbacks import TensorBoard, ModelCheckpoint
from sklearn.model_selection import train_test_split
from sklearn.metrics import f1_score
from sklearn.utils import shuffle
import imutils
import numpy as np

2. Build the neural network:

This convolutional network consists of two pairs of Conv and MaxPool layers that extract features from the images. They are followed by a Flatten layer, which converts the feature maps into a 1D vector, and a Dropout layer, which reduces overfitting.

Two Dense layers then perform the classification.

model = Sequential([
    Conv2D(100, (3,3), activation='relu', input_shape=(150, 150, 3)),
    MaxPooling2D(2,2),

    Conv2D(100, (3,3), activation='relu'),
    MaxPooling2D(2,2),

    Flatten(),
    Dropout(0.5),
    Dense(50, activation='relu'),
    Dense(2, activation='softmax')
])
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['acc'])

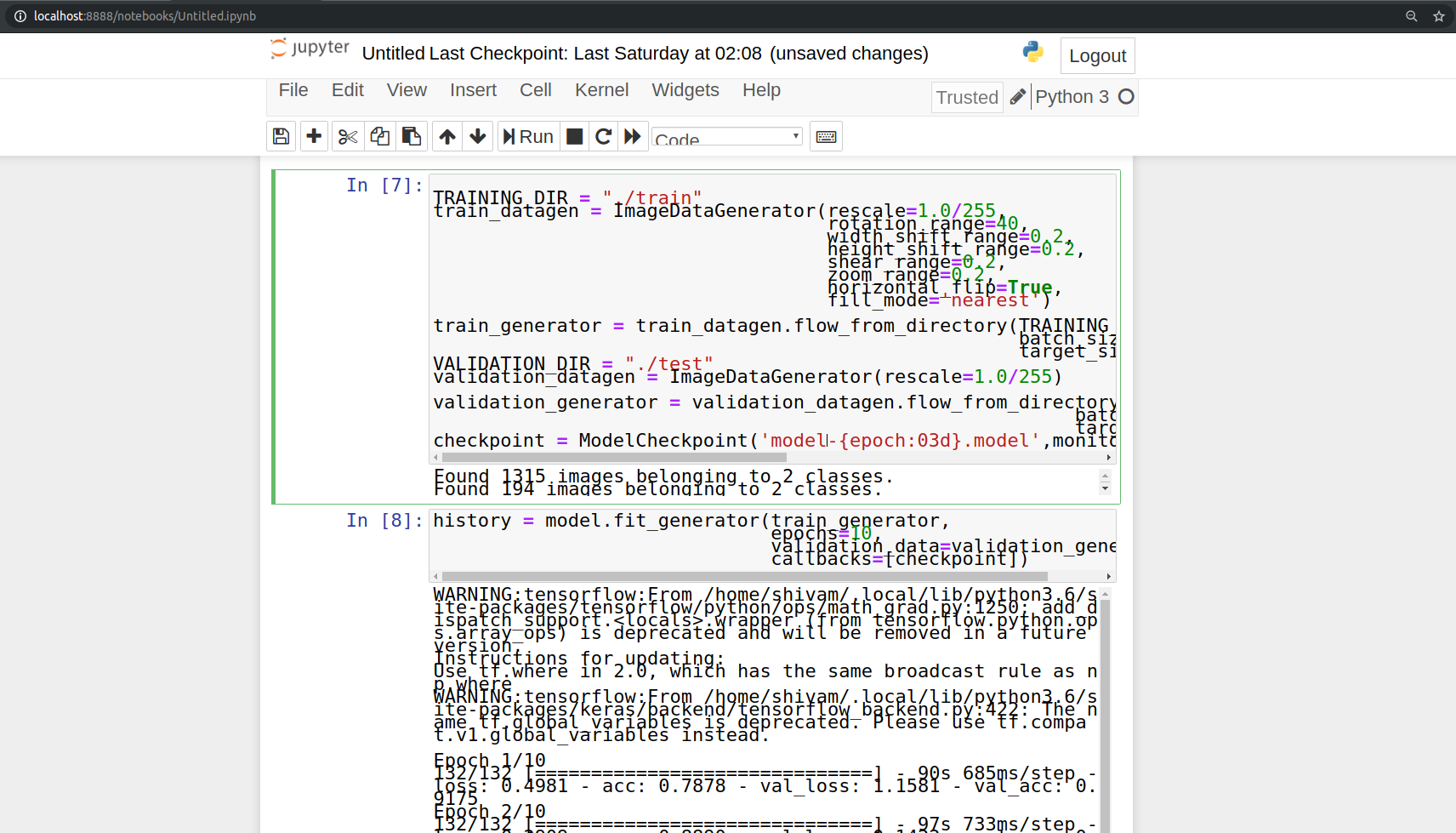
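To see where the model's size comes from, the layer shapes and parameter counts can be worked out by hand. The sketch below assumes the Keras defaults for the layers above (3x3 convolutions with 'valid' padding and stride 1, 2x2 max pooling with stride 2). The helper names are made up for this illustration.

```python
def conv_out(size, kernel=3):
    """Spatial size after a 'valid' convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Spatial size after non-overlapping max pooling (floor division)."""
    return size // pool


def conv_params(in_channels, out_channels, kernel=3):
    """Kernel weights plus one bias per output channel."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_units, out_units):
    """Weight matrix plus one bias per output unit."""
    return in_units * out_units + out_units


size = 150
size = pool_out(conv_out(size))   # 150 -> 148 -> 74
size = pool_out(conv_out(size))   # 74 -> 72 -> 36
flat = size * size * 100          # 36 * 36 * 100 features after Flatten

total = (conv_params(3, 100) + conv_params(100, 100)
         + dense_params(flat, 50) + dense_params(50, 2))
print(size, flat, total)  # 36 129600 6573052
```

Almost all of the roughly 6.6 million parameters sit in the first Dense layer, because Flatten hands it 129,600 inputs.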

3. Image Data Generation/Augmentation:

TRAINING_DIR = "./train"
train_datagen = ImageDataGenerator(rescale=1.0/255,
                                   rotation_range=40,
                                   width_shift_range=0.2,
                                   height_shift_range=0.2,
                                   shear_range=0.2,
                                   zoom_range=0.2,
                                   horizontal_flip=True,
                                   fill_mode='nearest')

train_generator = train_datagen.flow_from_directory(TRAINING_DIR,
                                                    batch_size=10,
                                                    target_size=(150, 150))

VALIDATION_DIR = "./test"
validation_datagen = ImageDataGenerator(rescale=1.0/255)

validation_generator = validation_datagen.flow_from_directory(VALIDATION_DIR,
                                                              batch_size=10,
                                                              target_size=(150, 150))
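flow_from_directory numbers the classes by sorting the subfolder names of the directory it is given, so the meaning of label 0 and label 1 depends on how the class folders are named. The sketch below reproduces that numbering with the standard library only; the function name is hypothetical, and the folder names in the usage note are assumptions rather than the dataset's confirmed layout.

```python
import os


def infer_class_indices(directory):
    """Number classes the way flow_from_directory does: sorted subfolders."""
    classes = [name for name in sorted(os.listdir(directory))
               if os.path.isdir(os.path.join(directory, name))]
    return {name: index for index, name in enumerate(classes)}
```

For a folder holding subfolders named with_mask and without_mask, this returns {'with_mask': 0, 'without_mask': 1}. It raises FileNotFoundError when the directory does not exist, which is also what happens when "./train" is not found relative to the folder the script runs from. After creating the generator, train_generator.class_indices shows the mapping Keras actually used.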

4. Initialize a checkpoint callback that saves the model after every epoch in which the validation loss improves:

checkpoint = ModelCheckpoint('model2-{epoch:03d}.model', monitor='val_loss', verbose=0, save_best_only=True, mode='auto')
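Because save_best_only=True skips epochs that do not improve val_loss, the set of checkpoints on disk can have gaps, and the last epoch may not be among them. The sketch below shows how the filename pattern expands (Keras fills in a 1-based epoch number) and how to pick the highest-numbered checkpoint that exists. Both helper names are hypothetical.

```python
import re
from pathlib import Path

PATTERN = "model2-{epoch:03d}.model"


def checkpoint_name(epoch):
    """Expand the pattern for a 1-based epoch number."""
    return PATTERN.format(epoch=epoch)


def latest_checkpoint(directory="."):
    """Return the highest-numbered model2-XXX.model entry, or None."""
    best = None
    for path in Path(directory).iterdir():
        match = re.fullmatch(r"model2-(\d+)\.model", path.name)
        if match:
            epoch = int(match.group(1))
            if best is None or epoch > best[0]:
                best = (epoch, path)
    return None if best is None else best[1]
```

The path returned by latest_checkpoint() can be passed as a string to load_model in the test script instead of a hard-coded filename.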

5. Train the model:

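# On TensorFlow 2.1 and later, fit_generator is deprecated and
# model.fit accepts the generator with the same arguments.
history = model.fit_generator(train_generator,
                              epochs=10,
                              validation_data=validation_generator,
                              callbacks=[checkpoint])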

Now we will test the face mask detector model using OpenCV.

Create a Python file “test.py” and paste in the script below.

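import cv2
import numpy as np
from keras.models import load_model

# Load a checkpoint written by train.py. Replace the name with a file that
# was actually saved, since save_best_only may skip some epochs.
model = load_model("./model2-010.model")

# These indices must match train_generator.class_indices from training
results = {0: 'without mask', 1: 'mask'}
GR_dict = {0: (0, 0, 255), 1: (0, 255, 0)}  # BGR colors: red, green

rect_size = 4  # downscale factor that speeds up face detection
cap = cv2.VideoCapture(0)

# cv2.data.haarcascades is the folder of cascade files bundled with opencv-python
haarcascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

while True:
    (rval, im) = cap.read()
    if not rval:  # no frame was returned (camera missing or in use)
        break
    im = cv2.flip(im, 1)  # mirror the frame horizontally

    rerect_size = cv2.resize(im, (im.shape[1] // rect_size, im.shape[0] // rect_size))
    faces = haarcascade.detectMultiScale(rerect_size)
    for f in faces:
        # Scale the box from the small frame back to full-frame coordinates
        (x, y, w, h) = [v * rect_size for v in f]

        face_img = im[y:y+h, x:x+w]
        # OpenCV frames are BGR, while Keras loaded the training images as RGB
        face_img = cv2.cvtColor(face_img, cv2.COLOR_BGR2RGB)
        rerect_sized = cv2.resize(face_img, (150, 150))
        normalized = rerect_sized / 255.0
        reshaped = np.reshape(normalized, (1, 150, 150, 3))

        result = model.predict(reshaped)
        label = np.argmax(result, axis=1)[0]

        cv2.rectangle(im, (x, y), (x+w, y+h), GR_dict[label], 2)
        cv2.rectangle(im, (x, y-40), (x+w, y), GR_dict[label], -1)
        cv2.putText(im, results[label], (x, y-10), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (255, 255, 255), 2)

    cv2.imshow('LIVE', im)
    key = cv2.waitKey(10)
    if key == 27:  # Esc key stops the loop
        break

cap.release()
cv2.destroyAllWindows()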
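Two small steps in the script are easy to get wrong: mapping a face box found on the downscaled frame back to the full frame, and turning the model's two output probabilities into a label. The sketch below isolates both in plain Python so they can be checked without a camera. The function names are hypothetical, and the clamping to the frame edges is an added safeguard that the script above does not perform.

```python
def scale_box(box, factor, frame_width, frame_height):
    """Map an (x, y, w, h) box from the downscaled frame to the full frame."""
    x, y, w, h = (int(v) * factor for v in box)
    # Clamp so the crop never reaches outside the frame.
    x, y = max(0, x), max(0, y)
    w = min(w, frame_width - x)
    h = min(h, frame_height - y)
    return x, y, w, h


def decode_prediction(probabilities, labels):
    """Return (label, confidence) for the highest-scoring class."""
    index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[index], probabilities[index]


labels = {0: 'without mask', 1: 'mask'}
print(scale_box((10, 20, 30, 40), 4, 640, 480))  # (40, 80, 120, 160)
print(decode_prediction([0.2, 0.8], labels))     # ('mask', 0.8)
```

Returning the confidence alongside the label makes it possible to ignore weak predictions, for example by drawing a box only above a chosen threshold.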

Run the project and observe the model performance.

python3 test.py

Summary

In this project, we developed a deep learning model that detects whether a person is wearing a mask, using Python, Keras, and OpenCV. In the first part, we trained a convolutional neural network with Keras; in the second part, we tested it on a live webcam feed with OpenCV.

This is a nice project for beginners to implement their learnings and gain expertise.

What you have used in the saved model ?? Getting error there

I need a final report/document on real time mask usage detection

Same here. Were you able to solve the error? Can you please tell how?

Check the quotes ‘model2-009.model’

UserWarning: image file could not be identified because WEBP support not installed

UnidentifiedImageError: cannot identify image file

this is happening when I’m training the model

I installed libwebp libraries restarted the system after installing, even after that I’m getting the same error

Is this error resolved

I’m having the same error. Please help

just delete the webp images from the dataset you are using.

but if you really want to do that just give this a try

pip install libwebp

There is a chance of a corrupt image in the dataset. A Python program to identify corrupt images is below:

from pathlib import Path
from PIL import Image, UnidentifiedImageError

path = Path("PATH OF DATASET").rglob("*.jpg")
for img_p in path:
    try:
        img = Image.open(img_p)
    except UnidentifiedImageError:
        print(img_p)

can anyone tell me how to upload test and train files on jupyter notebook

You can show your files direction… “c://…download/.train” , etc.

inside the program??

I’ve managed the program to run, but as soon as it runs I see the camera open notification but the camera does not open up, system says camera is in use but the camera screen does not open up. please someone help me I’ve come so far in this project with endless errors and depression. Please give me solution why my camera screen is not showing. thank you

webcam = cv2.VideoCapture(0)

check if you have 0 as the value…but also if you are using mac it will be 1

OSError: SavedModel file does not exist at: ./model-010.h5/{saved_model.pbtxt|saved_model.pb}

where is the model-010.h5 file??

i’m gettng error .

same error

just copy the file path of the place where you saved the model that u trained….

replace / with \

After 1 epochs complete it shows an error: PIL.UnidentifiedImageError: cannot identify image file

I tried to fix this problem from StackOverflow but there has no solution. can anyone help to solve this problem?

How to change the test. py program for image format? I have need this please post the answer. 🙏

why do u want to do that just import the model isntead

I am getting NO MODULE NAMED KERAS please give me solution anyone

pip install keras .

Please try to understand the basic of ML before diving into it

can I try in python instead of Jupyter?. When I tried in python. it is just open and close it. kindly help me to fix it.

managed to run the code.But it is always with Mask thought it is without in real.How to resolve

Hi, have you solved the issue? I have a similar one, it is showing always “No mask” even when wearing a mask.

run the code.But result is wrong.always with mask even no mask.How to resolve.

Hi, did you solve the issue? I have a similar one.

that means the model isn’t trained properly… get more images for your dataset and try 20 epochs. also set the adam learning rate to 0.0001 if you are feeling really patient

FileNotFoundError: [WinError 3] The system cannot find the path specified: ‘c://Users/user/Desktop/project 6th sem/face-mask-dataset/Dataset/.train’

can anyone help!

TRAINING_DIR = "./train"
train_datagen = ImageDataGenerator(rescale=1.0/255,
                                   rotation_range=40,
                                   width_shift_range=0.2,
                                   height_shift_range=0.2,
                                   shear_range=0.2,
                                   zoom_range=0.2,
                                   horizontal_flip=True,
                                   fill_mode='nearest')

train_generator = train_datagen.flow_from_directory(TRAINING_DIR,
                                                    batch_size=10,
                                                    target_size=(150, 150))

VALIDATION_DIR = "./test"
validation_datagen = ImageDataGenerator(rescale=1.0/255)

validation_generator = validation_datagen.flow_from_directory(VALIDATION_DIR,
                                                              batch_size=10,
                                                              target_size=(150, 150))

FileNotFoundError Traceback (most recent call last)

in

9 fill_mode=’nearest’)

10

—> 11 train_generator = train_datagen.flow_from_directory(TRAINING_DIR,

12 batch_size=10,

13 target_size=(150, 150))

c:\users\shubham\appdata\local\programs\python\python39\lib\site-packages\keras\preprocessing\image.py in flow_from_directory(self, directory, target_size, color_mode, classes, class_mode, batch_size, shuffle, seed, save_to_dir, save_prefix, save_format, follow_links, subset, interpolation)

953 and `y` is a numpy array of corresponding labels.

954 “””

–> 955 return DirectoryIterator(

956 directory,

957 self,

c:\users\shubham\appdata\local\programs\python\python39\lib\site-packages\keras\preprocessing\image.py in __init__(self, directory, image_data_generator, target_size, color_mode, classes, class_mode, batch_size, shuffle, seed, data_format, save_to_dir, save_prefix, save_format, follow_links, subset, interpolation, dtype)

371 dtype = backend.floatx()

372 kwargs[‘dtype’] = dtype

–> 373 super(DirectoryIterator, self).__init__(

374 directory, image_data_generator,

375 target_size=target_size,

c:\users\shubham\appdata\local\programs\python\python39\lib\site-packages\keras_preprocessing\image\directory_iterator.py in __init__(self, directory, image_data_generator, target_size, color_mode, classes, class_mode, batch_size, shuffle, seed, data_format, save_to_dir, save_prefix, save_format, follow_links, subset, interpolation, dtype)

113 if not classes:

114 classes = []

–> 115 for subdir in sorted(os.listdir(directory)):

116 if os.path.isdir(os.path.join(directory, subdir)):

117 classes.append(subdir)

FileNotFoundError: [WinError 3] The system cannot find the path specified: ‘./train’

nice, but how to notify people that they are not wearing mask.

Epoch 1/10

102/132 [======================>…….] – ETA: 25s – loss: 0.5733 – acc: 0.7665

—————————————————————————

UnknownError Traceback (most recent call last)

in

—-> 1 history = model.fit(train_generator, epochs=10, validation_data=validation_generator, callbacks=[checkpoint])

~\anaconda3\lib\site-packages\tensorflow\python\keras\engine\training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing)

1181 _r=1):

1182 callbacks.on_train_batch_begin(step)

-> 1183 tmp_logs = self.train_function(iterator)

1184 if data_handler.should_sync:

1185 context.async_wait()

~\anaconda3\lib\site-packages\tensorflow\python\eager\def_function.py in __call__(self, *args, **kwds)

887

888 with OptionalXlaContext(self._jit_compile):

–> 889 result = self._call(*args, **kwds)

890

891 new_tracing_count = self.experimental_get_tracing_count()

~\anaconda3\lib\site-packages\tensorflow\python\eager\def_function.py in _call(self, *args, **kwds)

915 # In this case we have created variables on the first call, so we run the

916 # defunned version which is guaranteed to never create variables.

–> 917 return self._stateless_fn(*args, **kwds) # pylint: disable=not-callable

918 elif self._stateful_fn is not None:

919 # Release the lock early so that multiple threads can perform the call

~\anaconda3\lib\site-packages\tensorflow\python\eager\function.py in __call__(self, *args, **kwargs)

3021 (graph_function,

3022 filtered_flat_args) = self._maybe_define_function(args, kwargs)

-> 3023 return graph_function._call_flat(

3024 filtered_flat_args, captured_inputs=graph_function.captured_inputs) # pylint: disable=protected-access

3025

~\anaconda3\lib\site-packages\tensorflow\python\eager\function.py in _call_flat(self, args, captured_inputs, cancellation_manager)

1958 and executing_eagerly):

1959 # No tape is watching; skip to running the function.

-> 1960 return self._build_call_outputs(self._inference_function.call(

1961 ctx, args, cancellation_manager=cancellation_manager))

1962 forward_backward = self._select_forward_and_backward_functions(

~\anaconda3\lib\site-packages\tensorflow\python\eager\function.py in call(self, ctx, args, cancellation_manager)

589 with _InterpolateFunctionError(self):

590 if cancellation_manager is None:

–> 591 outputs = execute.execute(

592 str(self.signature.name),

593 num_outputs=self._num_outputs,

~\anaconda3\lib\site-packages\tensorflow\python\eager\execute.py in quick_execute(op_name, num_outputs, inputs, attrs, ctx, name)

57 try:

58 ctx.ensure_initialized()

—> 59 tensors = pywrap_tfe.TFE_Py_Execute(ctx._handle, device_name, op_name,

60 inputs, attrs, num_outputs)

61 except core._NotOkStatusException as e:

UnknownError: UnidentifiedImageError: cannot identify image file

Traceback (most recent call last):

File “C:\Users\tam05\anaconda3\lib\site-packages\tensorflow\python\ops\script_ops.py”, line 249, in __call__

ret = func(*args)

File “C:\Users\tam05\anaconda3\lib\site-packages\tensorflow\python\autograph\impl\api.py”, line 645, in wrapper

return func(*args, **kwargs)

File “C:\Users\tam05\anaconda3\lib\site-packages\tensorflow\python\data\ops\dataset_ops.py”, line 961, in generator_py_func

values = next(generator_state.get_iterator(iterator_id))

File “C:\Users\tam05\anaconda3\lib\site-packages\tensorflow\python\keras\engine\data_adapter.py”, line 837, in wrapped_generator

for data in generator_fn():

File “C:\Users\tam05\anaconda3\lib\site-packages\tensorflow\python\keras\engine\data_adapter.py”, line 963, in generator_fn

yield x[i]

File “C:\Users\tam05\anaconda3\lib\site-packages\keras_preprocessing\image\iterator.py”, line 65, in __getitem__

return self._get_batches_of_transformed_samples(index_array)

File “C:\Users\tam05\anaconda3\lib\site-packages\keras_preprocessing\image\iterator.py”, line 227, in _get_batches_of_transformed_samples

img = load_img(filepaths[j],

File “C:\Users\tam05\anaconda3\lib\site-packages\keras_preprocessing\image\utils.py”, line 114, in load_img

img = pil_image.open(io.BytesIO(f.read()))

File “C:\Users\tam05\anaconda3\lib\site-packages\PIL\Image.py”, line 2967, in open

raise UnidentifiedImageError(

PIL.UnidentifiedImageError: cannot identify image file

[[{{node PyFunc}}]]

[[IteratorGetNext]] [Op:__inference_train_function_850]

Function call stack:

train_function

for this line is showing rerect_size = cv2.rerect_size(im, (im.shape[1] // rect_size, im.shape[0] // rect_size))

# Resize the image to speed up detection

—> 21 mini = cv2.resize(im, (im.shape[1] // size, im.shape[0] // size))

22

23 # detect MultiScale / faces

AttributeError: ‘NoneType’ object has no attribute ‘shape’

can you help me to solve?

I am facing the same issue?

Can you tell me how you resolved this issue please?

Did u directly download and run the code or typed the code in Jupyter?

I am facing the same problem, I downloaded and copy pasted the code on Colab and dataset + XML file is linked via Drive perfectly but still ‘Nonetype’ object has no attribute ‘shape’ is coming up as error. Can You Help?

Which Algorithm you have used???

hello there, nice tutorial, but my train.py only generates .model files. it just generated model2-001.model, model2-002.model, model2-004.model, model2-006.model and model2-007.model, but it does not generate any model-010.h5, and it also does not generate any .xml file like /home/pi/.local/lib/python3.6/site-packages/cv2/data/haarcascade_frontalface_default.xml (“pi” is my username). can you please clarify why this happens? what tool do I need to create the .h5 model?

Thanks in advance!

re-run the train file you’ll get the models…..otherwise go with the 007 models

and about the xml file…download it online and specify the path

UnknownError: UnidentifiedImageError: cannot identify image file

Hi, working this on Colab, can someone please help me out and tell me that in the Test section, classifier = cv2.CascadeClassifier command is used to locate the XML file, where is this file supposed to be and how should this work?

is there a proper fixed code? Please share.

Fixed the XML file but having an error while resizing the image right after that.

I get an error ‘Incompatible shapes: [10,2] vs. [10,3]; during the 1st epoch. Please Help!

I get this error during the 1st epoch ‘Incompatible shapes: [10,2] vs. [10,3]’.Help!

Sir can you kindly add that if the person is not wearing mask, then his or her picture will be captured and stored as well.

“ValueError: logits and labels must have the same shape ((None, 2) vs (None, 1))”

this is the error I get when I try to use the softmax activation function with two outputs

error: OpenCV(4.5.5) D:\a\opencv-python\opencv-python\opencv\modules\objdetect\src\cascadedetect.cpp:1689: error: (-215:Assertion failed) !empty() in function ‘cv::CascadeClassifier::detectMultiScale’

I’m getting this error in the last step, what should I do…

check to make sure the path to your cascade classifier is correctly specified