Spark Stage- An Introduction to Physical Execution plan

A stage is nothing but a step in a physical execution plan. It is basically a physical unit of the execution plan. This blog aims at explaining the whole concept of Apache Spark Stage. It covers the types of Stages in Spark which are of two types: ShuffleMapstage in Spark and ResultStage in spark. Also, it will cover the details of the method to create Spark Stage. However, before exploring this blog, you should have a basic understanding of Apache Spark so that you can relate with the concepts well.

What are Stages in Spark?

What are Stages in Spark?

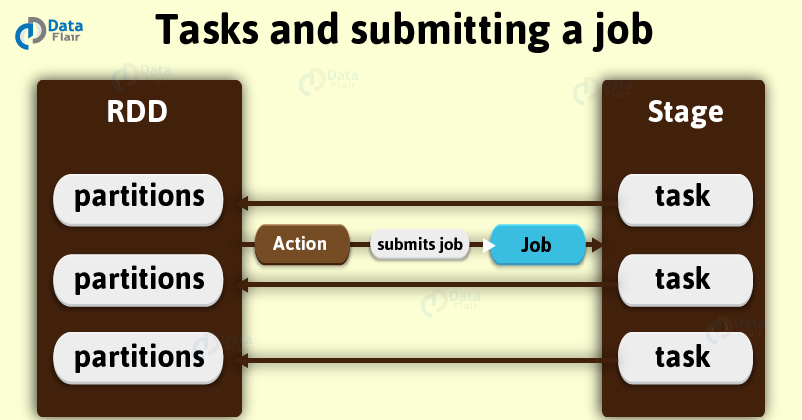

A stage is nothing but a step in a physical execution plan. It is a physical unit of the execution plan. It is a set of parallel tasks i.e. one task per partition. In other words, each job which gets divided into smaller sets of tasks is a stage. Although, it totally depends on each other. However, we can say it is as same as the map and reduce stages in MapReduce.

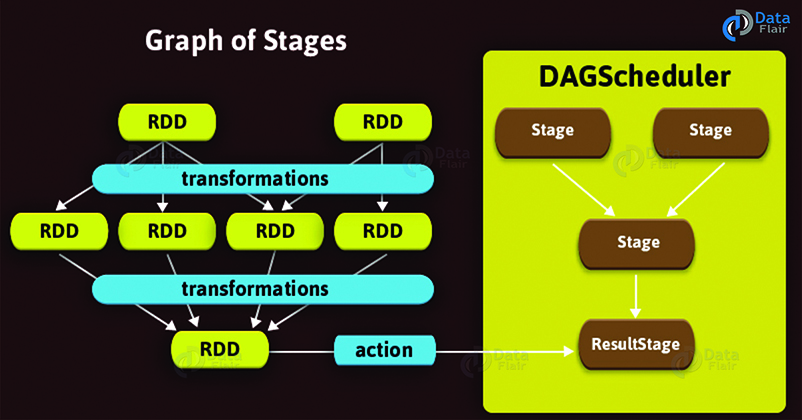

We can associate the spark stage with many other dependent parent stages. However, it can only work on the partitions of a single RDD. Also, with the boundary of a stage in spark marked by shuffle dependencies.

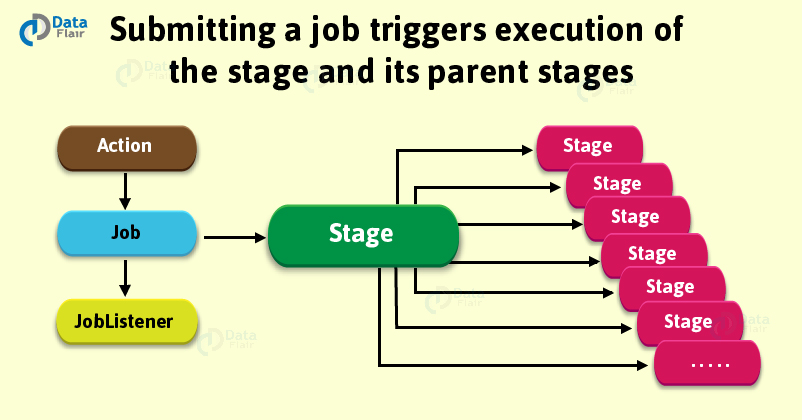

Ultimately, submission of Spark stage triggers the execution of a series of dependent parent stages. Although, there is a first Job Id present at every stage that is the id of the job which submits stage in Spark.

Types of Spark Stages

Stages in Apache spark have two categories

1. ShuffleMapStage in Spark

2. ResultStage in Spark

Let’s discuss each type of Spark Stages in detail:

1. ShuffleMapStage in Spark

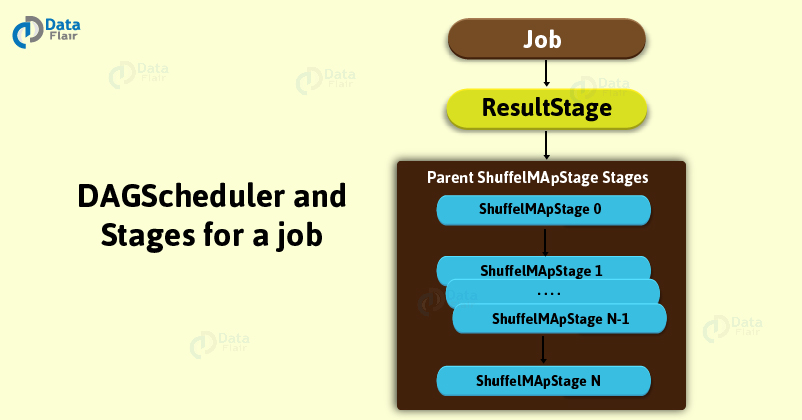

ShuffleMapStage is considered as an intermediate Spark stage in the physical execution of DAG. It produces data for another stage(s). In a job in Adaptive Query Planning / Adaptive Scheduling, we can consider it as the final stage in Apache Spark and it is possible to submit it independently as a Spark job for Adaptive Query Planning.

In addition, at the time of execution, a Spark ShuffleMapStage saves map output files. We can fetch those files by reduce tasks. When all map outputs are available, the ShuffleMapStage is considered ready. Although, output locations can be missing sometimes. Two things we can infer from this scenario. Those are partitions might not be calculated or are lost. However, we can track how many shuffle map outputs available. To track this, stages uses outputLocs &_numAvailableOutputs internal registries.

We consider ShuffleMapStage in Spark as an input for other following Spark stages in the DAG of stages. Basically, that is shuffle dependency’s map side. It is possible that there are various multiple pipeline operations in ShuffleMapStage like map and filter, before shuffle operation. We can share a single ShuffleMapStage among different jobs.

2. ResultStage in Spark

By running a function on a spark RDD Stage that executes a Spark action in a user program is a ResultStage. It is considered as a final stage in spark. ResultStage implies as a final stage in a job that applies a function on one or many partitions of the target RDD in Spark. It also helps for computation of the result of an action.

Let’s discuss: Spark real-time use cases

Getting StageInfo For Most Recent Attempt

There is one more method, latestInfo method which helps to know the most recent StageInfo.`

latestInfo: StageInfo

Stage Contract

It is a private[scheduler] abstract contract.

abstract class Stage {

def findMissingPartitions(): Seq[Int]

}

Method To Create New Apache Spark Stage

There is a basic method by which we can create a new stage in Spark. The method is:

makeNewStageAttempt(

numPartitionsToCompute: Int,

taskLocalityPreferences: Seq[Seq[TaskLocation]] = Seq.empty): Unit

Basically, it creates a new TaskMetrics. With the help of RDD’s SparkContext, we register the internal accumulators. We can also use the same Spark RDD that was defined when we were creating Stage.

In addition, to set latestInfo to be a StageInfo, from Stage we can use the following: nextAttemptId, numPartitionsToCompute, & taskLocalityPreferences, increments nextAttemptId counter.

The very important thing to note is that we use this method only when DAGScheduler submits missing tasks for a Spark stage.

Let’s revise: Data Type Mapping between R and Spark

Summary

In this blog, we have studied the whole concept of Apache Spark Stages in detail and so now, it’s time to test yourself with Spark Quiz and know where you stand.

Hope, this blog helped to calm the curiosity about Stage in Spark. Still, if you have any query, ask in the comment section below.

Your 15 seconds will encourage us to work even harder

Please share your happy experience on Google