Ways to Create SparkDataFrames in SparkR

1. Objective

Today, in this SparkDataFrames tutorial, we will learn how to create DataFrames in SparkR. In SparkR, a distributed collection of data organized into named columns is called a SparkDataFrame, and there are several ways to create one.

So, let's start the SparkDataFrames tutorial.

2. What is a SparkDataFrame?

A SparkDataFrame is a distributed collection of data organized into named columns. Conceptually, it is equivalent to a table in a relational database or a data frame in R. We can construct a SparkDataFrame from a wide array of sources, such as structured data files, tables in Hive, external databases, or existing local R data frames.

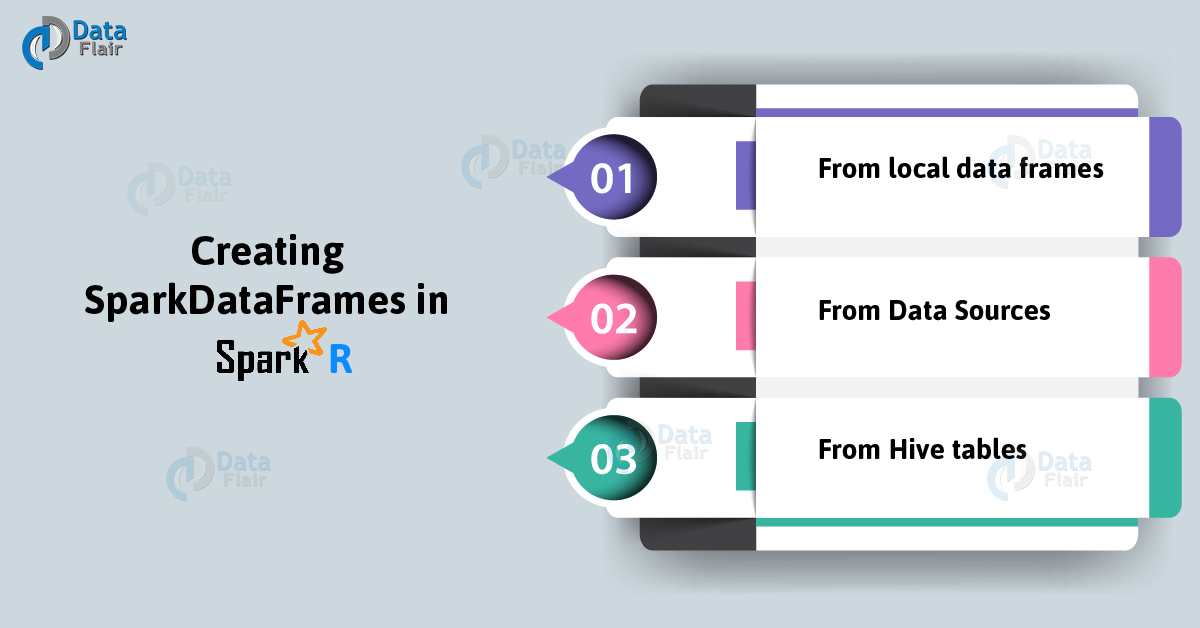

3. Ways to Create SparkDataFrames

Applications create SparkDataFrames through a SparkSession. With a SparkSession, we can create a SparkDataFrame from a local R data frame, from a Hive table, or from other data sources. Let's discuss each of these briefly.
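Every method below assumes an active SparkSession. The following is a minimal sketch of starting one from an R script. It assumes SparkR is already on the R library path (for example, from $SPARK_HOME/R/lib). The master URL, application name and spark.sql.shuffle.partitions value are illustrative choices, not requirements.

```r
library(SparkR)

# Start (or reuse) a SparkSession. "local[*]" runs Spark on this machine using
# all cores; the app name and the config value are illustrative assumptions.
sparkR.session(master = "local[*]",
               appName = "sparkdataframes-tutorial",
               sparkConfig = list(spark.sql.shuffle.partitions = "4"))

# sparkR.conf() reads a runtime setting back from the active session as a named list
shuffle_parts <- sparkR.conf("spark.sql.shuffle.partitions")[[1]]
print(shuffle_parts)

# Stop the session when finished to release the JVM resources
sparkR.session.stop()
```

In an interactive sparkR shell a session already exists, so calling sparkR.session() there simply returns it.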

a. From local data frames

The simplest way to create a SparkDataFrame is to convert a local R data frame into one. To do this, pass the local R data frame to as.DataFrame or createDataFrame.

For example,

df <- as.DataFrame(faithful)

# Displays the first part of the SparkDataFrame
head(df)
##  eruptions waiting
##1     3.600      79
##2     1.800      54
##3     3.333      74
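The conversion works in both directions. The sketch below assumes a local session and a small made-up data frame. It converts a local data.frame with createDataFrame, lets Spark count the rows, and then uses collect to bring the distributed data back as an ordinary R data.frame.

```r
library(SparkR)
sparkR.session(master = "local[*]", appName = "local-df-example")

# A small local R data frame (hypothetical sample data)
fruit_df <- data.frame(id = 1:3,
                       fruit = c("apple", "banana", "cherry"),
                       stringsAsFactors = FALSE)

# createDataFrame() and as.DataFrame() both turn a local data.frame
# into a distributed SparkDataFrame
sdf <- createDataFrame(fruit_df)
printSchema(sdf)

# count() runs as a Spark job; the built-in faithful dataset works the same way
n_fruit <- count(sdf)
n_faithful <- count(as.DataFrame(faithful))

# collect() brings the rows back to the driver as a plain R data.frame,
# so call it only on results small enough to fit in local memory
round_trip <- collect(sdf)
print(round_trip)

sparkR.session.stop()
```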

b. From Data Sources

SparkR supports operating on a variety of data sources through the SparkDataFrame interface. The general method for creating SparkDataFrames from data sources is read.df. This method takes the path of the file to load and the type of data source, and it automatically uses the currently active SparkSession. SparkR natively supports reading JSON, CSV and Parquet files. Connectors for other formats, such as Avro, are available as packages.

These packages can be added in two ways: by specifying --packages with the spark-submit or sparkR commands, or by initializing the SparkSession with the sparkPackages parameter when working in an interactive R shell or from RStudio.

sparkR.session(sparkPackages = "com.databricks:spark-avro_2.11:3.0.0")

Now let's see how to use a data source, using a JSON input file as an example. Note that the file used here is not a typical JSON file: each line in the file must contain a separate, self-contained, valid JSON object.

people <- read.df("./examples/src/main/resources/people.json", "json")
head(people)
##  age    name
##1  NA Michael
##2  30    Andy
##3  19  Justin

# SparkR automatically infers the schema from the JSON file
printSchema(people)
# root
#  |-- age: long (nullable = true)
#  |-- name: string (nullable = true)

# Similarly, multiple files can be read with read.json
people <- read.json(c("./examples/src/main/resources/people.json", "./examples/src/main/resources/people2.json"))
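To try the JSON reader without the Spark examples directory, the sketch below writes two small JSON Lines files to temporary paths. The records are hypothetical and modeled on people.json. The sketch then reads both files together with read.json and filters the result with a column expression.

```r
library(SparkR)
sparkR.session(master = "local[*]", appName = "json-example")

# Two hypothetical JSON Lines files: one complete JSON object per line
file1 <- tempfile(fileext = ".json")
file2 <- tempfile(fileext = ".json")
writeLines(c('{"name":"Michael"}',
             '{"name":"Andy", "age":30}',
             '{"name":"Justin", "age":19}'), file1)
writeLines('{"name":"Maria", "age":25}', file2)

# read.json() accepts a vector of paths and infers one schema across them
people <- read.json(c(file1, file2))
printSchema(people)
total <- count(people)

# A missing "age" key becomes null; a comparison with null drops that row
over_20 <- collect(filter(people, people$age > 20))
print(over_20)

sparkR.session.stop()
```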

The data sources API natively supports CSV input files. For more information, refer to the SparkR read.df API documentation.

df <- read.df(csvPath, "csv", header = "true", inferSchema = "true", na.strings = "NA")

In addition, we can use the data sources API to save SparkDataFrames in multiple file formats.

For example, we can save the SparkDataFrame from the previous example to a Parquet file using write.df.

write.df(people, path = "people.parquet", source = "parquet", mode = "overwrite")
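The csvPath variable above is never defined, so here is a self-contained sketch of the whole read-and-save flow. It writes a small CSV file with hypothetical rows to a temporary path and reads it with the same read.df options. It then saves the result as Parquet with write.df and reads the Parquet output back with read.parquet. With inferSchema = "true", Spark scans the data to choose column types, and na.strings = "NA" makes the literal text NA load as null. The temporary paths and sample data are assumptions for illustration only.

```r
library(SparkR)
sparkR.session(master = "local[*]", appName = "csv-parquet-example")

# Hypothetical CSV input: a header row plus an "NA" placeholder for a missing age
csv_file <- tempfile(fileext = ".csv")
writeLines(c("name,age",
             "Andy,30",
             "Justin,19",
             "Michael,NA"), csv_file)

# header: first line holds column names; inferSchema: detect numeric columns;
# na.strings: text to be read as null
csv_df <- read.df(csv_file, "csv", header = "true", inferSchema = "true",
                  na.strings = "NA")
printSchema(csv_df)

# Parquet output is a directory of part files; "overwrite" replaces earlier runs
out_dir <- file.path(tempdir(), "people.parquet")
write.df(csv_df, path = out_dir, source = "parquet", mode = "overwrite")

# Parquet stores the schema with the data, so nothing is re-inferred on the way back
# (read.df(out_dir, "parquet") would work as well)
restored <- collect(read.parquet(out_dir))
print(restored)

sparkR.session.stop()
```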

c. From Hive tables

We can also create SparkDataFrames from Hive tables. To do this, we need a SparkSession with Hive support, which can access tables in the Hive MetaStore. Note that Spark must have been built with Hive support. By default, SparkR attempts to create a SparkSession with Hive support enabled (enableHiveSupport = TRUE).

sparkR.session()

For example,

sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING)")
sql("LOAD DATA LOCAL INPATH 'examples/src/main/resources/kv1.txt' INTO TABLE src")

# Queries can be expressed in HiveQL.
results <- sql("FROM src SELECT key, value")

# results is now a SparkDataFrame
head(results)
##   key   value
## 1 238 val_238
## 2  86  val_86
## 3 311 val_311
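If your Spark build lacks Hive support, you can try the same sql() pattern against a temporary view instead of a Hive table. This swaps in a different technique: createOrReplaceTempView registers any SparkDataFrame under a name for the current session only, with no Hive MetaStore involved. The view name and sample rows below are hypothetical, borrowed from the kv1.txt output shown above.

```r
library(SparkR)
# Hive support is not needed for session-scoped temporary views
sparkR.session(master = "local[*]", appName = "temp-view-example",
               enableHiveSupport = FALSE)

# Hypothetical rows modeled on the kv1.txt sample
kv <- createDataFrame(data.frame(key = c(238L, 86L, 311L),
                                 value = c("val_238", "val_86", "val_311"),
                                 stringsAsFactors = FALSE))

# Register the SparkDataFrame under the name "src_view" for this session
createOrReplaceTempView(kv, "src_view")

# sql() returns a SparkDataFrame, just as the Hive query does
results <- sql("SELECT key, value FROM src_view WHERE key > 100")
results_local <- collect(results)
print(results_local)

sparkR.session.stop()
```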

4. Conclusion

Hence, we have seen the different ways to construct a SparkDataFrame in SparkR: from a local R data frame, from data sources, and from a Hive table. If any questions arise, feel free to ask in the comment section.
