Abandoned Object Detection in Video Surveillance using OpenCV


Automated video surveillance systems have attracted considerable interest for monitoring public and private places. Over the last few years, abandoned object detection has become a hot topic in the video surveillance community. An Abandoned Object Detection (AOD) system analyzes the moving objects in a scene and identifies objects that become stationary; under certain conditions, such a stationary object is considered abandoned.

AOD is a complex problem and still an active research area, because detection quality depends on lighting changes, the density of moving objects, camera picture quality, and similar factors.

Applications of AOD:

- Video surveillance

- Illegal parking detection.

- Suspicious object detection.

In this project, we’re going to build an abandoned object detection system using OpenCV and Python.

OpenCV is an open-source computer vision and image processing library with Python bindings. It is very popular because it is fast enough for real-time use and contains more than 2500 optimized algorithms.

As we have seen, this is a complex problem. So how are we going to solve it?

First, we’ll take a static picture of the scene that doesn’t contain any suspicious or moving objects. Then we’ll compute the difference between this static picture and each frame of the video. After some filtering, any remaining difference is treated as an extra object. We then keep track of the position and state of every such object from frame to frame. If the object is moving, nothing happens; but if it stays in the same place for a large number of frames, it is considered an abandoned (suspicious) object.

Prerequisites:

- Python 3.x (we used Python 3.7.10)

- OpenCV – 4.5.3

- NumPy – 1.19.3

Download Abandoned Object Detection Project Code

Please download the source code of abandoned object detection with opencv: Abandoned Object Detection Project Code

For this project, we’ll create two different programs. The first program (the tracker, saved as tracker.py so that the main program can import it) keeps track of all objects and finds the abandoned ones. The second program (the main program) processes the video frames.

So let’s start with program-1

Tracker:

# DataFlair Abandoned object Tracker
import math


class ObjectTracker:
    def __init__(self):
        # Store the center positions of the objects
        self.center_points = {}
        # Keep the count of the IDs
        # each time a new object is detected, the count will increase by one
        self.id_count = 0
        # Per-object counter of frames in which the object stayed still
        self.abandoned_temp = {}

    def update(self, objects_rect):
        # Objects boxes and ids
        objects_bbs_ids = []
        abandoned_object = []

- First, we import the math module, which we need to compute distances.

- Then we create a class named ObjectTracker with a method named update. This method keeps track of all objects and updates the current state of each detected object.

- The update method takes a list containing the bounding box (x, y, width, height) of every detected object. We’ll see how to get these boxes in program 2.

- center_points is a dictionary that stores the center point of every tracked object in the frame, keyed by object id.

- abandoned_temp stores, for each tracked object, a count of the frames in which it has stayed still. It is used later to verify that the object is abandoned.

- id_count holds the next unused object id. For every newly detected object, id_count increases by 1.

        # Get center point of new object
        for rect in objects_rect:
            x, y, w, h = rect
            cx = (x + x + w) / 2
            cy = (y + y + h) / 2

- Here we calculate the center point for each input object.
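As a quick check of the formula, the center computation can be pulled out into a small helper. This is only a sketch: the name box_center is ours and does not appear in the project code.

```python
def box_center(rect):
    """Return the (cx, cy) center of an (x, y, w, h) bounding box."""
    x, y, w, h = rect
    # (x + x + w) / 2 is the midpoint between the left edge x and the right edge x + w
    cx = (x + x + w) / 2
    cy = (y + y + h) / 2
    return cx, cy


# A 30x40 box whose top-left corner is at (10, 20)
print(box_center((10, 20, 30, 40)))  # (25.0, 40.0)
```

Because of the true division, the center coordinates are floats, which is fine for the distance computation that follows.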

# Find out if that object was detected already

same_object_detected = False

for id, pt in self.center_points.items():

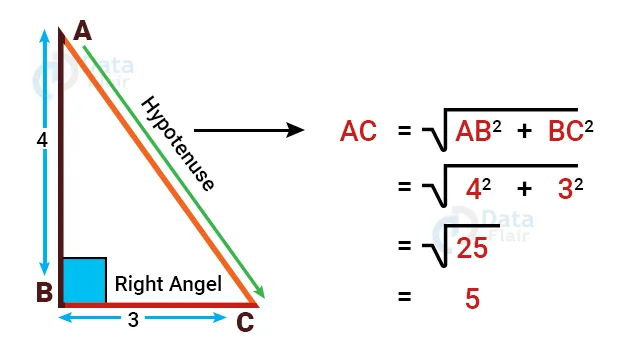

distance = math.hypot(cx - pt[0], cy - pt[1])

if distance < 25:

self.center_points[id] = (cx, cy)

objects_bbs_ids.append([x, y, w, h, id, distance])

same_object_detected = True

- Now we calculate the distance between each new object’s center point and the center points of the objects that are already tracked. If the distance is less than a threshold (25 pixels here), the detection is treated as the same object, and we add it to the objects_bbs_ids list.

- math.hypot(dx, dy) returns the length of the hypotenuse of a right triangle with legs dx and dy, which is the Euclidean distance between the two points.


- After that, we update the center point position for that object.
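The matching step can be tried on its own with a few hand-picked points. The sketch below is our own illustration: match_existing and its max_distance parameter are hypothetical names, and, like the loop in the tracker, it returns the first tracked object within the threshold rather than the nearest one.

```python
import math


def match_existing(center, center_points, max_distance=25):
    """Return (object_id, distance) for the first tracked object closer than
    max_distance to center, or (None, None) if there is no such object."""
    cx, cy = center
    for object_id, (px, py) in center_points.items():
        distance = math.hypot(cx - px, cy - py)
        if distance < max_distance:
            return object_id, distance
    return None, None


tracked = {0: (100.0, 100.0), 1: (300.0, 50.0)}

print(match_existing((103.0, 104.0), tracked))  # (0, 5.0)
print(match_existing((200.0, 200.0), tracked))  # (None, None)
```

The second point is far from both tracked centers, so the tracker would register it as a new object.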

                    if id in self.abandoned_temp:
                        if distance < 1:
                            if self.abandoned_temp[id] > 100:
                                abandoned_object.append([id, x, y, w, h, distance])
                            else:
                                self.abandoned_temp[id] += 1  # Increase count for the object
                    break

            # If new object is detected then assign the ID to that object
            if same_object_detected is False:
                self.center_points[self.id_count] = (cx, cy)
                self.abandoned_temp[self.id_count] = 1
                objects_bbs_ids.append([x, y, w, h, self.id_count, None])
                self.id_count += 1

- We know that an abandoned object is a stationary object. So for a matched object we check whether its center has moved less than 1 pixel since the previous frame; if so, we increase its counter in abandoned_temp.

- Once the counter exceeds 100, that is, once the object has stayed still for more than 100 frames, we add it to the abandoned_object list instead of increasing the counter further.

- If the detection does not match any tracked object, we add it to both dictionaries under a new unique id and increase id_count by 1.
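To see how long an object has to stay still before it is flagged, the counter logic can be simulated in isolation. This is a minimal sketch under our own naming: is_abandoned, still_distance and min_count are hypothetical, with defaults taken from the values 1 and 100 used in the tracker.

```python
def is_abandoned(counts, object_id, distance, still_distance=1, min_count=100):
    """Update the stillness counter of object_id and report whether the
    object should now be flagged as abandoned."""
    if object_id not in counts:
        counts[object_id] = 1  # first sighting, as in the tracker
        return False
    if distance < still_distance:
        if counts[object_id] > min_count:
            return True
        counts[object_id] += 1
    # Like the tracker, the counter is not reset when the object moves
    return False


counts = {}
is_abandoned(counts, 0, None)  # frame 1: the object appears

first_flagged = None
for frame_number in range(2, 200):
    if is_abandoned(counts, 0, 0.0):  # the object does not move at all
        first_flagged = frame_number
        break

print(first_flagged)  # 102
```

With these values the object is flagged on the 102nd frame in which it is seen, so the delay in seconds depends on the frame rate of the video.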

        # Clean the dictionary by center points to remove IDS not used anymore
        new_center_points = {}
        abandoned_temp_2 = {}
        for obj_bb_id in objects_bbs_ids:
            _, _, _, _, object_id, _ = obj_bb_id
            center = self.center_points[object_id]
            new_center_points[object_id] = center

            if object_id in self.abandoned_temp:
                counts = self.abandoned_temp[object_id]
                abandoned_temp_2[object_id] = counts

        # Update dictionary with IDs not used removed
        self.center_points = new_center_points.copy()
        self.abandoned_temp = abandoned_temp_2.copy()
        return objects_bbs_ids, abandoned_object

- Here we remove every object that is no longer in the frame, that is, every id that did not match a detection in the current frame.

- Finally, we return the tracked objects list and abandoned objects list.
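The cleanup step amounts to keeping only the ids seen in the current frame. A compact way to express the same idea, shown here as a sketch with a hypothetical helper named prune and made-up sample values, is a dictionary comprehension:

```python
def prune(table, active_ids):
    """Return a copy of table that keeps only the keys listed in active_ids."""
    return {object_id: value for object_id, value in table.items()
            if object_id in active_ids}


center_points = {0: (25.0, 40.0), 1: (300.0, 50.0), 2: (80.0, 90.0)}
abandoned_temp = {0: 57, 1: 3, 2: 101}

# Only objects 0 and 2 were matched in the current frame
active_ids = {0, 2}

center_points = prune(center_points, active_ids)
abandoned_temp = prune(abandoned_temp, active_ids)

print(center_points)   # {0: (25.0, 40.0), 2: (80.0, 90.0)}
print(abandoned_temp)  # {0: 57, 2: 101}
```

One consequence of this cleanup, in the tracker as written, is that an object missed in even a single frame loses its counter and starts again under a new id.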

Our tracker program is done. Now let’s see how the main program works.

Steps:

1. Import necessary packages.

2. Preprocess the first frame.

3. Read frames from the video file.

4. Find objects in the current frame.

5. Detect abandoned objects in the frame.

Step 1 – Import necessary packages:

# DataFlair Abandoned Object Detection
import numpy as np
import cv2
from tracker import *

- First, import all the necessary packages.

# Initialize Tracker
tracker = ObjectTracker()

- Create a tracker object using ObjectTracker() class that we’ve created in the tracker program.

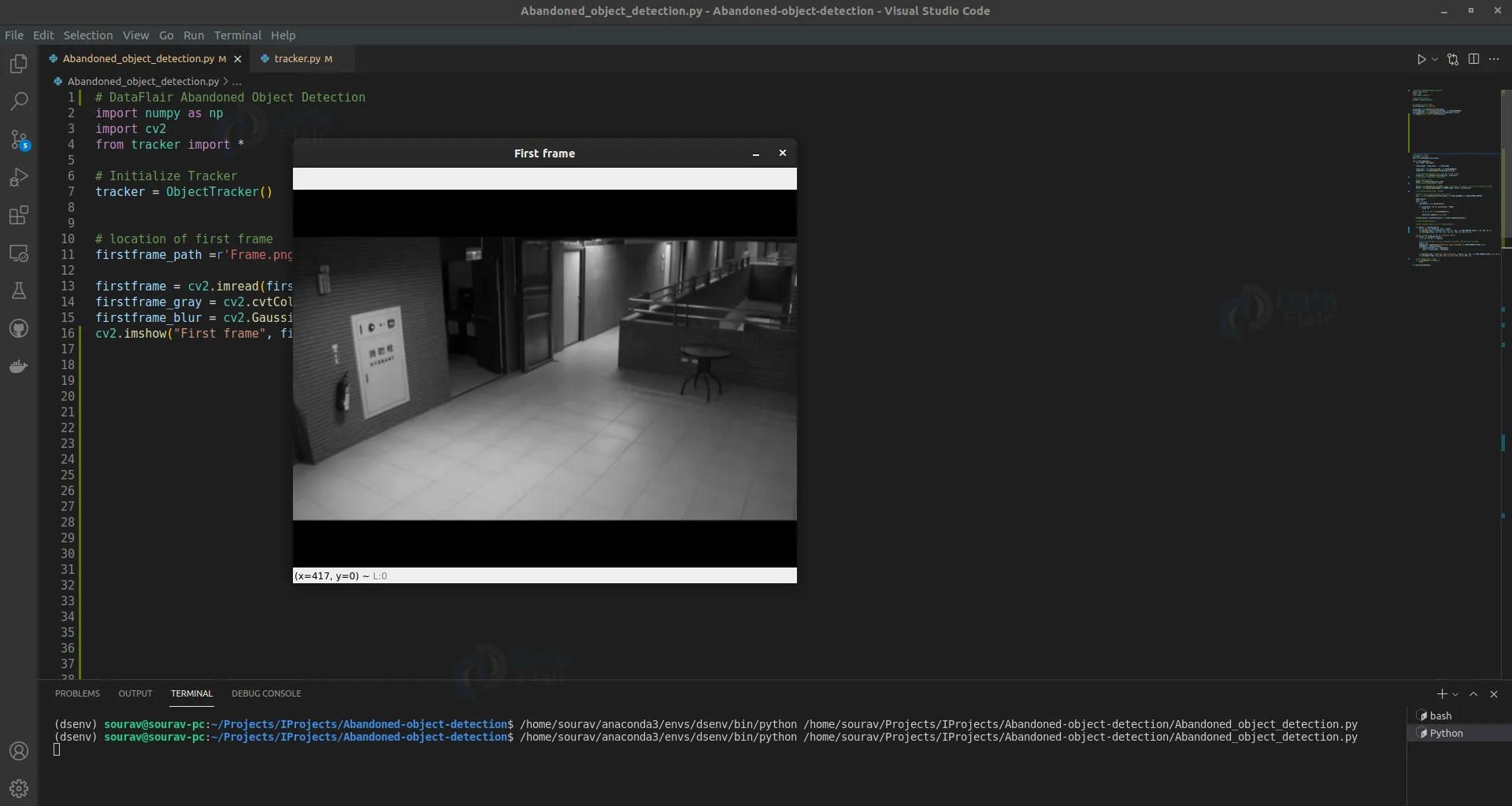

Step 2 – Preprocess the first frame:

# location of first frame
firstframe_path = r'Frame.png'

firstframe = cv2.imread(firstframe_path)
firstframe_gray = cv2.cvtColor(firstframe, cv2.COLOR_BGR2GRAY)
firstframe_blur = cv2.GaussianBlur(firstframe_gray, (3, 3), 0)
cv2.imshow("First frame", firstframe_blur)

- Using cv2.imread() we read the first frame from local storage.

- Then we convert the image to a grayscale image using cv2.cvtColor(). OpenCV reads an image in BGR format, that’s why we’ve used cv2.COLOR_BGR2GRAY to convert BGR frame to grayscale.

- After that, the image is blurred using the cv2.GaussianBlur() function with a 3x3 kernel. Blurring helps to remove some noise from the image.

Step 3 – Read frames from the video file:

# location of video
file_path = 'cut.mp4'
cap = cv2.VideoCapture(file_path)

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        # No frame returned: end of the video or a read error
        break
    frame_height, frame_width, _ = frame.shape

- To read the video file, we’ve used cv2.VideoCapture(). It creates a capture object.

- cap.isOpened() checks if the capture object is open or not.

- Then, using cap.read(), we read one frame from the video file in each iteration of the loop. It also returns a flag, ret, which is False when no frame could be read.
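When the video runs out of frames, cap.read() returns False and no frame, so the loop should stop before touching the frame. One way to package this, shown as a sketch, is a small generator. The names read_frames and FakeCapture are ours; FakeCapture is only a stand-in so the example runs without a video file, and in the real program you would pass a cv2.VideoCapture object instead.

```python
def read_frames(cap):
    """Yield frames from a capture object until it runs out of frames."""
    try:
        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:  # end of the video or a read error
                break
            yield frame
    finally:
        cap.release()


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture, used only for this demo."""

    def __init__(self, frames):
        self._frames = list(frames)
        self.released = False

    def isOpened(self):
        return not self.released

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None

    def release(self):
        self.released = True


cap = FakeCapture(["frame-1", "frame-2", "frame-3"])
frames = list(read_frames(cap))
print(frames)        # ['frame-1', 'frame-2', 'frame-3']
print(cap.released)  # True
```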

    frame_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    frame_blur = cv2.GaussianBlur(frame_gray, (3, 3), 0)

- After that, we again preprocess the current frame using the same method.

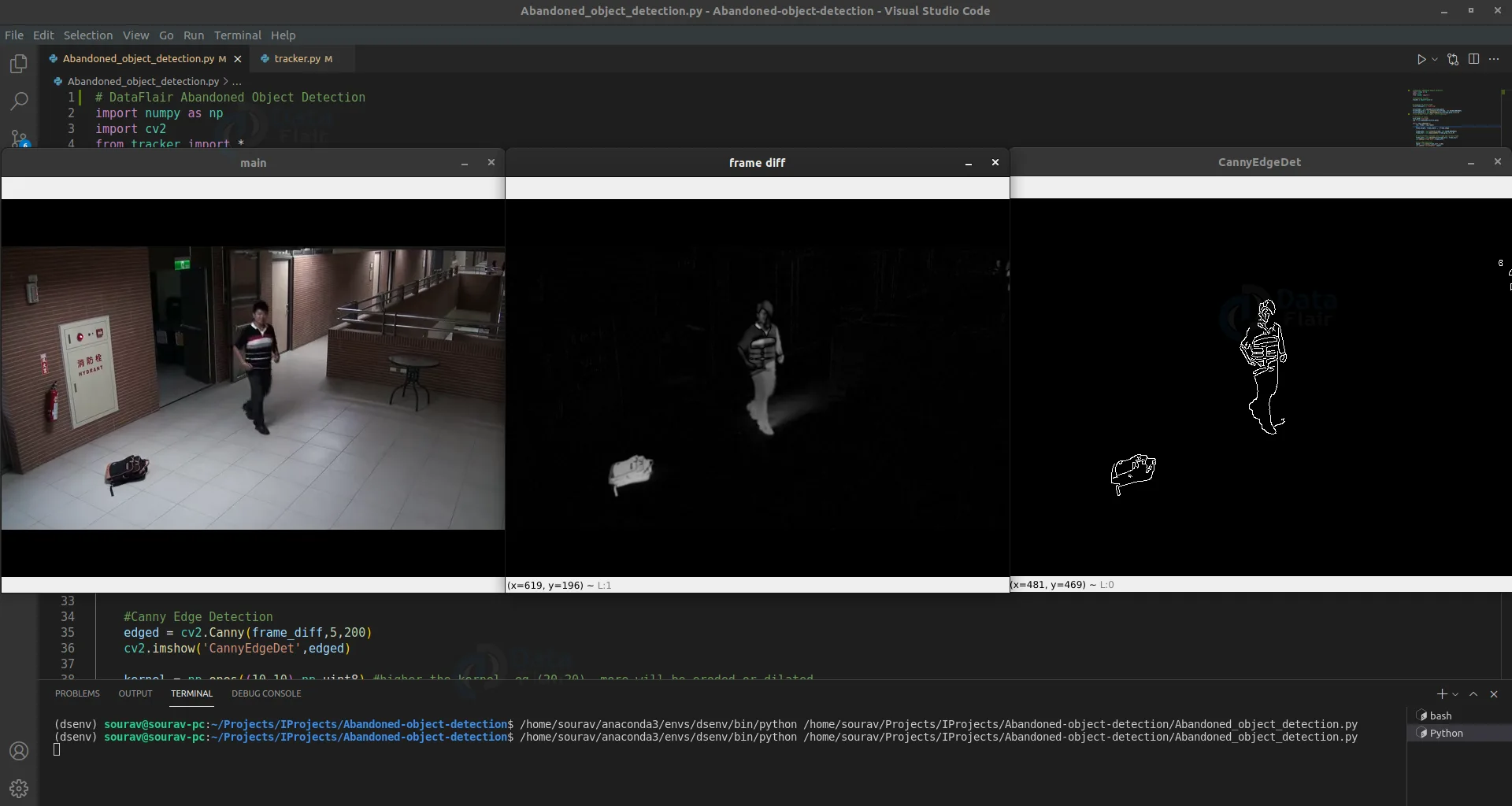

Step 4 – Find objects in the current frame:

    # find difference between first frame and current frame
    # (this uses the original color frames, not the blurred grayscale versions)
    frame_diff = cv2.absdiff(firstframe, frame)
    cv2.imshow("frame diff", frame_diff)

    # Canny Edge Detection
    edged = cv2.Canny(frame_diff, 5, 200)
    cv2.imshow('CannyEdgeDet', edged)

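- cv2.absdiff() computes the per-pixel absolute difference between the two pictures, so anything that was not in the first frame shows up as a non-zero region.

- cv2.Canny() detects edges in the difference image; 5 and 200 are the lower and upper hysteresis thresholds.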
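It may help to see why an absolute difference is used rather than a plain subtraction. Images are stored as 8-bit unsigned integers, and subtracting two such NumPy arrays directly wraps around when the result would be negative. The sketch below uses only NumPy and made-up pixel values; the widened computation gives the per-pixel absolute difference, which is what cv2.absdiff() computes for 8-bit images.

```python
import numpy as np

# Tiny 2x2 "images" with made-up pixel values
background = np.array([[10, 200], [50, 50]], dtype=np.uint8)
frame = np.array([[20, 190], [50, 255]], dtype=np.uint8)

# Plain subtraction wraps around: 190 - 200 becomes 246 instead of -10
naive = frame - background

# Absolute difference, computed in a wider type and converted back
abs_diff = np.abs(frame.astype(np.int16) - background.astype(np.int16)).astype(np.uint8)

print(naive.tolist())     # [[10, 246], [0, 205]]
print(abs_diff.tolist())  # [[10, 10], [0, 205]]
```

Unchanged pixels give 0, and pixels that became brighter or darker both give a positive value.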

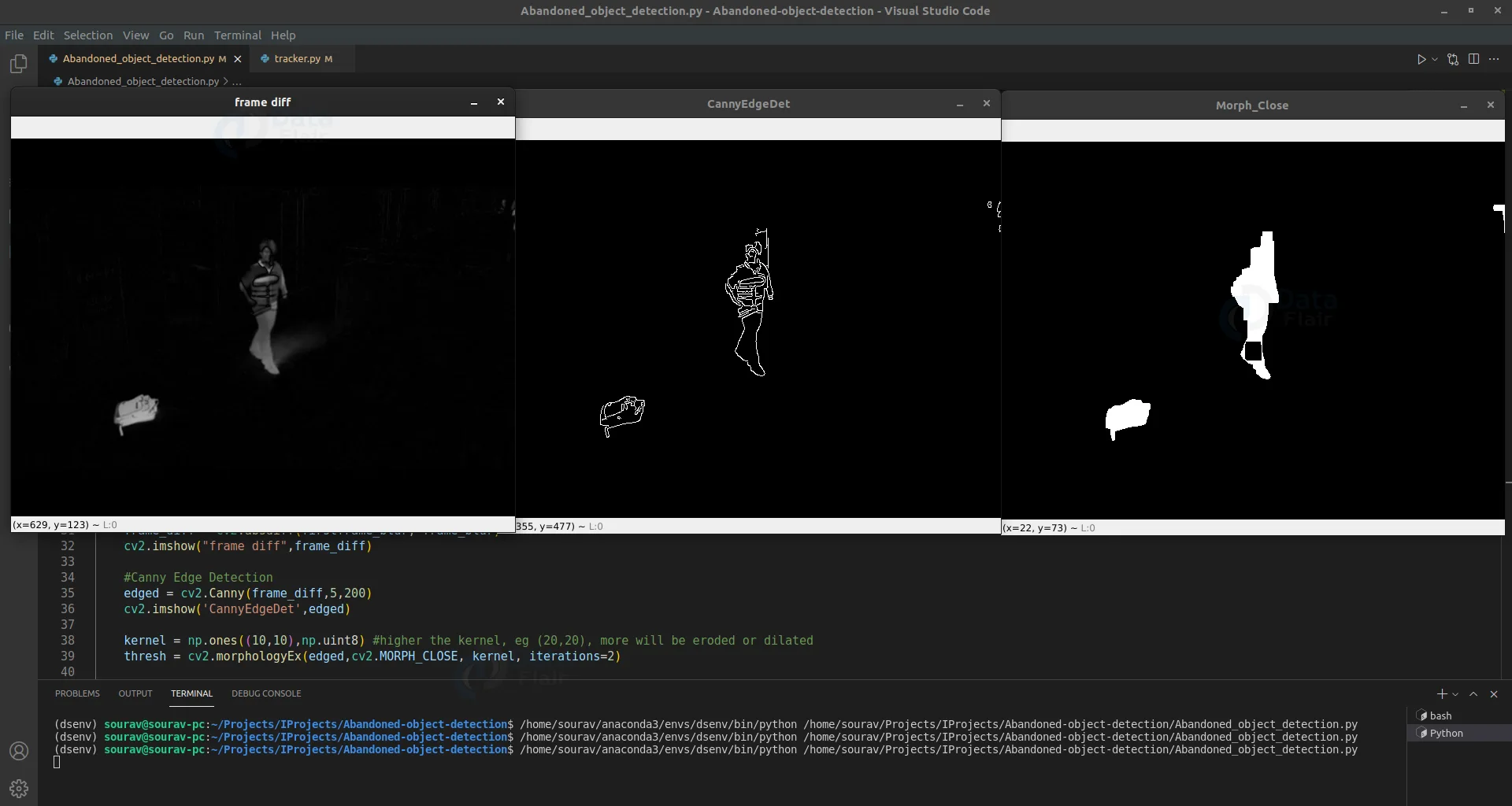

    kernel = np.ones((10, 10), np.uint8)
    thresh = cv2.morphologyEx(edged, cv2.MORPH_CLOSE, kernel, iterations=2)
    cv2.imshow('Morph_Close', thresh)

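- cv2.morphologyEx() applies a morphological operation. Here we use cv2.MORPH_CLOSE (closing), which is a dilation followed by an erosion; it fills small gaps and holes between the detected edges so that the edges of one object merge into a single connected region.

- The larger the kernel, the stronger the dilation and erosion, and the larger the gaps that get closed.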

Step 5 – Detect abandoned objects in the frame:

    cnts, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    detections = []
    count = 0
    for c in cnts:
        contourArea = cv2.contourArea(c)

        if contourArea > 50 and contourArea < 10000:
            count += 1
            (x, y, w, h) = cv2.boundingRect(c)
            detections.append([x, y, w, h])

    _, abandoned_objects = tracker.update(detections)

- cv2.findContours() finds the contours of all detected objects. A contour is a curve joining all the continuous points along a boundary that have the same color or intensity.

- To remove noise, we calculate the area of each contour using cv2.contourArea() and keep only contours whose area is greater than 50 and less than 10000 pixels.

- Using cv2.boundingRect(), we get the bounding box of each remaining contour and add it to a list called detections.

- After getting all bounding boxes we pass the list to our tracker object. The tracker then returns a list of abandoned objects’ location coordinates.

Now finally we have all the detected abandoned objects.

    for objects in abandoned_objects:
        _, x2, y2, w2, h2, _ = objects

        cv2.putText(frame, "Suspicious object detected", (x2, y2 - 10),
                    cv2.FONT_HERSHEY_PLAIN, 1.2, (0, 0, 255), 2)
        cv2.rectangle(frame, (x2, y2), (x2 + w2, y2 + h2), (0, 0, 255), 2)

    cv2.imshow('main', frame)
    if cv2.waitKey(15) == ord('q'):
        break

# Release the video file and close all windows
cap.release()
cv2.destroyAllWindows()

- Finally, we draw a red rectangle around each detected abandoned object using the cv2.rectangle() function and write a warning label above it using the cv2.putText() function. Pressing q stops the program.

Abandoned Object Detection Output

Summary

In this project, we’ve built a surveillance system called abandoned object detection. Through this project, we’ve learned to track an object, find the stationary objects in a frame, and also some basic image processing techniques.
