Top Advantages and Disadvantages of Hadoop 3

This tutorial discusses the advantages and disadvantages of Hadoop 3.0. The many changes introduced in Hadoop 3.0 have made it a better product.

Hadoop is designed to store and manage large amounts of data. It has many advantages: it is free and open source, easy to use, and performs well. On the other hand, it also has some weaknesses, which we discuss here as its disadvantages.

So, let’s start exploring the top advantages and disadvantages of Hadoop.

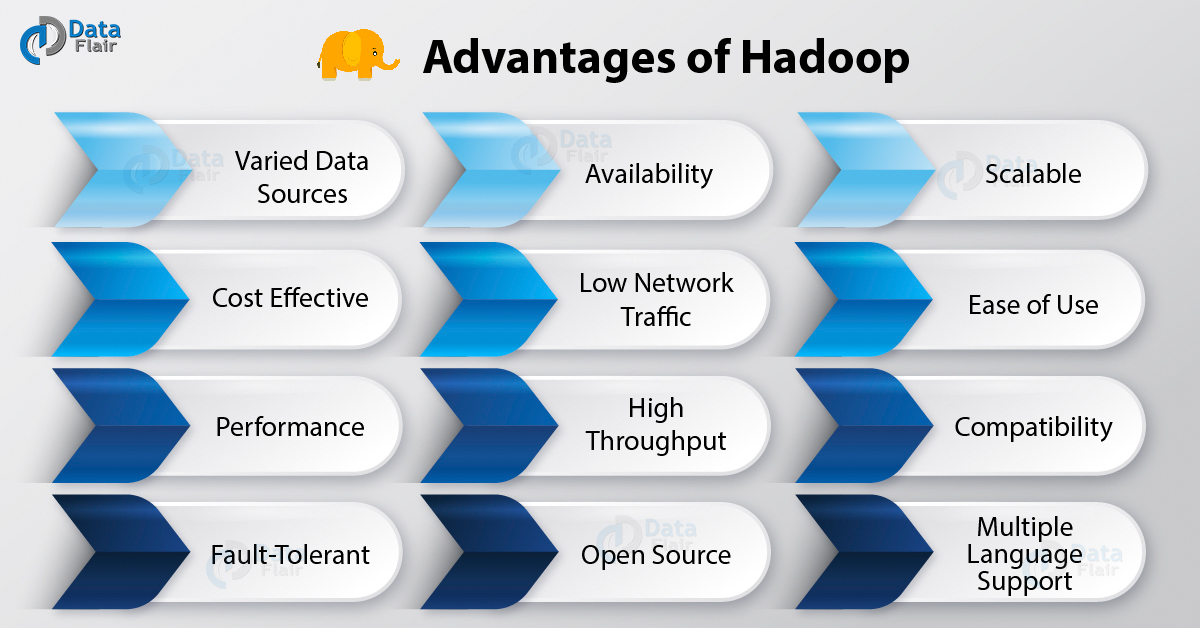

Advantages of Hadoop

Hadoop is easy to use, scalable, and cost-effective, and it has many other advantages as well. Here we discuss the top 12 advantages of Hadoop that make it so popular:

1. Varied Data Sources

Hadoop accepts a variety of data. Data can come from a range of sources, such as email conversations and social media, and can be structured or unstructured. Hadoop can derive value from this diverse data and accepts it in many formats, including text files, XML files, images, and CSV files.

2. Cost-effective

Hadoop is an economical solution because it uses a cluster of commodity hardware to store data. Commodity hardware means cheap machines, so the cost of adding nodes to the framework is not high. With erasure coding, Hadoop 3.0 has a storage overhead of only 50%, as opposed to the 200% overhead of the default 3x replication in Hadoop 2.x. Fewer machines are needed to store the same data because the amount of redundant data decreases significantly.
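The 50% and 200% figures follow from simple arithmetic. The sketch below assumes 3x replication (one original plus two extra copies) and an erasure coding layout of 6 data blocks plus 3 parity blocks; the function names are illustrative only and are not part of any Hadoop API.

```python
def storage_overhead(data_blocks: int, redundant_blocks: int) -> float:
    """Extra storage needed, as a fraction of the original data size."""
    return redundant_blocks / data_blocks


def raw_storage_tb(logical_tb: float, overhead: float) -> float:
    """Raw disk capacity needed to hold `logical_tb` of user data."""
    return logical_tb * (1 + overhead)


# 3x replication: every block is stored once plus 2 extra copies.
replication = storage_overhead(data_blocks=1, redundant_blocks=2)
# Erasure coding with 6 data blocks and 3 parity blocks.
erasure_coding = storage_overhead(data_blocks=6, redundant_blocks=3)

print(f"replication overhead:    {replication:.0%}")     # 200%
print(f"erasure coding overhead: {erasure_coding:.0%}")  # 50%
print(raw_storage_tb(100, replication))     # 300.0
print(raw_storage_tb(100, erasure_coding))  # 150.0
```

Under these assumptions, 100 TB of user data needs about 300 TB of raw disk with replication but only about 150 TB with erasure coding.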

3. Performance

With its distributed processing and distributed storage architecture, Hadoop processes huge amounts of data at high speed. In 2008, a Hadoop cluster even won the terabyte sort benchmark. Hadoop divides the input data file into a number of blocks and stores these blocks across several nodes. It also divides the task that the user submits into various sub-tasks, which are assigned to the worker nodes that hold the required data. These sub-tasks run in parallel, thereby improving performance.
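The split-then-process-in-parallel idea can be sketched on a single machine. This is only a structural illustration, assuming a word count job; it uses a thread pool in place of worker nodes, and all names are hypothetical rather than Hadoop APIs.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(lines, block_size):
    """Split the input into fixed-size blocks, like HDFS splits a file."""
    return [lines[i:i + block_size] for i in range(0, len(lines), block_size)]


def count_words(block):
    """Sub-task: count the words in one block only."""
    counts = Counter()
    for line in block:
        counts.update(line.split())
    return counts


def parallel_word_count(lines, block_size=2, workers=4):
    """Run one sub-task per block, then merge the partial results."""
    blocks = split_into_blocks(lines, block_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(count_words, blocks))
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


result = parallel_word_count(["a b", "b c", "c c", "a"])
print(dict(result))
```

Each sub-task only sees its own block, so the sub-tasks do not depend on each other and can run at the same time. In a real cluster they would run on different machines.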

4. Fault-Tolerant

In Hadoop 3.0, fault tolerance can be provided by erasure coding in addition to replication. For example, the erasure coding technique produces 3 parity blocks from 6 data blocks, so HDFS stores a total of 9 blocks. If a node fails, the affected data block can be recovered from the parity blocks and the remaining data blocks.
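To see how a lost block can be rebuilt from parity, here is a deliberately simplified sketch. It uses a single XOR parity block, which can recover only one missing block; this is an assumption made for brevity and is not the scheme that produces 3 parity blocks from 6 data blocks. The function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equally sized byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def recover_missing(surviving_blocks, parity):
    """Rebuild the one missing data block from the survivors and the parity."""
    return xor_blocks(surviving_blocks + [parity])


data_blocks = [b"abcd", b"efgh", b"ijkl"]
parity = xor_blocks(data_blocks)

# Simulate losing the second block when its node fails.
recovered = recover_missing([data_blocks[0], data_blocks[2]], parity)
print(recovered == data_blocks[1])
```

The principle is the same as in the example above: the parity is computed from the data blocks, so any lost block can be recomputed from what remains, without keeping a full copy of every block.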

5. Highly Available

In Hadoop 2.x, the HDFS architecture has a single active NameNode and a single standby NameNode, so if the active NameNode goes down we have the standby NameNode to count on. Hadoop 3.0 supports multiple standby NameNodes, which makes the system even more highly available because it can continue functioning even if two or more NameNodes crash.

6. Low Network Traffic

In Hadoop, each job submitted by the user is split into a number of independent sub-tasks, and these sub-tasks are assigned to the data nodes. This moves a small amount of code to the data rather than moving huge amounts of data to the code, which leads to low network traffic.
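The "move code to data" idea can be sketched as a toy scheduler. This is a minimal model under stated assumptions, not Hadoop's actual scheduler: `assign_tasks`, the block names, and the node names are all hypothetical.

```python
def assign_tasks(block_locations, available_nodes):
    """Assign each block's sub-task to a node that already stores the block.

    block_locations maps a block id to the set of nodes holding a copy.
    Falls back to a remote node only when no holder is available.
    """
    assignments = {}
    for block, holders in block_locations.items():
        local_nodes = [node for node in available_nodes if node in holders]
        if local_nodes:
            # The code travels to the data: no block transfer is needed.
            assignments[block] = (local_nodes[0], "local")
        else:
            # The data must travel over the network to the chosen node.
            assignments[block] = (available_nodes[0], "remote")
    return assignments


locations = {"block-1": {"node-1", "node-2"}, "block-2": {"node-3"}}
plan = assign_tasks(locations, ["node-2", "node-4"])
print(plan)
```

Every "local" assignment avoids sending a whole block across the network; only the small task code is shipped to the node.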

7. High Throughput

Throughput means the amount of work done per unit of time. Hadoop stores data in a distributed fashion, which makes distributed processing easy. A given job is divided into small jobs that work on chunks of data in parallel, thereby giving high throughput.

8. Open Source

Hadoop is an open-source technology, i.e. its source code is freely available. We can modify the source code to suit a specific requirement.

9. Scalable

Hadoop works on the principle of horizontal scalability, i.e. we add entire machines to the cluster of nodes. This is in contrast to vertical scalability, where we change the configuration of a single machine by adding RAM, disk, and so on. Nodes can be added to a Hadoop cluster on the fly, making it a scalable framework.

10. Ease of use

The Hadoop framework takes care of parallel processing. MapReduce programmers do not need to worry about achieving distributed processing, because it is done automatically at the backend.

11. Compatibility

Most of the emerging Big Data technologies, such as Spark and Flink, are compatible with Hadoop. They have processing engines that work over Hadoop as a backend, i.e. we use Hadoop as the data storage platform for them.

12. Multiple Languages Supported

Developers can write code for Hadoop in many languages, such as C, C++, Perl, Python, Ruby, and Groovy.
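One common route for non-Java languages is Hadoop Streaming, which passes records to a script as lines on standard input and reads tab-separated key/value lines back from standard output. The sketch below shows what a word count mapper and reducer might look like in Python. The functions take and return iterables of lines so they can be tried without a cluster; wiring them to standard input and output is left out here.

```python
from itertools import groupby


def mapper(lines):
    """Emit one 'word<TAB>1' line for every word in the input."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def reducer(sorted_lines):
    """Sum the counts per word; input must be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda pair: pair[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


# Locally, sorted() stands in for the shuffle-and-sort step between phases.
mapped = sorted(mapper(["b a", "a"]))
reduced = list(reducer(mapped))
print(reduced)
```

In a real streaming job, the mapper and the reducer would be two separate scripts that read from standard input and print to standard output, and the framework would take care of sorting the mapper output by key.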

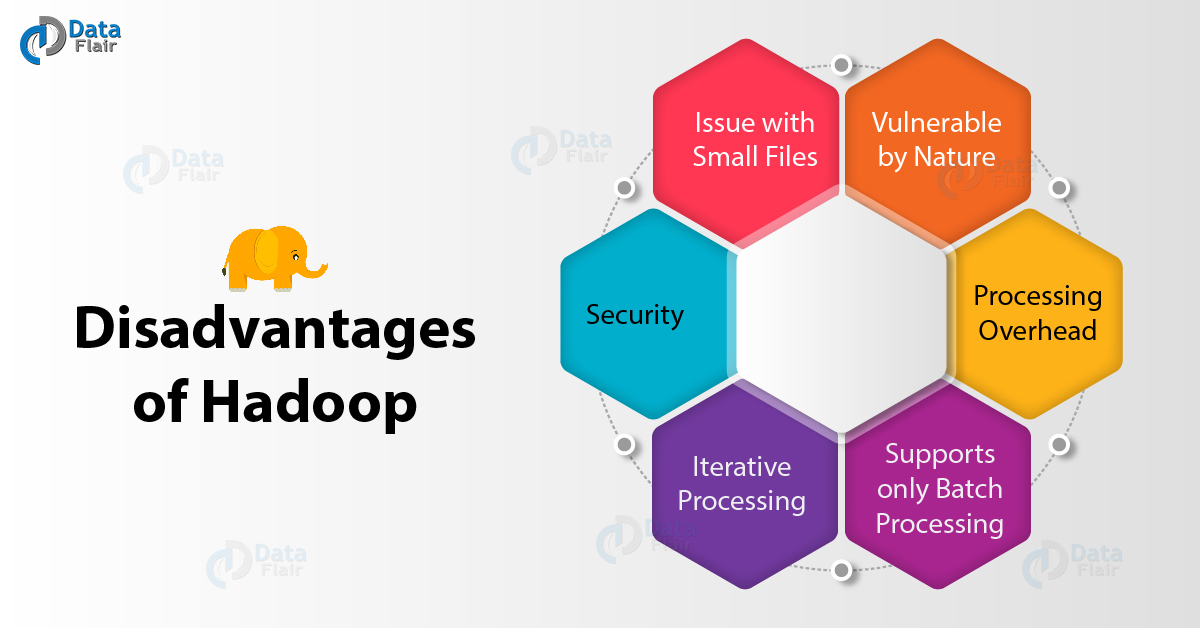

Disadvantages of Hadoop

1. Issue With Small Files

Hadoop is suitable for a small number of large files, but it struggles with applications that deal with a large number of small files. A small file is a file that is significantly smaller than Hadoop's block size, which is 128 MB by default and is often configured to 256 MB. A large number of small files overloads the NameNode, because it stores the namespace for the whole system, and makes it difficult for Hadoop to function.
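A rough estimate shows why small files hurt. The sketch below assumes that the NameNode keeps one in-memory object per file and one per block, and that each object costs about 150 bytes. That figure is a commonly quoted rule of thumb, not a number from this article, and the function names are hypothetical.

```python
BYTES_PER_OBJECT = 150          # assumed rule of thumb, not an exact figure
BLOCK_SIZE = 128 * 1024 ** 2    # 128 MB block size


def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up."""
    return -(-a // b)


def namenode_objects(total_bytes: int, file_size: int) -> int:
    """Namespace objects needed to store total_bytes as files of file_size."""
    files = ceil_div(total_bytes, file_size)
    blocks_per_file = max(1, ceil_div(file_size, BLOCK_SIZE))
    return files * (1 + blocks_per_file)   # one file object plus its blocks


def namenode_memory_mb(total_bytes: int, file_size: int) -> float:
    """Estimated NameNode heap used for the namespace, in MB."""
    return namenode_objects(total_bytes, file_size) * BYTES_PER_OBJECT / 1024 ** 2


one_tb = 1024 ** 4
small = namenode_objects(one_tb, 1024 ** 2)   # 1 TB stored as 1 MB files
large = namenode_objects(one_tb, 1024 ** 3)   # 1 TB stored as 1 GB files
print(small, large)
print(namenode_memory_mb(one_tb, 1024 ** 2), namenode_memory_mb(one_tb, 1024 ** 3))
```

Under these assumptions, the same 1 TB of data needs over two million namespace objects when stored as 1 MB files but fewer than ten thousand when stored as 1 GB files.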

2. Vulnerable By Nature

Hadoop is written in Java, a widely used programming language that is a frequent target for cyber criminals, which can leave Hadoop vulnerable to security breaches.

3. Processing Overhead

In Hadoop, data is read from disk and written back to disk, which makes read/write operations very expensive when we are dealing with terabytes and petabytes of data. Hadoop MapReduce cannot do in-memory calculations, so it incurs processing overhead.

4. Supports Only Batch Processing

At its core, Hadoop has a batch processing engine, which is not efficient for stream processing. It cannot produce output in real time with low latency. It only works on data that has been collected and stored in a file before processing begins.

5. Iterative Processing

Hadoop cannot do iterative processing by itself. Machine learning and other iterative workloads have a cyclic data flow, whereas in Hadoop data flows through a chain of stages in which the output of one stage becomes the input of the next.

6. Security

For security, Hadoop uses Kerberos authentication, which is hard to manage. Encryption at the storage and network levels is not enabled by default and has to be configured separately, which is a major point of concern.

So, this was all about Hadoop Pros and Cons. Hope you liked our explanation.

Summary – Advantages and Disadvantages of Hadoop

Every piece of software used by the industry comes with its own set of drawbacks and benefits. If the software is essential for the organization, one can exploit the benefits and take measures to minimize the faults. We can see that Hadoop has benefits that outweigh its shortcomings, making it a strong solution for Big Data needs. Still, if you have any questions regarding Hadoop's advantages and disadvantages, ask through the comments.

