Hadoop getmerge Command – Learn to Execute it with Example

In this blog, we are going to discuss the Hadoop file system shell command getmerge. It merges any number of files stored in HDFS (the Hadoop Distributed File System) into a single file on the local file system. So, let's start with the Hadoop getmerge command.

Hadoop getmerge Command

Usage:

```bash
hdfs dfs -getmerge [-nl] [-skip-empty-file] <src> <localdest>
```

The command takes a source directory (or one or more source files) in HDFS and a destination file on the local file system as input. It concatenates the files in src and writes the result to the local destination file. Optionally, the -nl option adds a newline character at the end of each file. The -skip-empty-file option avoids writing unnecessary newline characters for empty files.
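To make the effect of -nl and -skip-empty-file concrete, here is a minimal bash sketch that emulates the described concatenation on the local file system only. The function name local_getmerge is hypothetical and is not part of Hadoop; it never talks to HDFS. It assumes a directory source is read as the regular files directly inside it, in the shell's glob order, with subdirectories skipped.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): emulates on the LOCAL file system
# the concatenation that `hdfs dfs -getmerge` is described as performing.
# Usage: local_getmerge [-nl] [-skip-empty-file] <src>... <localdest>
local_getmerge() {
  local nl=0 skip_empty=0
  # Parse the two optional flags, in any order.
  while [ $# -gt 0 ]; do
    case "$1" in
      -nl) nl=1; shift ;;
      -skip-empty-file) skip_empty=1; shift ;;
      *) break ;;
    esac
  done
  if [ $# -lt 2 ]; then
    echo "usage: local_getmerge [-nl] [-skip-empty-file] <src>... <localdest>" >&2
    return 1
  fi

  local dest="${!#}"             # last argument is the destination file
  local -a srcs=("${@:1:$#-1}")  # everything before it is a source
  local -a files=()
  local src f

  # Expand each source: a directory contributes the regular files directly
  # inside it (assumption: glob order, subdirectories skipped).
  for src in "${srcs[@]}"; do
    if [ -d "$src" ]; then
      for f in "$src"/*; do
        if [ -f "$f" ]; then files+=("$f"); fi
      done
    elif [ -f "$src" ]; then
      files+=("$src")
    else
      echo "no such file: $src" >&2
      return 1
    fi
  done

  : > "$dest"                    # create or truncate the destination
  for f in "${files[@]}"; do
    if [ -s "$f" ]; then
      cat "$f" >> "$dest"        # non-empty file: copy its bytes as-is
      if [ "$nl" -eq 1 ]; then printf '\n' >> "$dest"; fi
    elif [ "$nl" -eq 1 ] && [ "$skip_empty" -eq 0 ]; then
      printf '\n' >> "$dest"     # with plain -nl, an empty file still adds a newline
    fi
  done
}
```

For a directory holding a file with "a", an empty file and a file with "b", plain -nl produces "a", a blank line, then "b", while -nl -skip-empty-file produces just "a" and "b" on consecutive lines.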

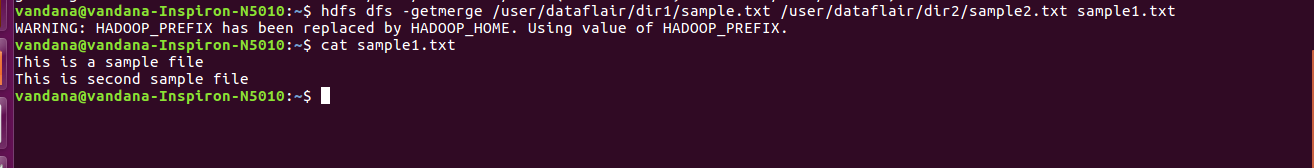

Example of getmerge command

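```bash
hdfs dfs -getmerge /user/dataflair/dir1/sample.txt /user/dataflair/dir2/sample2.txt /home/sample1.txt
```

This concatenates the two HDFS files /user/dataflair/dir1/sample.txt and /user/dataflair/dir2/sample2.txt and writes the result to the local file /home/sample1.txt.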


Why Do We Use Hadoop getmerge Command?

The getmerge command in Hadoop is for merging files existing in the HDFS file system into a single file in the local file system.

The command is useful for downloading the output of a MapReduce job, which is usually spread across multiple part-* files, as a single local file. We can later use this local file for other tasks, such as putting it into an Excel file for a presentation.
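A minimal sketch of that workflow as a bash function, assuming the job wrote plain-text part-* files into a single HDFS output directory. The function name merge_job_output and the example paths are hypothetical; only `hdfs dfs -test -d`, `hdfs dfs -getmerge` and standard shell tools are used. It leaves out -nl on the assumption that every record in the part files already ends with a newline, so an extra newline per file would only add blank lines.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: merge all files of one MapReduce output directory
# in HDFS into a single local file, then print its line count.
# Usage: merge_job_output <hdfs_output_dir> <local_file>
merge_job_output() {
  local hdfs_dir="$1" local_file="$2"
  if [ -z "$hdfs_dir" ] || [ -z "$local_file" ]; then
    echo "usage: merge_job_output <hdfs_output_dir> <local_file>" >&2
    return 1
  fi
  # Fail early if the HDFS path is not an existing directory.
  if ! hdfs dfs -test -d "$hdfs_dir"; then
    echo "not an HDFS directory: $hdfs_dir" >&2
    return 1
  fi
  # Concatenate the files in the directory into one local file.
  # No -nl: the part files are assumed to end with a newline already.
  hdfs dfs -getmerge "$hdfs_dir" "$local_file" || return 1
  # Quick sanity check of the merged result.
  wc -l < "$local_file"
}

# Example (hypothetical paths):
# merge_job_output /user/dataflair/wordcount-output /home/dataflair/wordcount.txt
```

If the part files do not end with a newline and you want one between them, adding -nl together with -skip-empty-file to the getmerge call avoids blank lines for any empty files in the directory.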

Conclusion

We conclude that getmerge is a very useful HDFS file system shell command. In practice, we can use it to merge the output of a MapReduce program into a single local file.

Still, if you have any confusion regarding Hadoop getmerge command, ask in the comment section.


When using the getmerge command, must the destination file always be a new one, or does it work with existing files too? If it doesn't work with existing files, how can we handle that in Hadoop? I have three files, and one of them contains only headers. I thought of first putting the header data in the target file and then merging the remaining files. If I cannot follow this approach, the header will get merged somewhere in between. Can you please help me with that? Thanks in advance.

What is the difference between the getmerge and appendToFile commands?