3 Easy Steps to Execute Hadoop copyFromLocal Command

Hadoop file system shell commands have a similar structure to Unix commands, so people who work with the Unix shell find them easy to adopt. These commands interact with HDFS and with the other file systems that Hadoop supports, such as the local file system and S3. In this article, we explore the Hadoop copyFromLocal command and its use.

Hadoop copyFromLocal

We use this command to copy a file from the local file system to the Hadoop Distributed File System (HDFS). Its one restriction is that the source file must reside on the local file system.

copyFromLocal has an optional -f parameter that overwrites a destination file that already exists. This is useful when we need to copy the same file again or upload an updated version of it. By default, the command fails with an error if a file with the same name already exists at the destination. To update such a file, we can either delete it and copy it again, or use -f.
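The two update approaches described above can be sketched as a pair of shell functions. This is a minimal sketch. It assumes the hdfs client is on the PATH and that the destination is an HDFS directory. The function names replace_by_delete and replace_with_force are hypothetical helpers and are not part of Hadoop.

```shell
#!/usr/bin/env bash
# Sketch only: two ways to update a file that already exists in HDFS.
# Assumes the hdfs client is on the PATH; function names are hypothetical.
set -eu

# Approach 1: delete the old copy, then copy the local file again.
# "-rm -f" does not report an error if the target does not exist yet.
replace_by_delete() {
  local src="$1" dest_dir="$2"
  hdfs dfs -rm -f "${dest_dir}/$(basename "$src")"
  hdfs dfs -copyFromLocal "$src" "$dest_dir"
}

# Approach 2: overwrite the existing file in a single command with -f.
replace_with_force() {
  local src="$1" dest_dir="$2"
  hdfs dfs -copyFromLocal -f "$src" "$dest_dir"
}
```

For example, replace_with_force testfile.txt /user/copy_from_local_example runs the single -f command. The -f approach needs one command instead of two, and it avoids the moment in the delete approach when the file is missing from HDFS. If trash is enabled on the cluster, -rm moves the old file to the trash directory instead of deleting it permanently.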

Shell Command

We invoke the Hadoop file system shell with the following command:

hadoop fs <args>

The command writes its output to stdout and its errors to stderr. When we run it from a terminal, both appear on the console screen.
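In a script, the two streams can be handled separately. The sketch below appends stderr to a log file while stdout still reaches the terminal, and it uses the exit status to detect a failure. It assumes a POSIX shell and an hdfs client on the PATH. The function name run_fs and any log path you pass to it are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: keep "hdfs dfs" errors (stderr) in a log file while normal
# output (stdout) still goes to the terminal. Assumes hdfs is on the PATH.
set -eu

# run_fs LOG ARGS... runs "hdfs dfs ARGS...", appends its stderr to LOG,
# and returns 1 if the command exited with a non-zero status.
run_fs() {
  local log="$1"
  shift
  if ! hdfs dfs "$@" 2>>"$log"; then
    echo "hdfs dfs $* failed; see $log" >&2
    return 1
  fi
}
```

For example, run_fs /tmp/hdfs-errors.log -copyFromLocal testfile.txt /user/copy_from_local_example prints nothing extra on success. On failure, it leaves the command's error message in /tmp/hdfs-errors.log.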

When HDFS is the file system in use, the following form is a synonym for hadoop fs:

hdfs dfs -<command> <args>

The usage of the copyFromLocal command is:

hdfs dfs -copyFromLocal <local-source> URI

Add the -f option to overwrite the destination file if it already exists:

hdfs dfs -copyFromLocal -f <local-source> URI

Steps to Execute copyFromLocal Command

Perform the following steps to run the copyFromLocal command:

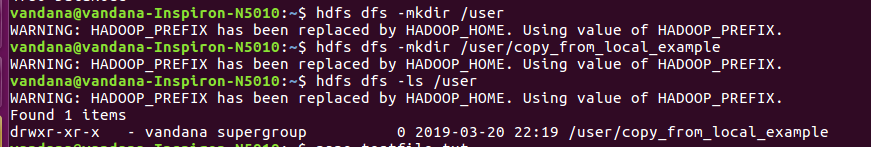

1. Making a directory in HDFS

hdfs dfs -mkdir /user/copy_from_local_example

This command creates the directory /user/copy_from_local_example in HDFS.

hdfs dfs -ls /user

This command lists the contents of /user, so we can check that the new directory was created.

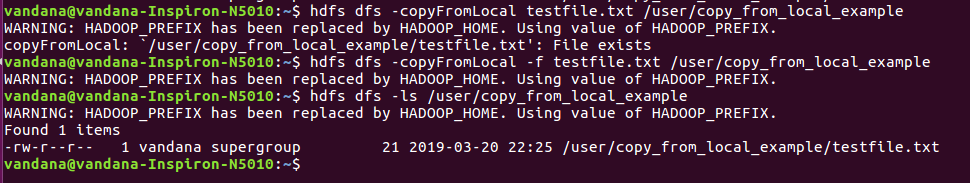
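Instead of reading the ls listing by eye, a script can test for the directory directly. This is a minimal sketch. It assumes a POSIX shell and an hdfs client on the PATH, and ensure_hdfs_dir is a hypothetical helper name. It relies on hdfs dfs -test -d, which exits with status 0 when the path is a directory. It also relies on mkdir -p, which creates any missing parent directories.

```shell
#!/usr/bin/env bash
# Sketch only: create an HDFS directory if it is missing, and report
# whether it already existed. Assumes hdfs is on the PATH.
set -eu

ensure_hdfs_dir() {
  local dir="$1"
  # "-test -d" exits with status 0 when the path exists and is a directory.
  if hdfs dfs -test -d "$dir"; then
    echo "exists: $dir"
  else
    # "-mkdir -p" also creates any missing parent directories.
    hdfs dfs -mkdir -p "$dir"
    echo "created: $dir"
  fi
}
```

Running ensure_hdfs_dir /user/copy_from_local_example twice prints a "created:" line the first time and an "exists:" line the second time.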

2. Copying the local file into the directory in HDFS

hdfs dfs -copyFromLocal testfile.txt /user/copy_from_local_example

This command copies testfile.txt from the current directory of the local file system to the HDFS directory /user/copy_from_local_example.

hdfs dfs -ls /user/copy_from_local_example

This command lets us confirm that testfile.txt now exists in the HDFS directory /user/copy_from_local_example.
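Beyond ls, a quick sanity check is to compare the size of the local file with the size of the copy in HDFS. The sketch below assumes a POSIX shell, an hdfs client on the PATH, and a destination argument that is an HDFS directory, as in this step. verify_copy is a hypothetical helper. It uses hdfs dfs -stat with the %b format, which prints the file size in bytes. Matching sizes do not prove that the contents are identical, so treat this as a quick check only.

```shell
#!/usr/bin/env bash
# Sketch only: compare the local file size with the size of its copy in an
# HDFS directory. Assumes hdfs is on the PATH and dest_dir is a directory.
set -eu

verify_copy() {
  local src="$1" dest_dir="$2"
  local dest="${dest_dir}/$(basename "$src")"
  local src_size dest_size
  # Arithmetic expansion strips the padding some wc versions print.
  src_size=$(( $(wc -c < "$src") ))
  # "%b" makes -stat print the file size in bytes.
  dest_size=$(hdfs dfs -stat "%b" "$dest")
  if [ "$src_size" -eq "$dest_size" ]; then
    echo "verified: $dest ($dest_size bytes)"
  else
    echo "size mismatch: local=$src_size hdfs=$dest_size" >&2
    return 1
  fi
}
```

For this step, the call would be verify_copy testfile.txt /user/copy_from_local_example, run from the directory that contains testfile.txt.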

3. Overwriting the existing file in HDFS

By default, copyFromLocal does not overwrite existing files. If we copy a file into a directory that already contains a file with the same name, the command fails with an error, as the screenshot below shows.

To overwrite the file, use the -f option of copyFromLocal:

hdfs dfs -copyFromLocal -f testfile.txt /user/copy_from_local_example

This command replaces the existing file. To check that the copy succeeded, we run the ls command again. In the screenshot below, the file's timestamp is now 22:25, compared with 22:22 when the file was first copied. This confirms that the file was overwritten.
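The timestamp comparison from the screenshots can also be scripted. This sketch assumes a POSIX shell, an hdfs client on the PATH, and that the file already exists in HDFS, as it does in this step. overwrite_and_confirm is a hypothetical helper. It reads the modification time with hdfs dfs -stat %Y before and after running copyFromLocal -f. The %Y format prints the time in milliseconds since the Unix epoch.

```shell
#!/usr/bin/env bash
# Sketch only: overwrite a file in HDFS with -f and confirm that its
# modification time moved forward. Assumes hdfs is on the PATH and the
# file already exists in HDFS (otherwise the first -stat fails).
set -eu

overwrite_and_confirm() {
  local src="$1" dest_dir="$2"
  local dest="${dest_dir}/$(basename "$src")"
  local before after
  # "%Y" prints the modification time in milliseconds since the epoch.
  before=$(hdfs dfs -stat "%Y" "$dest")
  hdfs dfs -copyFromLocal -f "$src" "$dest_dir"
  after=$(hdfs dfs -stat "%Y" "$dest")
  if [ "$after" -gt "$before" ]; then
    echo "overwritten: $dest"
  else
    echo "modification time unchanged: $dest" >&2
    return 1
  fi
}
```

Comparing the numeric %Y values avoids parsing the date and time columns of the ls output.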

Conclusion

copyFromLocal is one of the key commands of the Hadoop FS shell. For example, we can use it to load the input files of a MapReduce job from the local file system into HDFS. In this article, we worked through an example of the copyFromLocal command and saw how to overwrite a file that already exists. If you have any doubts, feel free to ask in the comments.
