Why Hadoop is Important – 11 Major Reasons To Learn Hadoop

In today’s fast-paced world, we keep hearing the term Big Data. Companies now collect huge amounts of data posted online. This unstructured data, from sources such as Facebook, Instagram, and email, makes up much of Big Data. Storing and analyzing it demands a cost-effective, innovative solution, and Hadoop meets these Big Data requirements. So, let’s explore why Hadoop is so important.

Why Hadoop is Important

Below is a list of 11 reasons that answer the question: Why Hadoop?

1. Managing Big Data

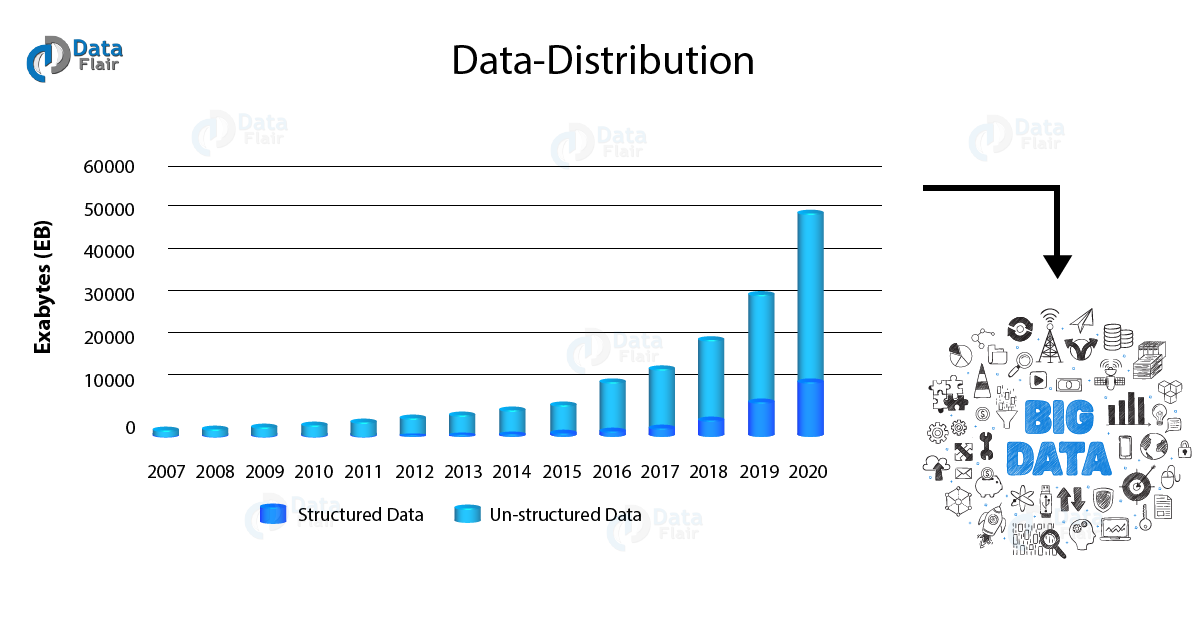

We live in a digital era, and it has brought a data explosion. Data is being generated at very high speed and in very high volume, so there is a growing need to manage this Big Data.

As the chart above shows, the volume of unstructured data is increasing exponentially. To manage this ever-increasing volume of data, we need Big Data technologies like Hadoop. According to Google, mankind generated 5 exabytes of data from the dawn of civilization until 2003; now we produce 5 exabytes every two days. There is a clear need for a solution that can handle this much data, and this is where Hadoop comes to the rescue.

With its robust architecture and cost-effectiveness, Hadoop is well suited to storing huge amounts of data. Though Hadoop might seem difficult to learn, the DataFlair Big Data Hadoop Course makes it easy to learn and to start a career in this fast-growing field. Professionals who want to start a career in big data should learn Hadoop, as it is the foundation of most big data jobs.

2. Exponential Growth of Big Data Market

“Hadoop Market is expected to reach $99.31B by 2022 at a CAGR of 42.1%” – Forbes

Companies are gradually realizing the advantages big data can bring to their business. In India, the big data analytics sector is expected to grow eightfold: according to NASSCOM, it will reach USD 16 billion by 2025, up from USD 2 billion. As India progresses, smart devices are spreading through both cities and villages, which will further scale up the big data market.

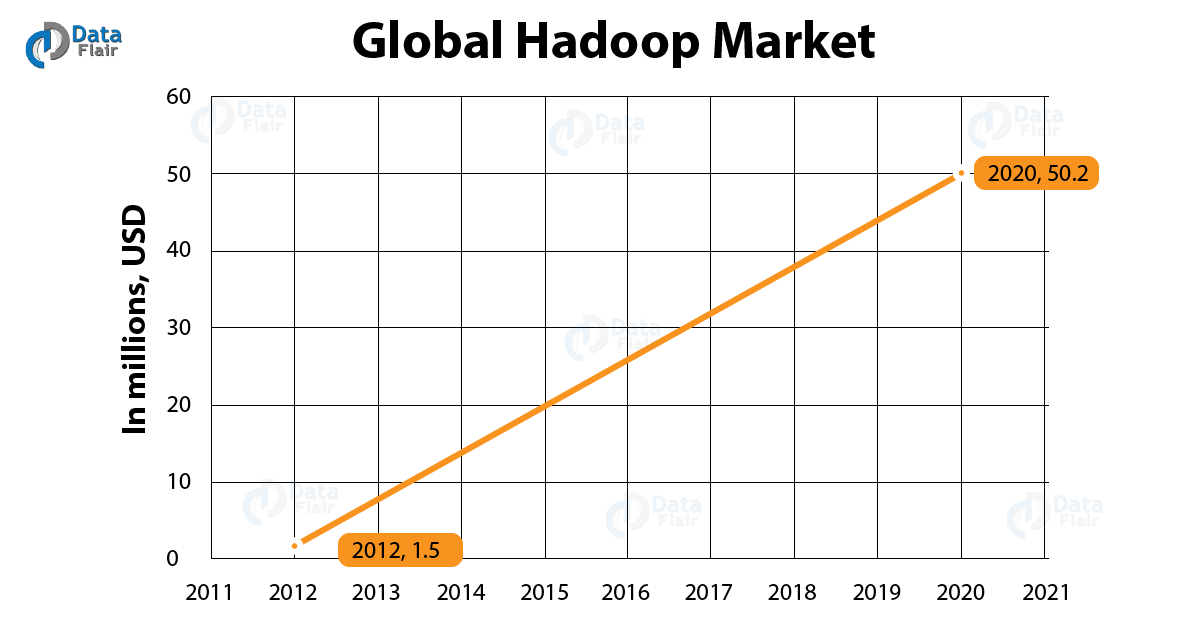

As the image above shows, the Hadoop market is growing.

One prediction states that the Hadoop market will grow at a CAGR of 58.02% over the period 2013 to 2020, reaching $50.2 billion by 2020 from $1.5 billion in 2012.

As the market for Big Data grows, so will the need for Big Data technologies. Hadoop forms the base of many of them: newer technologies such as Apache Spark and Flink work well on top of Hadoop. Because Hadoop is an in-demand technology and the requirement for Hadoop professionals keeps increasing, it is a must-learn technology.

3. Lack of Hadoop Professionals

As we have seen, the Hadoop market keeps growing and creates more job opportunities every day. Many of these Hadoop jobs remain vacant because candidates lack the required skills. So this is the right time to show your talent in big data by mastering the technology before it’s too late. Become a Hadoop expert and give your career a boost. This is where DataFlair plays an important role in making you a Hadoop expert.

4. Hadoop for All

Professionals from various streams can learn Hadoop, master it, and land well-paid jobs. IT professionals can learn MapReduce programming in Java or Python, while those who know scripting can work with Pig, a Hadoop ecosystem component. Hive or Drill is easy for those who know SQL.

You can easily learn it if you are:

- IT professional

- Testing professional

- Mainframe or support engineer

- DB or DBA professional

- Graduate willing to start a career in big data

- Data warehousing professional

- Project manager or lead

5. Robust Hadoop Ecosystem

Hadoop has a very robust and rich ecosystem that serves a wide variety of organizations. Organizations such as web start-ups, telecom companies, and financial institutions need Hadoop to meet their business needs.

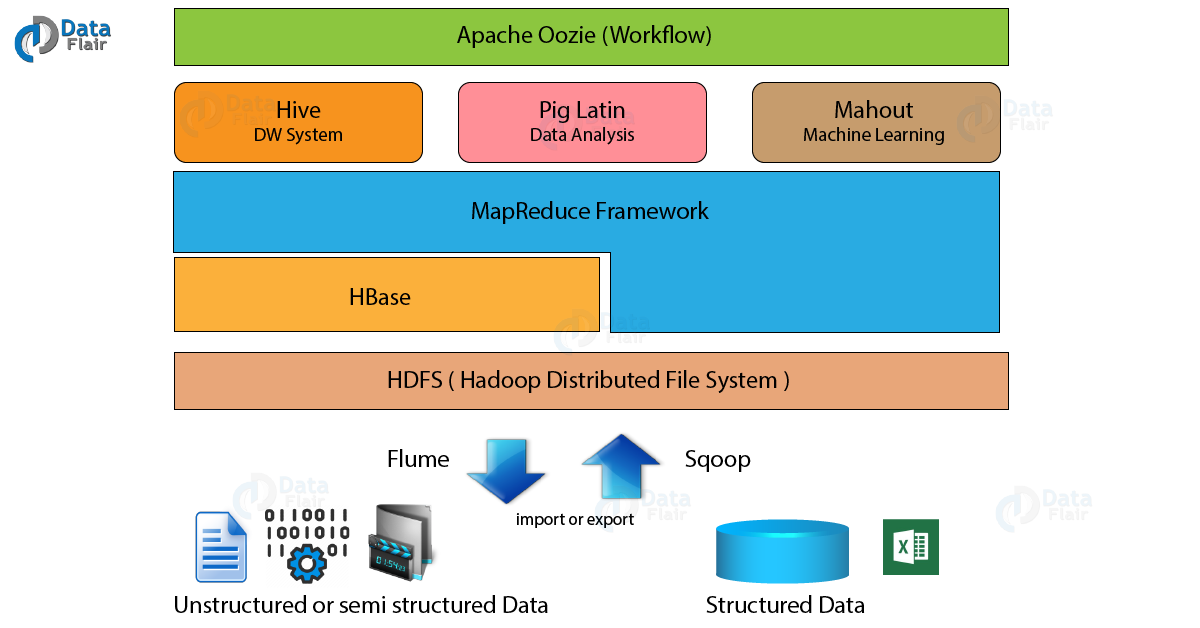

The Hadoop ecosystem contains many components, such as MapReduce, Hive, HBase, ZooKeeper, and Apache Pig, which together serve a broad spectrum of applications. We can use MapReduce to perform aggregation and summarization on Big Data. Hive is a data warehouse project built on top of HDFS; it provides data query and analysis through an SQL-like interface. HBase is a NoSQL database that provides real-time read/write access to large datasets and is natively integrated with Hadoop. Pig is a high-level scripting language used with Hadoop; it describes a data analysis problem as a data flow, and you can perform all of your data manipulation with it. ZooKeeper is an open source server that coordinates between distributed processes. Distributed applications use ZooKeeper to store and convey updates to important configuration information.
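The following is a minimal pure-Python sketch that makes "aggregation and summarization" with MapReduce more concrete. It runs the map, shuffle, and reduce phases over a few records and computes per-key statistics. It runs on a single machine and does not use Hadoop's Java API. The sales records and the function names `map_phase`, `shuffle_phase`, and `reduce_phase` are hypothetical. The in-memory grouping only stands in for Hadoop's distributed shuffle.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, float]]:
    """Map: turn each hypothetical "region,amount" record into a (key, value) pair."""
    for line in lines:
        if not line.strip():
            continue  # skip blank records
        region, amount = line.strip().split(",")
        yield region, float(amount)

def shuffle_phase(pairs: Iterable[Tuple[str, float]]) -> Dict[str, List[float]]:
    """Shuffle: collect all values that share a key (Hadoop does this across the cluster)."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Reduce: summarize each key's values as a count, a total and a maximum."""
    return {
        key: {"count": len(values), "total": sum(values), "max": max(values)}
        for key, values in sorted(groups.items())
    }

# Hypothetical sample data, for illustration only.
records = ["north,120.0", "south,80.5", "north,30.0", "east,42.0", "south,19.5"]
summary = reduce_phase(shuffle_phase(map_phase(records)))
for region, stats in summary.items():
    print(region, stats)
```

Running the script prints one summary line for each region: east, north, and south. In a real Hadoop job, the same map and reduce logic would run as many parallel tasks over data blocks stored in HDFS.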

6. Research Tool

Hadoop has emerged as a powerful research tool. It allows an organization to find answers to its business questions and supports research and development work. Companies use it to perform analysis, and they use that analysis to build rapport with their customers.

Applying Big Data techniques improves operational effectiveness and helps businesses generate revenue more efficiently. It brings a better understanding of business value and drives business growth. Big data analytics and IT techniques also make it feasible to communicate and distribute information between companies. Organizations can collect data from their customers to grow their business.

7. Ease of Use

The creators of Hadoop wrote it in Java, which has one of the largest developer communities, so programmers find it easy to adopt. You also have the flexibility to program in other languages such as C, C++, Python, Perl, and Ruby, for example through Hadoop Streaming. If you are familiar with SQL, Hive is easy to use. If you are comfortable with scripting, Pig is for you.
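The following sketch illustrates writing a Hadoop job in a language other than Java. It shows a word-count mapper and reducer in the style of Hadoop Streaming. Streaming passes input lines to an executable on stdin and reads tab-separated key/value lines from its stdout. The file name `wordcount.py` and its `map`/`reduce` arguments are hypothetical conventions chosen for this sketch. They are not part of Hadoop.

```python
import sys
from itertools import groupby
from typing import Iterable, Iterator

def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit one "word<TAB>1" line per word, the key/value text format Streaming reads."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"

def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Sum the counts for each word; assumes input lines arrive sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines if line.strip())
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"

if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical convention: "wordcount.py map" runs the mapper, "wordcount.py reduce" the reducer.
    stage = mapper if sys.argv[1] == "map" else reducer
    for out_line in stage(sys.stdin):
        print(out_line)
```

You can test the pipeline locally with `cat input.txt | python wordcount.py map | sort | python wordcount.py reduce`, where `sort` plays the role of Hadoop's shuffle-and-sort step. On a cluster, the two commands would instead be passed to the streaming jar through its `-mapper` and `-reducer` options.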

The Hadoop framework handles all the parallel processing of the data at the back end, so we need not worry about the complexities of distributed processing while coding. We just need to write the driver program and the mapper and reducer functions; the framework takes care of how the data is stored and processed in a distributed manner. With the introduction of Spark into the Hadoop ecosystem, coding has become even easier: a job that needs a lot of MapReduce code can often be written in just a few lines of Spark code.
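This division of work can be sketched on a single machine. The developer supplies only a mapper, a reducer, and a small driver. A stand-in for the framework does everything else: it runs map tasks in parallel, partitions their output by key, sorts each partition, and feeds grouped values to the reducer. This is a simplified Python model, not Hadoop's API. `run_job` and `partition` are hypothetical names, and threads stand in for cluster nodes. `zlib.crc32` is a deterministic stand-in for the hash-based partitioning that Hadoop's default HashPartitioner performs.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from itertools import groupby
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

KV = Tuple[str, int]

# ---- Written by the developer: mapper and reducer (word count) ----
def mapper(line: str) -> Iterator[KV]:
    """Emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1

def reducer(key: str, values: Iterable[int]) -> KV:
    """Combine all counts collected for one word."""
    return key, sum(values)

# ---- Handled by the framework (greatly simplified, single machine) ----
def partition(key: str, num_reducers: int) -> int:
    """Pick a reducer for a key; crc32 is a deterministic stand-in for a hash partitioner."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers

def run_job(
    splits: List[List[str]],
    map_fn: Callable[[str], Iterable[KV]],
    reduce_fn: Callable[[str, Iterable[int]], KV],
    num_reducers: int = 2,
) -> Dict[str, int]:
    # 1. Map: each input split gets its own task; threads stand in for cluster nodes.
    def map_task(split: List[str]) -> List[KV]:
        return [kv for line in split for kv in map_fn(line)]

    with ThreadPoolExecutor() as pool:
        map_outputs = list(pool.map(map_task, splits))

    # 2. Shuffle: route every (key, value) pair to the partition that owns its key.
    partitions: List[List[KV]] = [[] for _ in range(num_reducers)]
    for output in map_outputs:
        for key, value in output:
            partitions[partition(key, num_reducers)].append((key, value))

    # 3. Sort and reduce: sort each partition by key, then reduce one key group at a time.
    results: Dict[str, int] = {}
    for part in partitions:
        part.sort(key=lambda kv: kv[0])
        for key, group in groupby(part, key=lambda kv: kv[0]):
            out_key, out_value = reduce_fn(key, (value for _, value in group))
            results[out_key] = out_value
    return results

# ---- The driver: configure the job and submit it ----
if __name__ == "__main__":
    input_splits = [["Hadoop stores data", "Hadoop processes data"], ["Spark runs on Hadoop"]]
    counts = run_job(input_splits, mapper, reducer, num_reducers=2)
    print(sorted(counts.items()))
```

The developer-facing code (the mapper, the reducer, and the driver under `__main__`) stays the same however many splits or reducers there are. Scaling out is the framework's job.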

8. Hadoop is Omnipresent

There is no industry that Big Data has not reached. It covers almost every domain, including healthcare, retail, government, banking, media, transportation, and natural resources. We can see this in the figure above. People are becoming increasingly data-aware, meaning they are realizing the power of data. Hadoop is a framework that can harness this power to improve business.

Companies all over the world are trying to extract information from various sources such as social media in order to improve their performance and increase their revenue. Many organizations struggle to process heterogeneous data and extract value from it, yet such data has the capability to guide revolutionary transformation in research, invention, and business marketing.

Big names such as Walmart, The New York Times, and Facebook all use the Hadoop framework and therefore need a good number of Hadoop experts. So become a Hadoop expert now, before it’s too late to get a job at your dream company.

9. Higher Salaries

Currently, there is a gap between the demand for and the supply of Big Data professionals, and this gap is growing every day. According to IBM, the number of openings for US data professionals will increase by 364,000 by 2020. Given the scarcity of Hadoop professionals, organizations are ready to offer big packages for Hadoop skills. According to Indeed, the average salary for Hadoop skills is $112,000 per annum, 95% higher than the average salary across all other job postings. There is always a compelling need for skilled people who can think from a business point of view: people who understand data and can produce insights from it. For this reason, technical professionals with analytics skills find themselves in great demand.

10. A Maturing Technology

Hadoop is evolving with time. Its new major version, Hadoop 3.0, is coming to market. Hadoop already works with vendors such as Hortonworks, Tableau, and MapR, as well as BI experts, to name a few. New players such as Spark and Flink are arriving on the Big Data stage. These technologies promise lightning-fast processing and provide a single platform for various kinds of workloads. Hadoop is compatible with these new players: it provides robust data storage over which we can deploy technologies like Spark and Flink.

The advent of Spark has enriched the Hadoop ecosystem and enhanced Hadoop’s processing capability. Spark’s creators designed it to work with HDFS, Hadoop’s distributed storage system, and it can also work over HBase and Amazon S3. Even if you work on Hadoop 1.x, you can take advantage of Spark’s capabilities.

Flink, a more recent technology, is also compatible with Hadoop. You can reuse existing Hadoop MapReduce code, such as Mappers and Reducers, in Flink without changing a line of it. Flink also supports native Hadoop data types such as Writable and WritableComparable, and you can mix Hadoop functions with regular Flink functions within the same Flink program.

11. Hadoop has a Better Career Scope

Hadoop excels at processing a wide variety of data. The components of the Hadoop ecosystem provide batch processing, stream processing, machine learning, and more. Learning Hadoop will open the door to a variety of job roles, such as:

- Big Data Architect

- Hadoop Developer

- Data Scientist

- Hadoop Administrator

- Data Analyst

By learning Hadoop, you can get into one of the hottest fields in IT today. Even a fresher can enter this field with proper training and hard work. People already in the IT industry, working as ETL developers, architects, mainframe professionals, and so on, have an edge over freshers, but with determination you can build your career as a Hadoop professional. Companies use Hadoop in almost all domains, such as education, health care, and insurance, which improves the chances of getting placed as a Hadoop professional.

Increased Adoption of Hadoop by Big Data Companies

Fortune 1000 companies are adopting Big Data for their growing business needs. Here is the status of big data adoption across various organizations –

- 12% of big data initiatives are under consideration

- 17% of big data initiatives are underway

- 67% of big data initiatives are in production

These statistics make it clear that the adoption of big data, and with it Hadoop, is accelerating. This is creating huge demand for Hadoop professionals in a high salary bracket.

As Christy Wilson said, Hadoop is the way of the future, because companies aren’t going to be able to remain competitive without the power of big data, and there are no viable, affordable options aside from Hadoop.

We hope you now have your answer to the question: Why Hadoop?

Summary

In this article on why Hadoop matters, we saw that this is an age of emerging technologies and tough competition. The best way to shine is to build a solid understanding of the skill in which you want to build your career. Instructor-led online training is useful for learning Big Data technology, and training with hands-on projects gives you a good grip on it. Hadoop started with just two components, HDFS and MapReduce. Over time, more than 15 components have been added to the Hadoop ecosystem, and it is still growing. Learning these original components helps in understanding the newly added ones.

This article should give you enough reasons to decide to learn Hadoop. So start learning it today; DataFlair promises to help you along your Hadoop learning path. Share your thoughts on this article in the comments.
