Apache Kafka Security | Need and Components of Kafka


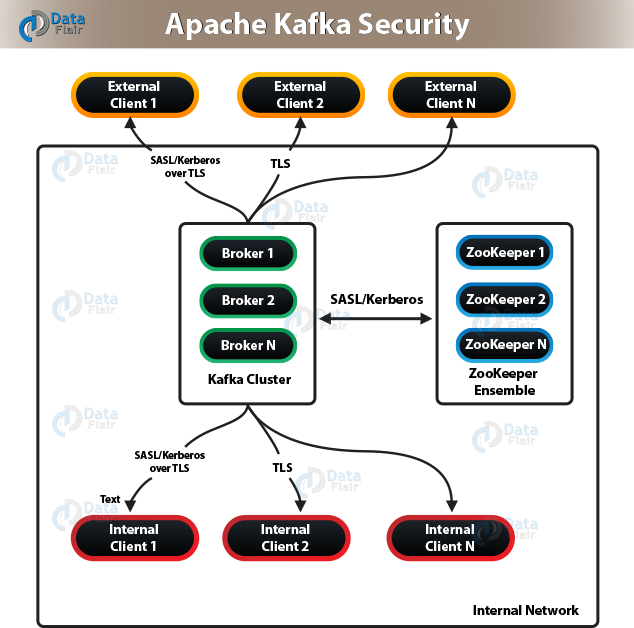

Today, in this Kafka tutorial, we will look at Apache Kafka Security. The tutorial covers why we need security and introduces encryption in detail.

Along the way, we will discuss the problems that Kafka Security solves. Moreover, we will see Kafka authentication and authorization. Also, we will look at ZooKeeper authentication.

So, let’s begin Apache Kafka Security.

What is Apache Kafka Security

The Kafka community added a number of security features in release 0.9.0.0. They can be used either separately or together to enhance security in a Kafka cluster.

So, the currently supported security measures are:

1. Authentication of connections to Kafka brokers from clients and other tools, using either SSL or SASL. Kafka supports the following SASL mechanisms:

- SASL/GSSAPI (Kerberos) – starting at version 0.9.0.0

- SASL/PLAIN – starting at version 0.10.0.0

- SASL/SCRAM-SHA-256 and SASL/SCRAM-SHA-512 – starting at version 0.10.2.0

2. Authentication of connections from brokers to ZooKeeper.

3. Encryption of data transferred between brokers and Kafka clients, or between brokers and tools, using SSL.

4. Authorization of read/write operations by clients.

5. Pluggable authorization, with support for integration with external authorization services.

Note: Security is optional. Non-secured clusters are still supported.

Need for Kafka Security

Basically, Apache Kafka acts as an internal middle layer that enables our back-end systems to share real-time data feeds with each other through Kafka topics. With a standard Kafka setup, any user or application can write any message to any topic, as well as read data from any topic.

However, implementing Kafka security becomes a requirement when our company moves towards a shared tenancy model in which multiple teams and applications use the same Kafka cluster, or when the Kafka cluster starts onboarding critical and confidential information.

Problems Kafka Security Solves

There are three components of Kafka Security:

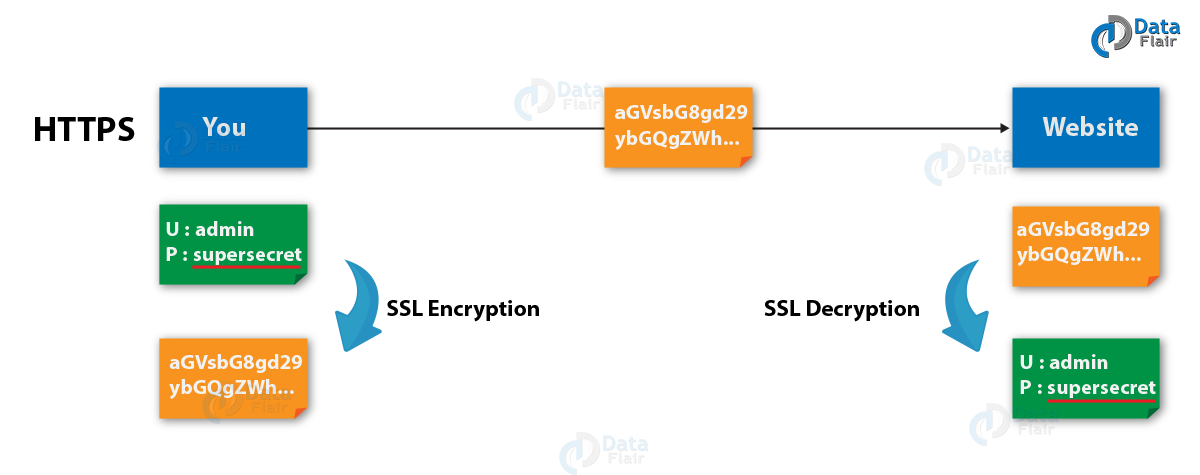

a. Encryption of data in-flight using SSL / TLS

It keeps data encrypted between our producers and Kafka, as well as between our consumers and Kafka. This is the same very common pattern that everyone uses when going on the web (HTTPS).

b. Authentication using SSL or SASL

It allows our producers and consumers to authenticate to our Kafka cluster, which verifies their identity. It is also a secure way to let our clients endorse an identity, which in turn helps with authorization.

c. Authorization using ACLs

Once our clients are authenticated, our Kafka brokers can check them against access control lists (ACLs) to determine whether a particular client is authorized to write to or read from a given topic.

Encryption (SSL) in Kafka

Our packets travel across the network and hop from machine to machine while being routed to the Kafka cluster. If our data is PLAINTEXT, any of these routers could read its content. Encryption solves this problem of the man-in-the-middle (MITM) attack.

With encryption enabled and carefully set up SSL certificates, our data is encrypted and securely transmitted over the network. With SSL, only the first and the final machine possess the ability to decrypt the packet being sent.

However, this encryption comes at a cost: CPU is now used on both the Kafka clients and the Kafka brokers to encrypt and decrypt packets. That said, this performance cost is generally negligible.

Note: The encryption is only in-flight and the data still sits un-encrypted on our broker’s disk.
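As a rough illustration of what in-flight encryption looks like from the client side, the sketch below writes a small client properties file. The directory name, host name, port, truststore path and password are placeholders chosen for this example (assumptions, not values from this tutorial); `security.protocol` and the `ssl.truststore.*` keys are standard Kafka client settings.

```shell
#!/bin/sh
# Sketch: generate a client config that encrypts traffic to the brokers with SSL.
# Every path, password and host name below is a placeholder.

conf_dir="./kafka-security-demo"
mkdir -p "$conf_dir"

cat > "$conf_dir/client-ssl.properties" <<'EOF'
# Connect to the broker's SSL listener (host and port are assumed)
bootstrap.servers=broker1.example.com:9093
# Encrypt all traffic between this client and the brokers
security.protocol=SSL
# Truststore holding the CA certificate that signed the broker certificates
ssl.truststore.location=/var/private/ssl/kafka.client.truststore.jks
ssl.truststore.password=changeit
EOF

echo "wrote $conf_dir/client-ssl.properties"
```

A file like this could then be handed to the console tools, for example through the `--producer.config` option of `kafka-console-producer.sh`.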

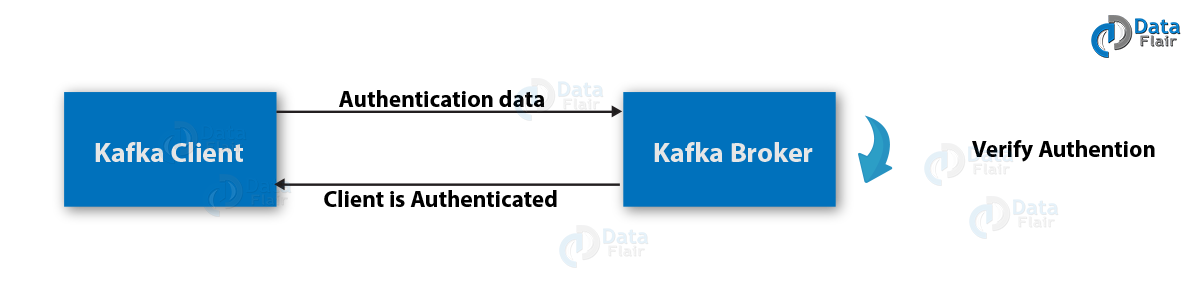

Kafka Authentication (SSL & SASL)

Basically, authentication of Kafka clients to our brokers is possible in two ways: SSL and SASL.

a. SSL Authentication in Kafka

It leverages a capability of SSL that we also call two-way authentication. Basically, a certificate signed by a certificate authority is issued to our clients, which allows our Kafka brokers to verify the identity of the clients.

It is the most common setup, especially when we are using a managed Kafka cluster from a provider like Heroku, Confluent Cloud or CloudKarafka.
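To make the two-way idea more concrete, here is a minimal sketch that writes one broker fragment and one client file. The broker requires a client certificate through `ssl.client.auth=required`, and the client presents its own keystore. File names, paths, host names and passwords are assumptions made for illustration; in a real setup the broker lines would be merged into the existing `server.properties`.

```shell
#!/bin/sh
# Sketch: two-way (mutual) SSL authentication between client and broker.
# All paths, passwords and host names are placeholders.

conf_dir="./kafka-security-demo"
mkdir -p "$conf_dir"

# Broker side: expose an SSL listener and demand a certificate from every client
cat > "$conf_dir/broker-ssl-auth.properties" <<'EOF'
listeners=SSL://broker1.example.com:9093
ssl.client.auth=required
ssl.keystore.location=/var/private/ssl/kafka.server.keystore.jks
ssl.keystore.password=changeit
ssl.key.password=changeit
ssl.truststore.location=/var/private/ssl/kafka.server.truststore.jks
ssl.truststore.password=changeit
EOF

# Client side: trust the broker's CA and present a CA-signed certificate of our own
cat > "$conf_dir/client-ssl-auth.properties" <<'EOF'
security.protocol=SSL
ssl.truststore.location=/var/private/ssl/kafka.client.truststore.jks
ssl.truststore.password=changeit
ssl.keystore.location=/var/private/ssl/kafka.client.keystore.jks
ssl.keystore.password=changeit
ssl.key.password=changeit
EOF

echo "wrote broker and client SSL authentication configs to $conf_dir"
```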

b. SASL Authentication in Kafka

SASL stands for Simple Authentication and Security Layer. The basic concept here is that the authentication mechanism is separate from the Kafka protocol. It is very popular with Big Data systems as well as Hadoop setups.

Kafka supports the following shapes and forms of SASL:

i. SASL PLAIN

SASL PLAIN is a classic username/password combination. These usernames and passwords need to be stored on the Kafka brokers in advance, and each change requires a rolling restart.

As a result, it is the less recommended kind of security. Also, make sure to enable SSL encryption while using SASL/PLAIN, so that credentials aren't sent as PLAINTEXT on the network.
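The sketch below shows why SASL/PLAIN changes need a restart: the users live in a static JAAS file on each broker. It writes a broker JAAS file and a matching client config that uses `SASL_SSL`, so the password is not sent unencrypted. The user names, passwords and file names are made up for this example.

```shell
#!/bin/sh
# Sketch: SASL/PLAIN with credentials stored on the broker, used over SSL.
# User names, passwords and file names are placeholders.

conf_dir="./kafka-security-demo"
mkdir -p "$conf_dir"

# Broker side: each user_<name>="<password>" entry defines one allowed user.
# Adding or changing a user means editing this file and restarting the brokers.
cat > "$conf_dir/plain_server_jaas.conf" <<'EOF'
KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="admin-secret"
    user_admin="admin-secret"
    user_alice="alice-secret";
};
EOF

# Client side: SASL_SSL keeps the username/password encrypted on the wire
cat > "$conf_dir/client-sasl-plain.properties" <<'EOF'
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \
    username="alice" \
    password="alice-secret";
EOF

echo "wrote SASL/PLAIN broker JAAS file and client config to $conf_dir"
```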

ii. SASL SCRAM

It is a username/password combination alongside a challenge (salt), which makes it more secure. Basically, password hashes are stored in ZooKeeper here, which permits us to scale security even without rebooting brokers.

Make sure to enable SSL encryption while using SASL/SCRAM, so that credentials aren't sent as PLAINTEXT on the network.
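A client configuration for SASL/SCRAM might look like the sketch below. The user name, password and file name are assumptions for illustration. The commented command shows how credentials are typically created in ZooKeeper with `kafka-configs.sh` on ZooKeeper-based Kafka versions; it is left as a comment because it needs a running Kafka installation.

```shell
#!/bin/sh
# Sketch: client config for SASL/SCRAM over SSL.
# User name, password and file name are placeholders.

conf_dir="./kafka-security-demo"
mkdir -p "$conf_dir"

# Credentials are created in ZooKeeper, so no broker restart is needed, e.g.:
#   bin/kafka-configs.sh --zookeeper localhost:2181 --alter \
#     --add-config 'SCRAM-SHA-256=[password=alice-secret]' \
#     --entity-type users --entity-name alice

cat > "$conf_dir/client-sasl-scram.properties" <<'EOF'
security.protocol=SASL_SSL
sasl.mechanism=SCRAM-SHA-256
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \
    username="alice" \
    password="alice-secret";
EOF

echo "wrote $conf_dir/client-sasl-scram.properties"
```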

iii. SASL GSSAPI (Kerberos)

It is also a very secure way of providing authentication, because it is based on the Kerberos ticket mechanism. The most common implementation of Kerberos is Microsoft Active Directory.

Since it allows companies to manage security from within their Kerberos server, SASL/GSSAPI is a great choice for big enterprises.

Also, SSL encryption of communications is optional with SASL/GSSAPI. Setting up Kafka with Kerberos is the most difficult option, but worth it in the end.
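For a feel of the moving parts in a Kerberos setup, the following sketch writes a client JAAS file and a client properties file. The keytab path, principal, realm and service name `kafka` are assumed values for this example. `SASL_PLAINTEXT` is used here only to show that SSL is optional with GSSAPI; switching to `SASL_SSL` adds encryption.

```shell
#!/bin/sh
# Sketch: Kafka client authenticating with SASL/GSSAPI (Kerberos).
# Keytab path, principal and realm are placeholders.

conf_dir="./kafka-security-demo"
mkdir -p "$conf_dir"

# JAAS section read by Kafka clients; the keytab replaces an interactive password
cat > "$conf_dir/kafka_client_jaas.conf" <<'EOF'
KafkaClient {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=true
    keyTab="/etc/security/keytabs/kafka_client.keytab"
    principal="kafka-client-1@EXAMPLE.COM";
};
EOF

cat > "$conf_dir/client-sasl-gssapi.properties" <<'EOF'
# Use SASL_SSL instead to also encrypt the traffic
security.protocol=SASL_PLAINTEXT
sasl.mechanism=GSSAPI
# Must match the primary of the principal the brokers run as
sasl.kerberos.service.name=kafka
EOF

# The JVM finds the JAAS file through this system property
KAFKA_OPTS="-Djava.security.auth.login.config=$(pwd)/kafka-security-demo/kafka_client_jaas.conf"
export KAFKA_OPTS
echo "KAFKA_OPTS=$KAFKA_OPTS"
```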

- (WIP) SASL Extension (KIP-86 in progress)

This will make it easier to configure new or custom SASL mechanisms that are not implemented in Kafka.

- (WIP) SASL OAUTHBEARER (KIP-255 in progress)

This will allow us to leverage OAuth 2 tokens for authentication.

For now, the easier way is to use SASL/SCRAM or SASL/GSSAPI (Kerberos) for the authentication layer.

Kafka Authorization (ACL)

As soon as our Kafka clients are authenticated, Kafka needs to be able to decide what they can and cannot do. This is where authorization comes in, controlled by Access Control Lists (ACLs).

ACLs are very helpful because they can help us prevent disasters.

Let's understand this with an example. Suppose we have a topic that needs to be writable from only a subset of clients or hosts. We want to prevent our average user from writing anything to this topic, which prevents data corruption and deserialization errors.

ACLs are also great if we have some sensitive data and we need to prove to regulators that only certain applications or users can access that data.

We can use the kafka-acls command to add ACLs. It even has some facilities and shortcuts to add producers or consumers.

kafka-acls --topic test --producer --authorizer-properties zookeeper.connect=localhost:2181 --add --allow-principal User:alice

The result being:

Adding ACLs for resource `Topic:test`:

User:alice has Allow permission for operations: Describe from hosts: *

User:alice has Allow permission for operations: Write from hosts: *

Adding ACLs for resource `Cluster:kafka-cluster`:

User:alice has Allow permission for operations: Create from hosts: *

Note: With the default SimpleAclAuthorizer, ACLs are stored in ZooKeeper. Therefore, ensure that only Kafka brokers may write to ZooKeeper (zookeeper.set.acl=true). Otherwise, any user could come in and edit the ACLs, thus defeating the point of security.
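ACLs only take effect once an authorizer is enabled on the brokers. The sketch below writes a broker fragment that turns on SimpleAclAuthorizer together with zookeeper.set.acl. The file name and the `User:admin` super user are assumptions for this example; the lines would be merged into each broker's `server.properties`.

```shell
#!/bin/sh
# Sketch: broker settings that enable ACL-based authorization.
# The super user and file name are placeholders.

conf_dir="./kafka-security-demo"
mkdir -p "$conf_dir"

cat > "$conf_dir/broker-acl.properties" <<'EOF'
# Enable the default ZooKeeper-backed authorizer
authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer
# Principals that bypass ACL checks (separate multiple entries with ';')
super.users=User:admin
# Deny access to resources that have no ACL at all
allow.everyone.if.no.acl.found=false
# Create znodes with ACLs so that only brokers can modify them
zookeeper.set.acl=true
EOF

echo "wrote $conf_dir/broker-acl.properties"
```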

ZooKeeper Authentication in Kafka

a. New Clusters

There are two necessary steps in order to enable ZooKeeper authentication on brokers:

- First, create a JAAS login file and set the appropriate system property to point to it.

- Set the configuration property zookeeper.set.acl in each broker to true.

Basically, the metadata that the Kafka cluster stores in ZooKeeper is world-readable, but only brokers can modify it, because inappropriate manipulation of that data can cause cluster disruption. Also, we recommend limiting access to ZooKeeper via network segmentation.
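The two steps for a new cluster can be sketched as follows, assuming Kerberos is used for the broker-to-ZooKeeper login. The `Client` section is the JAAS section used for ZooKeeper connections; the keytab path, principal and file names are placeholders invented for this example.

```shell
#!/bin/sh
# Sketch: enable ZooKeeper authentication on a broker in a new cluster.
# Keytab path, principal and file names are placeholders.

conf_dir="./kafka-security-demo"
mkdir -p "$conf_dir"

# Step 1a: JAAS login file with the section used for ZooKeeper connections
cat > "$conf_dir/zk_client_jaas.conf" <<'EOF'
Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=true
    keyTab="/etc/security/keytabs/kafka_server.keytab"
    principal="kafka/kafka1.example.com@EXAMPLE.COM";
};
EOF

# Step 1b: system property that points the broker JVM at the JAAS file
jaas_file="$(pwd)/kafka-security-demo/zk_client_jaas.conf"
KAFKA_OPTS="-Djava.security.auth.login.config=$jaas_file"
export KAFKA_OPTS

# Step 2: broker property so that znodes are created with secure ACLs
cat > "$conf_dir/broker-zk-acl.properties" <<'EOF'
zookeeper.set.acl=true
EOF

echo "KAFKA_OPTS=$KAFKA_OPTS"
```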

b. Migrating Clusters

If we are running a version of Kafka that does not support security, or simply have security disabled, and we want to make the cluster secure, we need to execute the following steps to enable ZooKeeper authentication with minimal disruption to our operations:

- First, perform a rolling restart setting the JAAS login file, which enables brokers to authenticate. At the end of the rolling restart, brokers are able to manipulate znodes with strict ACLs, but they will not create znodes with those ACLs.

- Next, perform a second rolling restart of the brokers, this time setting the configuration parameter zookeeper.set.acl to true. This enables the use of secure ACLs when creating znodes.

- Finally, execute the ZkSecurityMigrator tool. To do so, use the script ./bin/zookeeper-security-migration.sh with zookeeper.acl set to secure. This tool traverses the corresponding sub-trees, changing the ACLs of the znodes.

We can also turn off authentication in a secure cluster with the following steps:

- Perform a rolling restart of the brokers setting the JAAS login file, which enables brokers to authenticate, but setting zookeeper.set.acl to false. At the end of the rolling restart, brokers stop creating znodes with secure ACLs, but they are still able to authenticate and manipulate all znodes.

- Execute the ZkSecurityMigrator tool with the script ./bin/zookeeper-security-migration.sh, with zookeeper.acl set to unsecure. It traverses the corresponding sub-trees, changing the ACLs of the znodes.

- Perform a second rolling restart of the brokers, this time omitting the system property that sets the JAAS login file.

Here is an example of how to run the migration tool:

./bin/zookeeper-security-migration.sh --zookeeper.acl=secure --zookeeper.connect=localhost:2181

Run this to see the full list of parameters:

./bin/zookeeper-security-migration.sh --help

c. Migrating the ZooKeeper Ensemble

We also need to enable authentication on the ZooKeeper ensemble. To do so, we need to perform a rolling restart of the servers and set a few properties.

So, this was all in Kafka Security Tutorial. Hope you like our explanation.

Conclusion

Hence, in this Kafka security tutorial, we have seen an introduction to Kafka Security. Moreover, we discussed the need for Kafka Security and the problems it solves. In addition, we discussed SSL encryption and Kafka authentication with SSL and SASL.

Along with this, under authorization, we saw Kafka ACLs. Finally, we looked at ZooKeeper authentication and its major steps. If you have any doubts, feel free to ask in the comment section.
