Apache Kafka Load Testing Using JMeter


In this Apache Kafka tutorial, we will learn how to load test Apache Kafka using Apache JMeter.

This tutorial also shows how to configure the producer and the consumer, that is, how to develop an Apache Kafka Producer and Kafka Consumer using JMeter. Finally, we will build a Kafka load testing scenario in JMeter.

Before we get to Kafka load testing, let's start with a brief introduction to Kafka.

What is Apache Kafka?

In simple terms, Apache Kafka is a hybrid of a distributed database and a message queue. Many large companies use it to process terabytes of information, and it is widely popular for its features.

For example, LinkedIn uses it to stream data about user activity, and Netflix uses it for data collection and buffering for downstream systems such as Elasticsearch, Amazon EMR, Mantis, and many more.

Here are some Kafka features that matter for load testing:

- Long message retention: one week by default.

- High performance, thanks to sequential I/O.

- Convenient clustering.

- High availability of data, thanks to the ability to replicate and distribute queues across the cluster.

- Beyond transferring data, Kafka can also process it using the Streams API.

Kafka is used to work with very large amounts of data. Hence, when load testing Kafka with JMeter, pay attention to the following aspects:

- Data is written to disk constantly, which consumes server capacity. If capacity is insufficient, the server will reach a denial-of-service state.

- The distribution of partitions and the number of brokers also affect how service capacity is used.

- Everything becomes even more complicated with replication, because maintaining it requires even more resources, and it becomes more likely that brokers will refuse to receive messages.

Even though most processes are automated, data may be lost when it is processed in such huge amounts. Hence, testing these services is very important, and it is essential to be able to generate a proper load.

For this demonstration, Apache Kafka is installed on Ubuntu. We will use the Pepper-Box plugin as the producer, because its interface for generating messages is more convenient than kafkameter's.

However, neither plugin provides a consumer implementation, so we have to implement the consumer ourselves. We will use the JSR223 Sampler to do that. Now, let's move on to Kafka load testing.

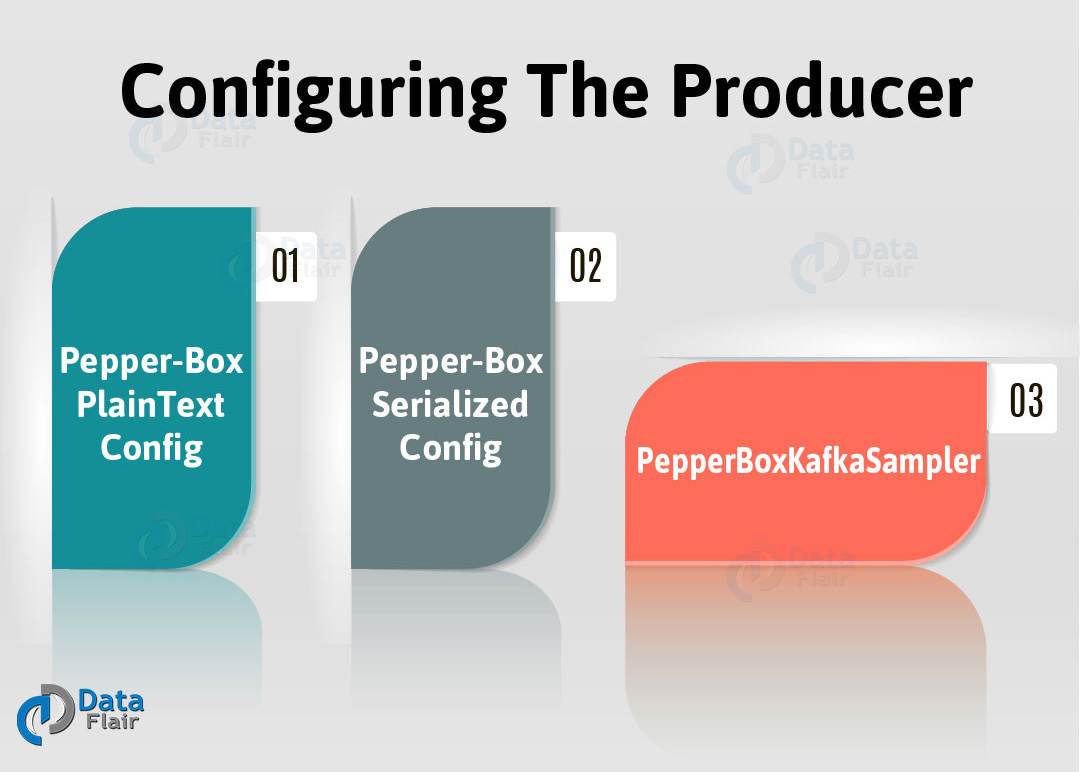

Configuring The Producer – Pepper-Box

To install the plugin, compile it from source or download the jar file. Then put the jar in JMeter's lib/ext folder and restart JMeter.

The plugin has 3 elements:

- Pepper-Box PlainText Config

It builds text messages according to a specified template.

- Pepper-Box Serialized Config

It builds a message that is a serialized Java object.

- PepperBoxKafkaSampler

It sends the messages built by the previous elements.

Let's look at each of these elements in detail:

a. Pepper-Box PlainText Configuration

To add this element, go to Thread group -> Add -> Config Element -> Pepper-Box PlainText Config.

The Pepper-Box PlainText Config element has 2 fields:

i. Message Placeholder Key

This is the key that must be specified in the PepperBoxKafkaSampler in order to use the template from this element.

ii. Schema Template

This is the message template. It can use JMeter variables and functions as well as the plugin's own functions. The message structure can be anything, from plain text to JSON or XML.

b. Pepper-Box Serialized Configuration

To add this element, go to Thread group -> Add -> Config Element -> Pepper-Box Serialized Config.

This element has a field for the key and a Class Name field for specifying the Java class. Make sure the jar file containing the class is placed in the lib/ext folder.

Once the class is specified, its fields appear below, and you can assign the desired values to them. Here we repeat the message from the previous element, but this time it will be a Java object.
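As a rough illustration of the kind of class that could be named in the Class Name field, here is a minimal sketch. The class name `StatMessage`, its fields, and the getter/setter convention are all assumptions made for this example, not something the plugin documentation is quoted on. The `roundTrip` helper only checks that the object survives standard Java serialization, assuming that is what the plugin's serializer relies on.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical message class; the name and fields are illustrative only.
// Declared without `public` so the sketch compiles from any file name; a class
// packaged into a jar for JMeter would normally be public in StatMessage.java.
class StatMessage implements Serializable {
    private static final long serialVersionUID = 1L;

    private long messageId;
    private int itemId;
    private String statistics;

    public long getMessageId() { return messageId; }
    public void setMessageId(long messageId) { this.messageId = messageId; }
    public int getItemId() { return itemId; }
    public void setItemId(int itemId) { this.itemId = itemId; }
    public String getStatistics() { return statistics; }
    public void setStatistics(String statistics) { this.statistics = statistics; }

    // Serializes the object to bytes and reads it back, to confirm that it
    // survives standard Java serialization.
    static StatMessage roundTrip(StatMessage original) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(original);
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return (StatMessage) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        StatMessage message = new StatMessage();
        message.setMessageId(1L);
        message.setItemId(42);
        message.setStatistics("cpu=0.75");

        StatMessage copy = roundTrip(message);
        System.out.println(copy.getMessageId() + " " + copy.getItemId() + " " + copy.getStatistics());
    }
}
```

A class like this would be compiled, packaged into a jar, and placed in lib/ext, which is the jar the step above refers to.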

c. PepperBoxKafkaSampler

To add this element, go to Thread group -> Add -> Sampler -> Java Request. Then select com.gslab.pepper.sampler.PepperBoxKafkaSampler from the drop-down list.

This element has the following settings:

- bootstrap.servers/zookeeper.servers

The addresses of the brokers/ZooKeeper nodes in the format broker-ip-1:port, broker-ip-2:port, etc.

- kafka.topic.name

It is the name of the topic for message publication.

- key.serializer

The class for key serialization. If the message has no key, leave it unchanged.

- value.serializer

The class for message serialization. For simple text, leave the field unchanged. When using Pepper-Box Serialized Config, specify “com.gslab.pepper.input.serialized.ObjectSerializer”.

- compression.type

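The type of message compression (none/gzip/snappy/lz4).

- batch.size

The maximum size, in bytes, of a batch of messages that the producer groups together before sending them to a partition.

- linger.ms

How long, in milliseconds, the producer waits for more messages to fill a batch before sending it.

- buffer.memory

The total memory, in bytes, that the producer can use to buffer messages waiting to be sent.

- acks

The acknowledgment level the producer requires (0/1/-1). With 0, the producer does not wait for any acknowledgment, so delivery is not guaranteed. With 1, the partition leader acknowledges the write. With -1 (all), every in-sync replica must acknowledge the write.

- receive.buffer.bytes/send.buffer.bytes

The size of the TCP receive/send buffer. -1 means use the OS default.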

- security.protocol

The protocol used to communicate with brokers (PLAINTEXT/SSL/SASL_PLAINTEXT/SASL_SSL).

- message.placeholder.key

The placeholder key that was specified in the Pepper-Box config element.

- kerberos.auth.enabled, java.security.auth.login.config, java.security.krb5.conf, sasl.kerberos.service.name

This group of fields is responsible for authentication.

If necessary, additional parameters can be added by prefixing the name with _, for example _ssl.key.password.
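Most of the sampler's field names match standard Kafka producer configuration keys. As a sketch of how those settings fit together, the snippet below collects them into a plain `java.util.Properties` object. The class name `ProducerSettingsSketch`, the broker address, and all values are illustrative assumptions, not tuning recommendations, and the sampler builds its own producer internally.

```java
import java.util.Properties;
import java.util.TreeSet;

// Hypothetical helper that only assembles settings; it does not connect to Kafka.
class ProducerSettingsSketch {

    static Properties build() {
        Properties props = new Properties();
        // Assumed local broker; replace with your own broker list.
        props.setProperty("bootstrap.servers", "localhost:9092");
        props.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("compression.type", "none");
        props.setProperty("batch.size", "16384");       // bytes per batch, per partition
        props.setProperty("linger.ms", "0");            // do not wait for batches to fill
        props.setProperty("buffer.memory", "33554432"); // 32 MB of producer buffer
        props.setProperty("acks", "1");                 // wait for the partition leader only
        props.setProperty("security.protocol", "PLAINTEXT");
        return props;
    }

    public static void main(String[] args) {
        Properties props = build();
        // Print the settings in alphabetical order for easy comparison with the sampler.
        for (String name : new TreeSet<>(props.stringPropertyNames())) {
            System.out.println(name + " = " + props.getProperty(name));
        }
    }
}
```

For a load test, batch.size, linger.ms, compression.type, and acks are the settings most likely to change throughput, so it can be useful to vary them one at a time between runs.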

Configuring the Consumer

Although it is the producer that creates the largest load on the server, the service has to deliver messages too. Hence, to reproduce real situations more accurately, we should also add consumers. We can also use them to check whether all messages have been delivered.

As an example, let's take the following source code and briefly go through its steps:

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Properties;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    // Placeholder values: replace with your own consumer group and topic.
    String group = "test-group";
    String topic = "test-topic";

    // Connection configuration
    Properties props = new Properties();
    props.put("bootstrap.servers", "localhost:9092");
    props.put("group.id", group);
    props.put("enable.auto.commit", "true");
    props.put("auto.commit.interval.ms", "1000");
    props.put("session.timeout.ms", "30000");
    props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
    props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

    // Subscribe to the topic
    KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props);
    consumer.subscribe(Arrays.asList(topic));
    System.out.println("Subscribed to topic " + topic);

    // Receive messages in a loop and print them to the console.
    // poll(Duration) needs kafka-clients 2.0 or later; older clients use poll(100).
    while (true) {
        ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
        for (ConsumerRecord<String, String> record : records) {
            System.out.printf("offset = %d, key = %s, value = %s\n",
                    record.offset(), record.key(), record.value());
        }
    }

- The connection is configured.

- A topic is specified and a subscription is made to it.

- Messages from this topic are received in a loop and printed to the console.

All of this code is added to the JSR223 Sampler in JMeter, with some modifications.

Building the Kafka Load Testing Scenario in JMeter

Now that we have covered all the elements needed to create the load, let's post several messages to a topic of our Kafka service. Assume we have a resource whose activity data is collected. The information will be sent as an XML document.

- First, add the Pepper-Box PlainText Config and create a template. The message has the following structure: message number, message ID, ID of the item the statistics are collected from, the statistics, and the sending date stamp.

- Next, add the PepperBoxKafkaSampler and specify bootstrap.servers and kafka.topic.name for our Kafka service.

- Then, add the JSR223 Sampler with the consumer code to a separate Thread Group. For it to work, we also need the kafka-clients-x.x.x.x.jar file, which contains the classes for working with Kafka. It can be found in the Kafka directory, /kafka/lib.

Here, instead of printing to the console, the modified script saves the data to a file, which makes the results easier to analyze. We have also added the part that sets the execution time of the consumer.

Updated part:

    import java.io.FileOutputStream;
    import java.io.PrintStream;
    import java.time.Duration;

    // Run the consumer for 5 seconds.
    long t = System.currentTimeMillis();
    long end = t + 5000;

    // Append received messages to data.csv in the working directory.
    FileOutputStream f = new FileOutputStream("data.csv", true);
    PrintStream p = new PrintStream(f);

    while (System.currentTimeMillis() < end) {
        ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
        for (ConsumerRecord<String, String> record : records) {
            p.println("offset = " + record.offset() + " value = " + record.value());
        }
        consumer.commitSync();
    }

    consumer.close();
    p.close();
    f.close();

As a result, the script has two thread groups, and both run simultaneously.

The Kafka producers begin publishing messages to the specified topic, while the Kafka consumers connect to the topic and wait for messages from Kafka. Each time a consumer receives a message, it writes the message to the file.

- Finally, run the script and view the results.

We can see the received messages in the output file. After that, we only have to adjust the number of consumers and producers to increase the load.
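To check whether every message was delivered, the offsets in the output file can be scanned for gaps. The sketch below is one possible way to do that and rests on several assumptions: the class `OffsetCheck` is a hypothetical helper, each record starts a line in the `offset = N value = ...` format written by the consumer script, and the topic has a single partition (offsets are per partition, so a multi-partition topic would need the partition number recorded as well).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of JMeter or Kafka.
class OffsetCheck {
    // Matches the start of a line written by the consumer script.
    private static final Pattern LINE = Pattern.compile("^offset = (\\d+) value = ");

    // Extracts the offset from each matching line and skips everything else,
    // for example continuation lines of a multi-line XML value.
    static List<Long> parseOffsets(List<String> lines) {
        List<Long> offsets = new ArrayList<>();
        for (String line : lines) {
            Matcher m = LINE.matcher(line);
            if (m.find()) {
                offsets.add(Long.parseLong(m.group(1)));
            }
        }
        return offsets;
    }

    // Counts how many offsets are absent between the smallest and largest one seen.
    static long countMissing(List<Long> offsets) {
        if (offsets.isEmpty()) {
            return 0;
        }
        long min = Long.MAX_VALUE;
        long max = Long.MIN_VALUE;
        for (long offset : offsets) {
            min = Math.min(min, offset);
            max = Math.max(max, offset);
        }
        return (max - min + 1) - new HashSet<>(offsets).size();
    }

    public static void main(String[] args) {
        // In-memory sample; for a real run, read the lines of the output file instead.
        List<String> lines = Arrays.asList(
                "offset = 0 value = <message>a</message>",
                "offset = 1 value = <message>b</message>",
                "offset = 3 value = <message>c</message>");
        List<Long> offsets = parseOffsets(lines);
        // prints "received: 3, missing: 1"
        System.out.println("received: " + offsets.size() + ", missing: " + countMissing(offsets));
    }
}
```

A gap does not always mean a lost message: the consumer may simply have stopped before the producer finished, so it helps to compare the count against the number of samples the producer thread group reports.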

Note: Do not use random data for messages during testing, because random messages can differ significantly in size from the real ones, and that difference may affect the test results.
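One way to act on this note is to measure the size of a representative test message and compare it with the size of real messages. The sketch below builds an XML message with the five fields from the scenario and reports its size in bytes. The class `MessageSizeSketch` and the element names are assumptions for illustration, and the values are assumed to contain no characters that need XML escaping.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper for estimating message size; element names are illustrative.
class MessageSizeSketch {

    // Builds an XML message with the five fields used in the scenario.
    // No escaping is applied, so values must not contain <, > or &.
    static String buildMessage(long number, String messageId, int itemId,
                               String statistics, long sentAtMillis) {
        return "<message>"
                + "<number>" + number + "</number>"
                + "<messageId>" + messageId + "</messageId>"
                + "<itemId>" + itemId + "</itemId>"
                + "<statistics>" + statistics + "</statistics>"
                + "<sentAt>" + sentAtMillis + "</sentAt>"
                + "</message>";
    }

    // Size of the message as it would be sent when encoded as UTF-8 text.
    static int sizeInBytes(String message) {
        return message.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        String message = buildMessage(1, "msg-0001", 42, "views=128;clicks=7",
                System.currentTimeMillis());
        System.out.println(message);
        System.out.println("size in bytes: " + sizeInBytes(message));
    }
}
```

If the measured size is far from what the production system handles, the template fields can be padded or trimmed until the two are comparable.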

So, this was all about load testing Apache Kafka with JMeter. We hope you liked our explanation.

Conclusion

We have seen how to use JMeter to load test Apache Kafka, including how to configure the producer and the consumer using JMeter.

Finally, we learned how to build a Kafka load testing scenario in JMeter. If you have any questions about Kafka load testing with JMeter, feel free to ask in the comments.


Hi the above mentioned was how to configure in java,may i know how to configure in c# or .net

Is there a way to do load testing on Kafka with Neoload. If yes, could some one provide the steps??

b. Pepper-Box Serialized Configuration

Now, follow several steps to add this element, first go to Thread group -> Add -> Config Element -> Pepper-Box Serialized Config

However, this element has a field for the key and the Class Name field, which is intended for specifying the Java class. Makes sure that the jar file with the class, must be placed in the lib/ext folder. Hence, the fields with its properties will appear below just after it is specified, and also it is possible to assign desired values to them now. Although, here again, we repeated the message from the last element, it will be a Java object this time.

WHICH JAR FILE WE ARE TALKING ABOVE?

I too have the same query. gone through multiple websites regarding this Apache Kafka testing with JMeter. Documentation is not clear any where and we are ending up in errors and wasting up 3-4 days of time.

Sagar, are you able to find out answer for the above query and do the above setup successfully? Please help

Hi..

I am new to Kafka performance testing and my client is asking to test with Loadrunner or Jmeter..

Could you please provide some session on this ..

Appreciate for quick response

I am getting the below error.

Can you please help me here?

Response message:javax.script.ScriptException: In file: inline evaluation of: “//Define the certain properties that need to be passed to the constructor of a K . . . ” Encountered “,” at line 17, column 23.

in inline evaluation of: “//Define the certain properties that need to be passed to the constructor of a K . . . ” at line number 17

can i send an xml file to kafka topic message