Masking and Padding in Keras


In Keras, we build neural networks with either the Functional API or the Sequential API. With both, preparing data for the input layer can be difficult, especially for RNN and LSTM models, because the input sequences usually vary in length. These variable-length sequences must be converted to a common length before they can be batched, and the model must then be told which values are only filler. Keras and TensorFlow handle these two tasks with padding and masking: padding brings every sequence to the same length, and masking marks the padded positions so that layers can skip them.

Padding in Keras

To ensure that all input sequences have the same length, we pad or truncate the input data points. A deep learning model accepts input only as tensors of a standardized shape.

We define the tensors as:

tensor(batch_size, length_sequence, features)

Every input to the model must have a length equal to length_sequence.

If an input sequence is shorter than length_sequence, padding adds placeholder values to it until it reaches that length. If an input sequence is longer than length_sequence, we truncate it by dropping the surplus values.
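
The pad-or-truncate rule above can be sketched in plain Python. This is only an illustration of the idea, not how Keras implements it; the helper name pad_or_truncate and its arguments are hypothetical, and it always works at the end of the sequence.

```python
def pad_or_truncate(sequence, length, value=0):
    """Return a copy of `sequence` with exactly `length` items (illustrative helper)."""
    # Too long: keep only the first `length` items.
    trimmed = list(sequence[:length])
    # Too short: append placeholder values until the target length is reached.
    return trimmed + [value] * (length - len(trimmed))


padded = pad_or_truncate([123, 600, 51], length=5)
shortened = pad_or_truncate([43, 61, 12, 645, 456, 345], length=5)

print(padded)     # [123, 600, 51, 0, 0]
print(shortened)  # [43, 61, 12, 645, 456]
```

Keras additionally lets you choose which end of the sequence is padded or truncated.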

This functionality is available in Keras through the following function:

keras.preprocessing.sequence.pad_sequences(sequences, maxlen, padding, truncating)

Setting padding='pre' adds placeholder values at the start of each sequence, and padding='post' adds them at the end. Similarly, truncating='post' removes the surplus values from the end of a sequence that is too long, and truncating='pre' removes them from the beginning. Both arguments default to 'pre', and when maxlen is omitted, every sequence is padded to the length of the longest one.

Example:

import keras

inputs = [
    [123, 600, 51],
    [103, 80, 215, 46, 546],
    [43, 61, 12, 645, 456, 345],
]

# Pad every sequence with zeros at the end, up to the length of the longest one
padding_inputs = keras.preprocessing.sequence.pad_sequences(
    inputs, padding="post"
)

Padded inputs would be:

[
    [123, 600, 51, 0, 0, 0],
    [103, 80, 215, 46, 546, 0],
    [43, 61, 12, 645, 456, 345],
]

Masking in Keras

The idea behind masking is that the model should not be trained on padded values. The placeholder values in an input sequence carry no information, so they must be ignored, and the layers that process the sequence must be told which positions are padding. This technique of identifying and skipping padded values is called masking in Keras.
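
To make the idea concrete, here is a minimal NumPy sketch (not Keras code) of what a mask is and why it matters. It assumes that 0 is used only as the padding value; the variable names are chosen for this example.

```python
import numpy as np

padded = np.array([
    [123, 600, 51, 0, 0, 0],
    [103, 80, 215, 46, 546, 0],
    [43, 61, 12, 645, 456, 345],
])

# True marks a real value, False marks a padded position.
mask = padded != 0

# Averaging over every position lets the padding zeros distort the result...
naive_mean = padded.mean(axis=1)
# ...while averaging only over unmasked positions ignores the padding.
masked_mean = (padded * mask).sum(axis=1) / mask.sum(axis=1)

print(mask.sum(axis=1))               # [3 5 6]
print(naive_mean[0], masked_mean[0])  # 129.0 258.0
```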

We can perform masking in Keras in the following two ways:

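1. Using the keras.layers.Masking layer

The Masking layer expects input of shape (batch_size, length_sequence, features), so we first add a feature axis to the padded NumPy array and convert it to a float tensor using TensorFlow's convert_to_tensor method.

from keras.layers import Masking
import numpy as np
import tensorflow as tf

# Add a trailing feature axis: (batch, timesteps) -> (batch, timesteps, 1)
x_train = np.expand_dims(padding_inputs, -1)
x_train = tf.convert_to_tensor(x_train, dtype="float32")

# Timesteps whose features all equal mask_value are treated as padding
mask_layer = Masking(mask_value=0.0)
mask_x_train = mask_layer(x_train)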

2. Using the keras.layers.Embedding layer

Here we set mask_zero=True in the Embedding layer to enable masking.

from keras.layers import Embedding

# With mask_zero=True, index 0 is reserved for padding and cannot be a real token id
embed = Embedding(input_dim=1000, output_dim=32, mask_zero=True)
masking_output = embed(padding_inputs)
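
To see the masks that these two layers produce, one option is to call their compute_mask method directly. The following is a minimal sketch under the assumption that 0 is the padding value; the sample array and variable names are made up for illustration.

```python
import numpy as np
from keras.layers import Embedding, Masking

padded = np.array([
    [123, 600, 51, 0, 0, 0],
    [103, 80, 215, 46, 546, 0],
])

# Masking works on (batch, timesteps, features) input; a timestep is masked
# when all of its features equal mask_value.
features = np.expand_dims(padded, -1).astype("float32")
masking_mask = Masking(mask_value=0.0).compute_mask(features)

# Embedding works on integer ids of shape (batch, timesteps); id 0 is masked.
embedding = Embedding(input_dim=1000, output_dim=32, mask_zero=True)
embedding_mask = embedding.compute_mask(padded)

print(np.array(masking_mask))
print(np.array(embedding_mask))
```

Both masks should be boolean arrays of shape (batch, timesteps), with False at the padded positions.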

So, using masking and padding, we can efficiently prepare input sequences of varying length for training a deep learning model. This is most often needed for RNN and LSTM models, which are commonly trained on variable-length sequences.

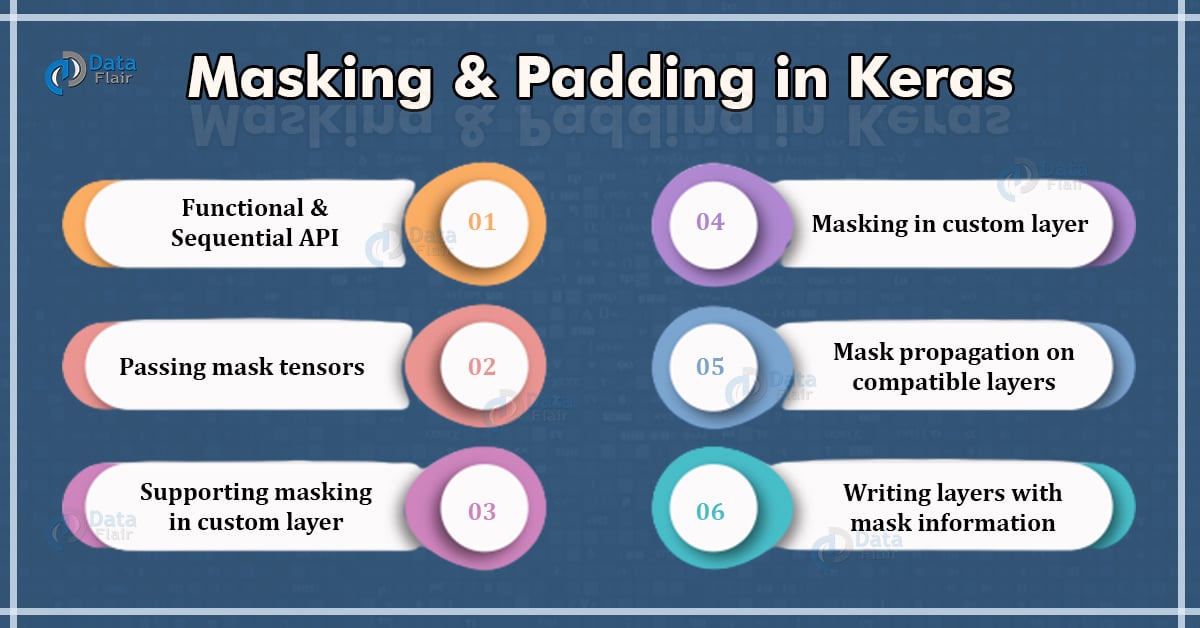

Keras Mask Propagation in Functional and Sequential API

We generate masks using an Embedding or Masking layer, and the mask is then propagated through the network. Keras fetches the mask that belongs to a layer's input and passes it on to every following layer that can use it. This mask propagation happens automatically in both the Functional API and the Sequential API.
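
One way to observe this propagation is to feed the same sequence to a small Sequential model with and without padding. The sketch below is an assumption-laden illustration: the layer sizes, the sample ids, and the use of post-padding are choices made for this example.

```python
import numpy as np
from keras import Input, Sequential
from keras.layers import Embedding, LSTM

model = Sequential([
    Input(shape=(None,), dtype="int32"),  # variable-length sequences of ids
    Embedding(input_dim=1000, output_dim=16, mask_zero=True),  # creates the mask
    LSTM(8),                                                   # consumes the mask
])

unpadded = np.array([[123, 600, 51]], dtype="int32")
padded = np.array([[123, 600, 51, 0, 0, 0]], dtype="int32")

out_unpadded = np.array(model(unpadded))
out_padded = np.array(model(padded))

print(out_padded.shape)  # (1, 8)
# If the mask reached the LSTM, the padded steps are skipped and both outputs agree.
print(np.allclose(out_unpadded, out_padded, atol=1e-5))
```

If mask_zero were left at its default of False, the LSTM would also process the trailing zeros, and the two outputs would generally differ.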

Passing Mask Tensors

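This section describes how to pass a mask to a layer directly. Layers that produce a mask, such as Embedding and Masking, expose a compute_mask() method that takes the input tensor and the previous mask (if any) and returns the new mask. To pass the mask along manually, we hand the output of this method to the mask argument when calling a layer that can consume it, such as an LSTM.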

import numpy as np
from keras.layers import Layer, Embedding, LSTM

class NewLayer(Layer):
    def __init__(self, **kwargs):
        super(NewLayer, self).__init__(**kwargs)
        self.embedding = Embedding(input_dim=5000, output_dim=16, mask_zero=True)
        self.lstm = LSTM(32)

    def call(self, inputs):
        x = self.embedding(inputs)
        # Compute the mask explicitly and hand it to the LSTM
        mask = self.embedding.compute_mask(inputs)
        output = self.lstm(x, mask=mask)
        return output

layer = NewLayer()
# A batch of 32 sequences, each holding 10 random integer ids in [0, 100)
m = np.random.random((32, 10)) * 100
m = m.astype("int32")
layer(m)

Supporting Masking in Custom Layer

This section describes how to write layers that generate a mask and layers that update the current mask. To support masking in a custom layer, we implement the compute_mask method in the layer class.

First, let us see how to generate a mask.

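import tensorflow as tf
from keras.layers import Layer

class my_layer(Layer):
    def __init__(self, input_dim, output_dim, mask_zero=False, **kwargs):
        super(my_layer, self).__init__(**kwargs)
        self.input_dim = input_dim
        self.output_dim = output_dim
        self.mask_zero = mask_zero

    def build(self, input_shape):
        self.embeddings = self.add_weight(
            shape=(self.input_dim, self.output_dim),
            initializer="random_normal",
            dtype="float32",
        )

    def call(self, inputs):
        return tf.nn.embedding_lookup(self.embeddings, inputs)

    def compute_mask(self, inputs, mask=None):
        if not self.mask_zero:
            return None
        # True wherever the input id is not the padding value 0
        return tf.not_equal(inputs, 0)

# mask_zero=True is needed here; with the default False, compute_mask returns None
embedding_layer = my_layer(15, 64, mask_zero=True)
# Sample batch of integer ids below input_dim; zeros are padding
input_ids = tf.constant([[3, 7, 1, 0, 0], [5, 2, 9, 4, 0]])
output = embedding_layer(input_ids)
mask = embedding_layer.compute_mask(input_ids)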

Here my_layer generates a mask from its input values. The code below shows how to write a layer that modifies the current mask.

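import tensorflow as tf
from keras.layers import Layer

class my_layer(Layer):
    def call(self, inputs):
        # Split the input into two halves along the time axis
        return tf.split(inputs, 2, axis=1)

    def compute_mask(self, inputs, mask=None):
        # The mask must be split the same way, so that each half keeps its own mask
        if mask is None:
            return None
        return tf.split(mask, 2, axis=1)

# Sample (batch, timesteps, features) tensor with an even number of timesteps
embeddings = tf.random.normal((2, 4, 8))
ret_one, ret_two = my_layer()(embeddings)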

Keras Masking in Custom Layer

This section describes how to generate or modify masks within your custom layer. For this purpose, we define the compute_mask method in our custom layer class. The compute_mask method creates a new mask from the input tensors and the current mask.

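import tensorflow as tf
from keras.layers import Layer, Embedding

class CustomLayer(Layer):
    def call(self, inputs):
        # Split the input into two halves along the time axis
        return tf.split(inputs, 2, axis=1)

    def compute_mask(self, inputs, mask=None):
        if mask is None:
            return None
        # Split the current mask the same way as the data
        return tf.split(mask, 2, axis=1)

# Sample input: an embedded batch that carries a mask (id 0 is padding)
embedded = Embedding(input_dim=10, output_dim=4, mask_zero=True)(tf.constant([[1, 2, 0, 0]]))
one, two = CustomLayer()(embedded)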

Mask Propagation on Compatible Layers

This section explains how to propagate the current input mask through a custom layer that does not change the time dimension. To do that, we set supports_masking = True in the constructor of the custom layer class. The current mask is then passed on unchanged to the next layer.

Example for mask propagation in custom layer:

import tensorflow as tf
from keras.layers import Layer

class NewLayer(Layer):
    def __init__(self, **kwargs):
        super(NewLayer, self).__init__(**kwargs)
        # Tell Keras that this layer can safely pass the incoming mask along
        self.supports_masking = True

    def call(self, inputs):
        return tf.nn.relu(inputs)
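
As a small check of this behaviour, the sketch below calls the inherited compute_mask method of such a layer by hand. The class name PassThroughLayer, the sample shapes, and the use of keras.activations.relu are assumptions made for this example.

```python
import numpy as np
from keras.activations import relu
from keras.layers import Layer


class PassThroughLayer(Layer):  # hypothetical name, mirrors the layer above
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.supports_masking = True

    def call(self, inputs):
        return relu(inputs)


layer = PassThroughLayer()
inputs = np.ones((1, 3, 4), dtype="float32")    # (batch, timesteps, features)
incoming_mask = np.array([[True, True, False]])  # last timestep is padding

outputs = layer(inputs)
# compute_mask is not overridden, so the inherited version hands the mask on as-is.
outgoing_mask = layer.compute_mask(inputs, incoming_mask)

print(outgoing_mask is incoming_mask)
```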

Writing Layers with Mask Information

Some custom layers need the mask itself in order to compute their output. Such layers accept a mask argument in their call method: when we add mask=None to the signature of call, Keras passes the mask associated with the inputs to the layer whenever one is available.

Example:

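import tensorflow as tf
from keras.layers import Layer

class CustomLayer(Layer):
    def call(self, inputs, mask=None):
        # Turn the boolean mask into a float tensor that broadcasts over the feature axis
        mask_initiation = tf.expand_dims(tf.cast(mask, "float32"), -1)
        # Zero out the exponentials of padded timesteps
        mask_exp = tf.exp(inputs) * mask_initiation
        # Normalize over the time axis using only the unmasked exponentials
        mask_sum = tf.reduce_sum(mask_exp, axis=1, keepdims=True)
        return mask_exp / mask_sum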
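
A common reason to read the mask inside call is a softmax over the time axis that skips padded steps. The NumPy sketch below walks through that arithmetic on a tiny hand-made batch; the scores and mask are invented for illustration, and it is not the Keras layer itself.

```python
import numpy as np

# One sequence with 4 timesteps and 1 feature; the last timestep is padding.
scores = np.array([[[1.0], [2.0], [3.0], [0.0]]])
mask = np.array([[True, True, True, False]])

# Shape (1, 4, 1), so the mask broadcasts over the feature axis.
float_mask = mask.astype("float32")[..., np.newaxis]

# Padded steps contribute nothing to the numerator or the denominator.
exp_scores = np.exp(scores) * float_mask
weights = exp_scores / exp_scores.sum(axis=1, keepdims=True)

print(weights[0, :, 0])     # roughly 0.090, 0.245, 0.665, 0.0
print(weights.sum(axis=1))  # sums to 1 over the unmasked steps
```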

Summary

In this article, we talked about masking and padding in Keras and their implementation. Padding converts input sequences to a constant size. Masking marks the values that were added during padding so that the model is not trained on them.

We perform padding using the keras.preprocessing.sequence.pad_sequences function in Keras. There are two ways to create a mask: with the Masking layer (keras.layers.Masking) or with the Embedding layer (keras.layers.Embedding). The article then covered mask propagation, masking in custom layers, and layers that use mask information.
