Keras Loss Functions – Types and Examples

In deep learning, training means searching for the parameters (weights) that best fit our data. We measure how well a set of parameters fits through losses and loss functions. Neural networks are trained with an optimizer, and we must choose a loss function when configuring (compiling) the model. Choosing the right loss function can be challenging, because different loss functions play slightly different roles in training neural networks. This article explains the role of Keras loss functions in training deep neural networks and describes the loss functions available in the Keras deep learning library.

Keras Loss and Keras Loss Functions

Generally, we train a deep neural network using stochastic gradient descent or one of its variants (such as Adam). Backpropagation computes the gradient of the loss with respect to each weight. The optimizer then uses these gradients to change the weights so that the error is lower at the next evaluation.

During optimization, we use a function to evaluate the current weights and try to minimize its value. This objective function is the loss function, and the score it computes is called the loss. In simple words, the loss is a quantity that the model computes and that training tries to minimize.

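The loss function plays a very important role. A lower loss means the network's predictions are closer to the targets, so an improvement in this score generally means a better network, as long as the model is not simply overfitting the training data.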
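To make this loop concrete, here is a minimal NumPy sketch of plain full-batch gradient descent. It is not Keras code. It fits a single weight w in the model y = w * x by repeatedly stepping against the gradient of the mean squared error. The toy data, learning rate and number of steps are arbitrary choices for illustration.

```python
import numpy as np

# Toy data drawn from y = 3x (illustrative values only)
x = np.array([1.0, 2.0, 3.0, 4.0])
y = 3.0 * x

def mse_loss(w):
    """Mean squared error of the predictions w * x against y."""
    return np.mean((w * x - y) ** 2)

def mse_grad(w):
    """Derivative of the MSE with respect to w: mean(2 * (w*x - y) * x)."""
    return np.mean(2.0 * (w * x - y) * x)

w = 0.0              # initial weight
learning_rate = 0.01
history = []         # loss recorded before each update
for step in range(200):
    history.append(mse_loss(w))
    w -= learning_rate * mse_grad(w)  # move against the gradient

print(f"learned w = {w:.4f}, final loss = {mse_loss(w):.2e}")
```

Each step lowers the loss, and w approaches 3, the value that generated the data. Keras optimizers follow the same idea but compute the gradients automatically through backpropagation.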

Available Loss Functions in Keras

1. Hinge Losses in Keras

Hinge losses are used for training classifiers, most notably maximum-margin classifiers such as support vector machines (SVMs).

Different types of hinge losses in Keras:

- Hinge

- Categorical Hinge

- Squared Hinge
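As a rough illustration, the NumPy sketch below re-implements two of these variants following the formulas in the Keras documentation. This is not Keras's own code, and the function names are our own:

- Squared hinge squares the hinge term max(1 - actual * predicted, 0).
- Categorical hinge compares the score of the true class with the highest score among the other classes.

```python
import numpy as np

def squared_hinge(y_true, y_pred):
    # labels in {-1, 1}; square the hinge term, then average over the last axis
    return np.mean(np.square(np.maximum(1.0 - y_true * y_pred, 0.0)), axis=-1)

def categorical_hinge(y_true, y_pred):
    # y_true is one-hot; pos = score of the true class, neg = largest score among other classes
    pos = np.sum(y_true * y_pred, axis=-1)
    neg = np.max((1.0 - y_true) * y_pred, axis=-1)
    return np.maximum(neg - pos + 1.0, 0.0)

print(squared_hinge(np.array([1.0, -1.0]), np.array([0.5, -2.0])))              # (0.5**2 + 0) / 2 = 0.125
print(categorical_hinge(np.array([0.0, 1.0, 0.0]), np.array([0.2, 0.5, 0.9])))  # about 0.9 - 0.5 + 1 = 1.4
```

The categorical hinge becomes zero once the true class outscores every other class by a margin of at least 1.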

2. Regression Loss functions in Keras

These losses are used when the model predicts a continuous value, for example when modeling the relationship between several independent variables and a dependent variable.

Different types of Regression Loss function in Keras:

- Mean Square Error

- Mean Absolute Error

- Cosine Similarity

- Huber Loss

- Mean Absolute Percentage Error

- Mean Squared Logarithmic Error

- Log Cosh
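For several of these regression losses, the NumPy sketch below re-implements the formulas given in the Keras documentation for Huber, LogCosh, MeanAbsolutePercentageError and MeanSquaredLogarithmicError. These are our own helper functions with illustrative data, not Keras source code. The delta and eps defaults are assumed to match Keras's documented defaults.

```python
import numpy as np

def huber(y_true, y_pred, delta=1.0):
    # quadratic for small errors, linear (less sensitive to outliers) beyond delta
    err = y_pred - y_true
    abs_err = np.abs(err)
    quadratic = 0.5 * np.square(err)
    linear = delta * (abs_err - 0.5 * delta)
    return np.mean(np.where(abs_err <= delta, quadratic, linear), axis=-1)

def log_cosh(y_true, y_pred):
    # log(cosh(x)) is about x**2 / 2 for small x and about |x| - log(2) for large x
    return np.mean(np.log(np.cosh(y_pred - y_true)), axis=-1)

def mean_absolute_percentage_error(y_true, y_pred, eps=1e-7):
    # eps guards against division by zero when a target is 0
    return 100.0 * np.mean(np.abs((y_true - y_pred) / np.maximum(np.abs(y_true), eps)), axis=-1)

def mean_squared_logarithmic_error(y_true, y_pred, eps=1e-7):
    # compares log(1 + value), i.e. the ratio (1 + y_pred) / (1 + y_true) rather than the raw difference
    first = np.log(np.maximum(y_pred, eps) + 1.0)
    second = np.log(np.maximum(y_true, eps) + 1.0)
    return np.mean(np.square(first - second), axis=-1)

y_true = np.array([1.0, 2.0, 4.0])
y_pred = np.array([1.5, 2.0, 1.0])
for fn in (huber, log_cosh, mean_absolute_percentage_error, mean_squared_logarithmic_error):
    print(fn.__name__, fn(y_true, y_pred))
```

Huber and log-cosh behave like MSE for small errors but grow only linearly for large ones. This is why they are often chosen when the data contains outliers.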

3. Binary and Multiclass Loss in Keras

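These loss functions are used when the model has to assign each input to one of two classes (binary classification) or to one of several classes (multiclass classification). Keras groups them as probabilistic losses. Poisson loss also appears in this group, but it is meant for count-valued targets rather than class labels.

Spam classification (spam or not spam) is an example of this type of problem.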

- Binary Cross Entropy

- Categorical Cross Entropy

- Poisson Loss

- Sparse Categorical Cross Entropy

- KLDivergence
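Categorical and sparse categorical cross-entropy differ only in the label format. The NumPy sketch below uses hypothetical helper functions, not Keras code. It computes both losses for the same predictions, once with integer labels and once with one-hot labels, and adds KL divergence and Poisson loss for comparison. For one-hot targets, KL divergence is essentially the same as categorical cross-entropy, because a one-hot distribution has zero entropy.

```python
import numpy as np

EPS = 1e-7  # clipping constant to avoid log(0)

def categorical_crossentropy(y_true_onehot, probs):
    # one-hot targets; assumes each row of probs sums to 1
    return -np.sum(y_true_onehot * np.log(np.clip(probs, EPS, 1.0 - EPS)), axis=-1)

def sparse_categorical_crossentropy(labels, probs):
    # integer targets: take -log of the probability assigned to the true class
    rows = np.arange(len(labels))
    return -np.log(np.clip(probs[rows, labels], EPS, 1.0 - EPS))

def kl_divergence(y_true, y_pred):
    y_true = np.clip(y_true, EPS, 1.0)
    y_pred = np.clip(y_pred, EPS, 1.0)
    return np.sum(y_true * np.log(y_true / y_pred), axis=-1)

def poisson(y_true, y_pred):
    # for count targets; y_pred is the predicted (positive) rate
    return np.mean(y_pred - y_true * np.log(y_pred + EPS), axis=-1)

probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])
labels = np.array([0, 2])    # integer labels
onehot = np.eye(3)[labels]   # the same labels, one-hot encoded

print(categorical_crossentropy(onehot, probs))         # -log([0.7, 0.6])
print(sparse_categorical_crossentropy(labels, probs))  # same values, integer labels
print(kl_divergence(onehot, probs))                    # nearly identical for one-hot targets
```

Choosing the sparse variant simply saves converting integer labels to one-hot vectors.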

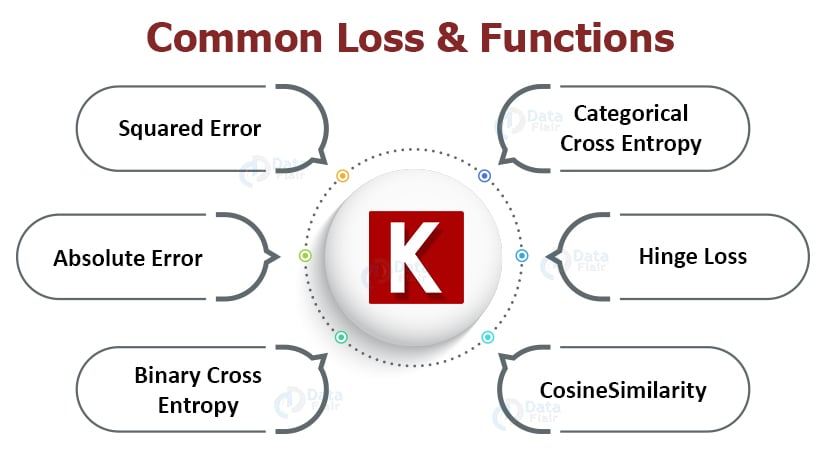

Common Loss and Loss Functions in Keras

1. Squared Error

In squared error loss, we calculate the square of the difference between the actual and predicted values for each example in the training set. The mean of these squared errors is the corresponding loss function, called Mean Squared Error (MSE). This loss is also known as L2 loss.

Available in Keras as:

keras.losses.MeanSquaredError()
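The NumPy sketch below uses our own helper, not Keras's implementation, and the numbers are made up for illustration. It follows the description in the Keras documentation:

- A mean over the last axis gives one loss value per sample.
- With its default reduction, keras.losses.MeanSquaredError() then averages these per-sample values over the batch.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    # square each difference, then average over the last axis (one value per sample)
    return np.mean(np.square(y_true - y_pred), axis=-1)

y_true = np.array([[1.0, 2.0], [3.0, 4.0]])
y_pred = np.array([[1.5, 2.0], [2.0, 4.0]])

per_sample = mean_squared_error(y_true, y_pred)  # [(0.25 + 0) / 2, (1 + 0) / 2] = [0.125, 0.5]
batch_loss = per_sample.mean()                   # (0.125 + 0.5) / 2 = 0.3125
print(per_sample, batch_loss)
```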

2. Absolute Error in Keras

In absolute error, we take the absolute value (modulus) of the difference between the actual and predicted values. The mean of these absolute errors is the mean absolute error (MAE), also called L1 loss.

Available as:

keras.losses.MeanAbsoluteError()
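A quick NumPy comparison, with illustrative data and our own helper functions, shows the practical difference between MAE and MSE. An outlier error counts linearly in MAE but quadratically in MSE.

```python
import numpy as np

def mean_absolute_error(y_true, y_pred):
    return np.mean(np.abs(y_true - y_pred), axis=-1)

def mean_squared_error(y_true, y_pred):
    return np.mean(np.square(y_true - y_pred), axis=-1)

y_true = np.array([1.0, 2.0, 3.0, 4.0])
y_pred_small = np.array([2.0, 3.0, 4.0, 5.0])    # every prediction off by 1
y_pred_outlier = np.array([1.0, 2.0, 3.0, 8.0])  # one prediction off by 4

print(mean_absolute_error(y_true, y_pred_small), mean_absolute_error(y_true, y_pred_outlier))  # 1.0 1.0
print(mean_squared_error(y_true, y_pred_small), mean_squared_error(y_true, y_pred_outlier))    # 1.0 4.0
```

Both prediction sets have the same MAE, but MSE punishes the single large error four times as much. MAE is therefore often preferred when the targets contain outliers.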

3. Binary Cross Entropy in Keras

It computes the loss for classification models where the target variable is binary, such as 0 and 1.

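keras.losses.BinaryCrossentropy(
    from_logits=False,           # set True if the model outputs raw logits instead of probabilities
    label_smoothing=0.0,         # values greater than 0 soften the 0/1 targets
    name="binary_crossentropy",
    # an optional reduction argument is also accepted; its default differs between Keras versions
)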
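The from_logits flag decides whether the loss applies the sigmoid itself. The NumPy sketch below is our own re-implementation with hypothetical function names, not Keras code. It computes binary cross-entropy in two ways:

- on probabilities;
- on raw logits, using a numerically stable form.

The two results agree once the logits are passed through a sigmoid.

```python
import numpy as np

EPS = 1e-7  # clipping constant to keep log() finite

def binary_crossentropy(y_true, y_prob):
    # y_prob are probabilities in (0, 1), e.g. the output of a sigmoid layer
    y_prob = np.clip(y_prob, EPS, 1.0 - EPS)
    return -np.mean(y_true * np.log(y_prob) + (1.0 - y_true) * np.log(1.0 - y_prob), axis=-1)

def binary_crossentropy_from_logits(y_true, logits):
    # stable rewrite of the same loss on raw scores: max(z, 0) - z*y + log(1 + exp(-|z|))
    return np.mean(np.maximum(logits, 0.0) - logits * y_true + np.log1p(np.exp(-np.abs(logits))), axis=-1)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

y_true = np.array([1.0, 0.0, 1.0])
logits = np.array([2.0, -1.0, -0.5])  # raw model outputs, no activation applied
print(binary_crossentropy(y_true, sigmoid(logits)))
print(binary_crossentropy_from_logits(y_true, logits))  # same value
```

Working on logits avoids taking the log of a sigmoid output that may have rounded to exactly 0 or 1. This is the usual motivation for from_logits=True.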

4. Categorical Cross Entropy in Keras

It is used for classification models with more than two target classes, where the targets are one-hot encoded. It is a generalization of binary cross-entropy. For integer class labels, SparseCategoricalCrossentropy is used instead.

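keras.losses.CategoricalCrossentropy(
    from_logits=False,                # set True if the model outputs raw logits instead of probabilities
    label_smoothing=0.0,              # values greater than 0 soften the one-hot targets
    name="categorical_crossentropy",
    # an optional reduction argument is also accepted; its default differs between Keras versions
)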
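To show what label_smoothing does, the NumPy sketch below (a hypothetical helper, not Keras source) applies the smoothing rule described in the Keras documentation. Each target becomes y * (1 - label_smoothing) + label_smoothing / num_classes, and the cross-entropy is then computed against these softened targets.

```python
import numpy as np

def categorical_crossentropy(y_true, probs, label_smoothing=0.0, eps=1e-7):
    # assumes one-hot y_true and rows of probs that sum to 1
    num_classes = y_true.shape[-1]
    y_true = y_true * (1.0 - label_smoothing) + label_smoothing / num_classes
    probs = np.clip(probs, eps, 1.0 - eps)
    return -np.sum(y_true * np.log(probs), axis=-1)

y_true = np.array([[0.0, 1.0, 0.0]])
probs = np.array([[0.1, 0.8, 0.1]])

plain = categorical_crossentropy(y_true, probs)          # -log(0.8)
smoothed = categorical_crossentropy(y_true, probs, 0.1)  # targets become about [0.033, 0.933, 0.033]
print(plain, smoothed)
```

With smoothing, the loss is no longer minimized by pushing the true-class probability all the way to 1. This discourages over-confident predictions.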

5. Hinge Loss in Keras

Here the loss is defined as:

loss = max(1 - actual * predicted, 0)

The actual values are expected to be -1 or 1. If binary labels (0 or 1) are provided, Keras converts them to -1 and 1.

This loss is available as:

keras.losses.Hinge(name="hinge")  # an optional reduction argument is also accepted
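The sketch below re-implements this formula in NumPy as a hypothetical helper, not Keras's source. It includes the 0/1-to-±1 label conversion, so both label encodings give the same loss.

```python
import numpy as np

def hinge(y_true, y_pred):
    y_true = np.asarray(y_true, dtype=float)
    # if every label is 0 or 1, map 0 -> -1 and 1 -> 1 (the conversion Keras documents)
    if np.all(np.isin(y_true, [0.0, 1.0])):
        y_true = 2.0 * y_true - 1.0
    # loss = max(1 - actual * predicted, 0), averaged over the last axis
    return np.mean(np.maximum(1.0 - y_true * y_pred, 0.0), axis=-1)

y_pred = np.array([0.8, -0.3, 2.0])
print(hinge([1, 0, 1], y_pred))   # about (0.2 + 0.7 + 0.0) / 3 = 0.3
print(hinge([1, -1, 1], y_pred))  # same value with -1/1 labels
```

The third prediction is on the correct side with a margin larger than 1, so it contributes nothing to the loss.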

6. CosineSimilarity in Keras

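It calculates the cosine similarity between the actual and predicted values.

The loss equation is:

loss = -sum(l2_norm(actual) * l2_norm(predicted))

Here l2_norm scales a vector to unit length, so the sum is the cosine of the angle between the two vectors. The loss ranges from -1 to 1, and values closer to -1 indicate greater similarity. Minimizing it pushes the predictions to point in the same direction as the targets.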

Available in Keras as:

keras.losses.CosineSimilarity(axis=-1, name="cosine_similarity")  # axis: the dimension along which similarity is computed
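A minimal NumPy sketch of this equation, written as our own helper function, shows the three characteristic values. Unlike Keras, it does not guard against zero-length vectors.

```python
import numpy as np

def cosine_similarity_loss(y_true, y_pred, axis=-1):
    # l2_norm(v) = v / ||v||; the sum of the products is the cosine of the angle
    true_n = y_true / np.linalg.norm(y_true, axis=axis, keepdims=True)
    pred_n = y_pred / np.linalg.norm(y_pred, axis=axis, keepdims=True)
    return -np.sum(true_n * pred_n, axis=axis)

a = np.array([1.0, 2.0, 3.0])
print(cosine_similarity_loss(a, 2 * a))  # about -1: same direction
print(cosine_similarity_loss(a, -a))     # about 1: opposite direction
print(cosine_similarity_loss(np.array([1.0, 0.0]), np.array([0.0, 1.0])))  # 0: orthogonal
```

Because both vectors are normalized, scaling the prediction (2 * a) does not change the loss. Only the direction matters.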

All of these losses are available in the keras.losses module. The code below shows an example of how to use a loss function in neural network code.

from keras import Sequential
from keras.layers import Dense

model = Sequential()
# 20 input features mapped to a 64-class softmax output;
# the activation belongs to the layer, not to compile()
model.add(Dense(64, kernel_initializer="uniform", input_shape=(20,), activation="softmax"))
model.compile(loss="categorical_crossentropy", optimizer="adam")

Custom Loss Function in Keras

Creating a custom loss function and adding it to a neural network is simple. Define a function that takes the actual values and the predictions and returns the loss, then pass this function as the loss argument of the .compile() method.

def custom_loss_function(actual, prediction):
    # element-wise squared error; Keras reduces these values to a scalar (by default, their mean)
    loss = (prediction - actual) * (prediction - actual)
    return loss

# model: a Keras model built as in the previous example
model.compile(loss=custom_loss_function, optimizer="adam")

Losses with Compile and Fit methods

The .compile() method configures a Keras model for training. To train it with .fit(), you need to give compile() a loss function, and you usually also choose an optimizer. If the optimizer is omitted, it defaults to "rmsprop".

We pass the loss argument to the .compile() method as a loss function object, for example:

from keras import Sequential
from keras.layers import Activation, Dense
from keras.losses import CategoricalCrossentropy

model = Sequential()
model.add(Dense(32, kernel_initializer="uniform", input_shape=(20,)))
model.add(Activation("softmax"))  # turn the 32 outputs into class probabilities
loss_function = CategoricalCrossentropy()
model.compile(loss=loss_function, optimizer="adam")

Using Inbuilt Loss Function in Keras

To use a built-in loss function, we can simply pass its string identifier to the loss parameter of the compile() method.

For example, to use binary_crossentropy:

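from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
model.add(Dense(64, input_shape=(1,), activation="relu"))
model.add(Dense(32, activation="relu"))
# binary cross-entropy expects a probability, so the model ends with one sigmoid unit
model.add(Dense(1, activation="sigmoid"))
model.compile(loss="binary_crossentropy", optimizer="adam")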

add_loss() API in Keras

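With this API, you can add extra loss terms, such as regularization losses, inside custom layers. Call self.add_loss() in the call() method of the custom layer class. Keras keeps track of these loss terms and adds them to the main loss during training.

import tensorflow as tf
from keras.layers import Layer

class Custom_layer(Layer):
    def __init__(self, rate=1e-2):
        super(Custom_layer, self).__init__()
        self.rate = rate

    def call(self, inputs):
        # L2 activity penalty, reduced to a scalar before it is added as a loss term
        self.add_loss(self.rate * tf.reduce_sum(tf.square(inputs)))
        return inputs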

Summary

This article is a guide to the keras.losses module of Keras. It explains what losses and loss functions are, describes the different types of loss functions, and shows how they are available in Keras. It discusses six common loss functions in detail: mean squared error, mean absolute error, binary cross-entropy, categorical cross-entropy, hinge, and cosine similarity. Finally, code samples show how to use built-in and custom loss functions and the add_loss() API.
