Wide and Deep Learning with TensorFlow in 10 Min


Today, in this article on wide and deep learning with TensorFlow, we are going to learn about the wide model and the deep learning model in TensorFlow, and discuss how wide and deep learning works.

Along the way, we will see how to combine these two models and how to set them up. We will also learn how to define the base feature columns, and how to train and evaluate the deep model, a neural network with embeddings.

So, let's get started with wide and deep learning in TensorFlow.

TensorFlow Wide and Deep Learning

Programmers keep trying to make machines learn the way humans do, adaptively rather than by following fixed instructions, and this field, machine learning, is on the rise. The human brain is a complex network of interconnected neurons that keep sending signals throughout a person's life.

Neural networks carry this analogy over to machines and can produce similar results. The goal is to make machines learn somewhat like humans: remember past lessons and connect them to new ones.

Wide and deep learning joins two of these abilities: memorization, handled by a wide linear model, and generalization, handled by a deep learning model. Do check out the previous tutorials on linear models for a better understanding of this tutorial.

How Does TensorFlow Wide and Deep Learning Work?

We will understand how wide and deep learning works with the help of an example: suppose you want to build an app that guesses which food you are craving and orders it for you.

The key metric here is the consumption rate: if the user consumed the recommended food, the label is 1; otherwise, it is 0.

Assigning a 0 or 1 label is simple, but the model also has to learn from these labels, because a query such as "fried chicken" can easily be matched with an item such as "chicken fried rice". Learning from user behavior helps the model tell such ambiguous cases apart.

TensorFlow Wide model

We associate the wide model with memorization. You train a linear model on a wide set of cross-product features, such as the query-item combinations described above, and the model learns how each of these feature combinations correlates with the assigned label.

For example, given a query, the model predicts the probability of consumption, P(consumption | query, item), for each item, and the app recommends the item with the highest probability, that is, the highest expected consumption rate.

For instance, the model can learn that AND(query="fried chicken", item="chicken and pasta") has a higher probability than AND(query="fried chicken", item="chicken fried rice"), even though both items share the same main ingredient, chicken.

By memorizing such feature combinations, the app can do a good job of capturing what its users prefer.
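To make the wide model concrete, here is a minimal, framework-free sketch of a linear model over cross-product features. The feature names, weights and bias are made-up values for illustration, not learned ones; in a real model they would be learned from the consumption labels. As in logistic regression, the linear score is passed through a sigmoid to get a probability.

```python
import math

def cross(query, item):
    """Cross-product transformation: one binary feature per (query, item) pair."""
    return f"AND(query={query}, item={item})"

# Hypothetical weights for crossed features (illustrative values, not learned).
WEIGHTS = {
    cross("fried chicken", "chicken and pasta"): 1.5,
    cross("fried chicken", "chicken fried rice"): -0.5,
}
BIAS = -1.0

def p_consumption(query, item):
    """P(consumption | query, item): linear score on crossed features plus a sigmoid."""
    # A crossed feature with no learned weight contributes 0 to the score.
    logit = BIAS + WEIGHTS.get(cross(query, item), 0.0)
    return 1.0 / (1.0 + math.exp(-logit))

def recommend(query, items):
    """Return the item with the highest predicted consumption probability."""
    return max(items, key=lambda item: p_consumption(query, item))

print(recommend("fried chicken", ["chicken fried rice", "chicken and pasta"]))
# → chicken and pasta
```

The model only "knows" the pairs it has weights for, which is exactly the memorization behavior described above.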

TensorFlow Deep Model

Now, suppose the users of the app get tired of being recommended the same food and want an element of surprise. This is where deep learning comes in, using an embedding vector for every query and item.

The app can then generalize by matching items to queries that lie close to each other in the embedding space. For example, people who order fried chicken often don't mind getting a bottle of coke as well.
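The generalization step can be sketched as a nearest-neighbor search in embedding space. The 3-dimensional vectors below are hand-picked, hypothetical embeddings (a real model learns them during training). Items whose vectors point in a similar direction to the query's vector get high scores, even if that query-item pair was never observed.

```python
import math

# Hypothetical embeddings; a trained model would learn these values.
QUERY_EMB = {"fried chicken": [0.9, 0.1, 0.3]}
ITEM_EMB = {
    "bottle of coke": [0.8, 0.2, 0.4],
    "green salad": [-0.7, 0.9, 0.1],
    "chicken fried rice": [0.6, -0.5, 0.2],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def closest_items(query, k=2):
    """Rank items by similarity to the query in the shared embedding space."""
    q = QUERY_EMB[query]
    ranked = sorted(ITEM_EMB, key=lambda item: cosine(q, ITEM_EMB[item]), reverse=True)
    return ranked[:k]

print(closest_items("fried chicken"))
# → ['bottle of coke', 'chicken fried rice']
```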

Combining Two Models

Generalizing too much, however, can produce irrelevant recommendations. To see why, let us dig deeper. Queries and items can be related to each other in two ways, described as follows.

The first kind is very specific: the user has a precise demand, such as an iced decaf with two cubes of sugar. A latte with no sugar may look close to it, yet it is not an acceptable alternative. There are countless exception rules like this, and a model that only generalizes tends to get them wrong.

In contrast, more general queries such as “Chinese” or “continental” can have a much broader set of related items. This is why the two models are combined: the wide model memorizes the specific exceptions, while the deep model generalizes to broader queries.
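In a wide and deep model, the two parts are combined by adding the wide model's logit (log odds) to the deep model's logit and applying a sigmoid. The sketch below uses invented logit values to show why this helps with the coffee example: the deep part rates the sugar-free latte as a good match because the two drinks are close in embedding space, while the wide part has memorized a negative weight for that exact query-item cross, which pulls the combined probability down. The numbers are hypothetical, and a real model would also add a bias term.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def wide_and_deep_probability(wide_logit, deep_logit):
    """Combine the two models by summing their logits, then apply a sigmoid."""
    return sigmoid(wide_logit + deep_logit)

# Hypothetical logits for query "iced decaf, two sugars" and item "latte, no sugar".
deep_logit = 2.0   # deep part: the drinks are close in embedding space
wide_logit = -4.0  # wide part: memorized exception rule for this exact pair

print(round(sigmoid(deep_logit), 3))                                # deep part alone
# → 0.881
print(round(wide_and_deep_probability(wide_logit, deep_logit), 3))  # combined
# → 0.119
```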

For more examples, browse the TensorFlow GitHub repository.

TensorFlow Wide and Deep Learning Tutorial

In the linear model tutorial, you trained a logistic regression model on the census dataset to predict a person's income bracket. As you might have already realized by now, TensorFlow is great for deep neural networks too.

This tutorial shows you how to use the tf.estimator API to jointly train a wide linear model and a deep neural network, which gives you the benefits of both memorization and generalization.

Wide and Deep Spectrum of Models

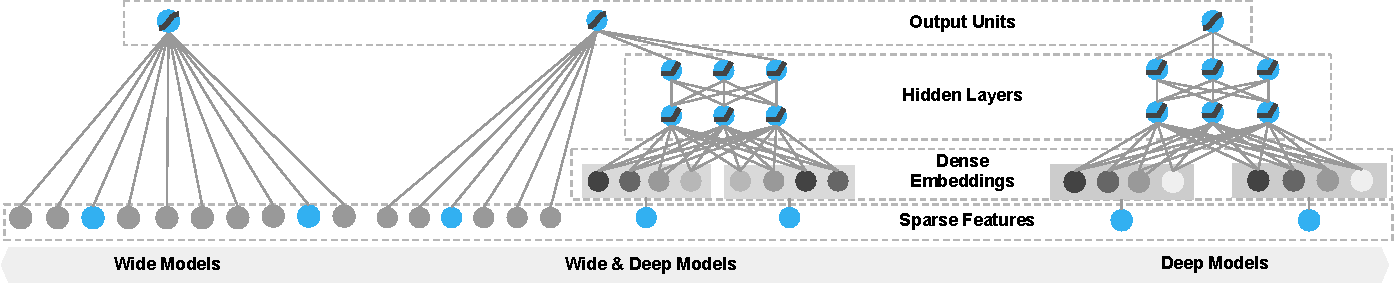

The figure above compares a wide linear model, a deep neural network with embeddings and hidden layers, and the combined wide and deep model. At a high level, configuring a wide and deep model with the tf.estimator API takes three steps:

- Wide model features: Choosing the base columns and crossed columns.

- Deep model features: Choosing the continuous columns, the embedding dimension for each categorical column, and the hidden layer sizes.

- Combining these into a single model with DNNLinearCombinedClassifier.

Setup for Wide and Deep Learning

Install TensorFlow on your system, then run the script below to download the census data:

```shell
$ python data_download.py
```

The tutorial code lives in wide_deep.py; run it to train your model:

```shell
$ python wide_deep.py
```

Define Base Feature Columns

To start, define the base feature columns. These are the basic building blocks used by both the wide and the deep model.

```python
import tensorflow as tf

# Continuous (numeric) base columns.
age = tf.feature_column.numeric_column('age')
education_num = tf.feature_column.numeric_column('education_num')
capital_gain = tf.feature_column.numeric_column('capital_gain')
capital_loss = tf.feature_column.numeric_column('capital_loss')
hours_per_week = tf.feature_column.numeric_column('hours_per_week')

# Categorical base columns with a known, small vocabulary.
education = tf.feature_column.categorical_column_with_vocabulary_list(
    'education', [
        'Bachelors', 'HS-grad', '11th', 'Masters', '9th', 'Some-college',
        'Assoc-acdm', 'Assoc-voc', '7th-8th', 'Doctorate', 'Prof-school',
        '5th-6th', '10th', '1st-4th', 'Preschool', '12th'])

marital_status = tf.feature_column.categorical_column_with_vocabulary_list(
    'marital_status', [
        'Married-civ-spouse', 'Divorced', 'Married-spouse-absent',
        'Never-married', 'Separated', 'Married-AF-spouse', 'Widowed'])

relationship = tf.feature_column.categorical_column_with_vocabulary_list(
    'relationship', [
        'Husband', 'Not-in-family', 'Wife', 'Own-child', 'Unmarried',
        'Other-relative'])

workclass = tf.feature_column.categorical_column_with_vocabulary_list(
    'workclass', [
        'Self-emp-not-inc', 'Private', 'State-gov', 'Federal-gov',
        'Local-gov', '?', 'Self-emp-inc', 'Without-pay', 'Never-worked'])

# Hashing: map occupation strings into 1000 buckets without listing the vocabulary.
occupation = tf.feature_column.categorical_column_with_hash_bucket(
    'occupation', hash_bucket_size=1000)

# Transformation: turn the continuous age into a categorical column of age ranges.
age_buckets = tf.feature_column.bucketized_column(
    age, boundaries=[18, 25, 30, 35, 40, 45, 50, 55, 60, 65])

# Wide model columns, passed to DNNLinearCombinedClassifier later on.
base_columns = [
    education, marital_status, relationship, workclass, occupation,
    age_buckets,
]

crossed_columns = [
    tf.feature_column.crossed_column(
        ['education', 'occupation'], hash_bucket_size=1000),
    tf.feature_column.crossed_column(
        [age_buckets, 'education', 'occupation'], hash_bucket_size=1000),
]
```
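To see what these column types do to a single value, here is a plain-Python sketch of the three transformations. It mirrors their semantics rather than TensorFlow's internals: bucket boundaries define half-open intervals that include the left boundary, vocabulary lookup returns -1 for unknown strings (the default when no out-of-vocabulary buckets are configured), and hashing maps any string into a fixed number of buckets. The MD5-based hash is an assumption for illustration only; TensorFlow uses its own fingerprint hash, so real bucket ids will differ.

```python
import bisect
import hashlib

AGE_BOUNDARIES = [18, 25, 30, 35, 40, 45, 50, 55, 60, 65]
WORKCLASS_VOCAB = ['Self-emp-not-inc', 'Private', 'State-gov', 'Federal-gov',
                   'Local-gov', '?', 'Self-emp-inc', 'Without-pay', 'Never-worked']

def bucketize(value, boundaries):
    """Bucket id for buckets (-inf, b0), [b0, b1), ..., [bn, +inf)."""
    return bisect.bisect_right(boundaries, value)

def vocab_lookup(value, vocab, default_value=-1):
    """Index in the vocabulary list, or default_value for unknown strings."""
    return vocab.index(value) if value in vocab else default_value

def hash_bucket(value, hash_bucket_size):
    """Map any string to one of hash_bucket_size ids (MD5 here, not TF's hash)."""
    digest = hashlib.md5(value.encode("utf-8")).hexdigest()
    return int(digest, 16) % hash_bucket_size

print(bucketize(17, AGE_BOUNDARIES), bucketize(18, AGE_BOUNDARIES), bucketize(70, AGE_BOUNDARIES))
# → 0 1 10
print(vocab_lookup('Private', WORKCLASS_VOCAB), vocab_lookup('Astronaut', WORKCLASS_VOCAB))
# → 1 -1
print(0 <= hash_bucket('Tech-support', 1000) < 1000)
# → True
```

Ten boundaries produce eleven age buckets, ids 0 through 10.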

Deep Model: Neural Network with Embeddings

As shown in the figure above, the deep model is a feed-forward neural network. Each sparse, high-dimensional categorical feature is first converted into a low-dimensional, dense, real-valued vector, referred to as an embedding vector.

These embedding vectors are concatenated with the continuous features and then fed into the hidden layers of the neural network. The embedding values are initialized randomly and trained, along with all the other model parameters, to minimize the loss function.
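The embedding step can be sketched without TensorFlow: a randomly initialized table with one row per category, a row lookup for the active id, and concatenation with the continuous features to form the input of the first hidden layer. An indicator (one-hot, for a single value) encoding of the same id is shown for comparison. The table size, initialization range, feature values and the occupation id are assumptions for illustration, and the gradient updates that training would apply to the table are not shown.

```python
import random

random.seed(0)

NUM_BUCKETS = 1000  # e.g. the occupation hash buckets
EMBED_DIM = 8

# One randomly initialized row per category; training would adjust these values.
embedding_table = [[random.uniform(-0.05, 0.05) for _ in range(EMBED_DIM)]
                   for _ in range(NUM_BUCKETS)]

def embed(category_id):
    """Dense, low-dimensional representation: a table row lookup."""
    return embedding_table[category_id]

def one_hot(category_id, size=NUM_BUCKETS):
    """Sparse, high-dimensional indicator representation."""
    vec = [0.0] * size
    vec[category_id] = 1.0
    return vec

# Example values for age, education_num, capital_gain, capital_loss, hours_per_week.
continuous = [39.0, 13.0, 2174.0, 0.0, 40.0]
occupation_id = 42  # hypothetical hashed occupation id

dnn_input = continuous + embed(occupation_id)
print(len(dnn_input), len(continuous + one_hot(occupation_id)))
# → 13 1005
```

The embedded input is 13 values wide, while the one-hot alternative would be 1005 values wide, which is why embeddings are preferred for high-cardinality columns.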

The embedding_column function configures an embedding for a categorical column, which is then concatenated with the continuous columns. You can also use indicator_column to create a multi-hot representation of a categorical column:

```python
deep_columns = [
    # Continuous columns are fed in as-is.
    age,
    education_num,
    capital_gain,
    capital_loss,
    hours_per_week,
    # Low-cardinality categorical columns as multi-hot (indicator) vectors.
    tf.feature_column.indicator_column(workclass),
    tf.feature_column.indicator_column(education),
    tf.feature_column.indicator_column(marital_status),
    tf.feature_column.indicator_column(relationship),
    # To show an example of embedding: 1000 hash buckets -> 8-dimensional vector.
    tf.feature_column.embedding_column(occupation, dimension=8),
]
```

The higher the dimension of the embedding, the more degrees of freedom the model has to learn representations of the features. For simplicity, the only embedded column here, occupation, uses a dimension of 8.
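The effect of the embedding dimension on model size is simple arithmetic. The sketch below counts the width of the deep model's input layer for the deep_columns list above (5 numeric columns, four indicator columns sized by their vocabulary lists, and the occupation embedding), plus the weights in the embedding table and in the first 100-unit hidden layer. It assumes a plain dense layer with one bias per unit and ignores TensorFlow implementation details.

```python
NUMERIC_COLUMNS = 5
VOCAB_SIZES = {"workclass": 9, "education": 16, "marital_status": 7, "relationship": 6}
OCCUPATION_BUCKETS = 1000
FIRST_HIDDEN_UNITS = 100

def input_width(embedding_dim):
    """Width of the concatenated input fed to the first hidden layer."""
    return NUMERIC_COLUMNS + sum(VOCAB_SIZES.values()) + embedding_dim

def parameter_counts(embedding_dim):
    """Trainable weights in the embedding table and in the first hidden layer."""
    embedding_params = OCCUPATION_BUCKETS * embedding_dim
    dense_params = input_width(embedding_dim) * FIRST_HIDDEN_UNITS + FIRST_HIDDEN_UNITS
    return embedding_params, dense_params

for dim in (8, 32):
    print(dim, input_width(dim), parameter_counts(dim))
# → 8 51 (8000, 5200)
# → 32 75 (32000, 7600)
```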

Deep models generalize better because their dense embeddings produce nonzero predictions for every pair of features, including pairs never seen during training. The downside is that they can over-generalize, whereas linear models with crossed features can memorize such specific cases with far fewer parameters.
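The contrast on a feature pair never seen in training fits in a few lines. In this sketch, with hypothetical weights and vectors, the wide model only has weights for crossed features that occurred in training, so an unseen pair scores exactly 0. The deep side is simplified to a dot product of the two embeddings (a stand-in for what the hidden layers compute, not the actual network), which is generally nonzero for any pair.

```python
# Crossed-feature weights exist only for pairs seen in training (hypothetical values).
wide_weights = {("Bachelors", "Tech-support"): 0.7}

# Dense embeddings for individual values (hypothetical values).
embeddings = {
    "Bachelors": [0.2, 0.5, -0.1],
    "Tech-support": [0.4, 0.1, 0.3],
    "Exec-managerial": [0.3, 0.6, 0.0],
}

def wide_score(education, occupation):
    """Linear model on a crossed feature: 0 for pairs it never memorized."""
    return wide_weights.get((education, occupation), 0.0)

def deep_score(education, occupation):
    """Simplified embedding interaction: dot product of the two dense vectors."""
    return sum(a * b for a, b in zip(embeddings[education], embeddings[occupation]))

unseen = ("Bachelors", "Exec-managerial")
print(wide_score(*unseen), round(deep_score(*unseen), 2))
# → 0.0 0.36
```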

Combining Wide and Deep Models into One

The wide and deep models are combined by summing their final output log odds (logits) and feeding the sum into a logistic loss function. All you have to do is create a DNNLinearCombinedClassifier:

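```python
# base_columns and crossed_columns feed the wide (linear) part;
# deep_columns feeds the deep part.
model = tf.estimator.DNNLinearCombinedClassifier(
    model_dir='/tmp/census_model',
    linear_feature_columns=base_columns + crossed_columns,
    dnn_feature_columns=deep_columns,
    dnn_hidden_units=[100, 50])  # two hidden layers with 100 and 50 units
```

Training and Evaluating the Model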

Before training, you need to read the census data, just as you did in the linear model tutorial. See data_download.py and the input_fn function in wide_deep.py for the details.
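The input_fn itself is not reproduced in this tutorial. As a rough, TensorFlow-free sketch of its job, the code below parses one line of the census CSV into a dictionary of features keyed by column name plus a binary label. The column order follows the UCI Adult data files; the parse_census_line helper and the sample record are illustrative assumptions, not code from wide_deep.py.

```python
import csv
import io

CSV_COLUMNS = [
    'age', 'workclass', 'fnlwgt', 'education', 'education_num',
    'marital_status', 'occupation', 'relationship', 'race', 'gender',
    'capital_gain', 'capital_loss', 'hours_per_week', 'native_country',
    'income_bracket']
NUMERIC = {'age', 'fnlwgt', 'education_num', 'capital_gain',
           'capital_loss', 'hours_per_week'}

def parse_census_line(line):
    """Hypothetical helper: split one record into (features, label), label 1 if income > 50K."""
    values = next(csv.reader(io.StringIO(line), skipinitialspace=True))
    row = dict(zip(CSV_COLUMNS, values))
    # Labels in the test file end with a period, e.g. '>50K.', so strip it.
    label = 1 if row.pop('income_bracket').rstrip('.') == '>50K' else 0
    features = {k: float(v) if k in NUMERIC else v for k, v in row.items()}
    return features, label

sample = ("39, State-gov, 77516, Bachelors, 13, Never-married, Adm-clerical, "
          "Not-in-family, White, Male, 2174, 0, 40, United-States, <=50K")
features, label = parse_census_line(sample)
print(features['education'], features['hours_per_week'], label)
# → Bachelors 40.0 0
```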

Now, it’s time to train and evaluate the model:

```python
# Train and evaluate the model every `FLAGS.epochs_per_eval` epochs.
# FLAGS holds the command-line options and input_fn reads the census CSV files;
# both come from wide_deep.py.
for n in range(FLAGS.train_epochs // FLAGS.epochs_per_eval):
    model.train(input_fn=lambda: input_fn(
        FLAGS.train_data, FLAGS.epochs_per_eval, True, FLAGS.batch_size))

    results = model.evaluate(input_fn=lambda: input_fn(
        FLAGS.test_data, 1, False, FLAGS.batch_size))

    # Display evaluation metrics
    print('Results at epoch', (n + 1) * FLAGS.epochs_per_eval)
    print('-' * 30)

    for key in sorted(results):
        print('%s: %s' % (key, results[key]))
```

With the model above, your accuracy should be around 85%.
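The training loop reads its settings from a FLAGS object that the snippet never defines, so running it on its own fails with "name 'FLAGS' is not defined". These settings are command-line options. A minimal sketch with argparse follows; the flag names match the ones the loop uses, but the default values and data paths are assumptions, so adjust them to your setup.

```python
import argparse

parser = argparse.ArgumentParser()
# Default values below are assumptions for illustration.
parser.add_argument('--train_epochs', type=int, default=40,
                    help='Total number of training epochs.')
parser.add_argument('--epochs_per_eval', type=int, default=2,
                    help='Training epochs between evaluations.')
parser.add_argument('--batch_size', type=int, default=40,
                    help='Number of examples per batch.')
parser.add_argument('--train_data', type=str, default='/tmp/census_data/adult.data',
                    help='Path to the training data.')
parser.add_argument('--test_data', type=str, default='/tmp/census_data/adult.test',
                    help='Path to the test data.')

# Parse an empty list so the example runs anywhere; in a script,
# call parser.parse_args() to read the real command line.
FLAGS = parser.parse_args([])

# Epoch numbers at which the loop prints evaluation results.
eval_epochs = [(n + 1) * FLAGS.epochs_per_eval
               for n in range(FLAGS.train_epochs // FLAGS.epochs_per_eval)]
print(len(eval_epochs), eval_epochs[:3], eval_epochs[-1])
# → 20 [2, 4, 6] 40
```

With these defaults, the loop runs 20 train-and-evaluate cycles of 2 epochs each.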

So, that was all about the TensorFlow wide and deep learning tutorial. We hope you liked our explanation and now understand both the wide and the deep side of TensorFlow learning.

Conclusion

Hence, in this TensorFlow tutorial, we saw an example of implementing wide and deep learning together on a small dataset. Wide and deep learning is a powerful technique that TensorFlow supports out of the box, and it can give great results even on large datasets with a very large number of features.

Moreover, we discussed how wide and deep learning works and looked at the wide and the deep model. We also covered the setup and the spectrum of these models, along with deep learning in TensorFlow using neural networks with embeddings. Next up is the tutorial on Word2Vec.
