TensorFlow Performance Optimization – Tips To Improve Performance


Today, in this TensorFlow Performance Optimization tutorial, we'll learn how to optimize the performance of our TensorFlow code. The article explains why optimization is needed and the various ways of doing it.

Moreover, we will look at how to tune TensorFlow on CPUs and on GPUs for optimal performance.

So, let’s begin with TensorFlow Performance Optimization.

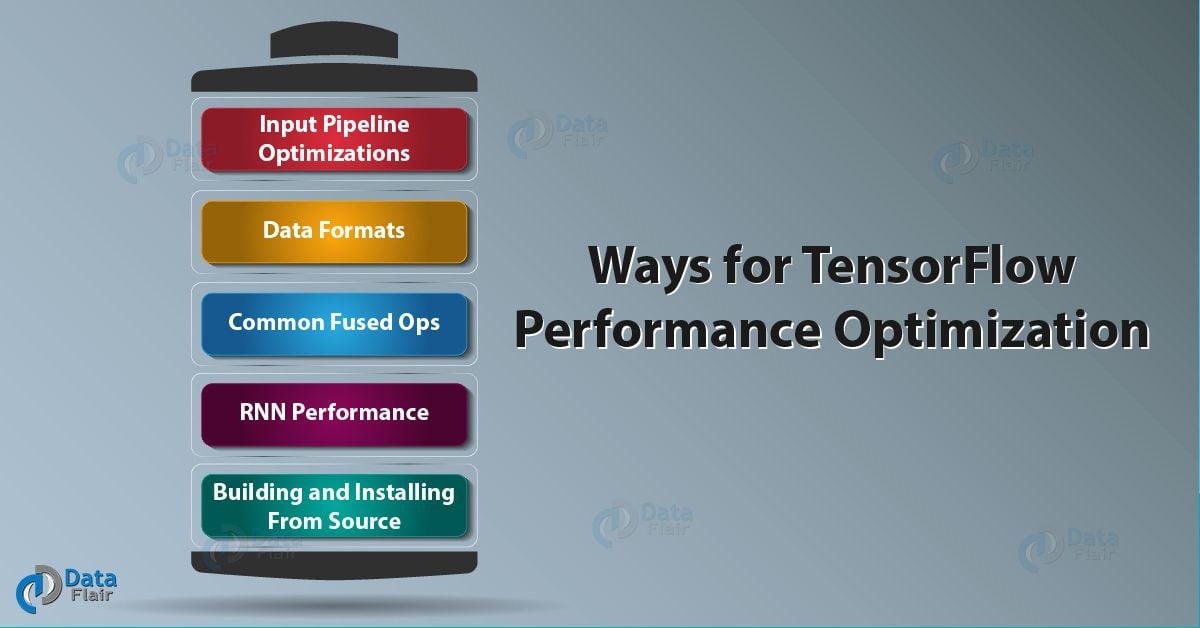

Ways for TensorFlow Performance Optimization

There are a variety of ways to get better performance out of your hardware and models. Some of the ways covered in this TensorFlow Performance Optimization tutorial are:

- Input Pipeline Optimizations

- Data Formats

- Common Fused Operations

- RNN Performance

- Building & Installing from Source

a. Input Pipeline

Models typically read their input from the local disk, and the data is preprocessed before it is fed to the network. Consider, for example, a simple PNG image.

The pipeline loads the image from disk, decodes it into a tensor, manipulates the tensor (for example, by cropping and padding it) and then groups the results into a batch.

This is the input pipeline. It becomes a bottleneck when the GPUs can process batches faster than the pipeline, which usually runs on the CPU, can prepare them.

Determining whether the input pipeline is the bottleneck can be complicated. One straightforward method is to reduce the model to a trivial one (for example, a single operation after the input pipeline) and measure examples per second for both the trivial and the full model. If the difference between the two is minimal, the input pipeline is likely the bottleneck.

Another approach is to monitor GPU usage: a GPU that stays well below full utilization suggests that the input pipeline cannot keep up.
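A minimal tf.data sketch of the pipeline described above, assuming TensorFlow 2.4 or later (for tf.data.AUTOTUNE). Because no image files are assumed to exist, it encodes random images as PNG bytes in memory as a stand-in for files on disk; with real files you would start from a list of paths and tf.io.read_file. The helper names (decode_and_preprocess, make_dataset, pipeline_throughput) are hypothetical. pipeline_throughput iterates the dataset with no model attached, which gives the "trivial model" examples/sec figure to compare against your full training loop.

```python
import time

import tensorflow as tf  # assumes TensorFlow 2.4+ (tf.data.AUTOTUNE)

# Stand-in for PNG files on disk: 64 random 200x260 RGB images encoded as PNG bytes.
# With real files you would map tf.io.read_file over a list of paths instead.
_images = tf.cast(tf.random.uniform([64, 200, 260, 3], maxval=256, dtype=tf.int32), tf.uint8)
PNG_BYTES = tf.stack([tf.io.encode_png(img) for img in _images])


def decode_and_preprocess(png):
    image = tf.io.decode_png(png, channels=3)                  # bytes -> uint8 (H, W, C) tensor
    image = tf.image.resize_with_crop_or_pad(image, 224, 224)  # here: pads height, crops width
    return tf.cast(image, tf.float32) / 255.0                  # scale pixels to [0, 1]


def make_dataset(batch_size=16):
    return (
        tf.data.Dataset.from_tensor_slices(PNG_BYTES)
        .map(decode_and_preprocess, num_parallel_calls=tf.data.AUTOTUNE)  # parallel preprocessing
        .batch(batch_size)
        .prefetch(tf.data.AUTOTUNE)  # prepare the next batch while the current one is consumed
    )


def pipeline_throughput(dataset):
    """Examples/sec for a 'trivial model' that only consumes each batch."""
    start = time.perf_counter()
    count = 0
    for batch in dataset:
        count += int(batch.shape[0])
    return count / (time.perf_counter() - start)
```

If the examples/sec of your full training step is close to pipeline_throughput(make_dataset()), the pipeline is the likely limit; parallel map calls and prefetching are the usual first remedies.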

b. Data Formats

Data format is another lever for TensorFlow performance optimization. As the name suggests, it refers to the structure (layout) of the input tensors passed to operations. A 4D image tensor has the following dimensions:

- N is the number of images in the batch.

- H is the number of pixels in the vertical dimension (height).

- W is the number of pixels in the horizontal dimension (width).

- C is the number of channels.

These data formats are named after the order of those dimensions and fall broadly into two:

- NCHW

- NHWC

NHWC is TensorFlow's default, whereas NCHW is the optimal format when training on NVIDIA GPUs with cuDNN. It is good practice to build models that work with both formats; this simplifies training on GPUs while keeping the model usable on CPUs.
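A sketch of supporting both layouts, assuming TensorFlow 2.x. Converting NHWC to NCHW is a transpose with permutation [0, 3, 1, 2], and [0, 2, 3, 1] converts back. Keras layers take the layout as a data_format parameter ('channels_last' for NHWC, 'channels_first' for NCHW), so one model definition can serve both. The helpers preferred_data_format and conv_block are hypothetical; the helper falls back to NHWC without a GPU because NCHW convolutions may not be supported on CPU in many TensorFlow builds.

```python
import tensorflow as tf  # assumes TensorFlow 2.x


def to_nchw(x):
    """NHWC -> NCHW: move the channel axis from position 3 to position 1."""
    return tf.transpose(x, perm=[0, 3, 1, 2])


def to_nhwc(x):
    """NCHW -> NHWC: move the channel axis from position 1 back to position 3."""
    return tf.transpose(x, perm=[0, 2, 3, 1])


def preferred_data_format():
    """Hypothetical helper: NCHW ('channels_first') when a GPU is visible,
    otherwise TensorFlow's default NHWC ('channels_last')."""
    if tf.config.list_physical_devices("GPU"):
        return "channels_first"
    return "channels_last"


def conv_block(filters, data_format):
    # The layout is a parameter, so the same definition serves both formats.
    channel_axis = 1 if data_format == "channels_first" else -1
    return tf.keras.Sequential([
        tf.keras.layers.Conv2D(filters, 3, padding="same", data_format=data_format),
        tf.keras.layers.BatchNormalization(axis=channel_axis),
        tf.keras.layers.ReLU(),
    ])
```

Inputs must match the chosen layout: when preferred_data_format() returns 'channels_first', pass NHWC images through to_nchw before feeding them to conv_block.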

c. Common Fused Ops

Fused operations combine several operations into a single kernel to improve performance; fused batch normalization, covered below, is a common example. XLA, when enabled, also creates fused operations automatically wherever possible.
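A minimal sketch of enabling XLA for one function, assuming TensorFlow 2.5 or later, where tf.function accepts jit_compile=True. The function name scale_shift_relu is hypothetical. The multiply, add and ReLU are element-wise ops, which XLA can typically fuse into one kernel instead of launching three and materializing the intermediate tensors.

```python
import tensorflow as tf  # assumes TensorFlow 2.5+ (jit_compile argument)


@tf.function(jit_compile=True)  # compile the whole function with XLA
def scale_shift_relu(x, scale, shift):
    # Element-wise multiply, add and ReLU: candidates for a single fused kernel.
    return tf.nn.relu(x * scale + shift)


x = tf.constant([-1.0, 0.5, 2.0])
y = scale_shift_relu(x, tf.constant(2.0), tf.constant(1.0))
```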

i. Fused Batch Normalization

Batch normalization is an expensive process that, for some models, takes up a large share of the total operation time. Fused batch norm combines the several operations needed for batch normalization into a single kernel, which can speed it up by 12-30%. The two ways to use fused batch norm in TensorFlow 1.x are:

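Using tf.layers.batch_normalization:

```python
import tensorflow as tf  # TensorFlow 1.x API

input_layer = tf.placeholder(tf.float32, [None, 3, 224, 224])  # example NCHW batch
# This function has no data_format argument; axis=1 selects the NCHW channel axis
bn = tf.layers.batch_normalization(input_layer, axis=1, fused=True)
```

Using tf.contrib.layers.batch_norm:

```python
# data_format tells batch_norm that the channels are on axis 1
bn = tf.contrib.layers.batch_norm(input_layer, fused=True, data_format='NCHW')
```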
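To see what "fused" means, the sketch below, assuming TensorFlow 2.x (the fused op is reached through tf.compat.v1), computes batch normalization in training mode twice: once as separate ops (per-channel moments, then normalize, scale and shift) and once with the single fused kernel. Both should give the same result up to floating-point error; only the number of kernels differs. The function names are hypothetical.

```python
import tensorflow as tf  # assumes TensorFlow 2.x (fused op via tf.compat.v1)

EPS = 1e-3


def unfused_batch_norm(x, scale, offset):
    # Separate ops: per-channel mean/variance over N, H, W, then normalize, scale, shift
    mean, variance = tf.nn.moments(x, axes=[0, 1, 2])
    return tf.nn.batch_normalization(x, mean, variance, offset, scale, EPS)


def fused_batch_norm(x, scale, offset):
    # One kernel computes the batch statistics and applies the normalization
    y, _, _ = tf.compat.v1.nn.fused_batch_norm(
        x, scale, offset, epsilon=EPS, data_format="NHWC", is_training=True)
    return y
```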

d. RNN Performance

There are many ways to specify a recurrent network in TensorFlow, each trading flexibility against performance. tf.nn.rnn_cell.BasicLSTMCell should be treated as a reference implementation, used only when no faster option fits. When you run a cell rather than a fully fused RNN layer, you can choose between tf.nn.static_rnn and tf.nn.dynamic_rnn.

An advantage of tf.nn.dynamic_rnn is that it can optionally swap memory from the GPU to the CPU, which helps when training on very long sequences; depending on the model and hardware, however, this can degrade performance. Multiple iterations of tf.nn.dynamic_rnn and the underlying tf.while_loop construct can also run in parallel.

On NVIDIA GPUs, tf.contrib.cudnn_rnn should always be preferred unless you need layer normalization, which it does not support. On CPUs, and on GPUs where tf.contrib.cudnn_rnn is not available, the fastest alternative is tf.contrib.rnn.LSTMBlockFusedCell.

Less common cell types are implemented in the graph the same way as tf.contrib.rnn.BasicLSTMCell, so they share its characteristics: degraded performance and high memory usage.
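The tf.contrib modules named above exist only in TensorFlow 1.x; tf.contrib was removed in TensorFlow 2.0. As a hedged TF 2.x counterpart, assuming TensorFlow 2.x Keras: tf.keras.layers.LSTM selects the fused cuDNN kernel automatically on an NVIDIA GPU as long as its cuDNN-compatible defaults are kept (tanh activation, sigmoid recurrent activation, no recurrent dropout, unroll=False, use_bias=True); otherwise, and on CPU, it runs a generic implementation. The helper name build_lstm is hypothetical.

```python
import tensorflow as tf  # assumes TensorFlow 2.x


def build_lstm(units=32):
    # Leave activation='tanh', recurrent_activation='sigmoid',
    # recurrent_dropout=0.0, unroll=False and use_bias=True at their defaults:
    # changing any of them rules out the fused cuDNN kernel on GPU.
    return tf.keras.layers.LSTM(units, return_sequences=True)


inputs = tf.random.normal([4, 10, 8])  # (batch, time steps, features)
outputs = build_lstm(32)(inputs)       # (batch, time steps, units)
```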

e. Building and Installing From Source

To run TensorFlow with all the optimizations available for your CPU, build and install it from source; this gives you the most optimized version of TensorFlow for that machine. If the build (host) platform differs from the target platform, cross-compile with the highest optimizations the target supports.

Optimizing for GPU

This section covers GPU optimization methods that are less commonly practised. Getting optimal performance out of the GPUs is usually the ultimate aim, and one way of doing this is data parallelism.

Data parallelism is obtained by making multiple copies of the model, referred to as towers. The following points explain how this works:

- Place one tower on each GPU. The towers work on different batches of data and update the variables (parameters) that are shared between them.

- The best way to update these shared parameters depends on the model and the hardware configuration. Benchmarks across various platforms and configurations suggest the following:

- Tesla K80: If the GPUs are on the same PCI Express card and can use NVIDIA's peer-to-peer (GPUDirect) functionality, placing the variables equally across the GPUs used for training gives the best results.

- Titan X (Maxwell and Pascal), M40, and P100: For models like ResNet and InceptionV3, placing the variables on the CPU gives optimal performance, but for models with a large number of variables, like AlexNet and VGG, using the GPUs with NCCL is a better choice.

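- In general, variable placement is managed with tf.device(). Instead of a fixed device name, tf.device() can be given a device function (in TensorFlow 1.x graph mode), which is called for each operation and returns the name of the device to place it on.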
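In TensorFlow 2.x, the tower pattern is packaged as tf.distribute.MirroredStrategy: one replica per visible GPU, variables mirrored on every replica, and gradients combined with an all-reduce. This is a swapped-in, higher-level technique rather than the manual variable placement described above, and it does not reproduce the model-specific placement choices from those benchmarks. The sketch assumes TensorFlow 2.x; make_strategy is a hypothetical helper that requests NCCL all-reduce only when more than one GPU is visible.

```python
import tensorflow as tf  # assumes TensorFlow 2.x


def make_strategy():
    gpus = tf.config.list_logical_devices("GPU")
    if len(gpus) > 1:
        # One replica ("tower") per GPU; gradients are summed with NCCL all-reduce.
        return tf.distribute.MirroredStrategy(
            cross_device_ops=tf.distribute.NcclAllReduce())
    # With one GPU, or CPU only, MirroredStrategy runs a single replica.
    return tf.distribute.MirroredStrategy()


strategy = make_strategy()
with strategy.scope():
    # Variables created in this scope are mirrored across all replicas.
    model = tf.keras.Sequential([tf.keras.Input(shape=(4,)), tf.keras.layers.Dense(10)])
    model.compile(optimizer="sgd", loss="mse")
```

Training then proceeds with the usual model.fit(...) call; each global batch is split across the replicas.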

Optimizing for CPU

Two thread-pool settings control CPU performance:

- intra_op_parallelism: nodes that can use multiple threads to parallelize their own execution schedule the individual pieces of work into this pool.

- inter_op_parallelism: all nodes that are ready to run are scheduled into this pool.

Both are set with tf.ConfigProto, which is passed to the config argument of tf.Session. If both are left unset or set to zero, they default to the number of logical CPU cores.

Another common optimization is to set the number of threads in both pools equal to the number of physical cores rather than logical cores.
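A sketch of both configuration styles, assuming TensorFlow 2.x (the 1.x-style ConfigProto is reached through tf.compat.v1). The standard library's os.cpu_count() reports logical cores, so the physical core count here rests on a loudly labeled assumption of 2 hardware threads per core; replace PHYSICAL_CORES (a hypothetical name) with the real figure for your CPU. The TF 2.x calls must run before TensorFlow executes any op.

```python
import os

import tensorflow as tf  # assumes TensorFlow 2.x

# Assumption: 2 hardware threads per physical core (2-way SMT / Hyper-Threading).
# os.cpu_count() reports logical cores, so halve it; adjust for your CPU.
PHYSICAL_CORES = max(1, (os.cpu_count() or 1) // 2)

# TF 1.x style: thread pools are configured through ConfigProto and the Session.
config = tf.compat.v1.ConfigProto(
    intra_op_parallelism_threads=PHYSICAL_CORES,  # pool for work inside a single op
    inter_op_parallelism_threads=PHYSICAL_CORES,  # pool for running ready ops concurrently
)
# sess = tf.compat.v1.Session(config=config)  # graph (1.x-style) code only

# TF 2.x style: must be called before TensorFlow executes any op.
tf.config.threading.set_intra_op_parallelism_threads(PHYSICAL_CORES)
tf.config.threading.set_inter_op_parallelism_threads(PHYSICAL_CORES)
```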

So, this was all about TensorFlow Performance Optimization. We hope you found this explanation of how to optimize TensorFlow performance useful.

Conclusion – TensorFlow Performance Optimization

Hence, in this TensorFlow Performance Optimization tutorial, we saw various ways of optimizing the performance of our TensorFlow computations; the most obvious one, upgrading the hardware, is often costly.

Moreover, we covered optimizing for GPUs and for CPUs. Techniques such as data parallelism and multi-threading push your current hardware to its limits, giving you the best results you can get out of it.

Next up is a comparison between the two mobile platforms of TensorFlow. Furthermore, if you have any doubts regarding TensorFlow Performance Optimization, feel free to ask in the comment section.

