Basics of Memory Management in Computer


Computer memory holds a device's data in binary form. Binary is the only language the machine understands, so the computer converts all data into binary before storing it in memory.

Any device that can hold data, for the short term or the long term, is a storage device. Memory management exists so that this storage is used in a way that optimizes overall system performance.

It coordinates and monitors memory by assigning blocks to the programs running on the device. It keeps a record of every memory location, whether it is allocated or free. It decides how much space each process needs and updates its records whenever memory is allocated or freed.

Memory management takes place at every level of the device: the hardware, the operating system, and the programs. At the hardware level, it concerns the components that physically store data, such as RAM chips, caches, and drives. At the operating system level, its main role is to assign memory blocks to each program.

At the application level, memory management ensures that each program's data structures have adequate space to run smoothly. It involves two tasks: allocation and recycling. Allocation assigns memory blocks to a program; recycling reclaims blocks whose data is no longer needed so that they can be reassigned.

Why Use Memory Management?

- It makes sure that every process gets an ideal amount of memory and at the right time.

- It tracks whether each block of memory is free or in use.

- Provides space to application routines

- Coordinates to avoid interference between applications

- Protects processes from each other

- Ensures efficient memory utilization by placing programs in memory carefully

Requirements of memory management

1. Relocation

In a multiprogramming system, many processes share main memory, and it is impossible to know in advance where a program will sit in memory when it executes. Swapping, which is covered in detail below, is the main reason.

Swapping lets the operating system keep more processes ready to run. But once a process has been swapped out of main memory, it is unlikely to return to the same location when it is swapped back in. The program must therefore be relocatable: its memory references must work wherever it is loaded.

2. Protection

When many processes share memory, one process may read or write addresses that belong to another, whether by accident or on purpose. This interference affects how programs function, so every process needs protection from it.

Because a program's location in memory cannot be predicted, its absolute addresses cannot be checked in advance. Instead, the processor must check the validity of each memory reference dynamically, during execution.

3. Sharing

Main memory can be shared by many processes, which lets them access the same copy of a program instead of each having its own.

Memory management must allow controlled access to shared areas of memory without compromising protection. The mechanisms that support relocation also support sharing.

4. Logical organization

Main memory is organised as a linear, one-dimensional address space made of bytes or words. Most programs, however, are organised into modules, some of which are read-only while others hold modifiable data. An effective operating system and computer hardware should support such modules.

They are ideal because –

- Each module can be written and compiled independently, and the system resolves the references between modules at run time.

- There is a different degree of protection for each module.

- Modules can be shared among processes, which matches the way users view a problem.

5. Physical organization

Computer memory is physically organised into main memory and secondary memory. Main memory is faster and costlier but volatile, while secondary memory is cheaper, long term, and non-volatile. The flow of information between the two is a major concern. If it is left to the programmer, they may have to use overlaying when the program does not fit in the available main memory.

With overlaying, several modules can be assigned to the same region of memory at different times. But this is time-consuming for the programmer, who also cannot know at coding time how much memory will be available.

Computer Memory Allocation

Main memory is usually divided into two partitions: low memory, where the operating system resides, and high memory, which holds user processes. The operating system has two mechanisms for memory allocation –

1. Single-partition allocation

This mechanism uses a relocation-register scheme to protect user processes from each other and from changes to operating system code and data. The relocation register holds the value of the smallest physical address, while the limit register holds the range of logical addresses. Every logical address must be less than the value in the limit register.
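The check that the relocation and limit registers perform can be sketched as follows. This is a minimal illustration, assuming the relocation register holds the physical start address of the process and the limit register holds the size of its logical address range; the names `relocate` and `ProtectionFault` and the sample values are hypothetical.

```python
class ProtectionFault(Exception):
    """Raised when a logical address falls outside the process's limit."""


def relocate(logical_address: int, relocation: int, limit: int) -> int:
    """Map a logical address to a physical one, or raise if it is out of range."""
    # Assumption: valid logical addresses are 0 .. limit - 1.
    if not 0 <= logical_address < limit:
        raise ProtectionFault(f"address {logical_address} outside limit {limit}")
    return relocation + logical_address


# Hypothetical process loaded at physical address 14000 with a 3000-byte range.
print(relocate(346, relocation=14000, limit=3000))  # 14346
```

An address such as 3000 would raise `ProtectionFault` here, which is how the scheme keeps a process inside its own partition.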

2. Multiple-partition allocation / Contiguous Memory Allocation

Here, main memory is divided into fixed-size partitions, and each partition holds exactly one process. When a partition becomes vacant, a process from the input queue is loaded into it.

Once that process terminates, the partition becomes vacant again. The free blocks of memory are called holes, and the system searches the set of holes for one that is large enough to hold the incoming process.

There are three strategies for choosing a hole –

- First Fit – Allocate the first hole that is large enough for the program.

- Best Fit – Allocate the smallest hole that is large enough for the program.

- Worst Fit – Allocate the largest hole available, provided it is large enough for the program.
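The three strategies can be compared with a short sketch. It assumes the holes are given as a simple list of sizes and returns the index of the chosen hole; the function names and the sample sizes are illustrative only.

```python
from typing import List, Optional


def first_fit(holes: List[int], size: int) -> Optional[int]:
    """Index of the first hole that is large enough, or None."""
    for i, hole in enumerate(holes):
        if hole >= size:
            return i
    return None


def best_fit(holes: List[int], size: int) -> Optional[int]:
    """Index of the smallest hole that is large enough, or None."""
    fitting = [i for i, hole in enumerate(holes) if hole >= size]
    return min(fitting, key=lambda i: holes[i]) if fitting else None


def worst_fit(holes: List[int], size: int) -> Optional[int]:
    """Index of the largest hole, provided it is large enough, or None."""
    fitting = [i for i, hole in enumerate(holes) if hole >= size]
    return max(fitting, key=lambda i: holes[i]) if fitting else None


holes = [100, 500, 200, 300, 600]  # hypothetical hole sizes in KB
print(first_fit(holes, 212))  # 1 (the 500 KB hole)
print(best_fit(holes, 212))   # 3 (the 300 KB hole)
print(worst_fit(holes, 212))  # 4 (the 600 KB hole)
```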

Process Address Space

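A process address space is the set of logical addresses that a process references in its code. With 32-bit addressing, for example, addresses can range from 0 to 0x7fffffff, which is 2^31 possible addresses, or 2 gigabytes. (A full 32-bit range is 2^32 addresses, or 4 gigabytes; the smaller figure applies when half of the range is reserved, for example for the operating system.) The operating system maps logical addresses to physical addresses when it allocates memory to the program. A program deals with three types of addresses –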

Symbolic addresses – the addresses used in source code. Variable names, constants, and instruction labels are the basic elements of the symbolic address space.

Relative addresses – the compiler converts symbolic addresses into relative addresses during compilation.

Physical addresses – the loader generates these when the program is loaded into main memory.

With compile-time and load-time address binding, virtual and physical addresses are the same; with execution-time binding, they differ. The set of all logical addresses that a program generates is its logical address space.

The Memory Management Unit (MMU) maps virtual addresses to physical ones at run time using certain mechanisms. In a simple scheme, the value in the base register is added to every address that a user process generates. The user program only ever deals with virtual addresses and never sees the physical ones.

Loading in Memory

Loading is the process of transferring a program from secondary memory into main memory. It is of two types: static loading and dynamic loading. The choice between them is made when the program is developed.

With static loading, the program is compiled and linked without leaving any external program or module dependency. The linker combines the object program with the other necessary object modules into an absolute program, which also includes logical addresses. At load time, the whole absolute program is loaded into memory before execution starts.

With dynamic loading, the compiler compiles the program and, for the modules to be loaded dynamically, includes only references; the rest of the work is done at execution time. Library routines are kept on disk in relocatable form and are loaded into memory only when the program needs them.

Linking

Linking combines the modules or functions of a program so that it can execute. It is also of two types: static linking and dynamic linking. Static linking combines all the modules a program needs into one executable file, so that there is no runtime dependency.

With dynamic linking, the module or library is not linked into the program itself; instead, a reference to the dynamic module is provided at compile and link time. Dynamic Link Libraries (DLLs) on Windows and Shared Objects on Unix are examples of dynamic libraries.

Swapping

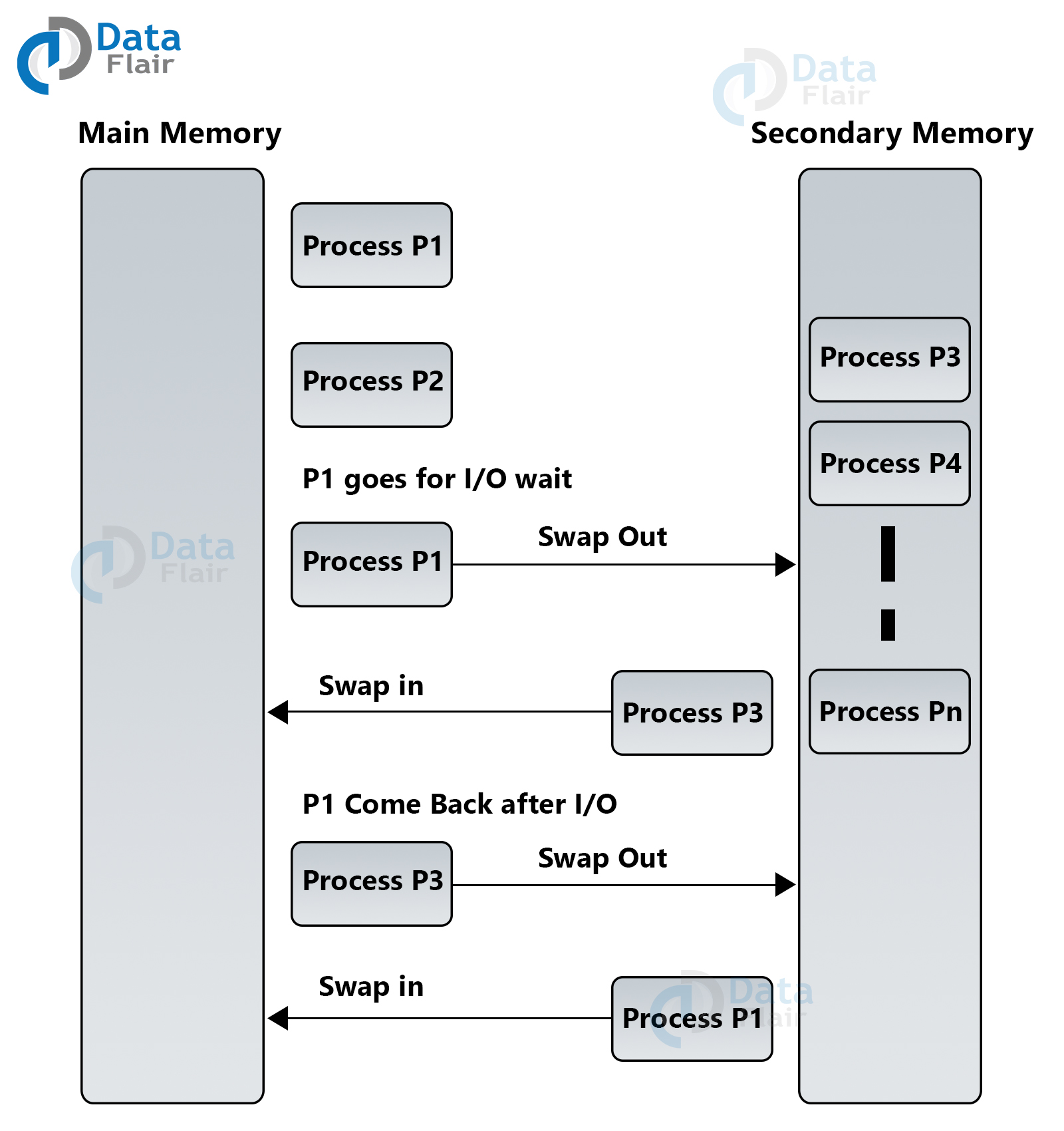

Swapping is the process of temporarily moving a process out of main memory to secondary storage, making that memory available to another process.

Later, the system swaps the original process back into main memory. Swapping affects device performance, but it helps to run multiple large processes in parallel. The total swapping time includes the time to move the whole process out to secondary storage and the time to copy it back into memory.

For example –

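Process size – 2048 KB

Transfer rate – 1 MB per second (1024 KB per second)

Transfer time – 2048 KB / 1024 KB per second

= 2 seconds

= 2000 milliseconds, one way

Swapping the process out and back in therefore takes about 4000 milliseconds, plus other overhead.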
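The same arithmetic can be written as a small helper. This is a sketch that ignores seek time and other overhead; the function name `swap_time_ms` is illustrative.

```python
def swap_time_ms(process_kb: float, rate_kb_per_s: float) -> float:
    """Time in milliseconds to transfer a process one way at the given rate."""
    return process_kb / rate_kb_per_s * 1000


one_way = swap_time_ms(2048, 1024)  # 1 MB per second taken as 1024 KB per second
round_trip = 2 * one_way            # swap out, then swap back in

print(one_way)     # 2000.0
print(round_trip)  # 4000.0
```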

Some of its benefits are –

- High degree of multiprogramming

- Supports dynamic relocation, leading to better functioning

- Efficient use of the memory

- Supports priority-based scheduling, which improves performance

- There is minimum wastage of CPU time

Fragmentation

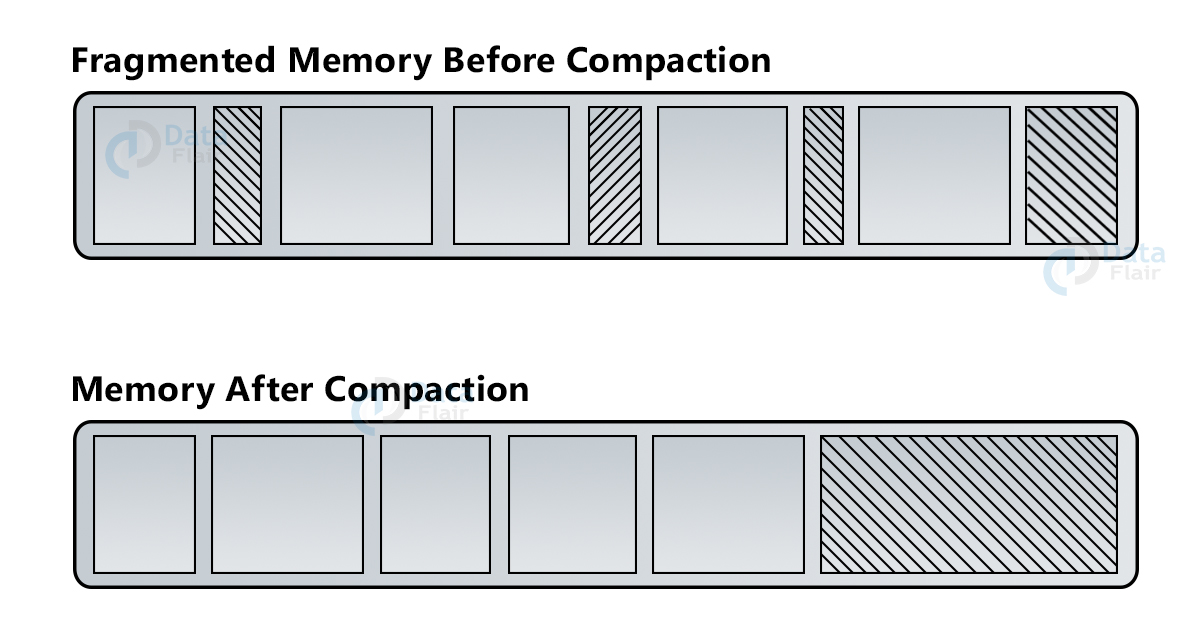

As processes are loaded into and removed from memory, the free space breaks up into small pieces. After a while, these pieces are too small to hold any process, so those memory blocks stay unused. This problem is called fragmentation.

It is of two types – External fragmentation and Internal fragmentation.

1. External fragmentation

The total free memory is enough to hold a process, but it is not contiguous, so it cannot be used. Compaction, which moves memory contents so that all free memory sits together in one block, can reduce this.
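A small model makes the effect of compaction visible. In this sketch, memory is assumed to be a list of (owner, size) blocks where an owner of `None` marks a hole; the layout and names are hypothetical.

```python
from typing import List, Optional, Tuple

Block = Tuple[Optional[str], int]  # (process name or None for a hole, size in KB)


def largest_hole(memory: List[Block]) -> int:
    """Size of the biggest contiguous free block."""
    return max((size for owner, size in memory if owner is None), default=0)


def compact(memory: List[Block]) -> List[Block]:
    """Slide allocated blocks together and merge all holes into one."""
    used = [(owner, size) for owner, size in memory if owner is not None]
    free = sum(size for owner, size in memory if owner is None)
    return used + ([(None, free)] if free else [])


memory = [("A", 100), (None, 50), ("B", 200), (None, 70), ("C", 80), (None, 40)]
print(largest_hole(memory))           # 70
print(largest_hole(compact(memory)))  # 160
```

Before compaction, a 150 KB process cannot be placed even though 160 KB is free in total; afterwards it fits.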

2. Internal fragmentation

The block assigned to a process is bigger than the process needs, so the extra space inside it is left unused. Assigning the process to the smallest partition that is still large enough reduces this problem.
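The wasted space can be computed directly. The sketch below assumes fixed partitions given as a list of sizes; the function names and the sample sizes are illustrative.

```python
from typing import List, Optional


def internal_fragmentation(partition_kb: int, process_kb: int) -> int:
    """Unused space left inside a partition once the process is placed in it."""
    if process_kb > partition_kb:
        raise ValueError("process does not fit in this partition")
    return partition_kb - process_kb


def smallest_adequate(partitions: List[int], process_kb: int) -> Optional[int]:
    """Size of the smallest partition that can still hold the process."""
    fitting = [p for p in partitions if p >= process_kb]
    return min(fitting) if fitting else None


partitions = [128, 256, 512]  # hypothetical partition sizes in KB
chosen = smallest_adequate(partitions, 200)
print(chosen)                               # 256
print(internal_fragmentation(chosen, 200))  # 56
print(internal_fragmentation(512, 200))     # 312
```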

Paging

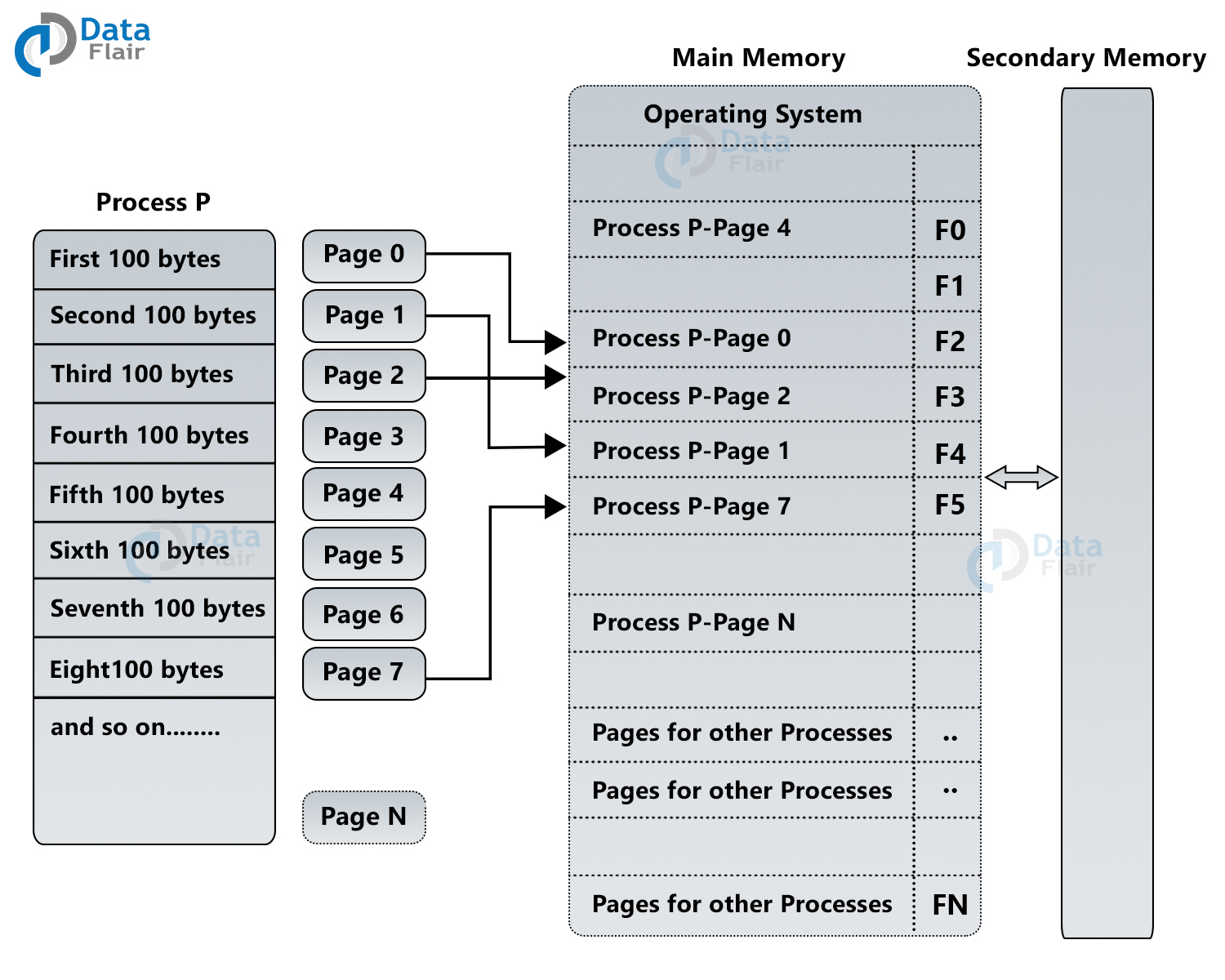

A computer can address more memory than is physically installed on the device. This extra memory is called virtual memory, and paging is a technique for managing it. Paging breaks the process address space into same-size blocks called pages. Similarly, main memory is divided into same-size blocks called frames, and a frame is the same size as a page.

A logical address, also called a page address, is represented by a page number and an offset, i.e. Logical Address = Page number + page offset. A physical address, also called a frame address, is represented by a frame number and an offset, i.e. Physical Address = Frame number + page offset.

A data structure called the page map table keeps track of the relation between the pages of a process and the frames in physical memory. When the system allocates a frame to a page, it translates the logical address into a physical address and records the mapping in the page table. During execution, the pages of a process are loaded into any vacant frames, and this continues until the process completes.

This method has advantages as well as drawbacks. It reduces external fragmentation but still suffers from internal fragmentation. It is simple to implement, and swapping is easy because pages and frames are the same size. However, the page table takes up extra memory, so paging may not suit systems with small RAM.
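The translation through the page map table can be sketched as follows. It assumes a page size of 1024 bytes and models the table as a dictionary from page number to frame number; both choices, and the names used, are illustrative.

```python
from typing import Dict

PAGE_SIZE = 1024  # assumed page and frame size in bytes


class PageFault(Exception):
    """Raised when the referenced page has no frame in memory."""


def page_translate(logical_address: int, page_table: Dict[int, int]) -> int:
    """Split the address into page number and offset, then swap page for frame."""
    page, offset = divmod(logical_address, PAGE_SIZE)
    if page not in page_table:
        raise PageFault(f"page {page} is not loaded")
    return page_table[page] * PAGE_SIZE + offset


page_table = {0: 5, 1: 2, 2: 7}  # hypothetical page -> frame mapping
print(page_translate(2100, page_table))  # 7220 (page 2, offset 52 -> frame 7)
```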

Segmentation

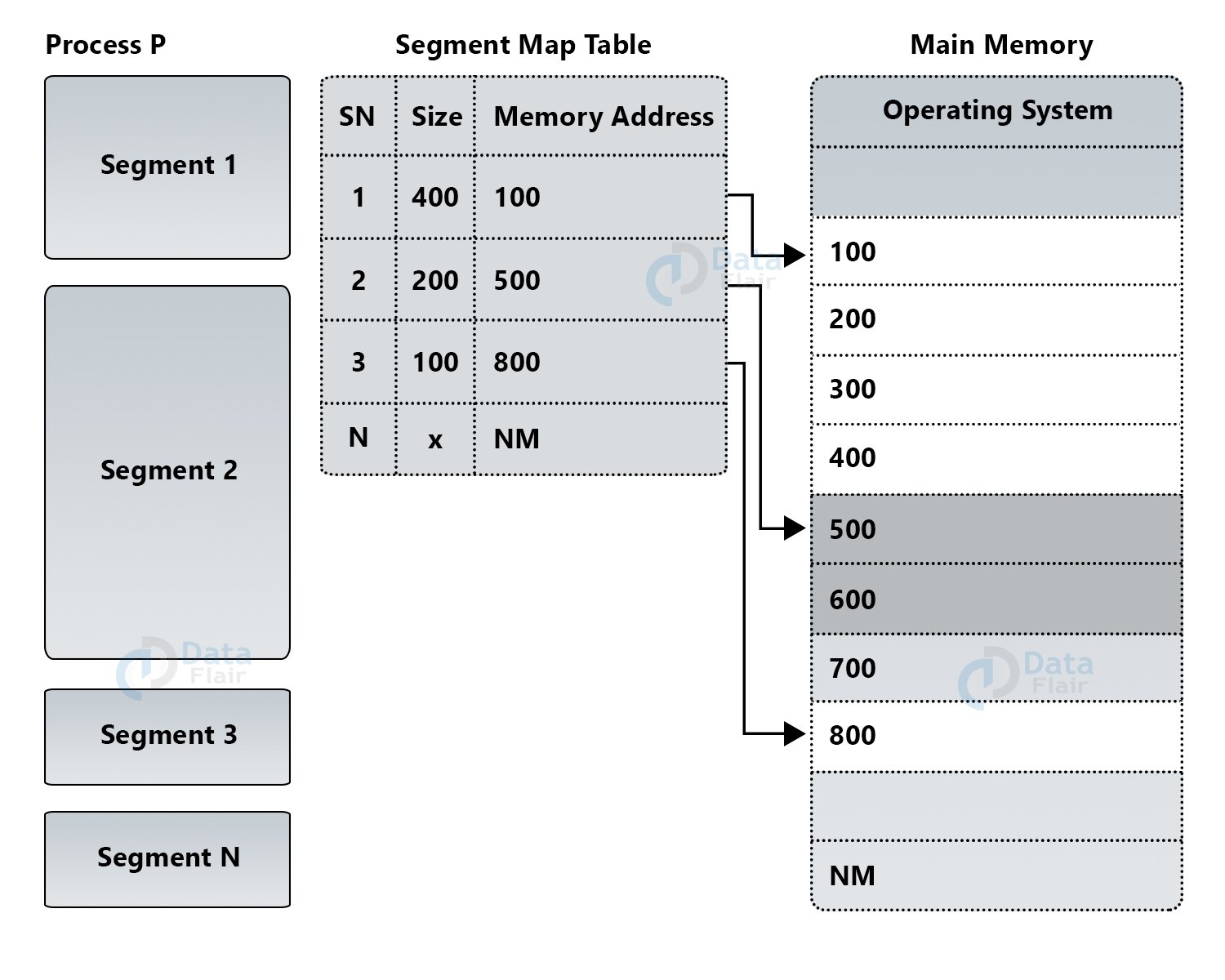

Segmentation divides each job into several segments of different sizes, one for each module that performs a related function. Each segment is a different logical address space of the program, and segments are loaded into non-contiguous memory at execution time, although each segment itself occupies a contiguous block. It is very similar to paging, except that segments are of variable length while pages are of fixed size.

A segment may hold the program's main function, utility functions, data structures, and so on, and a segment map table coordinates everything. For each segment, the table stores the starting address and the length of the segment. A reference to a memory location includes a value that identifies the segment and an offset.

Segmentation with paging combines the two: each segment is divided into pages, and each segment has its own page table.
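A lookup in the segment map table can be sketched like this. The table is assumed to map a segment number to a (starting address, length) pair; the sample values and names are hypothetical.

```python
from typing import Dict, Tuple


class SegmentationFault(Exception):
    """Raised when the offset lies beyond the end of the segment."""


def segment_translate(segment: int, offset: int,
                      table: Dict[int, Tuple[int, int]]) -> int:
    """Return base + offset after checking the offset against the length."""
    base, length = table[segment]
    if not 0 <= offset < length:
        raise SegmentationFault(f"offset {offset} outside segment {segment}")
    return base + offset


# Hypothetical segment map table: segment number -> (starting address, length)
segment_table = {0: (1400, 1000), 1: (6300, 400), 2: (4300, 400)}
print(segment_translate(2, 53, segment_table))  # 4353
```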

In this scheme, the logical address has 3 parts:

- Segment number (S)

- Page number (P)

- The offset number (D)
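Splitting such an address into its three parts is a matter of bit shifts and masks. The sketch below assumes a 32-bit logical address with 10 bits for the segment number, 10 for the page number, and 12 for the offset; real systems choose their own field widths.

```python
SEGMENT_BITS, PAGE_BITS, OFFSET_BITS = 10, 10, 12  # assumed field widths


def split_address(address: int) -> tuple:
    """Return (segment number S, page number P, offset D) of a logical address."""
    offset = address & ((1 << OFFSET_BITS) - 1)
    page = (address >> OFFSET_BITS) & ((1 << PAGE_BITS) - 1)
    segment = (address >> (OFFSET_BITS + PAGE_BITS)) & ((1 << SEGMENT_BITS) - 1)
    return segment, page, offset


# Build an address with S = 3, P = 5, D = 42 and take it apart again.
address = (3 << 22) | (5 << 12) | 42
print(split_address(address))  # (3, 5, 42)
```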

Memory Protection

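Memory protection means controlling memory access rights on the device so that a process cannot access memory that has not been allocated to it. This stops a bug in one process from affecting other processes or the operating system. An attempt to access memory that the process does not own results in a storage violation or segmentation fault, which usually kills the offending process.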

Memory life cycle

The basic life cycle of any process looks like this –

- Allocation of Memory

- Make use of the allocated memory

- Release the allocated memory when done

- Make space for a new process and the same repeats

Allocation in JavaScript is a bit different. When values are initialized, JavaScript automatically allocates memory for them. Using values then means reading and writing that memory through variables or object properties.

After this, the allocated memory is released once it is no longer needed. In a low-level language, the developer makes this call explicitly. In a high-level language, an automatic memory manager called the garbage collector does it.
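The allocate, use, and release cycle can be observed in any garbage-collected language. The sketch below uses Python rather than JavaScript, purely as an illustration; the `Buffer` class and `lifecycle` function are hypothetical, and `weakref` is used only to watch the object without keeping it alive.

```python
import gc
import weakref


class Buffer:
    """Stand-in for any object that occupies memory."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)


def lifecycle() -> bool:
    buf = Buffer(1024)           # allocation happens when the value is created
    buf.data[0] = 255            # use: read and write through the variable
    watcher = weakref.ref(buf)   # observe the object without owning it
    del buf                      # the last strong reference goes away
    gc.collect()                 # ask the garbage collector to run
    return watcher() is None     # True once the memory has been reclaimed


print(lifecycle())  # True
```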

Issues in Memory Management

Relocation and Address Translation

During address translation, the exact physical memory location of a program is not known in advance. The compiler assumes that the program will be loaded at memory location x, but at run time it may be loaded, or later relocated, elsewhere. Every address the compiler generated must then be adjusted or translated.

Protection

There must be measures to prevent multiple programs from interfering with each other. The compiler can generate distinct addresses and support relocation, but that alone is not enough: a faulty program or a hardware malfunction can still lead to interference.

Sharing and Evaluation

Unlike protection, sharing requires that several processes be able to access the same memory location. As for evaluation, the efficiency of a memory management scheme is judged by only a few criteria, such as wasted memory, access time, and complexity.

Conclusion

For most of us, memory management used to mean just the hard drive and internal memory of our devices. But as we learned, it is a lot more than that. The units that manage memory work in quite complex ways, and your basics must be clear to understand them.

The article covers all elements of basic memory management for competitive exams like the RRB exam, IBPS exam, SBI exam, etc. All these exams have computer aptitude in their syllabus, making it important for all applicants to study this topic.
