How Deep Learning Works with Different Neuron Layers

Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning are closely related fields that are often grouped under Data Science. The terms are short, but the ideas behind them have given technology a new direction. The first step to understanding how deep learning works is to grasp the differences between AI, ML, and Deep Learning.

AI is the simulation of human intelligence in computers so that they can perform certain tasks. Learn more about AI through the Complete AI Guide.

Machine Learning is a subset of AI that gives a machine the ability to learn and improve from past experience without being explicitly programmed for each task.

Deep Learning is a subset of Machine Learning that uses algorithms inspired by the human brain. It learns from experience, and its main aim is to simulate human-like decision making.

Let us now see how a deep learning neural network works.

How Does Deep Learning Work?

The inspiration for deep learning is the way the human brain filters information. Neurons in the brain pass signals to one another to perform actions. Similarly, artificial neurons connect in a neural network to perform tasks such as clustering, classification, or regression. In clustering, for example, the neural network groups unlabeled data according to the similarities in the data. That’s the idea behind a deep learning algorithm.

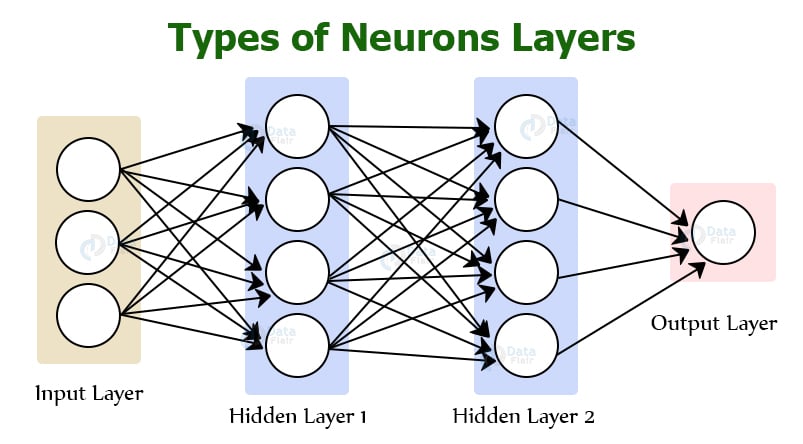

Neurons are grouped into three different types of layers:

a) Input layer

b) Hidden layer

c) Output layer

1. Input Layer

It receives the input data from the observation. This information is broken down into numbers, which the computer ultimately stores as bits of binary data. Variables need to be either standardized or normalized so that they fall within the same range.
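
As a minimal sketch of the two rescaling options, the snippet below normalizes a column of values into the range 0 to 1 and standardizes the same column to zero mean and unit standard deviation. The function names and the `ages` column are made up for illustration, and the use of the population standard deviation is an assumption; some tools use the sample standard deviation instead.

```python
import statistics

def min_max_normalize(values):
    """Rescale values linearly into the range 0 to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift and scale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

ages = [20, 30, 40, 50, 60]  # hypothetical input variable
print(min_max_normalize(ages))
print(standardize(ages))
```

Either option puts variables that were measured on very different scales, for example age and income, into comparable ranges before they reach the network.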

2. Hidden Layer

It performs mathematical computations on the input data. Deciding the number of hidden layers and the number of neurons in each layer is challenging. Hidden layers act as non-linear processing units for feature extraction and transformation. Each layer uses the output of the preceding layer as its input, so the network learns a hierarchy of concepts. At each level of the hierarchy, the network learns to transform its input into a more abstract and composite representation.

The “deep” in Deep Learning refers to having more than one hidden layer.

3. Output Layer

It produces the network's final result, such as a predicted class or a predicted value.

For example, consider an image of a face, where the input might be a matrix of pixels. The first layer might abstract the pixels and encode edges. The second layer might compose arrangements of edges. The next layer might encode a nose and eyes. The layer after that might recognize that the image contains a face, and so on.

Each connection between neurons has a weight, which is a numerical value. The weights determine how strongly one neuron influences the next, and they are what the neural network learns. During training, the weights between the neurons change. Initial weights are set randomly.
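
To make the role of weights concrete, here is a small sketch of what a single neuron computes before any activation is applied: each input is multiplied by the weight of its connection, and the results are added up. The input values, the range of the random initial weights, and the `bias` term are assumptions for illustration; the article itself does not discuss a bias.

```python
import random

random.seed(0)  # fixed seed so the sketch gives the same numbers on every run

inputs = [0.5, 0.1, 0.9]  # hypothetical, already-normalized input values

# One weight per incoming connection, set randomly before training starts.
weights = [random.uniform(-1.0, 1.0) for _ in inputs]
bias = 0.0  # assumption: an extra constant term, not covered in the article

def weighted_sum(inputs, weights, bias):
    """Multiply each input by its connection weight and add everything up."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

print(weights)
print(weighted_sum(inputs, weights, bias))
```

Training changes the numbers in `weights`; the structure of the calculation stays the same.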

The “activation function” determines the output of a neuron. It is a mathematical function applied to the weighted sum of the neuron's inputs, and each neuron has its own activation function. Its role is easiest to see mathematically: it is what makes the processing in the hidden layers non-linear. Some activation functions also normalize the output to a range such as 0 to 1 or -1 to 1. An activation function is also known as a transfer function.
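
Two common activation functions illustrate the output ranges mentioned here: sigmoid maps any number into the range 0 to 1, and tanh maps it into the range -1 to 1. The choice of these two functions is an assumption for illustration, since the article does not name specific ones.

```python
import math

def sigmoid(z):
    """Map any real number into the range 0 to 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, this equivalent form avoids overflow in math.exp.
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Map any real number into the range -1 to 1."""
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z))
```

In a network, `z` would be the weighted sum of a neuron's inputs, and the returned value is what the neuron passes on to the next layer.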

How to train the Neural Network

Training a neural network is a difficult task. It usually requires a large data set and a large amount of computational power. Iterating through the observations in the data set and comparing the network's outputs with the actual values produces a cost function. The cost function measures the difference between the actual value and the predicted value. The lower the cost function, the closer the output is to the desired output.

Two processes work together to minimize the cost function.
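
One widely used cost function is the mean squared error, sketched below. Choosing this particular cost function is an assumption for illustration, and the `actual`, `good`, and `poor` values are made up.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

actual = [1.0, 0.0, 1.0]  # hypothetical true values
good = [0.9, 0.1, 0.8]    # predictions close to the true values
poor = [0.2, 0.9, 0.3]    # predictions far from the true values

print(mean_squared_error(actual, good))  # small cost
print(mean_squared_error(actual, poor))  # larger cost
```

The predictions that sit closer to the actual values give the lower cost, which is exactly what training tries to achieve.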

1) Backpropagation

Backpropagation is the core of neural network training. It is the prime mechanism by which neural networks learn. Data enters the input layer and propagates through the network to give the output. After that, the cost function compares the output with the desired output. If the value of the cost function is high, the error information is sent back through the network, and the neural network learns to reduce the cost function by adjusting the weights. Proper adjustment of the weights lowers the error rate and makes the model more reliable.
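
The sketch below shows backpropagation on a deliberately tiny network: one input, one hidden neuron with a sigmoid activation, and one linear output. Several things here are assumptions for illustration and not part of the article: the mean squared error cost, the gradient descent update with a fixed `learning_rate`, the bias terms `b1` and `b2`, the three data points, and the fixed starting weights (used in place of random ones so the run is repeatable).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, p):
    """Forward pass: input -> hidden neuron (sigmoid) -> linear output."""
    h = sigmoid(p["w1"] * x + p["b1"])
    y = p["w2"] * h + p["b2"]
    return h, y

def cost(data, p):
    """Mean squared difference between predicted and actual values."""
    return sum((forward(x, p)[1] - t) ** 2 for x, t in data) / len(data)

def gradients(data, p):
    """Backward pass: apply the chain rule from the cost back to each weight."""
    g = {name: 0.0 for name in p}
    for x, t in data:
        h, y = forward(x, p)
        d_y = 2.0 * (y - t) / len(data)  # how the cost changes with the output
        g["w2"] += d_y * h
        g["b2"] += d_y
        d_h = d_y * p["w2"]              # error passed back to the hidden neuron
        d_z = d_h * h * (1.0 - h)        # through the derivative of sigmoid
        g["w1"] += d_z * x
        g["b1"] += d_z
    return g

def train(data, p, learning_rate, steps):
    for _ in range(steps):
        g = gradients(data, p)
        for name in p:
            p[name] -= learning_rate * g[name]  # move each weight against its gradient

data = [(0.0, 0.0), (0.5, 0.4), (1.0, 0.9)]  # hypothetical (input, actual value) pairs
params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}

before = cost(data, params)
train(data, params, learning_rate=0.1, steps=5000)
after = cost(data, params)
print(before, after)  # the cost after training is lower than before
```

Each entry in the dictionary returned by `gradients` says how much the corresponding weight contributed to the error, which is the information that flows backward through the network.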

2) Forward propagation

The information enters the input layer and moves forward through the network to produce the output value. This value is compared with the expected result. The next step is calculating the error and propagating that information backward. This makes it possible to train the neural network and update the weights. Because the algorithm is structured, all the weights can be adjusted simultaneously. It also shows how much each weight of the neural network is responsible for the error.
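
Forward propagation through several layers can be sketched as a loop in which each layer takes the previous layer's output as its input. The network shape (two inputs, two hidden neurons, one output), the weight values, the bias terms, the sigmoid activation, and the `expected` value are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs, then applies sigmoid."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the data through each layer in order, feeding outputs forward."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Each layer is (weights, biases); each row of weights belongs to one neuron.
layers = [
    ([[0.2, -0.4], [0.7, 0.1]], [0.0, 0.0]),  # hidden layer: 2 neurons, 2 inputs each
    ([[0.5, -0.6]], [0.0]),                   # output layer: 1 neuron, 2 inputs
]

prediction = forward([1.0, 0.5], layers)
expected = 1.0                     # hypothetical desired output
error = expected - prediction[0]   # this error is what gets propagated backward
print(prediction, error)
```

The sketch stops once the error is known; adjusting the weights from that error is the job of backpropagation.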

Summary

In conclusion, Deep Learning has given a boost to the technology industry. In this article, you have read how deep learning is inspired by the way the human brain works and learns from experience. You have learned about the different layers of artificial neural networks. Moreover, you have learned about weights, activation functions, and the training of neural networks. We hope this helps you understand how Deep Learning works.
