PySpark Pros and Cons | Characteristics of PySpark

In this PySpark tutorial, we will look at the pros and cons of PySpark and discuss its main characteristics. Since Spark could already be used from Scala, a natural question is why we need Python at all.

So, in this article, “PySpark Pros and Cons and its Characteristics”, we discuss some pros and cons of using Python rather than Scala. We also go through some characteristics of PySpark to understand it better.

Advantages of PySpark

Advantages of using Python rather than Scala with Spark:

i. Simple to write

For simple problems, it is very easy to write parallelized code.
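As a rough illustration of how little code a simple parallel job needs, here is a minimal sketch that sums the squares of a few numbers. The function name `sum_of_squares`, the `local[2]` master, and the application name are assumptions made for this example; `parallelize`, `map`, and `reduce` are the standard RDD calls.

```python
def sum_of_squares(sc, numbers):
    """Distribute `numbers` and add up their squares.

    `sc` is expected to be a pyspark.SparkContext.
    """
    rdd = sc.parallelize(numbers)          # split the data across workers
    squares = rdd.map(lambda x: x * x)     # runs in parallel, per element
    return squares.reduce(lambda a, b: a + b)  # combine partial results


def main():
    # Imported here so the helper above can be read without PySpark installed.
    from pyspark import SparkContext

    # "local[2]" (two local worker threads) is an assumption for this sketch.
    sc = SparkContext("local[2]", "sum-of-squares-sketch")
    try:
        print(sum_of_squares(sc, [1, 2, 3, 4, 5]))
    finally:
        sc.stop()
```

Calling `main()` on a machine with PySpark installed runs the job locally. The same `sum_of_squares` function would work unchanged against a context connected to a real cluster.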

ii. Framework handles errors

The framework takes care of synchronization points and errors for us.

iii. Algorithms

Many useful algorithms are already implemented in Spark.

iv. Libraries

Python has far more libraries available than Scala. Because the ecosystem is so large, most of the data science functionality from R has been ported to Python. The same is not true of Scala.

v. Good Local Tools

Scala lacks good visualization tools, whereas Python has several good tools that run locally.

vi. Learning Curve

Python has a gentler learning curve than Scala.

vii. Ease of use

Python is also easier to use than Scala.

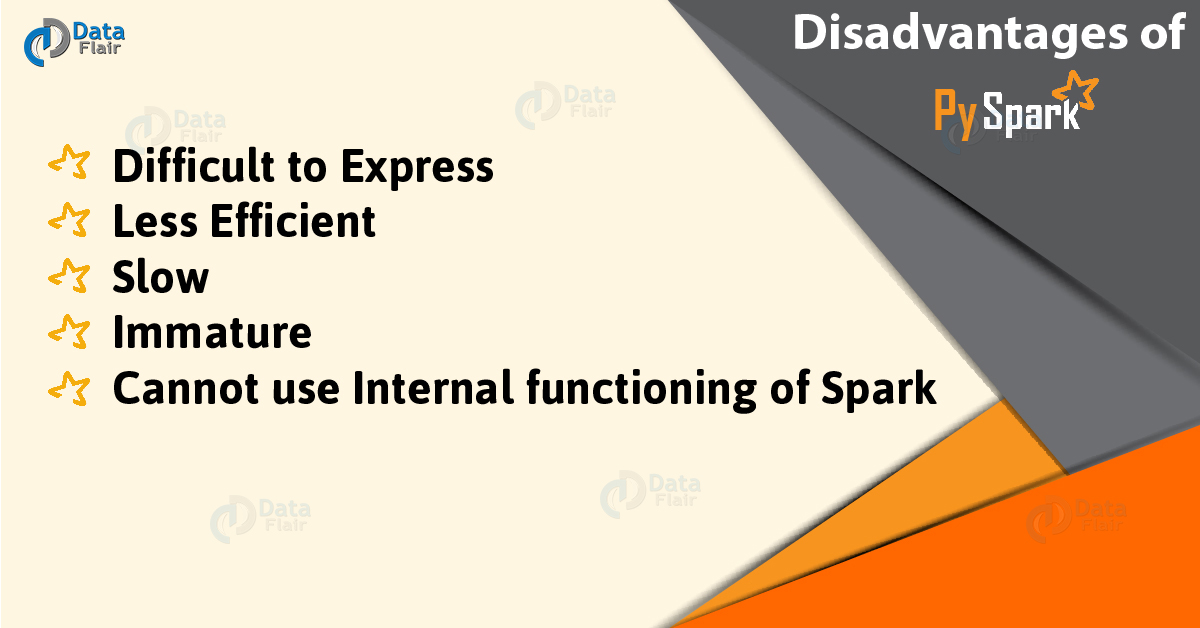

Disadvantages of PySpark

Disadvantages of using Python rather than Scala with Spark:

i. Difficult to express

Sometimes it is difficult to express a problem in MapReduce fashion.
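To see why, consider something as small as an average. A mean cannot be reduced directly, because the mean of two means is not the overall mean, so each value first has to be mapped to a `(sum, count)` pair. The sketch below uses plain Python's `map` and `functools.reduce` as a local stand-in for the distributed versions; the function name `mean_via_map_reduce` is made up for this example.

```python
from functools import reduce


def mean_via_map_reduce(values):
    """Compute a mean using only a map step and a reduce step."""
    # Map: turn every value into a (partial_sum, partial_count) pair.
    pairs = map(lambda v: (v, 1), values)
    # Reduce: merge two pairs. The merge is associative and commutative,
    # so partial results could be combined in any order.
    total, count = reduce(
        lambda a, b: (a[0] + b[0], a[1] + b[1]),
        pairs,
    )
    return total / count


print(mean_via_map_reduce([2.0, 4.0, 6.0, 8.0]))  # 5.0
```

Note that this sketch raises an error on an empty input, since there is nothing to reduce.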

ii. Less Efficient

PySpark is less efficient than some other programming models, such as MPI, when a job needs a lot of communication.

iii. Slow

Performance-wise, Python is slower than Scala for Spark jobs, by roughly 10x in some cases. That means that for heavy processing, Python will be slower than Scala.

iv. Immature

As of Spark 1.2, Python supports Spark Streaming, but that support is not yet as mature as Scala's. So, if we need streaming, we should use Scala.

v. Cannot change Spark's internals

Spark itself is written in Scala. So, if a project needs to change how Spark works internally, we have to work in Scala; we cannot use Python for that.

For example, in Scala we can create a new kind of RDD in Spark core, but we cannot do so from Python.

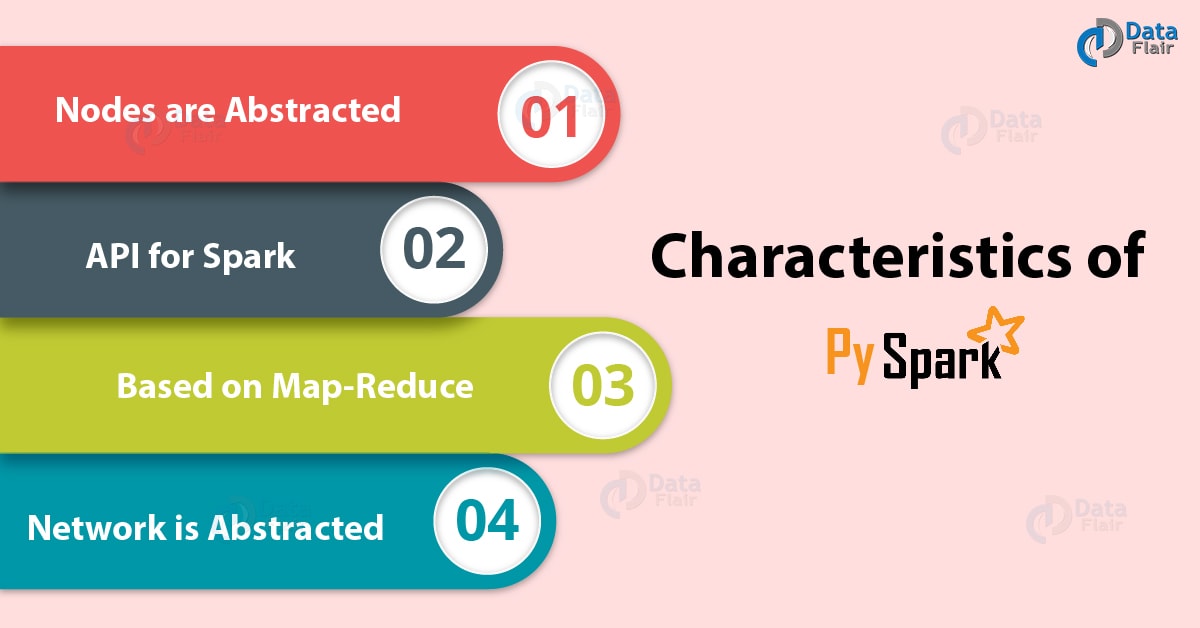

Characteristics of PySpark

Some characteristics of PySpark are listed below:

i. Nodes are abstracted

This means we cannot address an individual node.

ii. Network is abstracted

Only implicit communication is possible here.

iii. Based on Map-Reduce

Programmers supply a map function and a reduce function.
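A minimal sketch of that division of labor, using plain Python's `map` and `functools.reduce` so it runs without a cluster. The function names and sample lines are invented for this example. In PySpark, the same two functions could be handed to an RDD as `rdd.map(count_words).reduce(add)`.

```python
from functools import reduce


def count_words(line):
    """Map function: one line in, its word count out."""
    return len(line.split())


def add(a, b):
    """Reduce function: merge two partial counts into one."""
    return a + b


# Sample input, made up for this sketch.
lines = [
    "spark runs on a cluster",
    "pyspark is the python api",
    "map then reduce",
]

total_words = reduce(add, map(count_words, lines))
print(total_words)  # 13
```

The programmer writes only `count_words` and `add`. Deciding where each call runs and how partial results travel between nodes is left to the framework.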

iv. API for Spark

PySpark is one of the APIs for Spark: the Python API.

Conclusion: PySpark Pros and Cons

Hence, we have seen the pros and cons of using Python rather than Scala with Spark. This should make clear why PySpark is worth using even though Scala is available. We also discussed the characteristics of PySpark. If you still have questions about PySpark's pros and cons, ask in the comments.