Scaling in Microsoft Azure

The demand for infrastructure resources such as compute, storage, and networking is often dynamic. Website traffic varies with the time of day. During high-traffic events, such as the Super Bowl or the World Cup, demand for content-delivery services increases, and so does consumption of the underlying CPU, memory, disk, and network. In this article, we will look at the scalability features available in Azure.

What does Azure Scalability Mean?

Scalability is the ability of a system to handle increased load. Services covered by Azure Autoscale can scale automatically to match demand and accommodate the workload. They scale out to ensure capacity during workload peaks, and return to normal automatically when the peak subsides.

To achieve performance efficiency, consider how your application design scales, and use PaaS offerings that have built-in scaling capabilities.

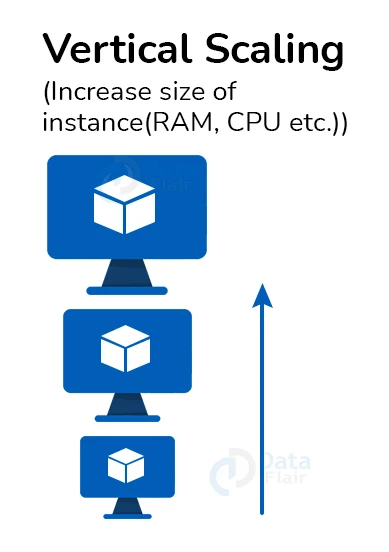

There are two main ways an application can scale: vertical scaling and horizontal scaling. Vertical scaling (scaling up) increases the capacity of a resource, for example by using a larger virtual machine (VM) size. Horizontal scaling (scaling out) adds new instances of a resource, such as VMs or database replicas.

Vertical scaling in Azure

Vertical scaling, also known as scale up and scale down, is the process of changing the size of virtual machines (VMs) in response to a workload.

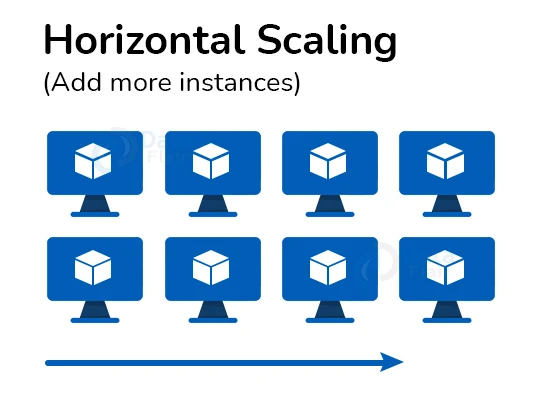

Compare this to horizontal scaling, also known as scale out and scale in, in which the number of virtual machines (VMs) is adjusted based on the workload.

Replacing an old VM with a new one is known as reprovisioning. When you want to increase or decrease the size of VMs in a virtual machine scale set, you can either resize the existing VMs while keeping their data, or deploy new VMs of the new size.

Advantages of Azure Vertical Scaling

Vertical scaling has the advantage of not requiring any changes to the application. However, at some point you can no longer scale up, because you have reached the largest available size. Any further scaling must then be horizontal.

Cons of Vertical Scaling in Azure

1. Vertical scaling can be more expensive in general. Why? Larger instance sizes cost more, and costs climb further if resources are not sized correctly, or not sized at all.

2. There’s also the matter of downtime. Even in a cloud environment, vertical scaling frequently necessitates taking an application down for a period of time. As a result, environments or applications that cannot afford downtime would benefit more from horizontal scalability, which involves supplying additional resources rather than expanding the capacity of existing ones.

Horizontal Scaling in Azure

Horizontal scaling is the process of adding more servers to suit your demands, usually by spreading workloads between them to minimize the number of requests that each server receives.

In cloud computing, horizontal scaling is adding more instances rather than shifting to a bigger instance size.

Advantages of Azure Horizontal Scaling

1. True cloud scale: Applications are built to run on hundreds or even thousands of nodes, allowing them to grow to levels that would be impossible on a single node.

2. Horizontal scalability is flexible: you can add instances as the load grows, or remove instances when the load decreases. Scaling out can be triggered automatically on a schedule or in response to changes in load.

3. Scaling out can be less expensive than scaling up: several small VMs may cost less to run than a single large VM.

4. By introducing redundancy, horizontal scaling can also improve resiliency. The application continues to execute even if an instance fails.

Cons of Horizontal Scaling in Azure

1. Your charges may be greater depending on the number of instances you require. Furthermore, without a load balancer, your machines are at risk of being overworked, which could result in an outage.

2. To keep those charges under control, if you can estimate when you'll need more compute capacity, you can take advantage of Reserved Instance (RI) discounts on public cloud platforms.

Following cloud cost management best practices will help you scale in and out in the cloud with ease.

Horizontal Scaling vs Vertical Scaling in Azure

The decision to scale horizontally or vertically in the cloud depends on your workload's needs. Remember that scalability remains a challenge, even in cloud systems. Every part of your application must scale, from CPU resources to database and storage resources. Overlooking any piece of the scaling puzzle can result in unplanned downtime or worse.

The best method might be a combination of vertical scaling to determine each instance’s optimal capacity and then horizontal scaling to manage demand spikes while maintaining uptime.
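This combined approach can be made concrete with a little arithmetic. The Python sketch below assumes you have already chosen a VM size (the vertical decision) and measured, through load testing, how many requests per second one instance of that size sustains. The function name `plan_capacity`, the 70% target utilization, and the two-instance floor are illustrative assumptions, not Azure settings.

```python
import math

def plan_capacity(peak_rps: float, rps_per_instance: float,
                  target_utilization: float = 0.7, min_instances: int = 2) -> int:
    """Return how many instances of the chosen size are needed for peak_rps.

    rps_per_instance: what one instance of the (vertically sized) VM sustains.
    target_utilization: headroom so no instance runs at 100%.
    """
    if rps_per_instance <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity and utilization must be positive")
    usable_per_instance = rps_per_instance * target_utilization
    needed = math.ceil(peak_rps / usable_per_instance)
    # Keep a floor of instances for redundancy, even at low load.
    return max(needed, min_instances)

# Hypothetical numbers: one instance sustains 500 requests/s.
print(plan_capacity(peak_rps=4000, rps_per_instance=500))  # prints 12
```

Picking a larger VM size raises `rps_per_instance` and lowers the instance count; balancing the two is the vertical-plus-horizontal trade-off described above.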

Azure Autoscaling

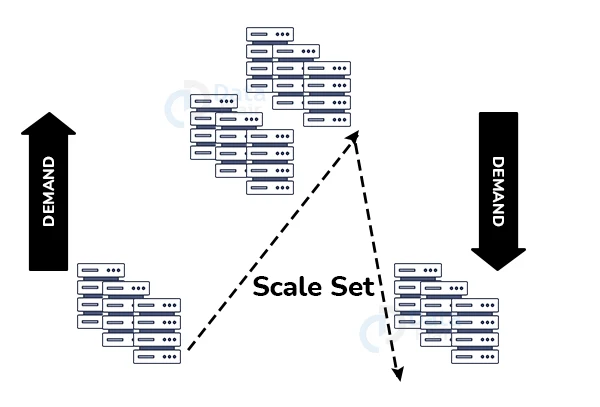

Autoscaling allows you to run the appropriate number of resources to handle your app’s load. It scales out resources to manage an increase in load, such as seasonal workloads.

For some business apps, autoscaling saves money by removing idle resources (called scaling in) during periods of lower load, such as nights and weekends.

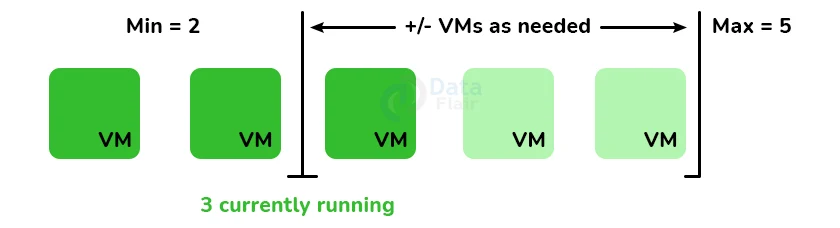

You can set a minimum and a maximum number of instances to run, and add or remove virtual machines (VMs) based on a set of criteria.

Azure AutoScale Settings

The autoscale engine evaluates the autoscale settings to determine whether it should scale out or in.

You can define custom autoscale rules to suit your needs. Common settings and rule types include:

- Minimum instances: The smallest number of instances you want running in your scale set, even when load is low.

- Maximum instances: The largest number of instances you want to deploy when scaling out. (A single VM scale set supports up to 1,000 instances.)

- Metric-based: It monitors application demand and adds or removes virtual machines as needed. When CPU utilization exceeds 50%, for example, add an instance to the scale set.

- Time-based: For example, add instances every Monday at 8 a.m. in a given time zone.
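To make these settings concrete, here is a sketch of two autoscale profiles written as Python dictionaries. The field names (`capacity`, `metricTrigger`, `scaleAction`, `recurrence`) are modeled on the JSON that Azure Monitor autoscale settings use, but the values are hypothetical, a real deployment needs additional fields, and nothing here calls Azure. The helpers `validate_capacity` and `clamp` are illustrative only.

```python
# Field names modeled on Azure Monitor autoscale settings JSON; values are
# hypothetical, and this dict is never sent to Azure.
autoscale_profile = {
    "name": "default-profile",
    "capacity": {"minimum": "2", "maximum": "10", "default": "2"},
    "rules": [
        {
            # Metric-based rule: add one VM when average CPU > 50% over 5 minutes.
            "metricTrigger": {
                "metricName": "Percentage CPU",
                "timeGrain": "PT1M",
                "statistic": "Average",
                "timeWindow": "PT5M",
                "timeAggregation": "Average",
                "operator": "GreaterThan",
                "threshold": 50,
            },
            "scaleAction": {
                "direction": "Increase",
                "type": "ChangeCount",
                "value": "1",
                "cooldown": "PT5M",
            },
        }
    ],
}

# Time-based profile: raise the instance floor every Monday at 8 a.m.
monday_profile = {
    "name": "monday-morning",
    "capacity": {"minimum": "5", "maximum": "10", "default": "5"},
    "rules": [],
    "recurrence": {
        "frequency": "Week",
        "schedule": {
            "timeZone": "Pacific Standard Time",
            "days": ["Monday"],
            "hours": [8],
            "minutes": [0],
        },
    },
}

SCALE_SET_LIMIT = 1000  # per-scale-set instance limit mentioned above

def validate_capacity(profile: dict) -> None:
    """Check that minimum <= default <= maximum <= the scale-set limit."""
    cap = profile["capacity"]
    low, default, high = int(cap["minimum"]), int(cap["default"]), int(cap["maximum"])
    if not 0 <= low <= default <= high <= SCALE_SET_LIMIT:
        raise ValueError(f"invalid capacity in profile {profile['name']!r}: {cap}")

def clamp(profile: dict, desired: int) -> int:
    """Keep a desired instance count within the profile's min/max bounds."""
    cap = profile["capacity"]
    return max(int(cap["minimum"]), min(desired, int(cap["maximum"])))
```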

Understanding Scale Targets in Azure

Scale actions (horizontal: changing the number of identical instances; vertical: switching to more or less powerful instances) can be fast, but they are not instantaneous; new capacity typically takes time to become available.

It's critical to understand how this delay affects the application under load, and whether the resulting performance degradation is acceptable.
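A toy simulation shows why the delay matters. This Python sketch assumes a hypothetical model in which each instance serves a fixed number of requests per minute, at most one scale-out is in flight at a time, and a new instance starts serving only after `provisioning_delay` minutes. None of these numbers come from Azure.

```python
def simulate_unserved(demand: list[int], per_instance: int,
                      start_instances: int, provisioning_delay: int) -> list[int]:
    """Return unserved requests per minute when each scale-out takes time.

    Toy model: every instance serves `per_instance` requests per minute; when
    load exceeds capacity and no scale-out is in flight, one more instance is
    requested and starts serving `provisioning_delay` minutes later.
    """
    instances = start_instances
    ready_at = []  # minutes at which requested instances start serving
    unserved = []
    for minute, load in enumerate(demand):
        # Bring online any instance whose provisioning has finished.
        instances += sum(1 for t in ready_at if t <= minute)
        ready_at = [t for t in ready_at if t > minute]
        capacity = instances * per_instance
        unserved.append(max(0, load - capacity))
        # React to overload, with only one scale-out in flight at a time.
        if load > capacity and not ready_at:
            ready_at.append(minute + provisioning_delay)
    return unserved

demand = [100, 300, 300, 300, 300]
print(sum(simulate_unserved(demand, 100, 2, provisioning_delay=2)))  # 200
print(sum(simulate_unserved(demand, 100, 2, provisioning_delay=4)))  # 400
```

Doubling the provisioning delay doubles the unserved requests in this example; comparing such totals with what users can tolerate is one way to judge whether the degradation is acceptable.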

Taking Advantage of Platform Autoscaling Features

- When possible, use built-in autoscaling features rather than custom or third-party solutions.

- When possible, use scheduled scaling rules so that resources are available before predictable demand arrives.

- To cope with unexpected fluctuations in demand, add reactive autoscaling to the rules where applicable.
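These recommendations can work together: a scheduled rule guarantees a baseline ahead of predictable peaks, and a reactive rule adds capacity above it. The Python sketch below is a hypothetical illustration of that combination. The weekday 08:00-18:00 window, the 70%/30% CPU thresholds, and the 2 to 10 instance range are assumptions chosen for the example, not Azure defaults.

```python
from datetime import datetime

def scheduled_floor(now: datetime) -> int:
    """Planned rule (hypothetical): 5 instances on weekdays 08:00-18:00, else 2."""
    if now.weekday() < 5 and 8 <= now.hour < 18:
        return 5
    return 2

def reactive_target(current: int, avg_cpu: float,
                    scale_out_above: float = 70.0,
                    scale_in_below: float = 30.0) -> int:
    """Reactive rule (hypothetical): one instance more or fewer based on CPU."""
    if avg_cpu > scale_out_above:
        return current + 1
    if avg_cpu < scale_in_below:
        return current - 1
    return current

def desired_instances(now: datetime, current: int, avg_cpu: float,
                      minimum: int = 2, maximum: int = 10) -> int:
    # The schedule sets a floor ahead of predictable peaks; the reactive rule
    # may raise the count above that floor but never pushes it below.
    target = max(scheduled_floor(now), reactive_target(current, avg_cpu))
    # Stay within the configured minimum and maximum instance counts.
    return max(minimum, min(target, maximum))
```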

Autoscaling CPU or Memory-Intensive Applications

CPU- or memory-intensive workloads may require scaling up to a larger VM SKU with more CPU or memory. Instances can be scaled back down once demand for CPU or memory decreases.

For example, you might have an application that processes photos, videos, or audio. Given its processing requirements, scaling up a server by adding CPU or memory so it can process large media files quickly may make sense.

While scaling out allows the system to handle more files at once, it does not speed up the processing of each individual file.
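A simple model illustrates the difference between throughput and per-file latency. The Python sketch below assumes each worker processes one file at a time and files are spread evenly. For the scale-up case it also assumes that doubling CPU halves the time per file, which holds only if the processing actually parallelizes across cores. The function `batch_stats` is hypothetical.

```python
def batch_stats(files: int, seconds_per_file: int, workers: int) -> tuple[int, int]:
    """Return (seconds per file, seconds for the whole batch).

    Toy model: each worker handles one file at a time and files are spread
    evenly, so the batch takes ceil(files / workers) rounds.
    """
    rounds = -(-files // workers)  # ceiling division without floats
    return seconds_per_file, rounds * seconds_per_file

print(batch_stats(100, 60, 4))  # (60, 1500): baseline
print(batch_stats(100, 60, 8))  # (60, 780): scale out, per-file time unchanged
print(batch_stats(100, 30, 4))  # (30, 750): scale up, assuming 2x CPU halves per-file time
```

Doubling the workers cuts the batch time, but each file still takes 60 seconds; only the scale-up case makes an individual file finish sooner.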

Autoscaling with Azure Compute Services

Autoscaling works by collecting resource metrics (CPU and memory consumption) and application metrics (requests queued and requests per second).

Rules can then be defined to add or remove instances, depending on how each rule evaluates against those metrics.
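When several rules are defined, something must decide how their results combine. The Python sketch below uses an asymmetric policy: scale out if any rule is over its threshold, but scale in only if every rule agrees that load is low, so one quiet metric cannot remove capacity another metric still needs. As far as we know this mirrors how Azure autoscale treats multiple rules, but the `Rule` class, metric names, and thresholds are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Rule:
    metric: str            # hypothetical metric name, e.g. "cpu_percent"
    scale_out_above: float
    scale_in_below: float

def decide(rules: list[Rule], metrics: dict[str, float]) -> int:
    """Return +1 (scale out), -1 (scale in) or 0 (no change).

    Scale out if ANY rule is above its scale-out threshold; scale in only
    if ALL rules are below their scale-in thresholds.
    """
    if any(metrics[r.metric] > r.scale_out_above for r in rules):
        return 1
    if rules and all(metrics[r.metric] < r.scale_in_below for r in rules):
        return -1
    return 0

rules = [Rule("cpu_percent", 70, 30), Rule("queue_length", 100, 10)]
print(decide(rules, {"cpu_percent": 20, "queue_length": 500}))  # 1: queue is backing up
```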

In an App Service plan, you can scale up or down (by changing the pricing tier) and configure autoscale rules to scale out or in. Azure Automation is subject to scaling as well.

Features and Functionality of Microsoft Azure Cloud Scalability

Unlike on-premises scaling, which requires procuring extra hardware, resources in the Azure cloud can easily be scaled up and down based on the customer's needs. Azure offers several scaling options, including:

- Scaling up and down

- Scaling in and out

- Autoscaling

1. Scaling up

Scaling up is often done on-premises when more capacity and performance are needed to serve a growing number of users.

Adding more resources to an existing physical or virtual server is referred to as scaling up. Adding CPU, memory, or storage resources is an example of this.

2. Scaling down

Scaling down removes resources from an existing physical or virtual server, which reduces the server's available capacity. It is typically not done on-premises.

3. Scaling out

Scaling out is the process of adding more servers and capacity to an existing system: additional servers run the business-critical applications and share the load with the existing servers.

The new servers are added to the pool of resources using load balancers and other technologies, and traffic is directed to them accordingly.
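Adding servers to a pool and directing traffic to them can be sketched with a toy round-robin balancer in Python. Round robin is chosen only for simplicity: Azure Load Balancer, for instance, distributes new flows using a hash of connection properties rather than strict rotation. The `RoundRobinPool` class and server names are hypothetical.

```python
class RoundRobinPool:
    """Toy load balancer: hands out servers in rotation from a mutable pool."""

    def __init__(self, servers: list[str]):
        self.servers = list(servers)
        self._counter = 0

    def add(self, server: str) -> None:
        # Scale out: the new server receives traffic on later requests.
        self.servers.append(server)

    def remove(self, server: str) -> None:
        # Scale in: the server stops receiving new requests.
        self.servers.remove(server)

    def route(self) -> str:
        if not self.servers:
            raise RuntimeError("no servers in the pool")
        server = self.servers[self._counter % len(self.servers)]
        self._counter += 1
        return server

pool = RoundRobinPool(["vm-1", "vm-2"])
print([pool.route() for _ in range(4)])  # ['vm-1', 'vm-2', 'vm-1', 'vm-2']
pool.add("vm-3")
print(sorted(pool.route() for _ in range(3)))  # ['vm-1', 'vm-2', 'vm-3']
```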

4. Scaling In

The opposite of scaling out is scaling in. This entails removing a subset of server resources from the pool of servers running existing workloads and de-provisioning existing servers and capacity.

5. Auto Scaling in Azure

Autoscaling performs scaling operations, such as scaling up/down or in/out, automatically based on workload demand.

Autoscaling ensures that the right amount of resources is available for the workload's demands while also maintaining operational efficiency. This helps eliminate wasted or unnecessary resources.

Azure PaaS Scalability

Azure App Service is delivered through Azure's Platform-as-a-Service (PaaS) offering. It is a managed platform that lets operations teams and developers deploy apps on top of the service without worrying about the underlying infrastructure.

There are several tiers of PaaS service available, listed below. Every tier except Free and Shared includes scaling options you can take advantage of.

| Tier | Scaling capabilities |
|---|---|
| Free | No scalability features included |
| Shared | No scalability features included |
| Basic | Manual scaling capabilities |
| Standard | Automatic scaling capabilities |
| Premium | Automatic scaling capabilities |

Azure IaaS Scalability

Customers can host their own infrastructure platform in the Azure cloud using the Azure Infrastructure-as-a-Service (IaaS) offering. The difference with IaaS is that customers are responsible for managing the infrastructure, such as virtual machines.

Customers can use VM scale sets in Azure IaaS to handle day-to-day operational challenges such as patching and other maintenance tasks.

Azure VM Scale Sets

VM scale sets let you create and manage a group of load-balanced, identical VMs. The number of VM instances can increase or decrease automatically in response to demand or on a defined schedule.

This aids in the application’s high availability and enables central administration of configuration, upgrading, and other duties.

The use of VM scale sets improves workload performance, redundancy, and distribution across several instances.

When maintenance, updates, reboots, or other procedures are required, traffic can easily be diverted to another available application instance. To scale the workload, simply provision new application instances in the scale set.

VM Scale Set Auto Scaling

You can automatically increase or decrease the number of VM instances that run your application using the autoscaling capability built into VM scale sets.

This results in an automated and elastic behavior that helps to reduce management overhead in monitoring and adjusting your application’s performance.

You use rules to define what acceptable performance looks like for the VM scale set (VMSS).

The scale set distributes traffic through a load balancer after the VM instances are created.

Rules monitor metrics such as CPU and memory usage, along with how long the load must stay at a given level before an action is taken. Depending on the demands of the environment, autoscale rules can both increase and decrease resources.
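The "how long the load must stay at a level" part of a rule can be sketched as a rolling average plus a cooldown. In this hypothetical Python class, a rule fires only when the average of the last `window` samples exceeds `threshold`, and then ignores further samples for `cooldown` intervals so new instances have time to take load. Azure autoscale exposes similar ideas through its time window and cooldown settings, but the class, parameter names, and numbers here are illustrative assumptions.

```python
from collections import deque

class SustainedThresholdRule:
    """Fire when the rolling average over `window` samples exceeds `threshold`,
    then stay quiet for `cooldown` samples."""

    def __init__(self, threshold: float, window: int, cooldown: int):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # keeps only the last `window` values
        self.cooldown = cooldown
        self._cooldown_left = 0

    def observe(self, value: float) -> bool:
        """Record one metric sample (e.g. one minute of CPU %); True means scale out."""
        self.samples.append(value)
        if self._cooldown_left > 0:
            self._cooldown_left -= 1
            return False
        window_full = len(self.samples) == self.samples.maxlen
        if window_full and sum(self.samples) / len(self.samples) > self.threshold:
            self._cooldown_left = self.cooldown
            return True
        return False

rule = SustainedThresholdRule(threshold=70, window=3, cooldown=2)
print([rule.observe(v) for v in [95, 40, 40, 80, 80, 80, 80, 80, 80]])
```

With these inputs the rule ignores the brief 95% spike, fires once the 3-sample average reaches 80%, stays quiet for two samples, and then fires again because load is still high.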

Conclusion

Because cloud computing appears to offer virtually unlimited scalability to the consumer, it lets us take advantage of these demand patterns while keeping costs low. If we correctly account for vertical and horizontal scaling approaches, we can build a system that automatically responds to user demand, allocating and deallocating resources as needed.
