Basics of PyTorch Neural Network


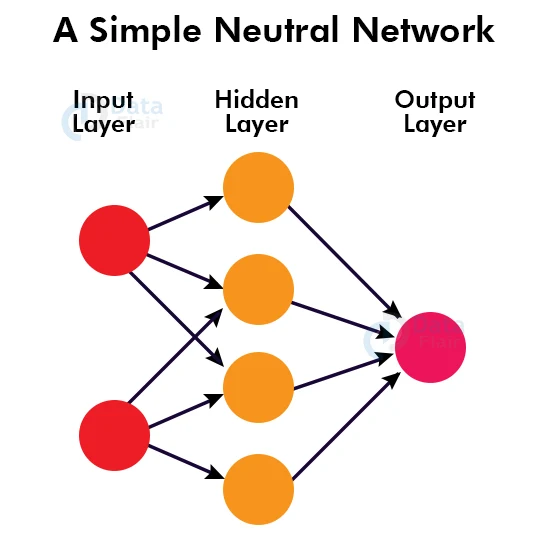

The inspiration for neural networks in deep learning comes from the neural networks of the human brain. Like the brain, these networks, usually called Artificial Neural Networks (ANNs), are collections of connected nodes that pass information from one layer to the next. There are three kinds of layers: input, hidden and output layers. The input layer receives the data, and the output layer produces the result. The hidden layers between them extract useful information from the input, which is then used to compute the output.

Structure of a Neural Network

The three kinds of layers in a neural network are the input layer, the hidden layer(s) and the output layer.

a. Input Layer

The input layer holds the features we have collected about the data and feeds them into the deep learning model. It is often called a passive layer because its nodes do not receive information from any other node and perform no computation; they simply pass the input values on to the first hidden layer.

b. Hidden Layer

A deep learning model can have one or many hidden layers, each with one or many nodes. Each node applies an activation function, which defines what the node does with the value it receives. For example, a node using the ReLU (rectified linear unit) activation passes a real number to the next layer unchanged if it is greater than zero and outputs 0 otherwise. Another node might instead transmit some other function of its input, such as its sine.
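A minimal sketch of the ReLU activation described above, in plain Python so its behavior is explicit. Sigmoid and tanh, two other widely used activations, are included for comparison; they are not part of the original text.

```python
import math

def relu(z: float) -> float:
    """ReLU: pass positive values through unchanged, output 0 otherwise."""
    return z if z > 0 else 0.0

def sigmoid(z: float) -> float:
    """Sigmoid: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z: float) -> float:
    """Tanh: squash any real number into the range (-1, 1)."""
    return math.tanh(z)

for z in (-2.0, 0.0, 3.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}")
```

In PyTorch, the same functions are available as `torch.relu`, `torch.sigmoid` and `torch.tanh`.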

A node can process its input in infinitely many ways, each trying to extract information that helps with prediction or classification. PyTorch makes it easy to build these hidden layers. Adding hidden layers lets a model learn more complex patterns, but it does not automatically make the results more accurate: too many layers can cause the model to overfit, as discussed below.

c. Output Layer

This layer produces the final result of the deep learning model. It is the last layer and takes its input from the last hidden layer.

Working of Neural Network

The figure above shows a network with two nodes in the input layer, four in the hidden layer and one in the output layer. Every node is connected to every node in the next layer, and each of these connections (junctions) has a weight associated with it.

For example, let the inputs be x1 and x2, and let the weights of the junctions connecting the first input node to the four hidden nodes be w11, w12, w13 and w14, respectively. Similarly, the weights of the junctions leaving the second input node are w21, w22, w23 and w24.

The input to the first hidden node is then x1*w11 + x2*w21. For the second, third and fourth hidden nodes, the inputs are x1*w12 + x2*w22, x1*w13 + x2*w23 and x1*w14 + x2*w24, respectively. Now let f1, f2, f3 and f4 be the activation functions of the four hidden nodes. The outputs of these nodes are then f1(x1*w11 + x2*w21), f2(x1*w12 + x2*w22), f3(x1*w13 + x2*w23) and f4(x1*w14 + x2*w24).
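The hidden-layer computation above can be written out directly. This sketch assumes hypothetical input and weight values and, for simplicity, uses ReLU as the activation for all four hidden nodes (f1 = f2 = f3 = f4); the text itself leaves the activation functions unspecified.

```python
def relu(z: float) -> float:
    return z if z > 0 else 0.0

# Hypothetical inputs x1, x2
x1, x2 = 1.0, 2.0

# Hypothetical weights: w[i][j] connects input node i+1 to hidden node j+1
w = [
    [0.5, -1.0, 0.25, 2.0],   # w11, w12, w13, w14
    [1.5, 0.25, 0.5, -1.5],   # w21, w22, w23, w24
]

# Input to hidden node j: x1*w1j + x2*w2j
hidden_inputs = [x1 * w[0][j] + x2 * w[1][j] for j in range(4)]

# Output of hidden node j: f_j(input), here ReLU for every node
hidden_outputs = [relu(z) for z in hidden_inputs]

print(hidden_inputs)   # [3.5, -0.5, 1.25, -1.0]
print(hidden_outputs)  # [3.5, 0.0, 1.25, 0.0]
```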

In the next step, the outputs of the hidden layer are passed to the output layer in the same way: each is multiplied by the weight of its junction, the products are summed, and the output node applies its activation function. The output is then compared with the actual label or value, and the weights of all the junctions are adjusted using gradient descent. This cycle repeats throughout training, so the weights keep changing as the model learns.
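To see how gradient descent adjusts a weight, here is a minimal sketch of the smallest possible case: a single weight `w`, a model that predicts `w * x`, and a mean squared error loss whose gradient is derived by hand. The training data and learning rate are hypothetical. In a real network, the gradients of all the weights are computed by backpropagation; in PyTorch, calling `loss.backward()` does this automatically.

```python
# Hypothetical training data generated from y = 2x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w = 0.0               # initial weight
learning_rate = 0.05  # hypothetical step size

for step in range(100):
    # Forward pass: predictions of the one-weight model y_hat = w * x
    preds = [w * x for x in xs]
    # Gradient of the mean squared error with respect to w:
    # d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x)
    grad = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / len(xs)
    # Gradient descent step: move w against the gradient
    w -= learning_rate * grad

print(f"learned weight: {w:.4f}")  # close to 2.0
```

Each step compares the predictions with the labels, measures how the error changes as `w` changes, and nudges `w` in the direction that reduces the error.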

The process is the same in a model with multiple hidden layers: for each node, we first compute its input and then apply the activation function to get its output. Adding more layers can increase the accuracy on the training dataset but may decrease the accuracy on the test dataset. This phenomenon is called overfitting. Therefore, we have to find the sweet spot where both the training and test datasets have acceptable accuracy.

Feed Forward Neural Network

A network in which information flows in one direction only, from the input layer through the hidden layers to the output layer, is called a Feed Forward Neural Network. These are the simplest kind of network, as they have no feedback loops or convolutional layers. Each layer multiplies its inputs by the corresponding weights, sums the products and passes the result through an activation function. The final output is then compared with the given labels, and the weights are adjusted accordingly.
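A feed-forward pass is this multiply, sum and activate step applied layer by layer, with no connections looping back. The sketch below stacks fully connected layers in plain Python; the layer sizes follow the 2-4-1 network in the figure, but the weights are hypothetical, and biases are omitted to match the description above. In PyTorch, a fully connected layer is provided by `torch.nn.Linear`, which also adds a bias by default.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # weights[i][j] connects node i of one layer to node j of the next
Layer = Tuple[Matrix, Callable[[float], float]]

def relu(z: float) -> float:
    return z if z > 0 else 0.0

def identity(z: float) -> float:
    return z

def dense(inputs: Vector, weights: Matrix, activation: Callable[[float], float]) -> Vector:
    """One fully connected layer: a weighted sum per output node, then the activation."""
    n_out = len(weights[0])
    sums = [sum(inputs[i] * weights[i][j] for i in range(len(inputs))) for j in range(n_out)]
    return [activation(s) for s in sums]

def feed_forward(x: Vector, layers: List[Layer]) -> Vector:
    """Push x through the layers in order; information only flows forward."""
    for weights, activation in layers:
        x = dense(x, weights, activation)
    return x

# Hypothetical 2-4-1 network: ReLU hidden layer, linear (identity) output node
layers: List[Layer] = [
    ([[1.0, -0.5, 0.5, 0.0],
      [0.5, 0.5, 0.5, 2.0]], relu),
    ([[0.5], [1.0], [1.0], [-0.25]], identity),
]

print(feed_forward([2.0, 1.0], layers))  # [2.25]
```

Adding a hidden layer is just one more `(weights, activation)` entry in `layers`, which is why the same procedure works for networks of any depth.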

Convolutional Neural Networks

Convolutional neural networks (CNNs) are pattern detectors mainly used for image classification. Their hidden layers include convolutional layers with filters that detect specific features, such as edges or circles. For example, in the MNIST dataset of handwritten digits, when an image of the digit 8 is passed to the network, the filters might detect two circles, whereas for 0 they would detect only one; this is one way the network can tell the two digits apart. A filter (also called a kernel) is a small matrix of values that helps detect a feature.

For example, suppose the pixel data of an image is a 700 x 700 matrix and the filter is a 5 x 5 matrix. The layer first computes the dot product of the filter with the top-left 5 x 5 block of pixels, multiplying the matching elements and adding up the 25 products. It then slides the filter across the image, repeats this for every possible 5 x 5 block, and stores each result in a new matrix that becomes the output of the current layer. Moving one pixel at a time with no padding, the filter fits in 700 - 5 + 1 = 696 positions along each side, so the output is a 696 x 696 matrix. This process of computing the dot product of the filter with the image by sweeping it over the entire matrix is called convolution.
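The sliding dot product described above can be sketched in plain Python. This version assumes a step (stride) of one pixel and no padding, and uses a small hypothetical image and a hand-picked vertical-edge filter rather than a 700 x 700 image; in a trained CNN the filter values are learned. Like PyTorch's `torch.nn.Conv2d`, it does not flip the filter, which is strictly speaking cross-correlation, although deep learning libraries conventionally call it convolution.

```python
from typing import List

Matrix = List[List[float]]

def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, no padding), taking a dot product at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1      # e.g. 700 - 5 + 1 = 696
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Dot product of the kernel with the kh x kw patch whose top-left corner is (r, c)
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Hypothetical 4 x 5 grayscale image: dark (0) on the left, bright (1) on the right
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-picked 3 x 3 filter that responds to vertical dark-to-bright edges
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, kernel):
    print(row)  # each row prints [3.0, 3.0, 0.0]
```

The output is 2 x 3 (4 - 3 + 1 rows, 5 - 3 + 1 columns). The filter responds only where its window straddles the boundary between the dark and bright columns, and outputs 0 over the uniformly bright region.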

Recurrent Neural Network

In Recurrent Neural Networks (RNNs), the hidden layer has a feedback loop that lets the network consider both the current input and its previous state. They are mostly used for sequence prediction tasks, such as predicting the next word in a sentence.
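A minimal sketch of the feedback loop, assuming the simplest form of recurrent cell with a single scalar hidden state and hypothetical weights `w_x`, `w_h` and `b`. At each step, the new state depends on the current input and on the previous state, so earlier inputs keep influencing later outputs. PyTorch's `torch.nn.RNN` implements the vector version of this cell, with tanh as its default nonlinearity.

```python
import math
from typing import List

def rnn_step(x_t: float, h_prev: float, w_x: float, w_h: float, b: float) -> float:
    """One step of a simple RNN: combine the current input with the previous hidden state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence: List[float], w_x: float = 0.8, w_h: float = 0.5, b: float = 0.0) -> List[float]:
    """Run the cell over a sequence; the default weights are hypothetical."""
    h = 0.0  # initial hidden state: no history yet
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)  # feedback loop: h is fed into the next step
        states.append(h)
    return states

# The state stays nonzero after the input drops to 0, fading gradually
print(run_rnn([1.0, 0.0, 0.0]))
```

Because of the feedback loop, the order of the inputs matters: `[1.0, 0.0]` and `[0.0, 1.0]` end in different states.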

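Their performance, especially on long sequences, can be improved by gating. A gate here is not a logical gate but a learned value between 0 and 1 that controls how much information passes through. The LSTM (Long Short-Term Memory) architecture uses such gates to decide how much previous information to forget, how much new information to store and how much to output.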
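The gating idea can be sketched with a single-unit LSTM step in plain Python. It follows the standard LSTM equations: sigmoid gates (values between 0 and 1) for forgetting, storing and outputting, plus a tanh candidate value. The gate names used as dictionary keys and the parameter values are hypothetical, chosen so the effect of each gate is easy to see; a trained LSTM learns them, and PyTorch's `torch.nn.LSTM` implements the vector version.

```python
import math
from typing import Dict, Tuple

# Each gate has (input weight, recurrent weight, bias); the key names are illustrative
Params = Dict[str, Tuple[float, float, float]]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t: float, h_prev: float, c_prev: float, p: Params) -> Tuple[float, float]:
    """One step of a single-unit LSTM; returns the new hidden state h and cell state c."""
    def pre(gate: str) -> float:
        w_x, w_h, b = p[gate]
        return w_x * x_t + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old memory to keep
    i = sigmoid(pre("input"))         # how much of the new candidate to store
    g = math.tanh(pre("candidate"))   # new information proposed at this step
    o = sigmoid(pre("output"))        # how much of the memory to expose
    c = f * c_prev + i * g            # updated cell state (long-term memory)
    h = o * math.tanh(c)              # new hidden state (output)
    return h, c

# Hypothetical settings: forget gate near 1 and input gate near 0 ...
remember: Params = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
                    "candidate": (1.0, 0.0, 0.0), "output": (0.0, 0.0, 0.0)}
# ... versus forget gate near 0 and input gate near 1
overwrite: Params = {"forget": (0.0, 0.0, -10.0), "input": (0.0, 0.0, 10.0),
                     "candidate": (1.0, 0.0, 0.0), "output": (0.0, 0.0, 0.0)}

_, c_kept = lstm_step(0.5, 0.0, 0.7, remember)
_, c_new = lstm_step(0.5, 0.0, 0.7, overwrite)
print(f"remember:  cell state {c_kept:.3f}")   # stays close to the old value 0.7
print(f"overwrite: cell state {c_new:.3f}")    # close to tanh(0.5), the new candidate
```

With the first setting the cell keeps its old memory and ignores the new input; with the second it forgets the old memory and stores the new candidate instead.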

Summary

Artificial Neural Networks are loosely modeled on the brain's neural networks. They have three kinds of layers (input, hidden and output), each performing the function its name indicates. A network can be feed-forward, with information propagating only in the forward direction; convolutional, with convolutional hidden layers responsible for detecting patterns; or recurrent, with feedback links that give it memory.
