Logistic Regression with PyTorch


Logistic Regression is a classification algorithm used when there are only two possible outcomes (binary classification). Applications with binary outcomes include spam detection, cancer tumour detection and fraudulent transaction detection. Let us learn about Logistic Regression with PyTorch.

Why Not Linear Regression?

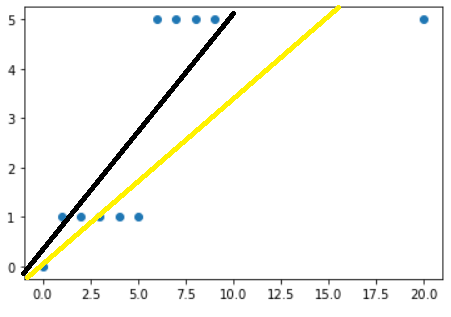

Linear Regression fits a straight line to the training dataset. To use it for a classification problem, we could fit a line and use it to divide the dataset into two sets, for example by assigning class 1 wherever the line's prediction is at least 0.5.

However, this approach is unreliable. Even if the fitted line classifies the training samples well, adding a single sample can skew the line enough to misclassify samples that were previously classified correctly.

In the image above, linear regression classifies the training samples with minimal error (black line). When one more point is added, however, the line shifts and many of the samples are misclassified (yellow line). This is why linear regression does not work well for classification problems.
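The effect in the figure can be reproduced with a few lines of NumPy. The sketch below uses hypothetical one-dimensional data, not the data behind the figure. It fits a least-squares line to 0/1 labels with `np.polyfit` and labels a sample 1 when the line's value is at least 0.5. It then refits after adding a single distant class-1 sample.

```python
import numpy as np

# Hypothetical 1-D training data: small x values are class 0, large ones class 1
x = np.arange(1, 9, dtype=float)          # 1, 2, ..., 8
y = np.array([0, 0, 0, 0, 1, 1, 1, 1])


def fit_and_classify(x_fit, y_fit, x_eval):
    """Fit y ~ slope * x + intercept by least squares, then threshold at 0.5."""
    slope, intercept = np.polyfit(x_fit, y_fit, 1)
    boundary = (0.5 - intercept) / slope   # x value where the line crosses 0.5
    pred = (slope * x_eval + intercept >= 0.5).astype(int)
    return pred, boundary


# Fit on the original eight samples
pred_before, boundary_before = fit_and_classify(x, y, x)

# Add one extra class-1 sample far to the right, refit, and re-classify the originals
x_new = np.append(x, 100.0)
y_new = np.append(y, 1)
pred_after, boundary_after = fit_and_classify(x_new, y_new, x)

print("boundary before:", boundary_before)
print("boundary after: ", boundary_after)
print("misclassified original samples after refit:", int((pred_after != y).sum()))
```

With these numbers, the 0.5 crossing point moves from x = 4.5 to roughly x = 6.1. The class-1 samples at x = 5 and x = 6 are now labelled 0, even though the new sample itself lies on the correct side.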

PyTorch Logistic Regression Basics

Logistic Regression computes the probability that a sample belongs to each of the two categories and assigns it to the more probable one. The hypothesis function hθ(x) gives the probability that the sample belongs to class y = 1, so the decision rule is:

If hθ(x) ≥ 0.5, predict y = 1.

If hθ(x) < 0.5, predict y = 0.

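hθ is calculated by applying the sigmoid (logistic) function to a linear combination of the features:

$$h_\theta(X) = \frac{1}{1 + e^{-X\theta}}$$

Here X is the feature matrix of the input dataset, θ is the vector of model parameters, and the function is applied element-wise, giving one probability per sample.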
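The hypothesis and the decision rule translate directly into code. This is a minimal NumPy sketch. `theta` and `X` are hypothetical values, and no bias term is included (one can be added by appending a column of ones to X). The sigmoid equals 0.5 exactly when its input is 0, so the rule hθ(x) ≥ 0.5 is the same as θᵀx ≥ 0. The decision boundary is therefore the line θᵀx = 0.

```python
import numpy as np


def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z)), applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))


def hypothesis(X, theta):
    """h_theta(X): probability that each row of X belongs to class y = 1."""
    return sigmoid(X @ theta)


def predict(X, theta):
    """Decision rule: y = 1 if h_theta >= 0.5, otherwise y = 0."""
    return (hypothesis(X, theta) >= 0.5).astype(int)


# Hypothetical parameters and samples, chosen only for illustration
theta = np.array([1.0, -1.0])
X = np.array([[2.0, 1.0],
              [1.0, 2.0],
              [3.0, 3.0]])

print(hypothesis(X, theta))  # probabilities for each sample
print(predict(X, theta))     # [1 0 1]
```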

Logistic Regression in Python

a. Importing the required libraries

```python
from sklearn.datasets import make_blobs
from sklearn.datasets import make_classification
from matplotlib import pyplot as plt
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.metrics import confusion_matrix
import pandas as pd
import numpy as np
```

b. Building the dataset

We will generate some synthetic data for this study and build a logistic regression model that predicts the class of each sample in the test dataset.

```python
x, y = make_blobs(n_samples=[200, 200], random_state=2)
```

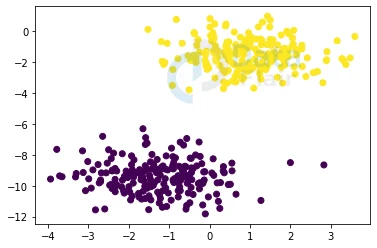
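Passing a list to `n_samples` tells `make_blobs` how many points to draw for each cluster, so this call produces two balanced classes. A quick check of the shapes and class counts:

```python
import numpy as np
from sklearn.datasets import make_blobs

# A list for n_samples means "this many points per blob"; two entries give two classes
x, y = make_blobs(n_samples=[200, 200], random_state=2)

print(x.shape)         # (400, 2): 400 samples with 2 features (the default n_features)
print(np.bincount(y))  # [200 200]: 200 samples labelled 0 and 200 labelled 1
```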

c. Visualising the dataset

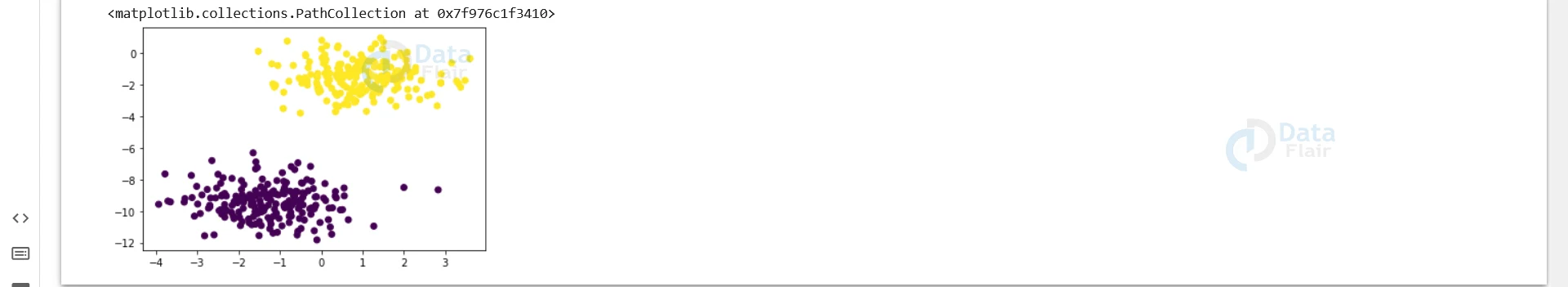

```python
# Scatter the two features, colouring each point by its class label
plt.scatter(x[:, 0], x[:, 1], c=y)
plt.show()
```

d. Splitting the training and test data

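To build a model, we need a training dataset and a test dataset. The model is trained on the training dataset and then evaluated on the test dataset, which it has not seen during training. By default, train_test_split holds out 25% of the samples for testing.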

```python
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1)
```
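With the default `test_size` of 0.25, this split puts 300 samples in the training set and 100 in the test set. The sketch below checks this. As an optional variation not used in the article, it also passes `stratify=y` so that both subsets keep the 50/50 class balance.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split

x, y = make_blobs(n_samples=[200, 200], random_state=2)

# Default split: test_size=0.25, so 100 of the 400 samples go to the test set
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1)
print(len(x_train), len(x_test))  # 300 100

# Optional variation: stratify=y preserves the class proportions in each subset
xs_train, xs_test, ys_train, ys_test = train_test_split(
    x, y, random_state=1, stratify=y
)
print(np.bincount(ys_test))  # [50 50]
```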

e. Building the model

We create an instance of scikit-learn's LogisticRegression class to initialise our model, and then train it on the training dataset with fit().

```python
log_reg = LogisticRegression()
log_reg.fit(x_train, y_train)
```

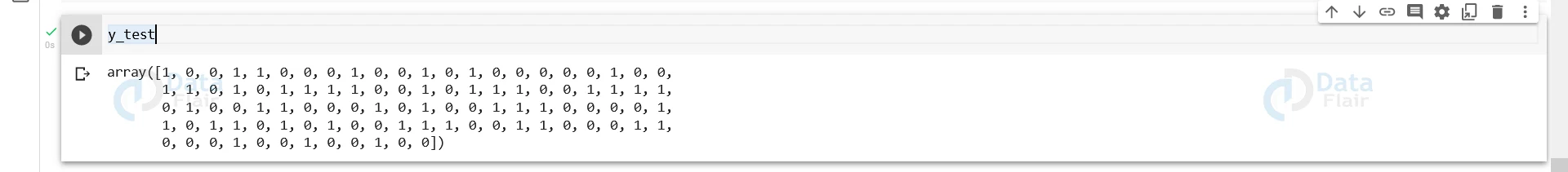
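The fitted model can be related back to the hypothesis function. scikit-learn stores the parameters θ as `coef_` (one weight per feature) and `intercept_` (the bias). By default it also applies L2 regularisation (C=1.0) during fitting. The sketch below rebuilds the class-1 probabilities from these attributes with the sigmoid formula and compares them with `predict_proba`. For a binary problem, the two should agree.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

x, y = make_blobs(n_samples=[200, 200], random_state=2)
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1)
log_reg = LogisticRegression().fit(x_train, y_train)

# Learned parameters: a (1, 2) weight array and a length-1 intercept array
print("weights:", log_reg.coef_)
print("intercept:", log_reg.intercept_)

# Rebuild h_theta(x) = 1 / (1 + e^-(x . w + b)) by hand for every test sample
z = x_test @ log_reg.coef_.ravel() + log_reg.intercept_[0]
manual_proba = 1.0 / (1.0 + np.exp(-z))

# Column 1 of predict_proba holds the probability of class y = 1
print(np.allclose(manual_proba, log_reg.predict_proba(x_test)[:, 1]))
```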

f. Prediction on the test dataset

Finally, we make predictions on the test dataset and evaluate them with a confusion matrix.

```python
pred = log_reg.predict(x_test)
y_test
```

Output:

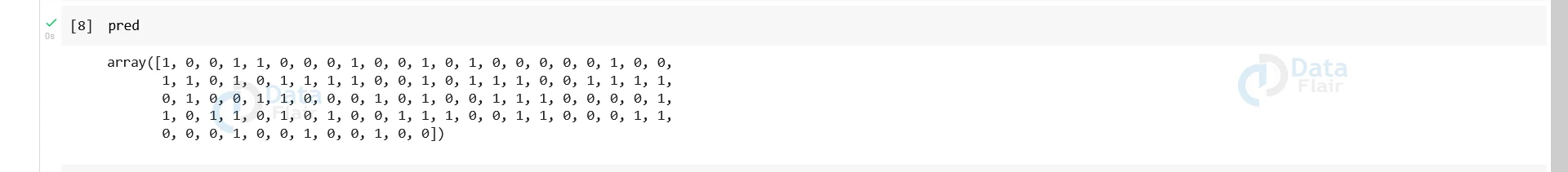

```python
pred
```

Output:

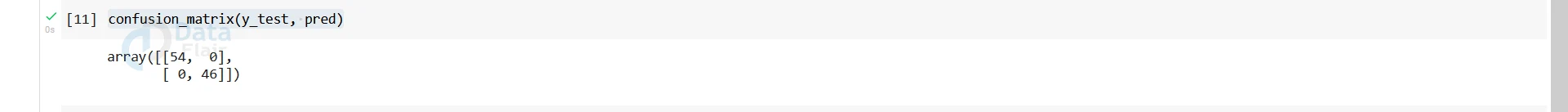

```python
confusion_matrix(y_test, pred)
```

Output:

The confusion matrix contains only true positives and true negatives (its off-diagonal entries are zero), meaning that all test samples are correctly classified.
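For binary labels, scikit-learn's confusion matrix has true classes as rows and predicted classes as columns, so `.ravel()` yields the counts in the order TN, FP, FN, TP. The short sketch below unpacks the counts and derives accuracy from them, cross-checked against `accuracy_score`.

```python
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split

x, y = make_blobs(n_samples=[200, 200], random_state=2)
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1)
pred = LogisticRegression().fit(x_train, y_train).predict(x_test)

# Layout for labels [0, 1] (rows = true class, columns = predicted class):
# [[TN, FP],
#  [FN, TP]]
tn, fp, fn, tp = confusion_matrix(y_test, pred).ravel()
print("TN, FP, FN, TP:", tn, fp, fn, tp)

# Accuracy = correct predictions (the diagonal) / all predictions
accuracy = (tp + tn) / (tn + fp + fn + tp)
print("accuracy:", accuracy, accuracy_score(y_test, pred))
```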

Implementing Logistic Regression using PyTorch:

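We can also use PyTorch to build a logistic regression model. Here the model is a single linear layer followed by a sigmoid, trained with binary cross-entropy loss.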

a. Importing the required libraries:

```python
from sklearn.datasets import make_blobs
import torch
import torch.nn as nn
import numpy as np
from matplotlib import pyplot as plt
```

b. Building the dataset

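We will create random samples with labels 0 or 1 and convert them to float32 tensors. The labels are reshaped into a column so that their shape, (400, 1), matches the model's output.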

```python
x, y = make_blobs(n_samples=[200, 200], random_state=2)

# Convert the NumPy arrays to float32 tensors; labels become a (400, 1) column
x_train = torch.from_numpy(x).float()
y_train = torch.from_numpy(y).float().reshape(-1, 1)

plt.scatter(x[:, 0], x[:, 1], c=y)
```

c. Building our Logistic Regression Model

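```python
class LogReg(nn.Module):
    def __init__(self):
        super().__init__()
        # One linear layer: 2 input features -> 1 output score (weights plus bias)
        self.l1 = nn.Linear(2, 1)

    def forward(self, x):
        # The sigmoid squashes the score into the probability of class 1
        y_pred = torch.sigmoid(self.l1(x))
        return y_pred


model = LogReg()
```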
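`nn.Linear(2, 1)` holds the weights and bias, so the `LogReg` module implements hθ(x) = σ(θᵀx) from the basics section. The sketch below inspects the parameter shapes and runs a forward pass on a hypothetical random batch. The fixed seed is an assumption for repeatability. The `nn.Sequential` version is an equivalent alternative, not the article's code.

```python
import torch
import torch.nn as nn


class LogReg(nn.Module):
    def __init__(self):
        super().__init__()
        self.l1 = nn.Linear(2, 1)

    def forward(self, x):
        return torch.sigmoid(self.l1(x))


torch.manual_seed(0)  # assumption: fixed seed so the run is repeatable
model = LogReg()

# The parameters theta: a 1x2 weight matrix and a single bias
print(model.l1.weight.shape, model.l1.bias.shape)  # torch.Size([1, 2]) torch.Size([1])

# A hypothetical batch of 5 samples with 2 features each
batch = torch.randn(5, 2)
probs = model(batch)
print(probs.shape)  # torch.Size([5, 1]): one probability per sample

# Equivalent model written with nn.Sequential (alternative, not the article's code)
seq_model = nn.Sequential(nn.Linear(2, 1), nn.Sigmoid())
print(seq_model(batch).shape)  # torch.Size([5, 1])
```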

d. Training the model

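Now we define the optimiser and the loss function. The optimiser is stochastic gradient descent (SGD) with a learning rate of 0.01. The loss is binary cross-entropy (BCELoss), which compares the predicted probabilities with the 0/1 labels.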

```python
optimiser = torch.optim.SGD(model.parameters(), lr=0.01)
criterion = torch.nn.BCELoss()
```
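`BCELoss` computes the binary cross-entropy between predicted probabilities p and labels y, averaged over the batch: −mean(y·log p + (1 − y)·log(1 − p)). The sketch below checks this against a manual computation on hypothetical values. It also shows `BCEWithLogitsLoss`, which the PyTorch documentation describes as more numerically stable than a sigmoid followed by `BCELoss`. It takes raw scores (logits) instead of probabilities, so a model using it would drop the `torch.sigmoid` call.

```python
import torch

criterion = torch.nn.BCELoss()

# Hypothetical predicted probabilities and labels, shaped (N, 1) like the model output
p = torch.tensor([[0.9], [0.2], [0.6]])
y = torch.tensor([[1.0], [0.0], [1.0]])

# Binary cross-entropy written out: -mean(y * log(p) + (1 - y) * log(1 - p))
manual = -(y * torch.log(p) + (1 - y) * torch.log(1 - p)).mean()
print(criterion(p, y).item(), manual.item())

# BCEWithLogitsLoss expects logits; log(p / (1 - p)) inverts the sigmoid
logits = torch.log(p / (1 - p))
print(torch.nn.BCEWithLogitsLoss()(logits, y).item())
```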

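Finally, we train the model for 100 epochs. Here each epoch is a single full-batch pass: a forward pass over all training samples, a loss computation, backpropagation and one parameter update.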

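```python
for epoch in range(100):
    optimiser.zero_grad()               # clear gradients from the previous step
    y_pred = model(x_train)             # forward pass: predicted probabilities
    loss = criterion(y_pred, y_train)   # binary cross-entropy loss
    loss.backward()                     # backpropagate to compute gradients
    optimiser.step()                    # update the weights and bias
```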
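Recording the loss during training confirms that it decreases. The sketch below repeats the training setup end to end. For brevity, it swaps `LogReg` for the equivalent `nn.Sequential(nn.Linear(2, 1), nn.Sigmoid())` and uses `torch.tensor(..., dtype=torch.float32)` for the conversion. It also fixes the random seed (an assumption, so the run is repeatable).

```python
import torch
import torch.nn as nn
from sklearn.datasets import make_blobs

torch.manual_seed(0)  # assumption: fixed seed for a repeatable initialisation

x, y = make_blobs(n_samples=[200, 200], random_state=2)
x_train = torch.tensor(x, dtype=torch.float32)
y_train = torch.tensor(y, dtype=torch.float32).reshape(-1, 1)

# Same architecture as LogReg: one linear layer followed by a sigmoid
model = nn.Sequential(nn.Linear(2, 1), nn.Sigmoid())
optimiser = torch.optim.SGD(model.parameters(), lr=0.01)
criterion = nn.BCELoss()

losses = []
for epoch in range(100):
    optimiser.zero_grad()
    loss = criterion(model(x_train), y_train)
    loss.backward()
    optimiser.step()
    losses.append(loss.item())  # loss measured before this epoch's update
    if epoch % 20 == 0:
        print(f"epoch {epoch:3d}  loss {loss.item():.4f}")

print(f"final recorded loss {losses[-1]:.4f}")
```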

e. Predicting using the model

```python
# Inference only: no gradients are needed
with torch.no_grad():
    y_prediction = model(x_train)
y_prediction[:5]
```

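The model outputs, for each sample, the probability that it belongs to class 1. To obtain class labels, we convert these probabilities to 0s and 1s using the 0.5 threshold from the decision rule.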

```python
# Probability >= 0.5 means class 1, otherwise class 0
y_prediction = (y_prediction >= 0.5).int()
y_prediction[:5]
```

Output:
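To judge the model, the thresholded predictions can be compared with the true labels. The sketch below uses hypothetical probabilities and labels and stays entirely in PyTorch. In the article's setting, `y_prob` would be the output of `model(x_train)` and `y_true` would be `y_train`. Predicting on the training data measures how well the model fits rather than how well it generalises. A held-out test set, as in the scikit-learn section, gives a fairer estimate.

```python
import torch

# Hypothetical model outputs (probabilities of class 1) and true labels, shape (N, 1)
y_prob = torch.tensor([[0.95], [0.10], [0.55], [0.40]])
y_true = torch.tensor([[1.0], [0.0], [0.0], [0.0]])

# Threshold at 0.5, then count how many labels match
y_label = (y_prob >= 0.5).float()
accuracy = (y_label == y_true).float().mean().item()

print(y_label.flatten().tolist())  # [1.0, 0.0, 1.0, 0.0]
print(accuracy)                    # 0.75
```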

Summary

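PyTorch provides an efficient and convenient way to build a logistic regression model from a linear layer, a sigmoid, a binary cross-entropy loss and an optimiser such as SGD. The same approach can be applied to image datasets, since PyTorch tensors make it easy to work with larger, multi-dimensional inputs.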
