Apache Flink Tutorial – Introduction to Apache Flink


In this Apache Flink tutorial, we introduce Apache Flink: what it is, and why and where to use it. We also look at why Apache Flink is sometimes called the 4G of Big Data, and give a brief overview of Flink's APIs and features.

So, let’s start the Apache Flink tutorial.

Introduction to Apache Flink

Apache Flink is an open source platform built around a streaming dataflow engine that provides communication, fault tolerance, and data distribution for distributed computations over data streams. Flink is a top-level Apache project. It is a scalable data analytics framework that is compatible with Hadoop, and it can run both stream processing and batch processing workloads.

Apache Flink started as a project called Stratosphere. In 2008, Volker Markl formed the idea for Stratosphere and attracted other co-principal investigators from HU Berlin, TU Berlin, and the Hasso Plattner Institute Potsdam. Together they worked out a vision and had already put great effort into open source deployment and systems building.

Later on, several decisive steps were taken to make the project popular in the commercial, research, and open source communities. A commercial entity named this project Stratosphere. After the project applied for Apache incubation in April 2014, the name Flink was finalized. Flink is a German word meaning swift or agile.

Why Flink?

The key vision for Apache Flink is to overcome and reduce the complexity that other distributed data-driven engines have faced. This is achieved by integrating query optimization and other concepts from database systems, together with efficient parallel in-memory and out-of-core algorithms, into the MapReduce framework. Apache Flink is mainly based on the streaming model: it iterates over data using a streaming architecture, and iterative algorithms are built into Flink's query optimizer. As a result, Flink's pipelined architecture processes streaming data faster and with lower latency than micro-batch architectures such as Spark Streaming.

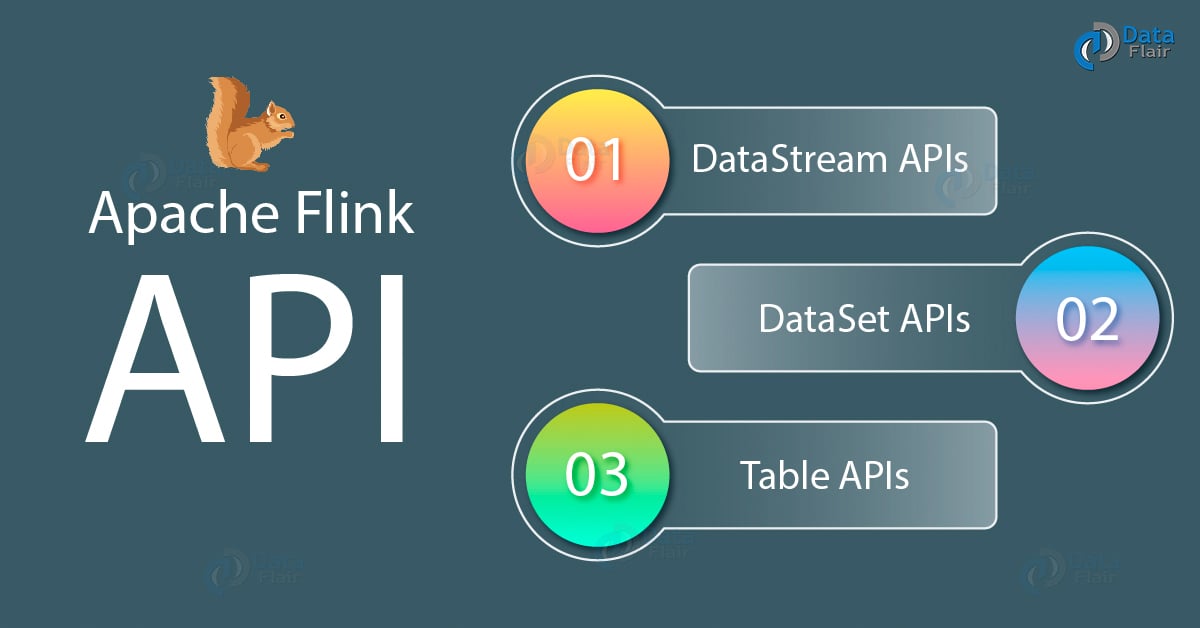
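The latency argument can be made concrete with a toy model. The sketch below is plain Java, not Flink or Spark code, and every parameter in it is an assumption chosen for illustration: records arrive at fixed millisecond timestamps, each record costs the same `processingMs` in both models, and micro-batches close on a fixed `batchIntervalMs` boundary. In the pipelined model a record is processed as soon as it arrives; in the micro-batch model it first waits for its batch to close.

```java
import java.util.Arrays;

/** Toy latency model: pipelined (record-at-a-time) vs. micro-batch processing.
 *  Not Flink or Spark code; assumes a fixed per-record processing cost. */
class LatencyModel {

    /** Pipelined: a record is processed and forwarded as soon as it arrives. */
    static long[] pipelinedLatencies(long[] arrivalsMs, long processingMs) {
        long[] latencies = new long[arrivalsMs.length];
        // No buffering, so each record only pays its own processing cost.
        Arrays.fill(latencies, processingMs);
        return latencies;
    }

    /** Micro-batch: records are buffered until their batch interval closes, then processed. */
    static long[] microBatchLatencies(long[] arrivalsMs, long batchIntervalMs, long processingMs) {
        long[] latencies = new long[arrivalsMs.length];
        for (int i = 0; i < arrivalsMs.length; i++) {
            // Batches cover [k*interval, (k+1)*interval); this record's batch closes at the next boundary.
            long batchEnd = (arrivalsMs[i] / batchIntervalMs + 1) * batchIntervalMs;
            latencies[i] = (batchEnd - arrivalsMs[i]) + processingMs;
        }
        return latencies;
    }

    static double average(long[] values) {
        return Arrays.stream(values).average().orElse(0.0);
    }

    public static void main(String[] args) {
        long[] arrivals = {100, 250, 900, 1200, 1999}; // hypothetical arrival times in ms
        long interval = 1000;                         // hypothetical batch interval
        long cost = 5;                                // hypothetical per-record cost
        System.out.println("pipelined avg:   " + average(pipelinedLatencies(arrivals, cost)) + " ms");
        System.out.println("micro-batch avg: " + average(microBatchLatencies(arrivals, interval, cost)) + " ms");
    }
}
```

In this model the micro-batch latency is dominated by time spent waiting for the batch boundary. The model deliberately ignores throughput, where batching can amortize per-record overhead, so it shows only the latency side of the trade-off.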

Apache Flink Tutorial – APIs

Apache Flink provides several APIs for building applications that run on the Flink engine:

i. DataStream API

A DataStream program is a regular program in Apache Flink that implements transformations on data streams, for example filtering, aggregating, or updating state. Results are returned through sinks, which can, for example, write the data to files or print it to a command-line terminal.
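To show the shape of such a program without pulling in Flink, the sketch below imitates it in plain Java: each (key, value) record is handled as soon as it arrives, passes a filter, updates per-key state in a running sum, and the new aggregate is emitted to a sink. The class `KeyedRunningSum` and its `process` method are hypothetical names; in an actual Flink job these steps would be expressed with DataStream operators such as `filter`, `keyBy`, and `sum`, and a sink such as `print`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Plain-Java imitation of a streaming pipeline: filter -> keyed running sum (state) -> sink. */
class KeyedRunningSum {

    // Per-key state, analogous to the keyed state a streaming operator keeps.
    private final Map<String, Integer> sums = new HashMap<>();
    private final Consumer<String> sink;

    KeyedRunningSum(Consumer<String> sink) {
        this.sink = sink;
    }

    /** Handles one (key, value) record as soon as it arrives. */
    void process(String key, int value) {
        if (value <= 0) {
            return; // filter: drop non-positive values
        }
        int updated = sums.merge(key, value, Integer::sum); // update state for this key
        sink.accept(key + "=" + updated);                   // emit the new aggregate downstream
    }

    public static void main(String[] args) {
        List<String> output = new ArrayList<>();
        KeyedRunningSum op = new KeyedRunningSum(output::add);
        String[] keys = {"a", "b", "a", "b", "a"};
        int[] values = {3, 5, -1, 2, 4};
        for (int i = 0; i < keys.length; i++) {
            op.process(keys[i], values[i]); // a real unbounded source would never stop
        }
        output.forEach(System.out::println); // terminal sink
    }
}
```

Each incoming record produces an updated result immediately instead of waiting for the input to end, which is what distinguishes this style from the batch processing described next.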

ii. DataSet API

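A DataSet program is a regular program in Apache Flink that implements transformations on data sets, for example joining, grouping, mapping, and filtering. We use this API for batch processing of data that is already available in storage. Note that recent Flink releases deprecate the DataSet API (Flink 2.0 removes it) in favor of running batch jobs with the DataStream and Table APIs.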
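For the batch case, the following sketch applies the operations listed above (filter, join, and grouping) to a bounded data set that is fully available before processing starts. It uses plain `java.util.stream`, not Flink's DataSet API, and the `Order` class, customer ids, and city names are made-up sample data.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

/** Batch-style filter, join, and group over bounded in-memory data. */
class BatchJoinGroup {

    /** One order record: which customer placed it and for how much (sample data only). */
    static final class Order {
        final String customerId;
        final int amount;

        Order(String customerId, int amount) {
            this.customerId = customerId;
            this.amount = amount;
        }
    }

    /** Sums order amounts per customer city. */
    static Map<String, Integer> totalByCity(List<Order> orders, Map<String, String> cityByCustomer) {
        return orders.stream()
                // filter: drop orders with a non-positive amount
                .filter(o -> o.amount > 0)
                // inner join: drop orders whose customer has no city entry
                .filter(o -> cityByCustomer.containsKey(o.customerId))
                // group: key each joined order by its customer's city and sum the amounts
                .collect(Collectors.groupingBy(
                        o -> cityByCustomer.get(o.customerId),
                        TreeMap::new, // sorted map for deterministic output
                        Collectors.summingInt(o -> o.amount)));
    }

    public static void main(String[] args) {
        List<Order> orders = List.of(
                new Order("c1", 30), new Order("c2", 20), new Order("c1", 10),
                new Order("c3", -5), new Order("c9", 50));
        Map<String, String> cities = Map.of("c1", "Berlin", "c2", "Potsdam", "c3", "Berlin");
        System.out.println(totalByCity(orders, cities));
    }
}
```

The join here is a simple hash lookup against an in-memory map; a distributed engine would additionally have to partition or broadcast the inputs so that matching records meet on the same worker.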

iii. Table API

The Table API is used for relational operations. It is a SQL-like expression language for relational stream and batch processing, and it can be embedded in DataStream and DataSet programs.

Features of Apache Flink

In this section of the tutorial, we will discuss various features of Apache Flink:

i. Low Latency and High Performance

Apache Flink provides high performance and low latency without heavy configuration. Its pipelined architecture provides high throughput. Because it processes data at lightning-fast speed, it is sometimes called the 4G of Big Data.

ii. Fault Tolerance

The fault tolerance mechanism in Apache Flink is based on Chandy-Lamport distributed snapshots. This mechanism provides strong consistency guarantees, namely exactly-once consistency for application state.
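The idea behind these snapshots can be illustrated with a heavily simplified model in plain Java. It is not Flink's implementation: there is a single input channel (so the barrier alignment Flink performs across multiple inputs never arises), one stateful operator that sums records, and a failure simulated by a flag. A barrier is assumed after every `barrierInterval` records; when it reaches the operator, the source position and the operator state are recorded together, and recovery rewinds to that checkpoint. The parameters `failAt` and `restoreState` are hypothetical knobs for the demonstration.

```java
/** Simplified barrier-based checkpointing: a replayable source feeding one stateful operator. */
class BarrierCheckpointSketch {

    /** A consistent snapshot: source position and operator state taken at the same barrier. */
    static final class Checkpoint {
        final int sourceOffset;
        final long operatorSum;

        Checkpoint(int sourceOffset, long operatorSum) {
            this.sourceOffset = sourceOffset;
            this.operatorSum = operatorSum;
        }
    }

    /**
     * Sums a replayable log, taking a checkpoint every barrierInterval records.
     * If failAt >= 0, one failure is simulated just before that offset is processed:
     * the source is rewound to the last checkpoint and, if restoreState is true,
     * the operator state is rolled back to the value stored in the same checkpoint.
     */
    static long run(int[] log, int barrierInterval, int failAt, boolean restoreState) {
        Checkpoint last = new Checkpoint(0, 0L); // initial (empty) checkpoint
        long sum = 0;                            // operator state
        int offset = 0;                          // source read position
        boolean failed = false;
        while (offset < log.length) {
            if (!failed && offset == failAt) {
                failed = true;
                offset = last.sourceOffset;      // replay input from the checkpoint
                if (restoreState) {
                    sum = last.operatorSum;      // roll state back to the matching snapshot
                }
                continue;
            }
            sum += log[offset];                  // process one record
            offset++;
            if (offset % barrierInterval == 0) {
                // The barrier follows record offset-1: sum reflects exactly the records
                // before it, so (offset, sum) together form a consistent snapshot.
                last = new Checkpoint(offset, sum);
            }
        }
        return sum;
    }

    public static void main(String[] args) {
        int[] log = {1, 2, 3, 4, 5, 6, 7};
        System.out.println("no failure:              " + run(log, 3, -1, true));
        System.out.println("failure, state restored: " + run(log, 3, 5, true));
        System.out.println("failure, state kept:     " + run(log, 3, 5, false));
    }
}
```

With `restoreState` set to `true`, the run with a failure ends with the same sum as the failure-free run, so each record affects the state exactly once. Rewinding the input without rolling the state back counts the values 4 and 5 twice, which is why the snapshot has to capture the source position and the operator state consistently.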

iii. Memory Management

Memory management in Apache Flink gives control over how much memory certain runtime operations use.

iv. Iterations

Apache Flink provides dedicated support for iterative algorithms, such as those used in machine learning and graph processing.
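Connected components is a typical iterative graph algorithm of this kind. The sketch below computes it in plain Java by minimum-label propagation, structured like the workset-based delta iterations that Flink's DataSet API offers: each round revisits only the vertices whose label changed in the previous round, and the loop ends once nothing changes. It is a single-machine illustration of the algorithm, not Flink's iteration API, and the example graph is arbitrary.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Connected components by minimum-label propagation, run as a workset (delta) iteration. */
class ConnectedComponents {

    /** Labels each vertex 0..n-1 with the smallest vertex id in its component. */
    static int[] components(int n, int[][] edges) {
        // Undirected adjacency list.
        List<List<Integer>> adj = new ArrayList<>();
        for (int v = 0; v < n; v++) {
            adj.add(new ArrayList<>());
        }
        for (int[] e : edges) {
            adj.get(e[0]).add(e[1]);
            adj.get(e[1]).add(e[0]);
        }

        // Solution set: each vertex starts with its own id as label.
        int[] label = new int[n];
        // Workset: vertices whose label must still be propagated (initially all of them).
        Set<Integer> workset = new HashSet<>();
        for (int v = 0; v < n; v++) {
            label[v] = v;
            workset.add(v);
        }

        // Each round pushes labels from the workset to neighbours; stop when nothing changed.
        while (!workset.isEmpty()) {
            Set<Integer> next = new HashSet<>();
            for (int v : workset) {
                for (int u : adj.get(v)) {
                    if (label[v] < label[u]) {
                        label[u] = label[v]; // update the solution set in place
                        next.add(u);         // only changed vertices enter the next workset
                    }
                }
            }
            workset = next;
        }
        return label;
    }

    public static void main(String[] args) {
        int[][] edges = {{0, 1}, {1, 2}, {3, 4}};
        System.out.println(Arrays.toString(components(6, edges)));
    }
}
```

Labels only ever decrease and cannot drop below 0, so the loop terminates; later rounds usually touch far fewer vertices than the first, which is the saving a delta iteration aims for compared with recomputing every vertex each round.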

v. Integration

We can easily integrate Apache Flink with other open source data processing ecosystems. For example, it can be integrated with Hadoop, stream data from Kafka, and run on YARN.

So, this was all in the Apache Flink tutorial. We hope you liked our explanation.

Conclusion – Apache Flink Tutorial

So, in this Apache Flink tutorial, we discussed what Flink is and why it is needed. We also looked at Flink's features and APIs. If you still have any doubts about this Apache Flink tutorial, ask in the comment section.

What Next:

To install and configure Apache Flink on Ubuntu and run the WordCount program, follow this installation guide.
