
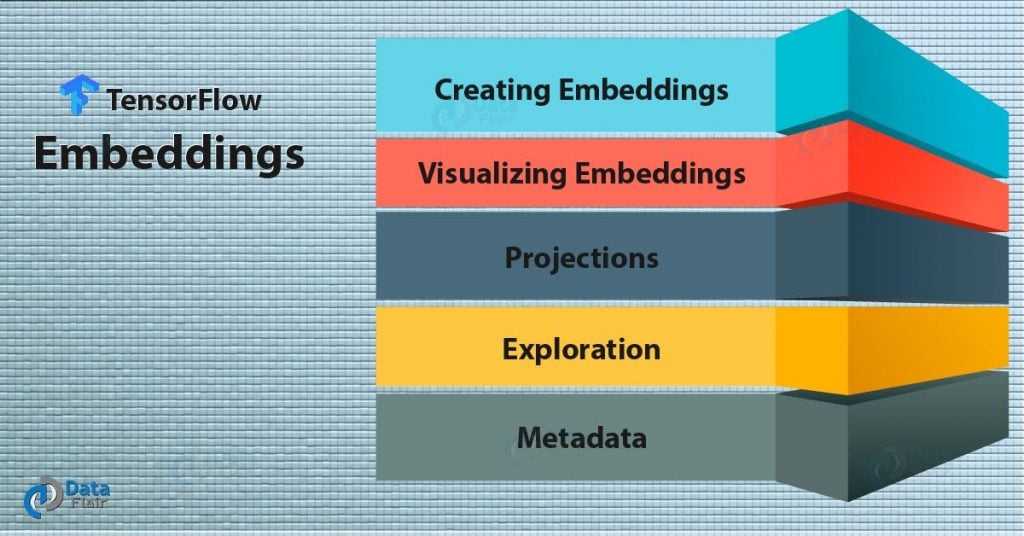

In this TensorFlow Embedding tutorial, we will learn what an embedding in TensorFlow is and walk through a TensorFlow embedding example. We will also look at an example of visualizing embeddings.

Along with this, we will discuss the TensorFlow Embedding Projector and the metadata it uses. Finally, we will see how to create embeddings in TensorFlow.

So, let’s get started with embeddings in TensorFlow.

What is Embedding in TensorFlow?

An embedding in TensorFlow is a mapping from discrete objects, such as words, to vectors of real numbers, as in word-to-vector (word2vec) models. The example below shows a list of words represented as vectors:

Black: (0.01359, 0.00075997, 0.24608, …, -0.2524, 1.0048, 0.06259)

Blues: (0.01396, 0.11887, -0.48963, …, 0.033483, -0.10007, 0.1158)

Yellow: (-0.24776, -0.12359, 0.20986, …, 0.079717, 0.23865, -0.014213)

Oranges: (-0.35609, 0.21854, 0.080944, …, -0.35413, 0.38511, -0.070976)

The individual dimensions in these kinds of vectors usually don’t have any inherent meaning. The overall pattern of the matrix, and the locations of and distances between the vectors, carry the significant information that you can take advantage of.

As you are already aware, classifiers and neural networks operate on vectors of real numbers, and they train best on dense vectors. But in many cases the data involved, such as the words of a text, has no natural vector representation.

Therefore, you can use standard embedding functions, which are an effective way to transform such inputs into useful vectors.

Embeddings in TensorFlow are also commonly used to find nearest neighbors, using either the Euclidean distance or the angle between vectors. The list below shows, for each word, its nearest neighbors and the respective angles:

Black: (red, 47.6°), (yellow, 51.9°), (purple, 52.4°)

Blues: (jazz, 53.3°), (folk, 59.1°), (bluegrass, 60.6°)

Yellow: (yellow, 53.5°), (colored, 58.0°), (bright, 59.9°)

Oranges: (apples, 45.3°), (lemons, 48.3°), (mangoes, 50.4°)
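To make the idea of ranking neighbors by angle concrete, here is a minimal sketch in plain Python. The three-dimensional vectors and the helper names angle_degrees and nearest_by_angle are made up for illustration; they are not part of TensorFlow, and real embeddings have many more dimensions.

```python
import math

def angle_degrees(u, v):
    """Return the angle between vectors u and v in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cosine))

def nearest_by_angle(query, embeddings, k=3):
    """Return the k (word, angle) pairs with the smallest angle to the query word."""
    target = embeddings[query]
    scored = [(word, angle_degrees(target, vector))
              for word, vector in embeddings.items() if word != query]
    return sorted(scored, key=lambda pair: pair[1])[:k]

# Hypothetical toy vectors, chosen only so the geometry is easy to check by hand.
toy_embeddings = {
    "orange": [1.0, 0.0, 0.0],
    "lemon": [1.0, 1.0, 0.0],
    "jazz": [0.0, 0.0, 1.0],
}
neighbors = nearest_by_angle("orange", toy_embeddings, k=2)
```

A smaller angle means the two vectors point in more similar directions, so in this toy data "lemon" ranks ahead of "jazz" as a neighbor of "orange".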

Creating Embedding in TensorFlow

To create word embeddings in TensorFlow, you start by splitting the input text into words and then assigning an integer to every word. Once that has been done, word_ids is a vector of these integers.

Let us take an example sentence, “I love the dog.” This could be split into [“I”, “love”, “the”, “dog”, “.”]. The word_ids vector will then have shape [5] and contain 5 integers.
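The splitting and numbering step could be sketched as follows. This is only one possible approach: the regular expression and the helper names tokenize and build_vocabulary are assumptions made for this example, and ids are assigned in order of first appearance.

```python
import re

def tokenize(text):
    """Split text into word tokens and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocabulary(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocabulary = {}
    for token in tokens:
        if token not in vocabulary:
            vocabulary[token] = len(vocabulary)
    return vocabulary

tokens = tokenize("I love the dog.")
vocabulary = build_vocabulary(tokens)
# One integer per token, so this list has length 5 for the example sentence.
word_ids = [vocabulary[token] for token in tokens]
```

In practice the vocabulary is usually built once from a whole corpus, so that the same word always maps to the same id.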

Now, to map the word_ids to vectors, create an embedding variable and use the tf.nn.embedding_lookup function. Note that tf.get_variable is the TensorFlow 1.x API; in TensorFlow 2.x it is available as tf.compat.v1.get_variable.

import tensorflow as tf

word_embeddings = tf.get_variable("word_embeddings", [vocabulary_size, embedding_size])

embedded_word_ids = tf.nn.embedding_lookup(word_embeddings, word_ids)

After this, the embedded_word_ids tensor will have shape [5, embedding_size] and will contain the vector representation of every word.
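Conceptually, the lookup selects one row of the embedding table per word id. The sketch below imitates that behavior in plain Python, without TensorFlow, purely to show where the [5, embedding_size] shape comes from. The sizes, the random initialization, and the local embedding_lookup helper are assumptions for illustration, not the library implementation.

```python
import random

vocabulary_size = 5   # hypothetical: one row per distinct token
embedding_size = 3    # hypothetical: kept tiny for readability

rng = random.Random(0)
# Plain-Python stand-in for the word_embeddings variable:
# a [vocabulary_size, embedding_size] table of randomly initialized values.
word_embeddings = [[rng.uniform(-1.0, 1.0) for _ in range(embedding_size)]
                   for _ in range(vocabulary_size)]

def embedding_lookup(table, ids):
    """Gather one row of the table per id, preserving the order of ids."""
    return [table[i] for i in ids]

word_ids = [0, 1, 2, 3, 4]
embedded_word_ids = embedding_lookup(word_embeddings, word_ids)
# One row per word id, one column per embedding dimension.
shape = (len(embedded_word_ids), len(embedded_word_ids[0]))
```

A repeated id simply returns the same row again, which is why every occurrence of a word shares one learned vector.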

Visualizing TensorFlow Embeddings

For visualization of embeddings in TensorFlow, TensorBoard offers an embedding projector, a tool which lets you interactively visualize embeddings. The TensorFlow embedding projector consists of three panels:

- Data panel: Used to choose the run and the data columns by which the points are colored and labeled.

- Projections panel: Used to select the type of projection.

- Inspector panel: Used to search for specific points and look at their nearest neighbors.

TensorFlow Embedding Projector

The Embedding Projector reduces the dimensionality of the dataset in the following three ways:

- t-SNE: A nondeterministic and nonlinear algorithm. It tries to preserve local neighborhoods in the data, often at the expense of distorting the global structure.

- PCA: A linear and deterministic algorithm. It tries to capture as much of the variability of the data as possible in as few dimensions as possible, which tends to highlight large-scale structure while distorting local neighborhoods.

- Custom: A linear projection onto a horizontal and a vertical axis that you specify using the labels on your data. The projector finds all the points whose label matches the assigned keyword and computes their centroid, which is used to define the axis.
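The custom projection can be sketched in plain Python as below. This sketch assumes that an axis is defined by two keywords, one for each end, and that the axis is the line through the centroids of the two matching groups. The function names, the substring matching, and the toy points are all made up for illustration and are not the projector's own code.

```python
def centroid(vectors):
    """Return the component-wise mean of a non-empty list of vectors."""
    count = len(vectors)
    return [sum(components) / count for components in zip(*vectors)]

def custom_axis(points, labels, left_keyword, right_keyword):
    """Return a unit vector pointing from the 'left' centroid to the 'right' centroid."""
    left = centroid([p for p, label in zip(points, labels) if left_keyword in label])
    right = centroid([p for p, label in zip(points, labels) if right_keyword in label])
    direction = [r - l for l, r in zip(left, right)]
    length = sum(d * d for d in direction) ** 0.5
    return [d / length for d in direction]

def project(points, axis):
    """Return the coordinate of every point along the axis (dot product)."""
    return [sum(a * b for a, b in zip(p, axis)) for p in points]

# Hypothetical labeled points in three dimensions.
points = [[0, 0, 0], [2, 0, 0], [4, 0, 2], [4, 0, -2]]
labels = ["cold day", "cold night", "hot day", "hot night"]

axis = custom_axis(points, labels, "cold", "hot")
coordinates = project(points, axis)
```

Points whose labels match the right-hand keyword end up with larger coordinates than those matching the left-hand keyword, which is what makes a custom axis easy to read.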

Exploration of Embedding in TensorFlow

You can explore the visualization by zooming, rotating, or panning the graph in any way that suits you. Hovering the mouse over a point shows the metadata associated with that point. You can also click on a point to list its nearest neighbors, along with their distances to that point.

Users also have a choice to select some particular points and perform the operations listed above on these selected points. You can do that in multiple ways:

- Click on a point to select it together with its nearest neighbors.

- Search for a term to select the points that match the query.

- Click on a point and drag to define a selection sphere.

After making a selection, click the “Isolate nnn points” button in the Inspector panel to work with only those points, where nnn is the number of points selected (101 in the original example).

Tip: Filtering combined with a custom projection can be powerful. For example, you can filter the nearest neighbors of the word “politics” and project them onto custom axes, with the y-axis chosen at random.

Use the bookmark panel to save the current state. The Embedding Projector can then be pointed to a set of one or more of these saved files, and it produces a panel listing them.

How to Generate Metadata

You will probably want to add labels to your data points when working with embeddings in TensorFlow. To do so, generate a metadata file that contains those labels; they can then be selected in the data panel of the TensorFlow Embedding Projector.

The metadata can be either labels or images, and it is stored in a separate file. Labels should use the Tab-Separated Values (TSV) format, where \t below denotes a tab character. For example:

Word\tFrequency

Boat\t345

Truck\t241

…

Because the first line is a header, the (i+1)-th line in the metadata file corresponds to the i-th row of the embedding variable. If the TSV file has only a single column, no header row is expected, and each row is taken to be the label of the corresponding embedding. The table looks something like the one given below:

Table of metadata in TensorFlow
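The two metadata layouts described above could be generated with Python's standard csv module, as in this sketch. The helper name write_metadata is an assumption for this example, and the files are written to in-memory buffers; in practice you would open a real file such as a metadata.tsv of your choosing.

```python
import csv
import io

def write_metadata(stream, rows, header=None):
    """Write rows as tab-separated values, with an optional header line."""
    writer = csv.writer(stream, delimiter="\t", lineterminator="\n")
    if header is not None:
        writer.writerow(header)
    writer.writerows(rows)

# Multi-column metadata: a header row is written first.
multi = io.StringIO()
write_metadata(multi, [("Boat", 345), ("Truck", 241)], header=("Word", "Frequency"))
multi_text = multi.getvalue()

# Single-column metadata: no header row, one label per embedding row.
single = io.StringIO()
write_metadata(single, [("Boat",), ("Truck",)])
single_text = single.getvalue()

# With a header, line i+1 of the file holds the label for embedding row i.
labels = [line.split("\t")[0] for line in multi_text.splitlines()[1:]]
```

Whichever layout you choose, the rows must be written in the same order as the rows of the embedding variable so that labels line up with vectors.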

So, this was all about embeddings in TensorFlow. We hope you liked our explanation of TensorFlow and TensorBoard embeddings.

Conclusion

Hence, in this TensorFlow Embedding tutorial, we saw what embeddings in TensorFlow are and how to create an embedding in TensorFlow. Along with this, we saw how to view embeddings with the TensorBoard Embedding Projector.

Moreover, we saw examples of TensorFlow and TensorBoard embeddings. Next up is debugging in TensorFlow. If you have any questions, feel free to ask in the comment section.