Apache Spark Compatibility with Hadoop

1. Objective

In this tutorial on Apache Spark compatibility with Hadoop, we discuss how Spark works together with Hadoop. The tutorial covers three ways to run Apache Spark over Hadoop: Standalone, YARN, and SIMR (Spark in MapReduce). We also walk through the steps to launch a Spark application in standalone mode, on YARN, and in MapReduce (SIMR), and explain how SIMR works.

2. How is Spark compatible with Hadoop?

It is a common misconception that Spark replaces Hadoop; rather, it enhances the functionality of Hadoop. From the very beginning, Spark has been able to read data from and write data to HDFS (Hadoop Distributed File System). We can therefore say that Apache Spark is a Hadoop-compatible data processing engine that can take over batch and streaming workloads. Hence, running Spark over Hadoop adds functionality to the Hadoop stack.

3. Apache Spark Compatibility with Hadoop

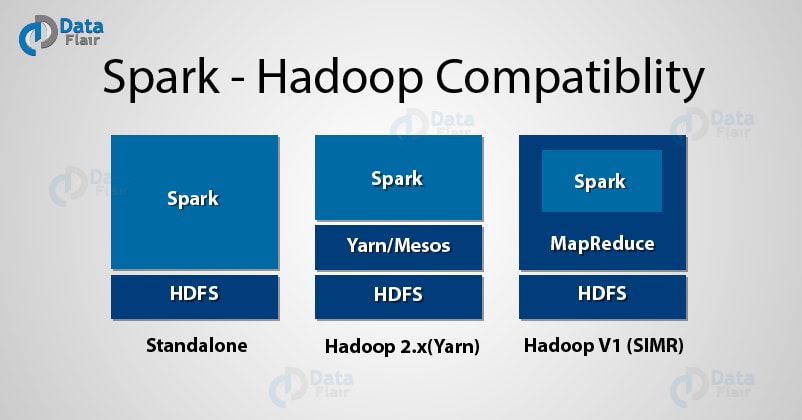

We can use Spark over Hadoop in three ways:

- Standalone – In this deployment mode we can allocate resources on all machines or on a subset of machines in the Hadoop cluster, and run Spark side by side with Hadoop MapReduce.

- YARN – We can run Spark on YARN without any prior installation. This lets us integrate Spark into the Hadoop stack and take advantage of Spark's facilities.

- SIMR (Spark in MapReduce) – Another option is to launch Spark jobs inside MapReduce. With SIMR we can use the Spark shell within a few minutes of downloading it. This reduces the deployment overhead and lets us experiment with Apache Spark quickly.

Let’s discuss these three ways of Apache Spark compatibility with Hadoop one by one in detail.

3.1. Launching Spark Application in Standalone Mode

Before launching a Spark application in standalone mode, refer to the guide on how to install Apache Spark in standalone mode (single-node cluster).

Spark supports two deployment modes for a standalone cluster: cluster mode and client mode. In client mode, the driver is launched in the same process as the client that submits the application. In cluster mode, the driver is launched from one of the worker processes inside the cluster, and the client process exits as soon as it has submitted the application, without waiting for the application to finish.

a. Adding the jar

If we launch the application through spark-submit, the application jar is automatically distributed to all worker nodes. Specify any additional jars through the --jars flag, using a comma as the delimiter. Standalone cluster mode can also restart the application automatically if it exits with a non-zero exit code; to use this feature, pass the --supervise flag to spark-submit.
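As a small illustration of the comma-delimited list that --jars expects, the sketch below joins several jar paths and prints a spark-submit command as a dry run. The jar names, the class name, and the master host are hypothetical placeholders, and join_jars is a helper written for this example, not part of Spark.

```shell
# Join jar paths with commas, the delimiter that --jars expects.
join_jars() {
  # Inside the subshell, "$*" joins the arguments using the first character of IFS.
  ( IFS=,; printf '%s\n' "$*" )
}

# Hypothetical extra dependencies, used only for illustration.
extra_jars=$(join_jars libs/parser.jar libs/metrics.jar)

# Dry run: print the command instead of executing it.
echo "./bin/spark-submit --class MyApp --master spark://master-host:7077 --jars $extra_jars MyApp.jar"
```

Running the join in a subshell keeps the change to IFS from leaking into the rest of the script.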

b. Running application in Standalone Mode

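To run a Spark application in standalone mode with input taken from HDFS, use a command of the following form. The value after --master is the URL of the standalone master (7077 is its default port), and everything after the application jar is passed to the application itself:

[php]$ ./bin/spark-submit --class MyApp --master spark://<master-host>:7077 MyApp.jar --input hdfs://localhost:9000/input-file-path --output output-file-path[/php]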

3.2. Launching Spark on YARN

Apache Spark running on YARN was added in version 0.6.0 and was improved in later releases.

To run a Spark job on a Hadoop YARN cluster (see the guide on configuring Hadoop with YARN in pseudo-distributed mode), older Spark releases that are built with sbt first need a consolidated Spark JAR that bundles all the required dependencies. We build it by setting the Hadoop version and the SPARK_YARN environment variable:

[php]$ SPARK_HADOOP_VERSION=2.0.5-alpha SPARK_YARN=true sbt/sbt assembly[/php]

Make sure that YARN_CONF_DIR or HADOOP_CONF_DIR points to the directory that contains the configuration files for the Hadoop cluster. Spark uses these configurations to write to HDFS and to connect to the YARN ResourceManager. The configuration in this directory is distributed to the YARN cluster so that all containers used by the application share the same configuration.
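A quick pre-flight check before submitting can catch a missing or wrong configuration directory. The sketch below is only a heuristic: it assumes that a usable directory contains core-site.xml or yarn-site.xml, and find_hadoop_conf_dir is a helper name invented for this example.

```shell
# Print the first configured directory that looks like a Hadoop config directory.
find_hadoop_conf_dir() {
  for dir in "${YARN_CONF_DIR:-}" "${HADOOP_CONF_DIR:-}"; do
    # Assumption: a valid directory holds core-site.xml or yarn-site.xml.
    if [ -n "$dir" ] && { [ -f "$dir/core-site.xml" ] || [ -f "$dir/yarn-site.xml" ]; }; then
      printf '%s\n' "$dir"
      return 0
    fi
  done
  return 1
}

if conf_dir=$(find_hadoop_conf_dir); then
  echo "Using Hadoop configuration in $conf_dir"
else
  echo "Set YARN_CONF_DIR or HADOOP_CONF_DIR before submitting to YARN" >&2
fi
```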

There are two deployment modes for launching a Spark application on YARN: cluster mode and client mode.

i. Cluster Mode – In cluster mode, the Spark driver runs inside an application master process that is managed by YARN on the cluster.

ii. Client Mode – In client mode, the driver runs in the client process, and the application master is used only for requesting resources from YARN and providing them to the driver program.

In YARN mode the address of the ResourceManager is taken from the Hadoop configuration, so the value of the --master parameter is simply yarn.

To launch a Spark application in cluster mode, use the command:

[php]$ ./bin/spark-submit --class path.to.your.Class --master yarn --deploy-mode cluster [options] <app jar> [app options][/php]

To launch a Spark application in client mode, use the same command with cluster replaced by client:

[php]$ ./bin/spark-submit --class path.to.your.Class --master yarn --deploy-mode client [options] <app jar> [app options][/php]
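Since the two commands differ only in the deploy mode, a thin wrapper can make the choice explicit and reject typos. The following is a sketch only: yarn_submit_command is a hypothetical helper that prints the command rather than running it, and app.jar is a placeholder.

```shell
# Print (not run) a spark-submit command for YARN in the given deploy mode.
yarn_submit_command() {
  mode=$1
  shift
  case $mode in
    cluster|client) ;;
    *) echo "deploy mode must be 'cluster' or 'client', got '$mode'" >&2; return 1 ;;
  esac
  # The remaining arguments are passed through unchanged.
  echo "./bin/spark-submit --master yarn --deploy-mode $mode $*"
}

yarn_submit_command cluster --class path.to.your.Class app.jar
yarn_submit_command client --class path.to.your.Class app.jar
```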

a. Adding other JARs

In cluster mode, the driver runs on a different machine from the client, so SparkContext.addJar cannot see files that are local to the client. To make those files available to SparkContext.addJar, include them with the --jars option in the launch command.

For Example:

[php]$ ./bin/spark-submit --class my.main.Class \
    --master yarn \
    --deploy-mode cluster \
    --jars my-other-jar.jar,my-other-other-jar.jar \
    my-main-jar.jar \
    app_arg1 app_arg2[/php]

To make the Spark runtime jars accessible from the YARN side, specify spark.yarn.archive or spark.yarn.jars.

If neither is specified, Spark creates a zip file containing all the jars under $SPARK_HOME/jars and uploads it to the distributed cache.

b. Debugging Application on YARN

In YARN, both the application master and the executors run inside “containers”. Once the application has completed, YARN handles the container logs in one of two ways.

i. If log aggregation is turned on

If log aggregation is turned on with the yarn.log-aggregation-enable config, the container logs are copied to HDFS and deleted from the local machine. We can then view them from anywhere on the cluster with the command:

[php]yarn logs -applicationId <app ID>[/php]

This command prints the contents of all log files from all containers of the given application.

We can also view the container log files directly in HDFS using the HDFS shell or API. The directory where the log files are stored is determined by two configuration properties:

yarn.nodemanager.remote-app-log-dir and yarn.nodemanager.remote-app-log-dir-suffix
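To show how the two properties combine, the sketch below builds the directory for one application. It assumes the layout `<remote-app-log-dir>/<user>/<suffix>/<application ID>`, which may differ between Hadoop versions, so treat the result as a starting point for browsing and not as a guaranteed path. The user name and application ID are made up, and aggregated_log_dir is a helper written for this example.

```shell
# Build the directory that holds one application's aggregated logs.
# Arguments: remote-app-log-dir, user, remote-app-log-dir-suffix, application ID.
aggregated_log_dir() {
  printf '%s/%s/%s/%s\n' "$1" "$2" "$3" "$4"
}

# /tmp/logs and "logs" are the usual defaults for the two properties;
# the user and the application ID are hypothetical.
log_dir=$(aggregated_log_dir /tmp/logs alice logs application_1700000000000_0001)

# Dry run: print the HDFS shell command that would list the directory.
echo "hdfs dfs -ls $log_dir"
```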

ii. If log aggregation is not turned on

In this case, logs are kept locally on each machine under YARN_APP_LOGS_DIR, which is usually configured as /tmp/logs or $HADOOP_HOME/logs/userlogs depending on the Hadoop version and installation.

To view the logs of a container, we must first go to the host that ran it and look in this directory. Its subdirectories organize the log files by application ID and container ID.

3.3. Launching Spark in MapReduce (SIMR)

SIMR is an easy way for Hadoop MapReduce 1 users to try Apache Spark. With it we can run Spark jobs and the Spark shell without installing Spark or Scala and without having administrative rights. The only prerequisites are HDFS access and MapReduce v1. SIMR is open source and is a joint work of Databricks and UC Berkeley AMPLab. Once we have downloaded SIMR, we can try it by typing:

[php]./simr --shell[/php]

To use SIMR, the user only needs to download the SIMR package that matches the Hadoop cluster. The package contains three files:

- SIMR runtime script: simr

- simr-<hadoop-version>.jar

- spark-assembly-<hadoop-version>.jar

To get usage information, place all three files in the same directory and execute simr. The job of SIMR is to launch a MapReduce job with the required number of map slots and to make sure that Spark, Scala, and the job itself are shipped to all of those nodes. One of the mappers is chosen as the master, and the Spark driver runs inside that mapper. On the remaining mappers SIMR launches Spark executors, which execute tasks on behalf of the driver.

The master is selected by leader election through HDFS: the mapper that writes to HDFS first becomes the master mapper. The remaining mappers find the driver URL by reading a specific file from HDFS. Thus, in place of a cluster manager, SIMR uses MapReduce and HDFS.
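The "first writer wins" idea can be sketched on a local filesystem, which stands in for HDFS here. This is not SIMR's actual code: the elect function, the file name, and the driver URL format are all assumptions made for illustration. The first caller creates the election file exclusively and becomes the master; later callers fail to create it and read the published URL.

```shell
# Try to claim the master role by creating the election file exclusively.
# $1 = election file, $2 = this mapper's driver URL (hypothetical format).
elect() {
  # With noclobber (set -C), ">" fails if the file already exists,
  # so only the first writer succeeds.
  if ( set -C; printf '%s\n' "$2" > "$1" ) 2>/dev/null; then
    echo "master"
  else
    # Every other mapper reads the driver URL that the master published.
    echo "executor -> $(cat "$1")"
  fi
}

# A local temporary file plays the role of the shared HDFS file.
election_file=$(mktemp -d)/driver-url
elect "$election_file" "spark://mapper-0:7077"
elect "$election_file" "spark://mapper-1:7077"
```

A real implementation would also have to handle a reader arriving between the creation of the file and the write of its contents; the sketch ignores that window.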

a. How does SIMR work?

SIMR allows the user to interact with the driver program. SIMR runs a relay server on the master mapper and a relay client on the machine that launched SIMR. Input from the client and output from the driver are relayed back and forth between the client and the master mapper. To achieve this, SIMR makes extensive use of HDFS.

4. Conclusion

To conclude this look at Apache Spark compatibility with Hadoop, Spark is a Hadoop-compatible data processing framework that can take over batch and streaming workloads. Hence, running Spark over Hadoop adds functionality to the Hadoop stack. After studying Hadoop and Spark compatibility, follow the guide on how Apache Spark works.

If you have any questions about this post on Apache Spark compatibility with Hadoop, please feel free to share them with us, and we will do our best to answer.

