MapReduce InputSplit vs HDFS Block in Hadoop

1. Objective

In this Hadoop InputSplit vs Block tutorial, we will learn what a block in HDFS is, what a MapReduce InputSplit is, and how InputSplit and block size differ in Hadoop, so that we get a firm grip on Hadoop fundamentals.

2. MapReduce InputSplit & HDFS Block – Introduction

Let us start by learning what a block is in HDFS and what an InputSplit is in Hadoop MapReduce.

Block in HDFS

A block is a contiguous location on the hard drive where data is stored. In general, a file system stores data as a collection of blocks. In the same way, HDFS stores each file as blocks. HDFS is responsible for distributing these blocks across multiple nodes in the cluster.

InputSplit in Hadoop

An InputSplit represents the data to be processed by an individual Mapper. The split is divided into records, and each record (which is a key-value pair) is processed by the map function. The number of map tasks is equal to the number of InputSplits.

Initially, the data for a MapReduce task is stored in input files, and these input files typically reside in HDFS. The InputFormat defines how the input files are split and read, and it is responsible for creating the InputSplits.

3. MapReduce InputSplit vs Blocks in Hadoop

Let’s compare MapReduce InputSplit and HDFS blocks feature by feature:

i. InputSplit vs Block Size in Hadoop

- Block – The default size of an HDFS block is 128 MB, which we can configure as per our requirement. All blocks of a file are the same size except the last block, which can be the same size or smaller. Files are split into 128 MB blocks and then stored in HDFS.

- InputSplit – By default, the split size is approximately equal to the block size. The split size is not fixed, though: the user can control it through the job configuration (minimum and maximum split size) or through a custom InputFormat, based on the size of the data in the MapReduce program.
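The two sizes above can be sketched in a few lines of Python. This is a minimal illustration, not Hadoop code: the split-size rule follows the one Hadoop's FileInputFormat applies (the larger of the minimum split size and the smaller of the maximum split size and the block size), while the function names and the 300 MB file are made up for the example.

```python
MB = 1024 * 1024
DEFAULT_BLOCK_SIZE = 128 * MB


def block_sizes(file_size, block_size=DEFAULT_BLOCK_SIZE):
    """Return the sizes of the blocks a file of file_size bytes is cut into."""
    full_blocks, remainder = divmod(file_size, block_size)
    # Every block is full-sized except possibly the last one.
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def compute_split_size(block_size, min_size=1, max_size=2**63 - 1):
    """Split size rule: max(min_size, min(max_size, block_size))."""
    return max(min_size, min(max_size, block_size))


# A hypothetical 300 MB file: two full blocks and one 44 MB block.
example_blocks = [size // MB for size in block_sizes(300 * MB)]

# With default limits the split size equals the block size.
default_split = compute_split_size(DEFAULT_BLOCK_SIZE) // MB

# Raising the minimum, or lowering the maximum, moves the split size.
bigger_split = compute_split_size(DEFAULT_BLOCK_SIZE, min_size=256 * MB) // MB
smaller_split = compute_split_size(DEFAULT_BLOCK_SIZE, max_size=64 * MB) // MB
```

In Hadoop 2 and later, the minimum and maximum are set with the job properties mapreduce.input.fileinputformat.split.minsize and mapreduce.input.fileinputformat.split.maxsize. The block size itself is not affected by either setting.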

ii. Data Representation in Hadoop Blocks vs InputSplit

- Block – It is the physical representation of data. It holds the minimum amount of data that can be read or written.

- InputSplit – It is the logical representation of the data present in the blocks. It is used during data processing in a MapReduce program or other processing techniques. An InputSplit doesn’t contain the actual data, only a reference to it.
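To make the "reference, not data" idea concrete, here is a small Python sketch. The class FileSplitSketch is hypothetical; it only imitates the kind of fields a file-based split carries in Hadoop (path, start offset, length, preferred hosts), and a local temporary file stands in for a file in HDFS.

```python
import os
import tempfile
from dataclasses import dataclass


@dataclass(frozen=True)
class FileSplitSketch:
    """Hypothetical stand-in for a file-based InputSplit: metadata only."""
    path: str          # which file the split points into
    start: int         # byte offset where the split begins
    length: int        # number of bytes the split covers
    hosts: tuple = ()  # nodes that hold the data (used for locality)


def read_split(split):
    """Fetch the bytes a split refers to; the split itself stores none."""
    with open(split.path, "rb") as handle:
        handle.seek(split.start)
        return handle.read(split.length)


# Write a tiny file that plays the role of data stored in blocks.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"0123456789")
    demo_path = tmp.name

# The split is just (path, offset, length, hosts); "node-a" is a made-up host.
demo_split = FileSplitSketch(path=demo_path, start=4, length=3, hosts=("node-a",))
demo_bytes = read_split(demo_split)
os.remove(demo_path)
```

The data is read only when a reader follows the reference, which is why a split is cheap to create and to ship to the node that will run the mapper.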

iii. Example of Block vs InputSplit in Hadoop

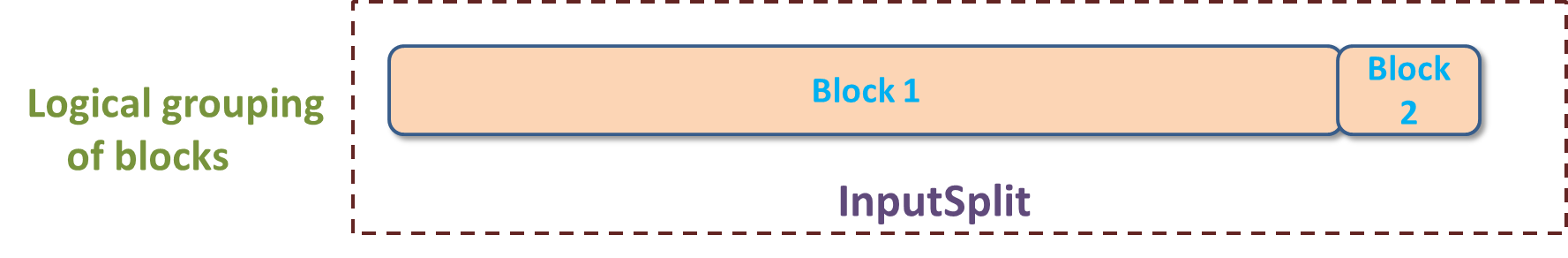

Consider an example where we need to store a file in HDFS. HDFS stores files as blocks. A block is the smallest unit of data that can be stored on or retrieved from the disk, and the default block size is 128 MB. HDFS breaks files into blocks and stores these blocks on different nodes in the cluster. Suppose we have a file of 130 MB: HDFS will break this file into 2 blocks, one of 128 MB and one of 2 MB.

Now, if we want to perform a MapReduce operation directly on the blocks, it will not process them correctly, because the 2nd block is incomplete on its own: a record that starts near the end of the first block can continue into the second, so neither block holds that record in full. This problem is solved by InputSplit. The InputSplit forms a logical grouping of the blocks as a single unit, because it includes the location of the next block and the byte offset of the data needed to complete the record.
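The record-boundary problem can be shown with a simplified Python sketch. It is loosely modeled on how a line-oriented record reader behaves, not copied from Hadoop: the function read_records, the sample data, and the tiny 12-byte "blocks" are all assumptions chosen to keep the example readable.

```python
def read_records(data, start, length):
    """Yield the complete newline-terminated records owned by one split.

    A split that does not begin at byte 0 skips its first (possibly
    partial) line, because the previous split's reader finishes it.
    A reader may run past the end of its split to complete its last line.
    """
    end = start + length
    pos = start
    if start != 0:
        newline = data.find(b"\n", start)
        if newline == -1:
            return
        pos = newline + 1
    while pos <= end and pos < len(data):
        newline = data.find(b"\n", pos)
        line_end = len(data) if newline == -1 else newline
        yield data[pos:line_end]
        pos = line_end + 1


data = b"alpha,1\nbravo,2\ncharlie,3\ndelta,4\n"  # 34 bytes, 4 records
block_size = 12  # tiny pretend block size

# Cutting on block boundaries alone breaks the record "bravo,2" in two.
raw_first_block = data[0:block_size]

# Splits aligned with the blocks: (start, length) pairs.
splits = [(0, 12), (12, 12), (24, 10)]
per_split = [list(read_records(data, start, length)) for start, length in splits]
all_records = [record for records in per_split for record in records]
```

Each record comes out exactly once and in full, even though two of the four records straddle a block boundary. The first split reads a few bytes beyond its own block to finish "bravo,2", which is the "data needed to complete" the record described above.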

4. Conclusion

From this, we conclude that an InputSplit is only a logical chunk of data, i.e. it holds just the information about the blocks’ addresses or locations.

During MapReduce execution, Hadoop scans through the blocks and creates InputSplits, and each InputSplit is assigned to an individual mapper for processing. Hence, the split acts as a broker between block and mapper.
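How splits, and therefore mappers, are counted for a file can be sketched as follows. This is a minimal Python imitation of the planning loop in Hadoop's FileInputFormat, assuming the default 128 MB split size; the 1.1 factor mirrors the 10% slop that lets the last split be slightly larger than the split size, and the function name plan_splits is made up for the example.

```python
MB = 1024 * 1024
SPLIT_SLOP = 1.1  # the final split may be up to 10% larger than split_size


def plan_splits(file_size, split_size=128 * MB):
    """Return (start_offset, length) pairs, one per planned InputSplit."""
    splits = []
    remaining = file_size
    # Carve off full-sized splits while clearly more than one split remains.
    while remaining / split_size > SPLIT_SLOP:
        splits.append((file_size - remaining, split_size))
        remaining -= split_size
    # Whatever is left (if anything) becomes the last split.
    if remaining:
        splits.append((file_size - remaining, remaining))
    return splits


# The 130 MB file from the example: 2 blocks, but a single 130 MB split.
splits_130 = plan_splits(130 * MB)

# A 541 MB file: four 128 MB splits plus one 29 MB split.
splits_541 = plan_splits(541 * MB)

# One map task is launched per split.
mappers_130 = len(splits_130)
mappers_541 = len(splits_541)
```

Under these assumptions the 130 MB file is stored as two blocks yet processed by one mapper, because the trailing 2 MB falls within the slop and is folded into the same split.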

Hello, nice tutorial, but I found one point a little confusing:

In this tutorial you say that an input split can be larger than 128 MB, because it is logical and could group two HDFS blocks…

But from this part of the tutorial: https://data-flair.training/blogs/inputsplit-in-hadoop-mapreduce/

I’ve read: As a user, we don’t need to deal with InputSplit directly, because they are created by an InputFormat (InputFormat creates the Inputsplit and divide into records). FileInputFormat, by default, breaks a file into 128MB chunks (same as blocks in HDFS) and by setting mapred.min.split.size parameter in mapred-site.xml we can control this value or by overriding the parameter in the Job object used to submit a particular MapReduce job. We can also control how the file is broken up into splits, by writing a custom InputFormat.

From this, as I understand it (perhaps I’m wrong), the input split should be 128 MB. Also, in the quiz there’s a question about the input splits of a 541 MB file, and the answer is 5 splits…

Perhaps I’m wrong or I misunderstood.

Thanks in advance

These are two different cases:

The default size of an input split is 128 MB (same as the block size), and yes, it’s created by the input format. But it’s configurable: if there is a requirement, we can configure it as per the demand.

So in this case I think you should point out in the tutorial that we have to configure the size of the block, just so that the 130 MB (128 MB of file 1 + 2 MB of file 2) would be in one block… Thanks for your answer.

I was talking about the configuration of the input split; the block size will remain constant. If a record (a multi-line record like XML) is divided between two blocks, we need to configure the input split, which will automatically fetch the data from the second block.

“Now, if we want to perform MapReduce operation on the blocks, it will not process, because the 2nd block is incomplete.” This comment is split from the detailed description above. I did not get this point.